Embedding Versioning

Let’s say that you have read a very helpful post demystifying embeddings and you’re really excited. Your social media company can certainly use them, so you fire up your notebook and start typing away. As the clock ticks, excitement turns to frustration and you wonder: how do people even do this?

There are a few gotcha moments with embeddings. No post could ever cover every scenario, but this one will attempt to give you some practical advice on how to version your project and how to build an intuitive understanding of your data using embeddings.

BERT Embedding: Example

But first, let’s look at how we would create an embedding. With Hugging Face, you can put together a simple BERT embedding in just a few lines of code.
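A minimal sketch of what this can look like in Python, assuming the `transformers` and `torch` packages are installed and using the public `bert-base-uncased` checkpoint as an example. Averaging the token vectors (mean pooling) is one common way to turn BERT’s per-token outputs into a single vector per text; taking the `[CLS]` token is another. The helper names `mean_pool` and `bert_embeddings` are hypothetical.

```python
import numpy as np


def mean_pool(token_embeddings: np.ndarray, attention_mask: np.ndarray) -> np.ndarray:
    """Average token vectors per text, ignoring padding positions.

    token_embeddings: (batch, seq_len, hidden); attention_mask: (batch, seq_len) of 0/1.
    """
    mask = attention_mask[..., np.newaxis].astype(token_embeddings.dtype)
    summed = (token_embeddings * mask).sum(axis=1)
    counts = np.clip(mask.sum(axis=1), 1e-9, None)  # avoid division by zero
    return summed / counts


def bert_embeddings(texts: list[str], model_name: str = "bert-base-uncased") -> np.ndarray:
    """Return one vector per text, shape (len(texts), hidden_size).

    Imports are local so mean_pool works without transformers installed.
    The model weights are downloaded from the Hugging Face Hub on first use.
    """
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name)
    model.eval()  # disable dropout for deterministic embeddings

    batch = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")
    with torch.no_grad():
        outputs = model(**batch)
    # last_hidden_state has shape (batch, seq_len, hidden_size)
    return mean_pool(outputs.last_hidden_state.numpy(), batch["attention_mask"].numpy())
```

Calling `bert_embeddings(["A stop sign at night.", "A red octagonal sign."])` should return an array of shape `(2, 768)`, since `bert-base-uncased` has a hidden size of 768. Keeping the pooling step in its own function makes it easy to swap in a different extraction method later.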

Versioning Best Practices

Iteration is at the root of this endeavor, and iteration requires that you be able to keep track of what you have done. Staying organized can save you a lot of time and trouble, so versioning is key.

In the preceding post, you can see how embeddings enable cross-team collaboration. Now put yourself in the shoes of an engineer at a self-driving car startup who is training the embedding for stop signs. Say your first few models yielded no interesting results, but on the fifth try you got something interesting. You trained the embedding with more data, tweaked a few parameters, and things seemed to be going well. A colleague recommended a promising new technique, and you tried it out on a small scale in your next iteration. It worked great, so you trained it on a larger dataset, and . . . the results are worse than what you had yesterday. Now you want to go back to your best version. You are looking for Untitled5_1_final.ipynb, or was it Untitled5_finalfinal.ipynb?

Your troubles won’t end there. Your boss says that the company is rapidly expanding to Europe, and you must retrain the embedding with a new European dataset. Versioning is a perpetual concern with embeddings. You need a system.
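One lightweight starting point for such a system, sketched here under our own assumptions rather than taken from any particular tool: for every embedding you produce, record the model, the extraction method, the training-data snapshot, and the parameters, and derive a stable fingerprint from them. `EmbeddingRun` and its field names are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class EmbeddingRun:
    """Hypothetical record of what produced one version of an embedding."""

    version: str            # human-facing version label, e.g. "1.0.0"
    architecture: str       # model the vectors are extracted from
    extraction_method: str  # how vectors are pulled out, e.g. "mean-pool last layer"
    dataset: str            # identifier of the training-data snapshot
    params: dict = field(default_factory=dict)  # hyperparameters, seeds, etc.

    def fingerprint(self) -> str:
        """Short, stable hash of the configuration (everything except the label).

        Two runs with the same setup get the same ID regardless of version label;
        sort_keys makes the hash independent of dict insertion order.
        """
        config = asdict(self)
        config.pop("version")
        payload = json.dumps(config, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]

    def to_json(self) -> str:
        """Serialize the record plus its fingerprint, e.g. to store next to the vectors."""
        return json.dumps({**asdict(self), "fingerprint": self.fingerprint()}, sort_keys=True)
```

Saving `run.to_json()` alongside each set of vectors turns "which notebook was the good one?" into a lookup, and retraining on the European data simply becomes a new record with a different `dataset`.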

How do you compare two versions of a vector representation of your data? It is not the same as comparing performance, which comes down to a single number; the comparison is more complex. There are not many good answers to this problem yet, but there are common reasons why you would change your embeddings’ version. Embeddings change for a wide variety of reasons, but here are the three main ones, in order from largest change to smallest:

- Changing your model’s architecture: This is the biggest change. A new architecture can change the dimensionality of the embedding vectors: if the layers become larger or smaller, your vectors will too.

- Using another extraction method: Even if the model does not change, you can still try several embedding extraction methods and compare them.

- Retraining your model: Once you retrain the model from which you extract the embeddings, the parameters that define it will change. Hence, the values of the components of the vectors will change as well.
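Because two versions may not even share a dimensionality, comparing their vectors coordinate by coordinate is often meaningless. One simple heuristic (our suggestion, not an established standard) is to embed the same items with both versions and measure how many of each item’s nearest neighbors survive the change. The sketch assumes cosine similarity, no all-zero vectors, and rows aligned item by item; `knn_indices` and `neighbor_overlap` are hypothetical helpers.

```python
import numpy as np


def knn_indices(vectors: np.ndarray, k: int) -> np.ndarray:
    """Indices of each row's k nearest neighbors by cosine similarity, excluding itself.

    Brute force with O(n^2) memory: fine for a sample of a few thousand items.
    Assumes no all-zero rows (their cosine similarity is undefined).
    """
    normed = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    sims = normed @ normed.T
    np.fill_diagonal(sims, -np.inf)  # a point is never its own neighbor
    return np.argsort(-sims, axis=1)[:, :k]


def neighbor_overlap(old: np.ndarray, new: np.ndarray, k: int = 10) -> float:
    """Average fraction of k-nearest neighbors shared by two embedding versions.

    Row i of `old` and row i of `new` must embed the same item; the number of
    columns (dimensionality) may differ. Returns 1.0 if every item keeps exactly
    the same neighbors and 0.0 if none are shared.
    """
    if old.shape[0] != new.shape[0]:
        raise ValueError("both versions must embed the same items in the same order")
    if not 0 < k < old.shape[0]:
        raise ValueError("k must be between 1 and n_items - 1")
    shared = [
        len(set(a.tolist()) & set(b.tolist()))
        for a, b in zip(knn_indices(old, k), knn_indices(new, k))
    ]
    return float(np.mean(shared)) / k
```

A score near 1.0 after a retrain suggests the new version organizes the data much like the old one; a low score is a signal to look at which items moved.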

By analogy with semantic versioning, you can think of a model architecture change as a major version change. The new embeddings can have a very different dimensionality, may use a different extraction technique, and require retraining. Even if the extraction technique stays the same, the meaning of the dimensions changes completely. This change necessarily breaks backward compatibility.

The second change, a new extraction method, could be seen as a minor version change: the dimensionality and the meaning of the dimensions may change, but no retraining is required. Even so, it is best to treat it as breaking compatibility as well.

The third change can be thought of as a patch version change. You are not changing anything about the embedding itself or its method of extraction; you are simply retraining the model with new data. Downstream teams should not have any problem using the new embedding in the existing system.
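This mapping can be encoded in a small helper so that every new embedding gets its version from the kind of change that produced it. It is a sketch: `Change`, `bump`, and `breaks_compatibility` are hypothetical names, and treating minor bumps as breaking follows the advice above rather than standard semantic versioning, where minor releases are meant to stay backward compatible.

```python
from enum import Enum


class Change(Enum):
    """Hypothetical labels for the three kinds of embedding change described above."""

    ARCHITECTURE = "architecture"  # new model: dimensionality and meaning change
    EXTRACTION = "extraction"      # same model, different way of extracting vectors
    RETRAIN = "retrain"            # same model and extraction, retrained weights


def bump(version: str, change: Change) -> str:
    """Return the next MAJOR.MINOR.PATCH version for the given kind of change."""
    major, minor, patch = (int(part) for part in version.split("."))
    if change is Change.ARCHITECTURE:
        return f"{major + 1}.0.0"
    if change is Change.EXTRACTION:
        return f"{major}.{minor + 1}.0"
    return f"{major}.{minor}.{patch + 1}"


def breaks_compatibility(old: str, new: str) -> bool:
    """Treat any major or minor difference as breaking; only patch changes are safe."""
    old_major_minor = tuple(int(part) for part in old.split(".")[:2])
    new_major_minor = tuple(int(part) for part in new.split(".")[:2])
    return old_major_minor != new_major_minor
```

For example, retraining version 1.4.2 on the new European data would yield 1.4.3, while switching to a different extraction method would yield 1.5.0.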

A more realistic example would be a model that ingests inputs of different types. You can think of this case as an embedding that is a combination of other embeddings. Any change in the way you handle those inputs will change your final embedding vector. The vector should represent the combination of inputs so that your model can make decisions with as much relevant information as possible. Now your versioning problem starts to get very complicated.
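One way to keep a combined embedding traceable, sketched under the assumption that the sub-embeddings are combined by concatenation (learned fusion layers are another common option), is to tag the result with the version of every component. `combine` is a hypothetical helper.

```python
import numpy as np


def combine(components: dict[str, tuple[str, np.ndarray]]) -> tuple[str, np.ndarray]:
    """Concatenate per-input embeddings and tag the result with every component version.

    components maps an input name (e.g. "image", "text") to (version, vector).
    Names are sorted so the layout of the combined vector is deterministic.
    """
    if not components:
        raise ValueError("need at least one component embedding")
    names = sorted(components)
    tag = "+".join(f"{name}@{components[name][0]}" for name in names)
    vector = np.concatenate([components[name][1] for name in names])
    return tag, vector
```

Bumping any component, say `text` from 1.2.0 to 1.2.1, changes the tag, so downstream consumers can tell that the combined vector is no longer the same.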

Understanding Your Data Using Embeddings

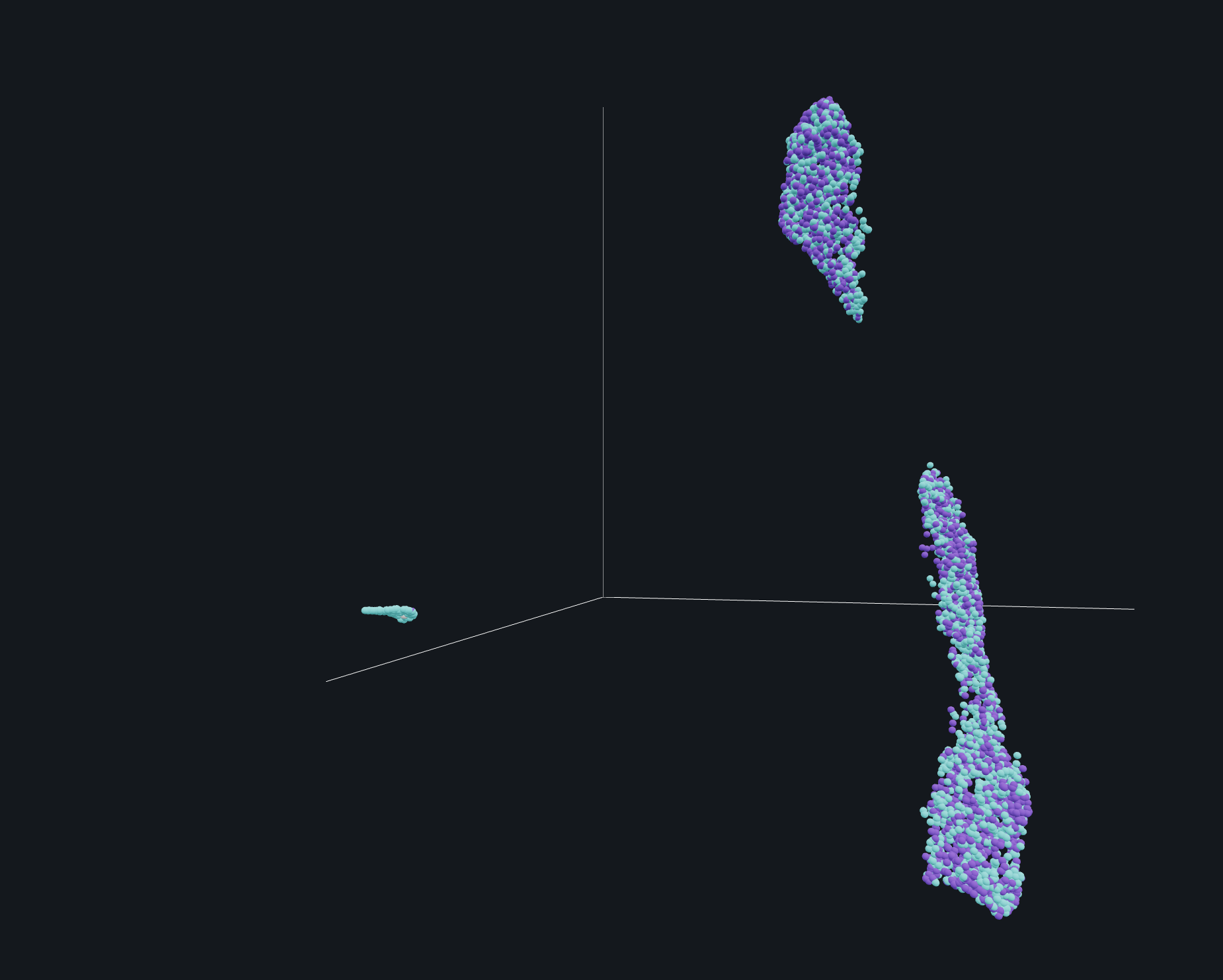

If you can visualize your embedding, you can understand it. Machine learning engineers are very good at understanding data represented in two and even three dimensions, and humans in general cluster things intuitively. Seeing an embedding visually goes a long way toward helping you understand what it is doing. This should be possible, since embeddings are vector representations and vectors are easy to plot. If you can see the clusters of points, you can generally gain at least some understanding of what each dimension means. Alas, visualizing things in hundreds of dimensions is very challenging.

Luckily, there is extensive literature on dimensionality reduction. For several reasons, the most successful methods for visualizing embeddings have been those based on neighbor graphs, in particular UMAP (Uniform Manifold Approximation and Projection). To learn more about visualizing your embeddings, including a dissection of t-SNE vs. UMAP, check out the next post from my colleague Francisco Castillo Carrasco.
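A minimal sketch for getting 2-D coordinates to plot. It assumes the `umap-learn` package for UMAP (`umap.UMAP(...).fit_transform`) and also offers a plain PCA projection computed with NumPy’s SVD. PCA is a linear method, not the neighbor-graph approach discussed above, but it needs no extra dependencies and makes a quick first look. `project_2d` and its parameter values are hypothetical choices.

```python
import numpy as np


def project_2d(vectors: np.ndarray, method: str = "umap", seed: int = 42) -> np.ndarray:
    """Reduce (n_items, n_dims) embeddings to (n_items, 2) coordinates for plotting."""
    if method == "umap":
        import umap  # provided by the umap-learn package; imported lazily

        reducer = umap.UMAP(n_components=2, n_neighbors=15, random_state=seed)
        return reducer.fit_transform(vectors)
    if method == "pca":
        # Linear baseline: project onto the two directions of largest variance.
        centered = vectors - vectors.mean(axis=0)
        _, _, vt = np.linalg.svd(centered, full_matrices=False)
        return centered @ vt[:2].T
    raise ValueError(f"unknown method: {method!r}")
```

The resulting coordinates can go straight into a scatter plot, for example `plt.scatter(coords[:, 0], coords[:, 1], s=2)` with Matplotlib, colored by label or by embedding version to see how clusters shift between versions.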