Tokenization and Tokenizers for Machine Learning

Unstructured data makes up 80% of the data generated in today’s world. In order to make sense out of unstructured data, many different processing techniques have been proposed. For unstructured text data and natural language processing (NLP) applications, tokenization is a core component of the processing pipeline. The goal of tokenization is to convert an unstructured text document into numerical data that is suitable for predictive and/or prescriptive analytics.

In this post, we take a closer look at what tokenization is, why it matters, how to use it, and some exciting applications in the real-world. We also see how tokenization can be used to create word embeddings, which are a key component of many NLP models. So, buckle up and get ready to dive into the world of tokenization!

What Is Tokenization in AI?

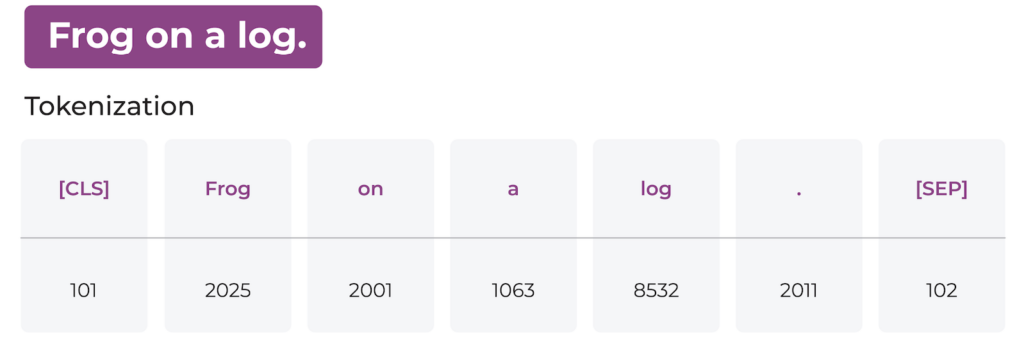

Tokenization is the first step in preprocessing text data for machine learning and NLP tasks. It involves breaking down a text document into smaller units called tokens, which can be words, phrases, or individual characters. These tokens can then be used as a vector representation of text documents to perform various NLP tasks such as sentiment analysis, named entity recognition, and text classification. The goal of tokenization is to find a small representation of text that makes the most sense to a machine learning model.

Why Use Tokenization?

Tokenization is a crucial step in NLP because text data can be messy and highly unstructured. Without proper tokenization, it can be very difficult to extract meaningful information from text data. It helps to standardize the input data, making it easier to process and analyze. Without tokenization, NLP models would have to work with the entire text as a single sequence of characters, which is computationally expensive and difficult to model accurately.

Additionally, tokenization can help to address language-specific challenges such as stemming, lemmatization, and stop-word removal. These techniques are used to normalize text by removing redundant information, making it easier to analyze and model.

Different Types of Tokenization with Examples

There are many different open-source tools that can be used to perform tokenization on unstructured data. However, it is important to understand different types of tokenization before blindly generating tokens from your data since the type of tokenization can significantly affect the performance and business impact of your model. Let’s try to understand the different types of tokenization with real-life examples in Python. We will be leveraging a commonly used Python library called nltk (Natural Language Toolkit) and its tokenization module for examples.

Word Tokenization

Word tokenization is one of the simplest tokenization methods that involves breaking text into individual words using rules such as punctuation and spaces. The specific output of tokens in this method depends on the splitting rules of words. We can split the words by white space, punctuation, or specific characters depending on the NLP application.

import nltk

from nltk.tokenize import word_tokenize

text = "This was one of my favorite movies last year!"

words = word_tokenize(text)

print(words)

['This','was','one','of','my','favorite','movies','last','year','!']

Sentence Tokenization

This tokenization method involves breaking text into individual sentences using rules such as punctuation and line breaks. Sentence tokenization is useful for NLP tasks that require analyzing the meaning of individual sentences.

import nltk

from nltk.tokenize import sent_tokenize

text = "The second season of the show was better. I wish it never ended!"

sentences = sent_tokenize(text)

print(sentences)

['The second season of the show was better.','I wish it never ended!']

Punctuation Tokenization

The punctuation tokenizer splits the sentences into words and characters based on the punctuation in the text.

import nltk

from nltk.tokenize import word_tokenize

text = 'He said, "I cant believe it!" and then ran off, laughing hysterically.'

tokens = nltk.word_tokenize(text)

print(tokens)

['He','said',',','``','I','cant','believe','it',"''",'and','then','ran','off','laughing','hysterically','.']Treebank Tokenization

Treebank tokenization similarly separates punctuation from words, but also includes special handling for certain types of tokens, such as contractions and numbers. It uses a list of common rules for English language and English-based tokenization. It is named after the Penn Treebank corpus, which is a large annotated corpus of English text that has been widely used in natural language processing research.

import nltk

from nltk.tokenize import TreebankWordTokenizer

text = "The company announced that it had hired a new CRO, who has 20+ years of experience."

tokenizer = TreebankWordTokenizer()

tokens = tokenizer.tokenize(text)

print(tokens)

['The','company','announced','that','it','had','a','new','CEO','who','has','20+','years','experience','.']

Morphological Tokenization

Morphological tokenization is a type of word tokenization that involves splitting words into their constituent morphemes, which are the smallest units of meaning in a language. For example, the word “unhappy” can be tokenized into two morphemes: “un-” (a prefix meaning “not”) and “happy” (the root word). Here is an example in Python:

import nltk

from nltk.stem import PorterStemmer

stemmer = PorterStemmer()

word = "jumped"

stem = stemmer.stem(word)

print(stem)Applications of Tokenization in Real-World

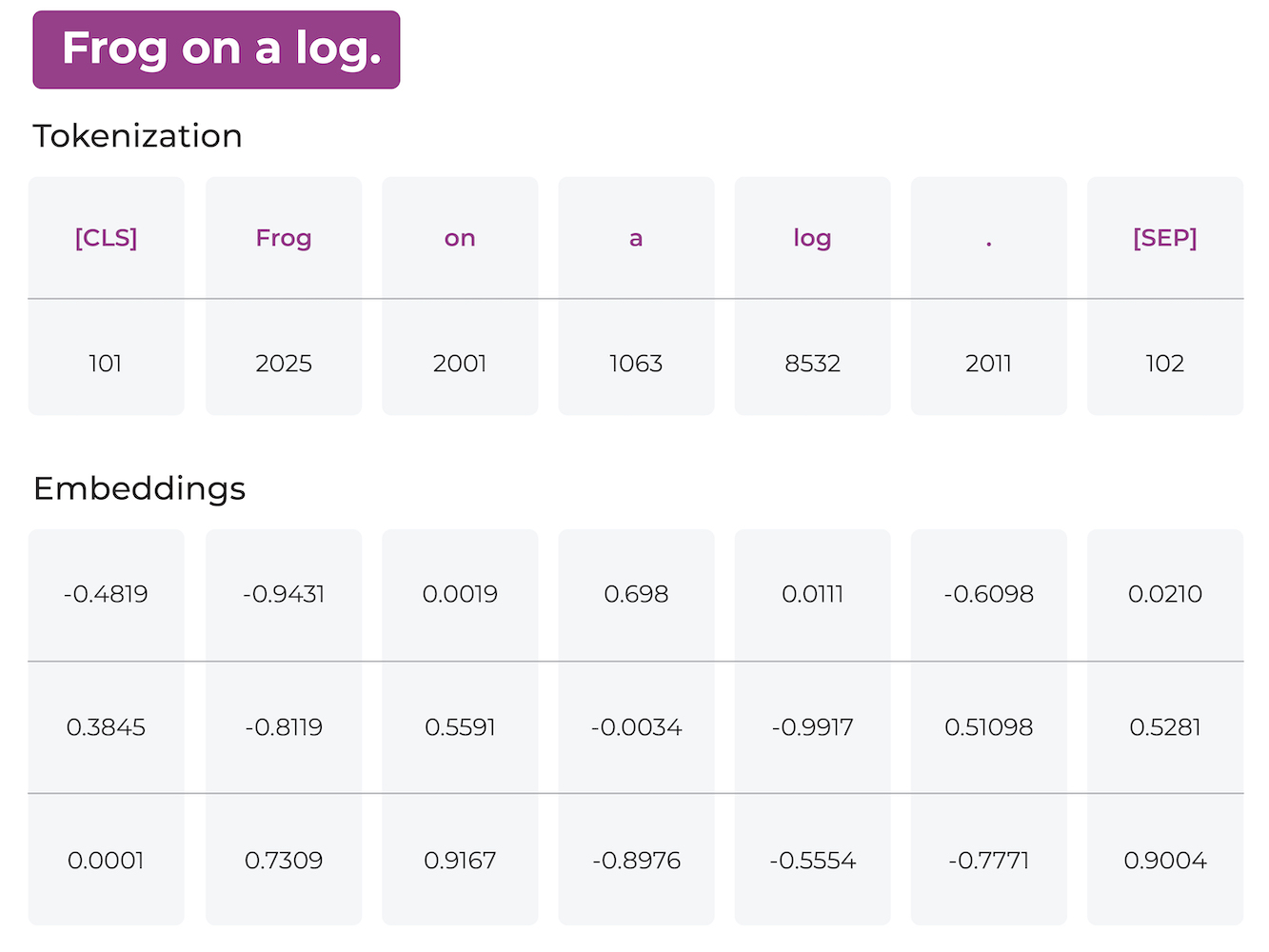

Tokenization and Word Embeddings

An important application of tokenization in machine learning is word embeddings. Word embeddings are a crucial component of many NLP models, and tokenization plays a critical role in their creation. Word embeddings are vector representations of words, where each dimension of the vector represents a different aspect of the word’s meaning. These vector representations are created using tokenization, where each word is tokenized into individual tokens and then embedded into a vector representation. Hence, tokenization is applied to a large text document to generate tokens first, and then the tokens are used to generate embedding vectors of unstructured data.

The embeddings are then used as inputs to NLP models, allowing the models to understand the meaning of the words in the text. However, with large text datasets, the dimensionality of the embeddings can become very high, leading to a large number of features for the model to process. This can cause issues with overfitting and slow down the training process.

To mitigate this, it’s important to monitor the embeddings during the training process and apply dimensionality reduction techniques such as t-distributed stochastic neighbor embedding (t-SNE) or Uniform Manifold Approximation and Projection (UMAP) to reduce the number of dimensions. These techniques help to retain the most important information in the embeddings while reducing the number of dimensions and speeding up the training process.

Tokenization and Generative AI

Tokenization plays a crucial role in generative AI and large language models like ChatGPT. These models are trained on large amounts of text data and use tokenization to break the text into individual tokens, or words. These tokens are then embedded into vector representations, allowing the model to capture the meaning of the words in the text and generate new text that is semantically similar to the input.

For example, a large language model like ChatGPT can be trained on a massive corpus of text data and then used to generate new text based on a prompt. By breaking the input text into tokens and embedding them into vectors, the model is able to understand the meaning of the text and generate new text that is semantically similar to the input. This allows us to generate text that is not only grammatically correct but also semantically meaningful.

The use of tokenization and word embeddings is crucial in ensuring that the output generated by generative AI models is accurate and meaningful. It allows these models to understand the context and meaning of the text, enabling them to generate text that is not only grammatically correct but also semantically coherent.

What Are the Challenges of Tokenization?

Tokenization faces a few challenges, including:

- Ambiguity: Tokenization can be ambiguous, especially in languages where words can have multiple meanings. For example, the word “run” could refer to a physical activity, a computer program, or the act of managing or operating something. This ambiguity can make it difficult for a tokenizer to accurately break down the text into individual tokens.

- Out-of-vocabulary (OOV) words: Tokenization can also encounter OOV words, which are words that are not in the tokenizer’s vocabulary. OOV words can be problematic, especially in domain-specific texts, where they occur frequently.

- Noise: Large text documents can contain a lot of noise, including typos, abbreviations, and special characters that can hinder tokenization.

- Language-specific challenges: Different languages have unique challenges when it comes to tokenization. For example, languages with complex writing systems, such as Chinese and Japanese, require specialized tokenization methods.

How Can You Overcome Some of the Common Challenges of Tokenization?

Potential solutions span:

- Preprocessing: Preprocessing involves cleaning and normalizing text before tokenization. This step can help remove noise, correct spelling mistakes, and convert text to a standardized format.

- Regularization: Regularization involves standardizing the use of certain characters, such as apostrophes and hyphens, in text. This can help reduce ambiguity in tokenization.

- Language-specific tokenization: For languages with complex writing systems, specialized tokenization methods can be used. For example, in Chinese, words are not separated by spaces, so a method called word segmentation is used to identify word boundaries.

- Subword tokenization: Subword tokenization involves breaking down words into smaller subword units to handle OOV words. This approach can be effective in handling domain-specific terms and rare words.

Conclusion

Tokenization is a crucial component of natural language processing and machine learning applications. By breaking down unstructured text data into smaller units, tokenization provides a structured representation of text data that can be used for various NLP tasks. The different types of tokenization methods and tools enable researchers to handle different types of text data, and the resulting tokens can be used to generate word embeddings that provide a deeper understanding of the meaning of text.

Overall, tokenization provides a powerful means to analyze and model unstructured text data, but it requires careful consideration and attention to ensure that the right methods are applied and the resulting tokens are optimized for the specific NLP tasks at hand. As NLP applications continue to evolve and grow, it’s clear that tokenization will continue to be an important component of the field, unlocking new insights and applications for unstructured text data.