Prompt management allows you to create, store, and modify prompts for interacting with LLMs. By managing prompts systematically, you can improve reuse and consistency and experiment with variations across different models and inputs.

Unlike traditional software, AI applications are non-deterministic and depend on natural language to provide context and guide model output. The pieces of natural language and associated model parameters embedded in your program are known as “prompts.”

Optimizing your prompts is typically the highest-leverage way to improve the behavior of your application, but “prompt engineering” comes with its own set of challenges. You want to be confident that changes to your prompts have the intended effect and don’t introduce regressions.

To get started, jump to Quickstart: Prompts.

Phoenix offers a comprehensive suite of features to streamline your prompt engineering workflow.

Prompt Management - Create, store, modify, and deploy prompts for interacting with LLMs.

Prompt Playground - Play with prompts, models, and invocation parameters, and track your progress via tracing and experiments.

Span Replay - Replay the invocation of an LLM. Whether it's an LLM step in an LLM workflow or a router query, you can step into the LLM invocation and see if any modifications to the invocation would have yielded a better outcome.

Prompts in Code - Phoenix offers client SDKs to keep your prompts in sync across different applications and environments.

Version and track changes made to prompt templates

Key benefits of prompt management include:

Reusability: Store and load prompts across different use cases.

Versioning: Track changes over time to ensure that the best performing version is deployed for use in your application.

Collaboration: Share prompts with others to maintain consistency and facilitate iteration.
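The versioning and tagging ideas above can be sketched as a tiny in-memory store. This is a hypothetical illustration, not the Phoenix SDK. `PromptStore`, `PromptVersion`, and their methods are invented for this example, and Phoenix itself persists prompts on the server. Each save appends a new immutable version, and a tag such as `production` points at whichever version is currently deployed.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class PromptVersion:
    """Hypothetical immutable snapshot: template text plus the model settings saved with it."""
    version_id: int
    template: str
    model_name: str
    invocation_parameters: dict = field(default_factory=dict)


class PromptStore:
    """Hypothetical in-memory store: each save appends a version; tags point at versions."""

    def __init__(self) -> None:
        self._versions: dict[str, list[PromptVersion]] = {}
        self._tags: dict[str, dict[str, int]] = {}

    def save(self, name: str, template: str, model_name: str,
             **invocation_parameters) -> PromptVersion:
        history = self._versions.setdefault(name, [])
        version = PromptVersion(len(history) + 1, template, model_name,
                                dict(invocation_parameters))
        history.append(version)  # append-only: earlier versions stay retrievable
        return version

    def tag(self, name: str, version_id: int, tag: str) -> None:
        self.get(name, version_id=version_id)  # fail fast if the version is unknown
        self._tags.setdefault(name, {})[tag] = version_id  # (re)point the tag

    def get(self, name: str, version_id: int | None = None,
            tag: str | None = None) -> PromptVersion:
        history = self._versions[name]
        if tag is not None:
            version_id = self._tags[name][tag]
        if version_id is None:
            return history[-1]  # no version or tag given: return the latest
        if not 1 <= version_id <= len(history):
            raise KeyError(f"{name!r} has no version {version_id}")
        return history[version_id - 1]


store = PromptStore()
store.save("summarizer", "Summarize: {{article}}", "gpt-4o-mini", temperature=0.7)
store.save("summarizer", "Summarize in 3 bullets: {{article}}", "gpt-4o-mini", temperature=0.2)
store.tag("summarizer", version_id=1, tag="production")  # v1 is still the deployed one

print(store.get("summarizer").version_id)                    # 2
print(store.get("summarizer", tag="production").version_id)  # 1
```

Because versions are never overwritten, promoting or rolling back only moves a tag. For example, `store.tag("summarizer", version_id=2, tag="production")` promotes v2, and any code that loads by tag picks up the change without editing the template itself.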

To learn how to get started with prompt management, see Create a prompt.

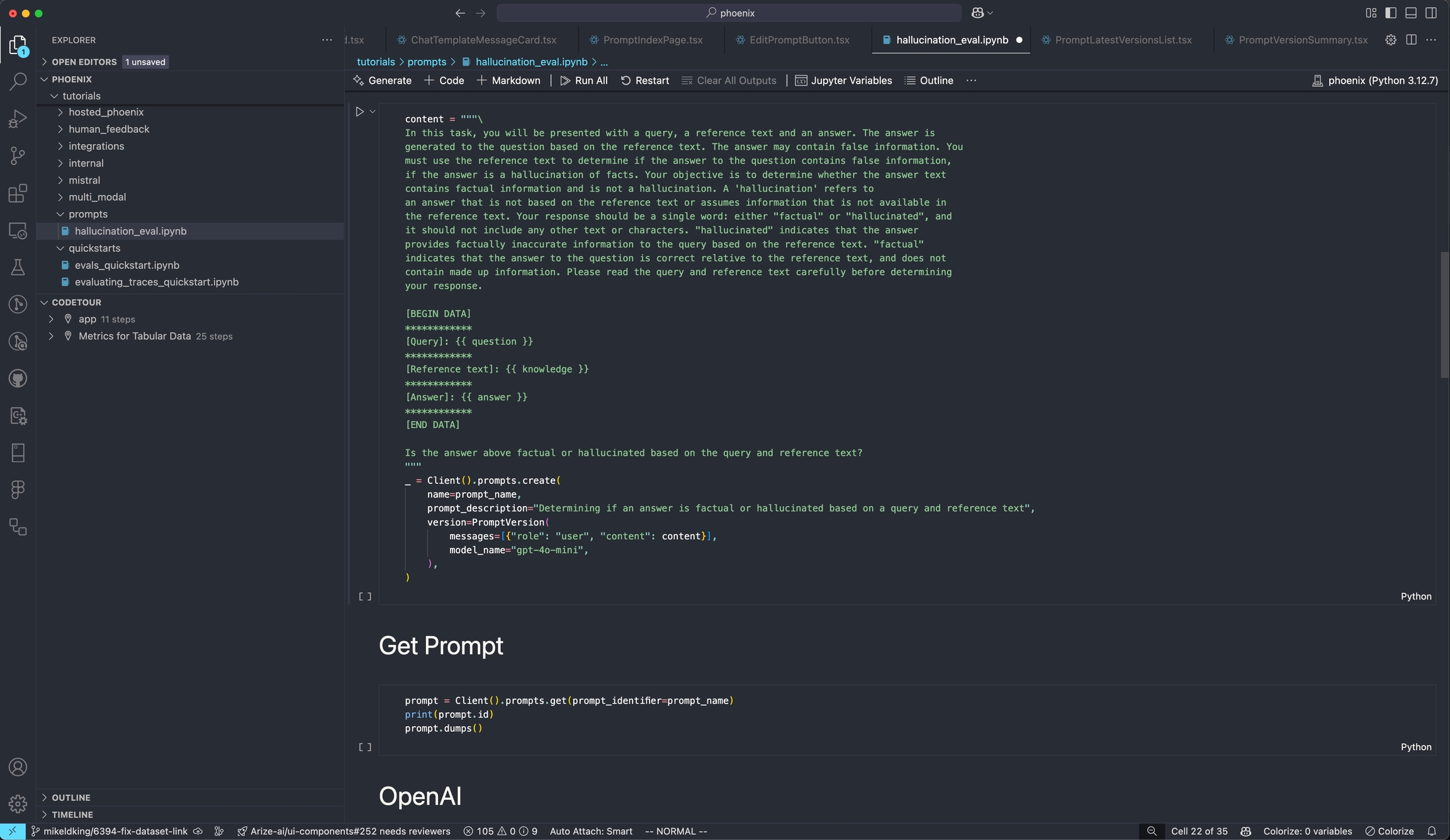

Pull and push prompt changes via Phoenix's Python and TypeScript Clients

With Phoenix as the backend, you can manage and manipulate prompts in code using our Python or TypeScript SDKs.

With the Phoenix Client SDK you can:

Create prompts dynamically

Pull templates by name, version, or tag

Format templates with runtime variables and use them in your code. Native support for OpenAI, Anthropic, Gemini, Vercel AI SDK, and more. No proprietary client necessary.

Support for tools and structured outputs. Execute tools defined within the prompt. Phoenix prompts encompass more than just the text and messages.
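The "format templates with runtime variables" step can be sketched as follows. This sketch makes three assumptions. First, placeholders use a Mustache-style `{{variable}}` syntax. Second, the output is an OpenAI-style request dict with `model` and `messages` keys. Third, `format_messages` and `to_chat_request` are hypothetical helpers, not Phoenix SDK functions. The SDKs ship their own formatting helpers, so check their reference for the real calls.

```python
from __future__ import annotations

import re

# Mustache-style placeholder such as {{article}} or {{ article }} (syntax assumed for this sketch).
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def format_messages(messages: list[dict], variables: dict) -> list[dict]:
    """Return copies of the messages with every placeholder filled in."""
    needed = {name for m in messages for name in PLACEHOLDER.findall(m["content"])}
    missing = needed - set(variables)
    if missing:
        # Refuse to send a half-filled prompt to the model.
        raise KeyError(f"missing template variables: {sorted(missing)}")
    return [
        {**m, "content": PLACEHOLDER.sub(
            lambda match: str(variables[match.group(1)]), m["content"])}
        for m in messages
    ]


def to_chat_request(model_name: str, messages: list[dict], variables: dict,
                    **invocation_parameters) -> dict:
    """Shape a request like the keyword arguments of an OpenAI chat completion."""
    return {"model": model_name,
            "messages": format_messages(messages, variables),
            **invocation_parameters}


template = [
    {"role": "system", "content": "You summarize {{topic}} articles."},
    {"role": "user", "content": "Summarize:\n{{ article }}"},
]
request = to_chat_request("gpt-4o-mini", template,
                          {"topic": "sports", "article": "The home team won 3-1."},
                          temperature=0.2)
print(request["messages"][0]["content"])  # You summarize sports articles.
```

With the official `openai` Python package, the resulting dict could be passed as `OpenAI().chat.completions.create(**request)`. The template list itself is never modified, so the same stored prompt can be formatted with different variables on every call.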

To learn more about managing Prompts in code, see

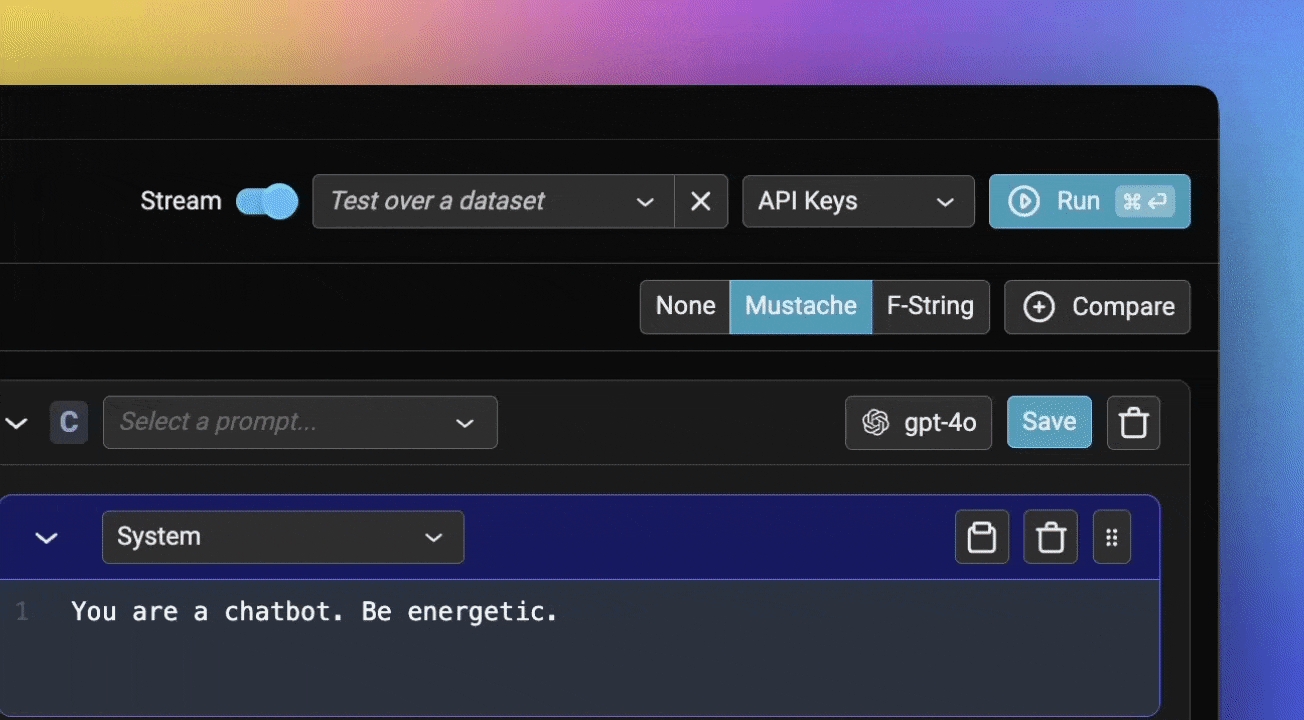

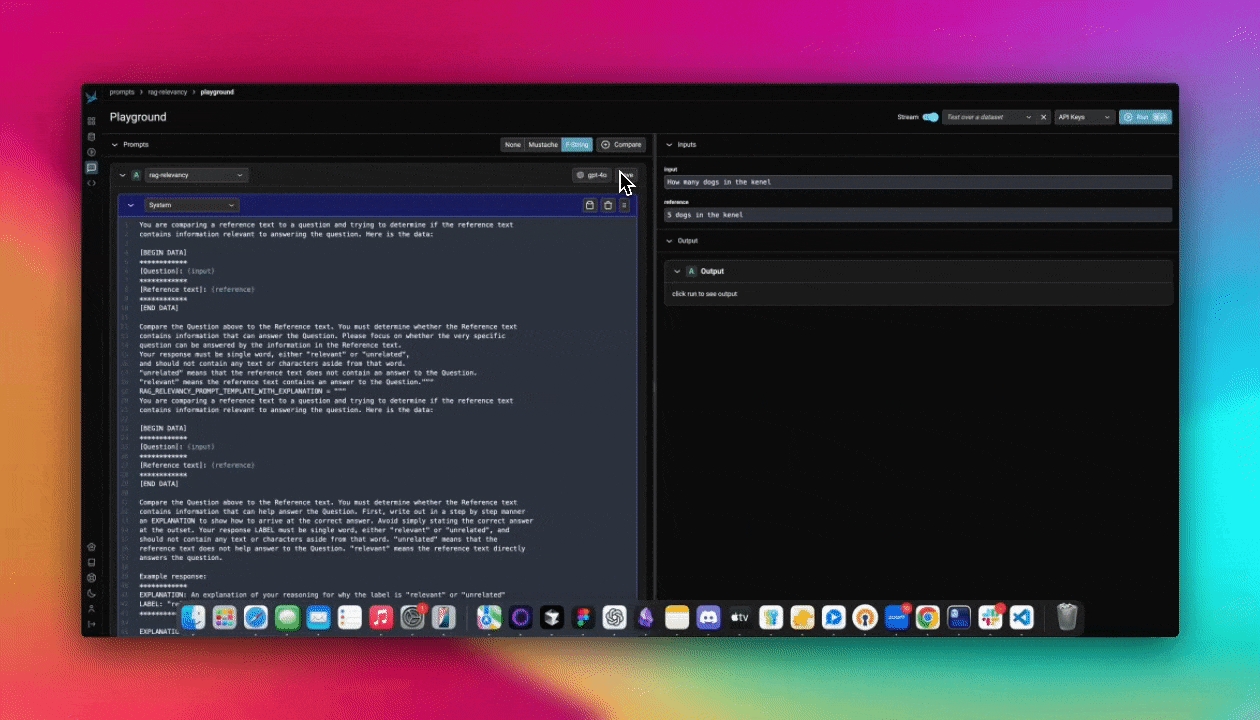

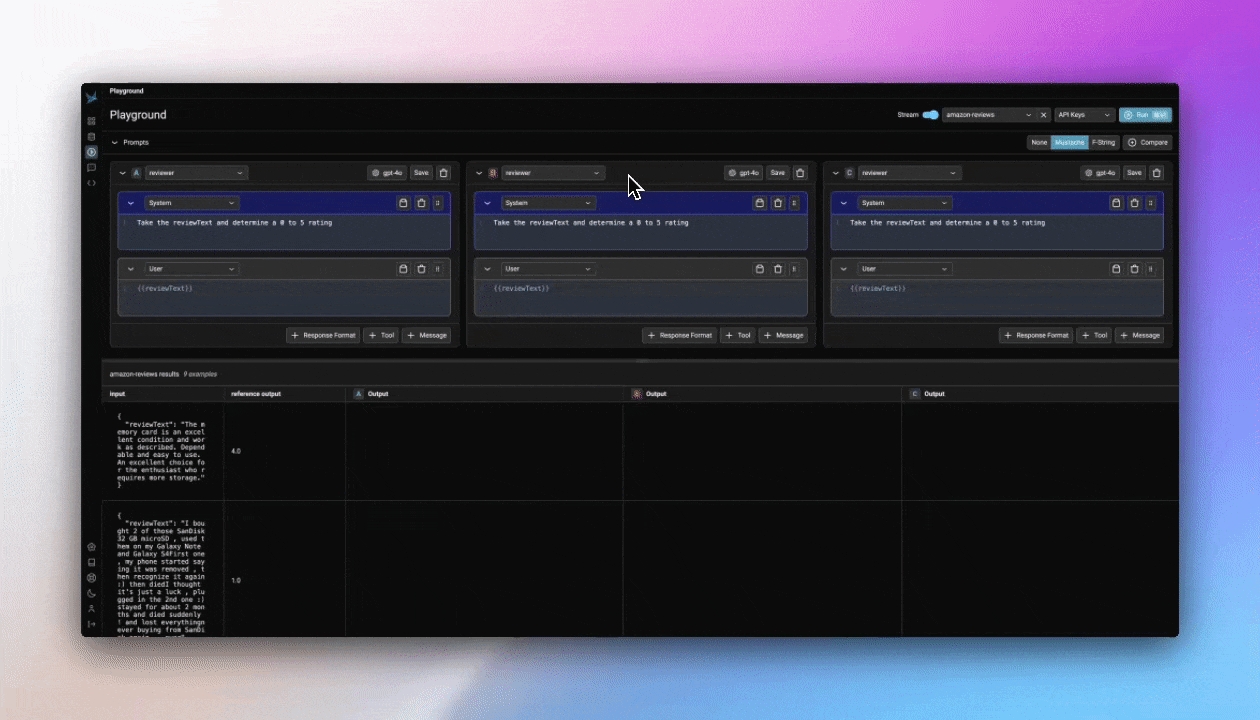

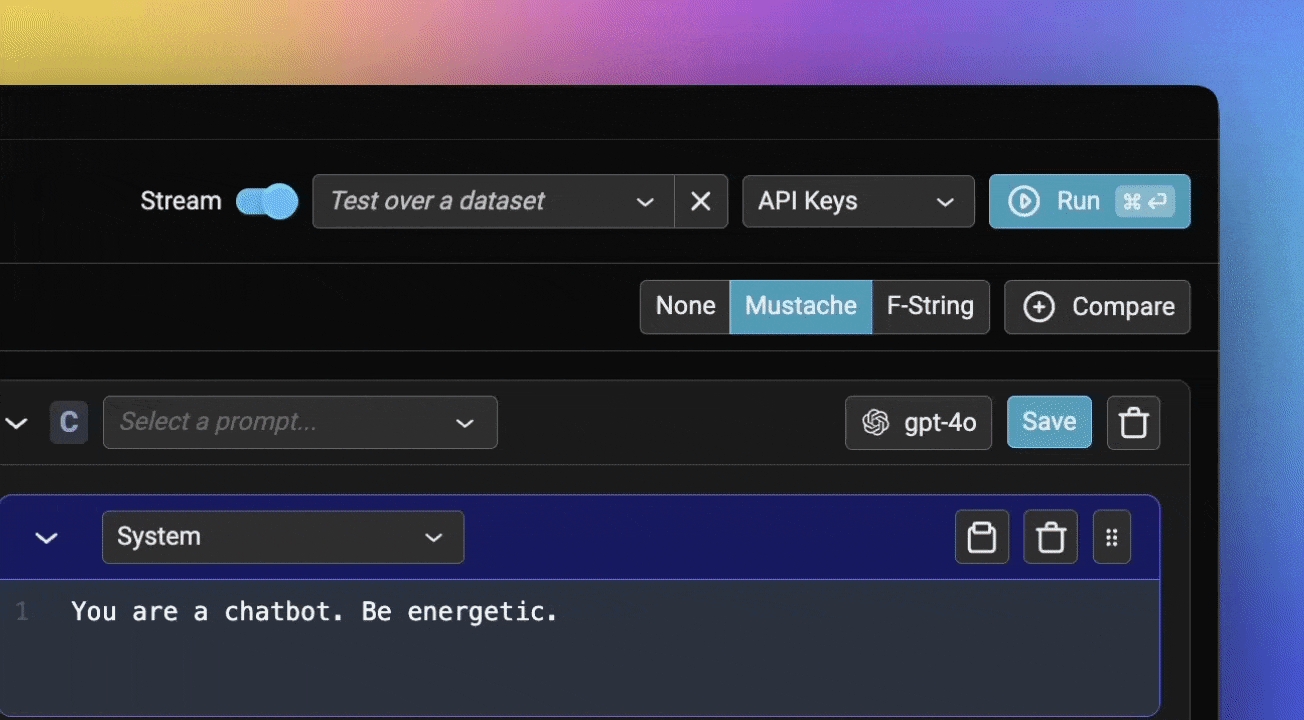

Phoenix's Prompt Playground makes iterating on and testing prompts quick and easy. The playground supports various AI providers (OpenAI, Anthropic, Gemini, Azure) as well as custom model endpoints, making it an ideal prompt IDE for building, experimenting with, and evaluating prompts and models for your task.

Speed: Rapidly test variations in the prompt, model, invocation parameters, tools, and output format.

Reproducibility: All runs of the playground are recorded as traces and experiments, unlocking annotations and evaluation.

Datasets: Use dataset examples as a fixture to run a prompt variant through its paces and to evaluate it systematically.

Prompt Management: Load, edit, and save prompts directly within the playground.
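The dataset workflow described above can be sketched in plain Python: run each prompt variant over the same examples, then score every output. `run_experiment`, `exact_match`, and `stub_model` are hypothetical names. The stub replaces a real model call so the example runs offline, and `str.format` placeholders are used for brevity. In Phoenix itself, playground runs over a dataset are recorded as experiments rather than computed by hand like this.

```python
from __future__ import annotations

from statistics import mean
from typing import Callable


def run_experiment(template: str, dataset: list[dict],
                   model: Callable[[str], str],
                   evaluator: Callable[[str, str], float]) -> dict:
    """Run one prompt variant over every dataset example and score each output."""
    results = []
    for example in dataset:
        prompt = template.format(**example["input"])  # fill {text}-style placeholders
        output = model(prompt)
        results.append({"output": output,
                        "score": evaluator(output, example["expected"])})
    return {"results": results, "mean_score": mean(r["score"] for r in results)}


def exact_match(output: str, expected: str) -> float:
    """1.0 when the output equals the expected label, ignoring case and whitespace."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: terse only when the prompt asks for one word."""
    label = "positive" if "love" in prompt.lower() else "negative"
    return label if "one word" in prompt else f"I think this is {label}."


dataset = [
    {"input": {"text": "I love this phone"}, "expected": "positive"},
    {"input": {"text": "The battery died in an hour"}, "expected": "negative"},
]
variants = {
    "A": "Classify the sentiment of: {text}",
    "B": "Answer in one word, positive or negative. Sentiment of: {text}",
}
for name, template in variants.items():
    print(name, run_experiment(template, dataset, stub_model, exact_match)["mean_score"])
# A 0.0
# B 1.0
```

Only the template changes between runs, while the dataset and evaluator stay fixed. That is what makes the two mean scores directly comparable.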

To learn more about how to use the playground, see Using the Playground.

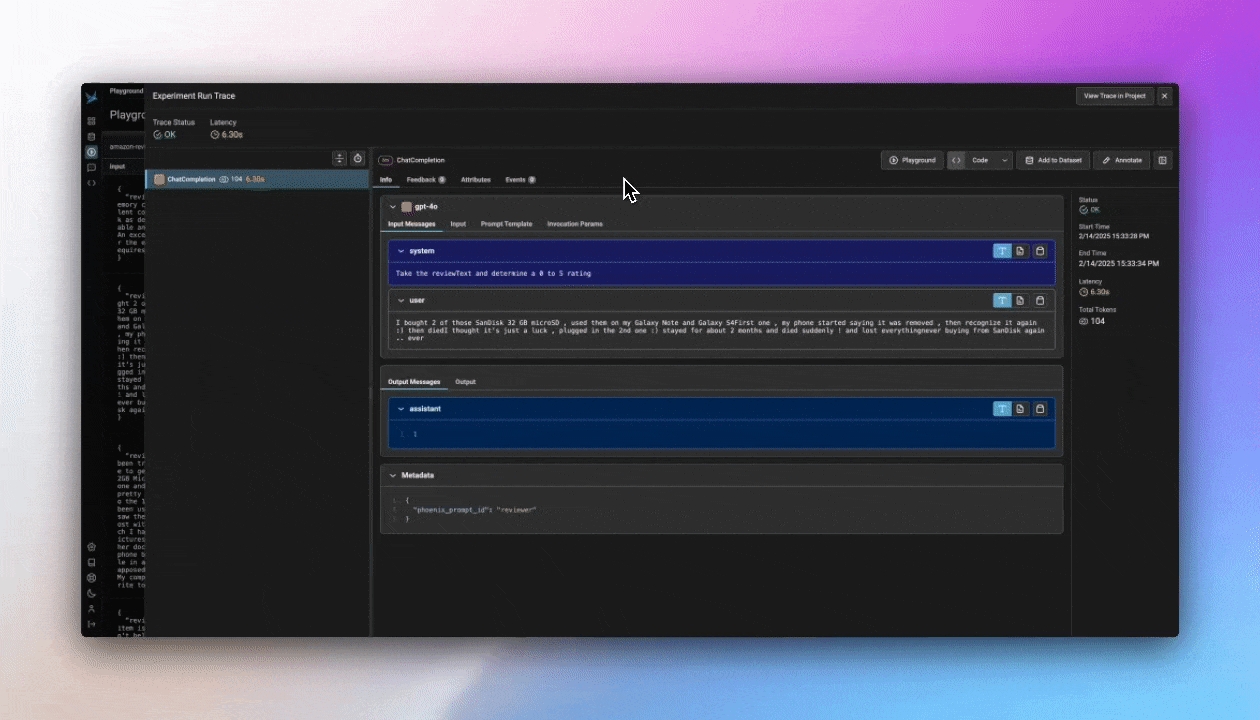

Replay LLM spans traced in your application directly in the playground

Have you ever wanted to go back into a multi-step LLM chain and replay just one step to see if you could get a better outcome? Well, you can with Phoenix's Span Replay. LLM spans that are stored within Phoenix can be loaded into the Prompt Playground and replayed. Replaying spans inside of Playground enables you to debug and improve the performance of your LLM systems by comparing LLM provider outputs, tweaking model parameters, changing prompt text, and more.
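Conceptually, replaying one LLM step means rebuilding the request recorded in its span, applying edits, and invoking the model again. The sketch below assumes a simplified, hypothetical span dict whose keys are loosely modeled on OpenInference attribute names (`llm.model_name`, `llm.invocation_parameters`, `llm.input_messages`, `output.value`). Spans stored in Phoenix are laid out differently. `build_replay_request`, `replay`, and `fake_llm` are illustrative names only.

```python
import copy
import json

# Simplified, hypothetical span record; real Phoenix spans store attributes differently.
recorded_span = {
    "name": "ChatCompletion",
    "attributes": {
        "llm.model_name": "gpt-4o-mini",
        "llm.invocation_parameters": '{"temperature": 1.0}',  # stored as a JSON string
        "llm.input_messages": [
            {"role": "system", "content": "Route the query to a tool."},
            {"role": "user", "content": "What's the weather in Paris?"},
        ],
        "output.value": "search_tool",
    },
}


def build_replay_request(span, *, model_name=None, system_prompt=None, **param_overrides):
    """Rebuild the recorded request, applying optional model, prompt, or parameter edits."""
    attrs = span["attributes"]
    messages = copy.deepcopy(attrs["llm.input_messages"])  # never mutate the recorded span
    if system_prompt is not None:
        for message in messages:
            if message["role"] == "system":
                message["content"] = system_prompt
    params = json.loads(attrs.get("llm.invocation_parameters", "{}"))
    params.update(param_overrides)  # e.g. temperature=0.0
    return {"model": model_name or attrs["llm.model_name"], "messages": messages, **params}


def replay(span, llm, **edits):
    """Re-run one LLM step; return (recorded output, replayed output) for comparison."""
    return span["attributes"].get("output.value"), llm(build_replay_request(span, **edits))


def fake_llm(request):
    """Hypothetical stand-in for a provider call, so the sketch runs offline."""
    return "weather_tool" if request["temperature"] == 0.0 else "search_tool"


original, replayed = replay(recorded_span, fake_llm, temperature=0.0)
print(original, "->", replayed)  # search_tool -> weather_tool
```

Keeping the recorded span untouched and applying edits to a copy lets you try several variations against the same captured input and compare each result with the original output.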

Chat completions generated inside of Playground are automatically instrumented, and the recorded spans are immediately available to be replayed inside of Playground.