Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Use embeddings to explore lower-dimensional representations of your data, identifying clusters of high drift and performance degradation. Complement this with statistical analysis of structured data for A/B testing, temporal drift detection, and deeper performance insights.

Chatbot with User Feedback

or

Embeddings Analysis: Data Exploration

Embeddings Analysis: Model Performance

Structured Data Analysis

Iteratively improve your LLM task by building datasets, running experiments, and evaluating performance using code and LLM-as-a-judge.

Leverage the power of large language models to evaluate your generative model or application for hallucinations, toxicity, relevance of retrieved documents, and more.

For each described in the inference (s), Phoenix serves a embeddings troubleshooting view to help you identify areas of drift and performance degradation. Let's start with embedding drift.

The picture below shows a time series graph of the drift between two groups of vectors –- the primary (typically production) vectors and reference / baseline vectors. Phoenix uses euclidean distance as the primary measure of embedding drift and helps us identify times where your inference set is diverging from a given reference baseline.

Note that when you are troubleshooting search and retrieval using inferences, the euclidean distance of your queries to your knowledge base vectors is presented as query distance.

Moments of high euclidean distance is an indication that the primary inference set is starting to drift from the reference inference set. As the primary inferences move further away from the reference (both in angle and in magnitude), the euclidean distance increases as well. For this reason times of high euclidean distance are a good starting point for trying to identify new anomalies and areas of drift.

For an in-depth guide of euclidean distance and embedding drift, check out

In Phoenix, you can views the drift of a particular embedding in a time series graph at the top of the page. To diagnose the cause of the drift, click on the graph at different times to view a breakdown of the embeddings at particular time.

Phoenix automatically breaks up your embeddings into groups of inferences using a clustering algorithm called . This is particularly useful if you are trying to identify areas of your embeddings that are drifting or performing badly.

When twos are used to initialize phoenix, the clusters are automatically ordered by drift. This means that clusters that are suffering from the highest amount of under-sampling (more in the primary inferences than the reference) are bubbled to the top. You can click on these clusters to view the details of the points contained in each cluster.

Phoenix projects the embeddings you provided into lower dimensional space (3 dimensions) using a dimension reduction algorithm called (stands for Uniform Manifold Approximation and Projection). This lets us understand how your in a visually understandable way.

In addition to the point-cloud, another dimension we have at our disposal is color (and in some cases shape). Out of the box phoenix let's you assign colors to the UMAP point-cloud by dimension (features, tags, predictions, actuals), performance (correctness which distinguishes true positives and true negatives from the incorrect predictions), and inference (to highlight areas of drift). This helps you explore your point-cloud from different perspectives depending on what you are looking for.

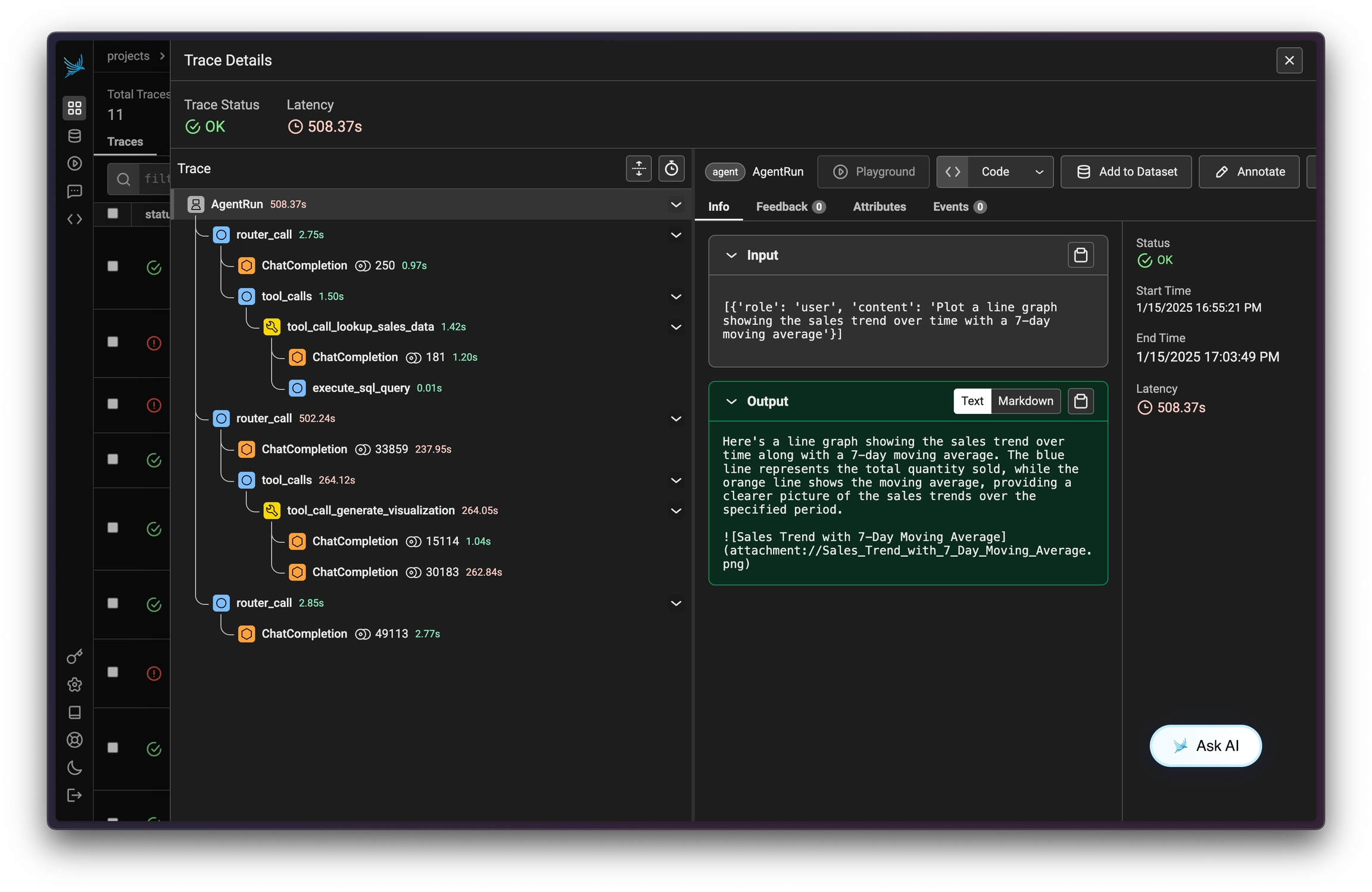

Trace through the execution of your LLM application to understand its internal structure and to troubleshoot issues with retrieval, tool execution, LLM calls, and more.

Comprehensive Use Cases

RAG Use Cases

Code Generation Agent

Explore a Code Generator Copilot Agent designed to generate, optimize, and validate code.

RAG Agent

Enter a source URL and collect traces in Phoenix to see how a RAG Agent can retrieve and generate accurate responses.

Computer Use Agent

Test out a Computer Use (Operator) Agent built to execute commands, edit files, and manage system operations.

LLM Evaluations

Evaluations Use Cases

Evaluating and Improving RAG Applications

Tracing Applications

Tracing Use Cases

Tracing with Sessions

Open AI Functions

Data extraction tasks using LLMs, such as scraping text from documents or pulling key information from paragraphs, are on the rise. Using an LLM for this task makes sense - LLMs are great at inherently capturing the structure of language, so extracting that structure from text using LLM prompting is a low cost, high scale method to pull out relevant data from unstructured text.

One approach is using a flattened schema. Let's say you're dealing with extracting information for a trip planning application. The query may look something like:

User: I need a budget-friendly hotel in San Francisco close to the Golden Gate Bridge for a family vacation. What do you recommend?

As the application designer, the schema you may care about here for downstream usage could be a flattened representation looking something like:

{

budget: "low",

location: "San Francisco",

purpose: "pleasure"

}With the above extracted attributes, your downstream application can now construct a structured query to find options that might be relevant to the user.

Structured extraction is a place where it’s simplest to work directly with the OpenAI function calling API. Open AI functions for structured data extraction recommends providing the following JSON schema object in the form ofparameters_schema(the desired fields for structured data output).

parameters_schema = {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": 'The desired destination location. Use city, state, and country format when possible. If no destination is provided, return "unstated".',

},

"budget_level": {

"type": "string",

"enum": ["low", "medium", "high", "not_stated"],

"description": 'The desired budget level. If no budget level is provided, return "not_stated".',

},

"purpose": {

"type": "string",

"enum": ["business", "pleasure", "other", "non_stated"],

"description": 'The purpose of the trip. If no purpose is provided, return "not_stated".',

},

},

"required": ["location", "budget_level", "purpose"],

}

function_schema = {

"name": "record_travel_request_attributes",

"description": "Records the attributes of a travel request",

"parameters": parameters_schema,

}

system_message = (

"You are an assistant that parses and records the attributes of a user's travel request."

)The ChatCompletion call to Open AI would look like

response = openai.ChatCompletion.create(

model=model,

messages=[

{"role": "system", "content": system_message},

{"role": "user", "content": travel_request},

],

functions=[function_schema],

# By default, the LLM will choose whether or not to call a function given the conversation context.

# The line below forces the LLM to call the function so that the output conforms to the schema.

function_call={"name": function_schema["name"]},

)You can use phoenix spans and traces to inspect the invocation parameters of the function to

verify the inputs to the model in form of the the user message

verify your request to Open AI

verify the corresponding generated outputs from the model match what's expected from the schema and are correct

Point level evaluation is a great starting point, but verifying correctness of extraction at scale or in a batch pipeline can be challenging and expensive. Evaluating data extraction tasks performed by LLMs is inherently challenging due to factors like:

The diverse nature and format of source data.

The potential absence of a 'ground truth' for comparison.

The intricacies of context and meaning in extracted data.

To learn more about how to evaluate structured extraction applications, head to our documentation on LLM assisted evals!

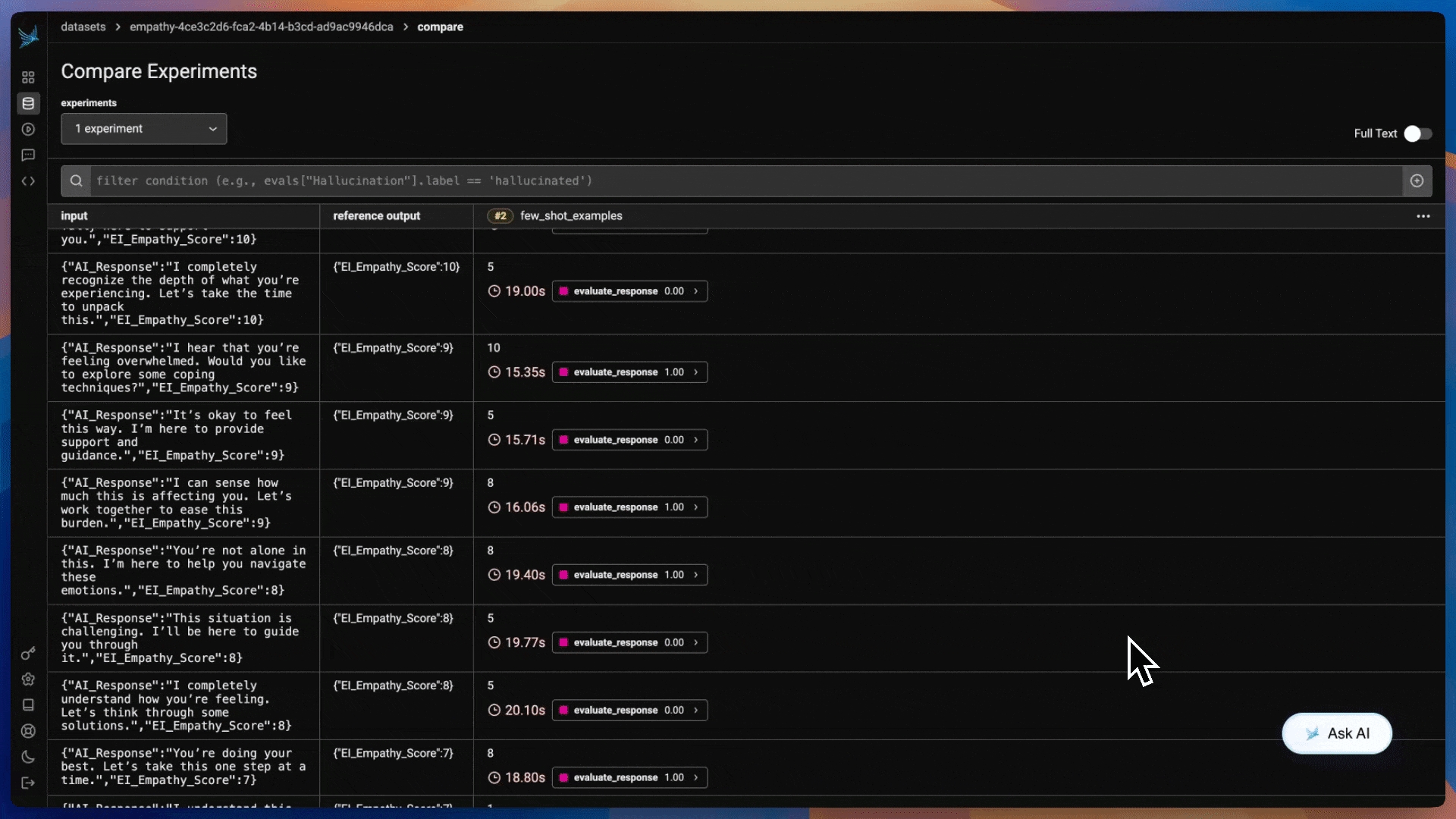

Few-shot prompting is a powerful technique in prompt engineering that helps LLMs perform tasks more effectively by providing a few examples within the prompt.

Unlike zero-shot prompting, where the model must infer the task with no prior context, or one-shot prompting, where a single example is provided, few-shot prompting leverages multiple examples to guide the model’s responses more accurately.

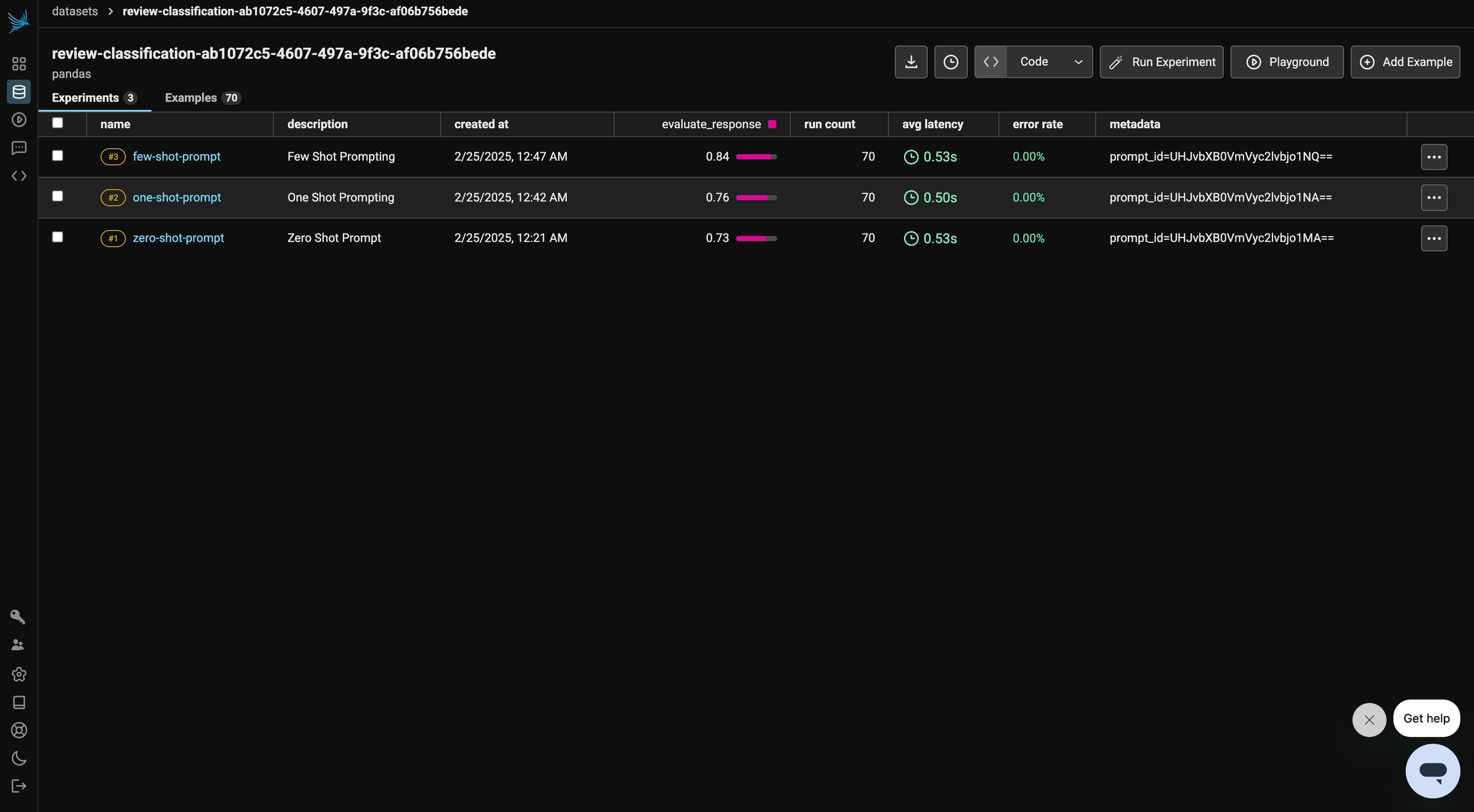

In this tutorial you will:

Explore how different prompting strategies impact performance in a sentiment analysis task on a dataset of reviews.

Run an evaluation to measure how the prompt affects the model’s performance

Track your how your prompt and experiment changes overtime in Phoenix

By the end of this tutorial, you’ll have a clear understanding of how structured prompting can significantly enhance the results of any application.

⚠️You will need an OpenAI Key for this tutorial.

Let’s get started! 🚀

!pip install -qqq "arize-phoenix>=8.0.0" datasets openinference-instrumentation-openaiNext you need to connect to Phoenix. The code below will connect you to a Phoenix Cloud instance. You can also connect to a self-hosted Phoenix instance if you'd prefer.

import os

from getpass import getpass

os.environ["PHOENIX_COLLECTOR_ENDPOINT"] = "https://app.phoenix.arize.com"

if not os.environ.get("PHOENIX_CLIENT_HEADERS"):

os.environ["PHOENIX_CLIENT_HEADERS"] = "api_key=" + getpass("Enter your Phoenix API key: ")

if not os.environ.get("OPENAI_API_KEY"):

os.environ["OPENAI_API_KEY"] = getpass("Enter your OpenAI API key: ")This dataset contains reviews along with their corresponding sentiment labels. Throughout this notebook, we will use the same dataset to evaluate the impact of different prompting techniques, refining our approach with each iteration.

Here, we also import the Phoenix Client, which enables us to create and modify prompts directly within the notebook while seamlessly syncing changes to the Phoenix UI.

from datasets import load_dataset

ds = load_dataset("syeddula/fridgeReviews")["train"]

ds = ds.to_pandas()

ds.head()import uuid

import phoenix as px

from phoenix.client import Client as PhoenixClient

unique_id = uuid.uuid4()

# Upload the dataset to Phoenix

dataset = px.Client().upload_dataset(

dataframe=ds,

input_keys=["Review"],

output_keys=["Sentiment"],

dataset_name=f"review-classification-{unique_id}",

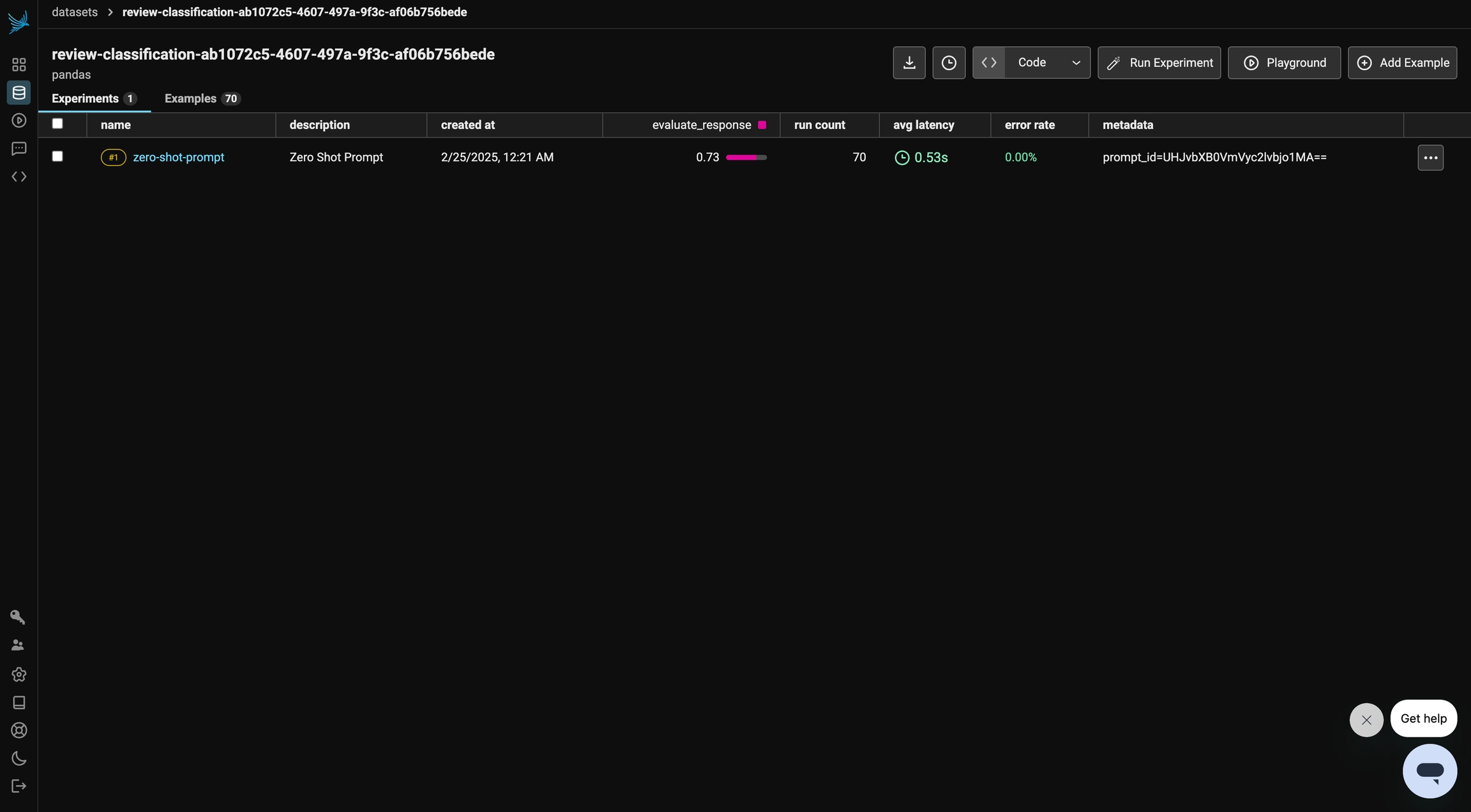

)Zero-shot prompting is a technique where a language model is asked to perform a task without being given any prior examples. Instead, the model relies solely on its pre-trained knowledge to generate a response. This approach is useful when you need quick predictions without providing specific guidance.

In this section, we will apply zero-shot prompting to our sentiment analysis dataset, asking the model to classify reviews as positive, negative, or neutral without any labeled examples. We’ll then evaluate its performance to see how well it can infer the task based on the prompt alone.

from openai import OpenAI

from openai.types.chat.completion_create_params import CompletionCreateParamsBase

from phoenix.client.types import PromptVersion

params = CompletionCreateParamsBase(

model="gpt-3.5-turbo",

temperature=0,

messages=[

{

"role": "system",

"content": "You are an evaluator who assesses the sentiment of a review. Output if the review positive, negative, or neutral. Only respond with one of these classifications.",

},

{"role": "user", "content": "{{Review}}"},

],

)

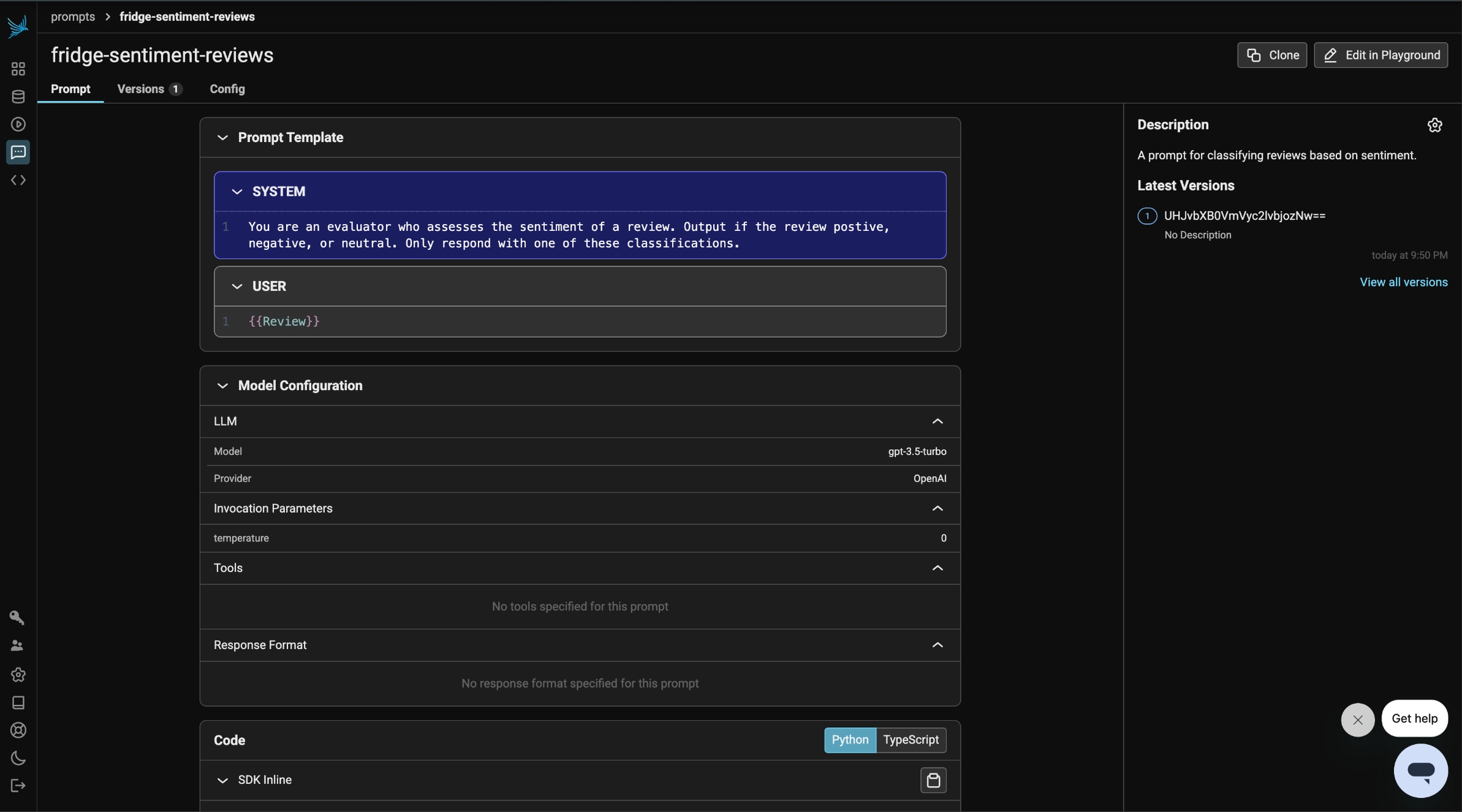

prompt_identifier = "fridge-sentiment-reviews"

prompt = PhoenixClient().prompts.create(

name=prompt_identifier,

prompt_description="A prompt for classifying reviews based on sentiment.",

version=PromptVersion.from_openai(params),

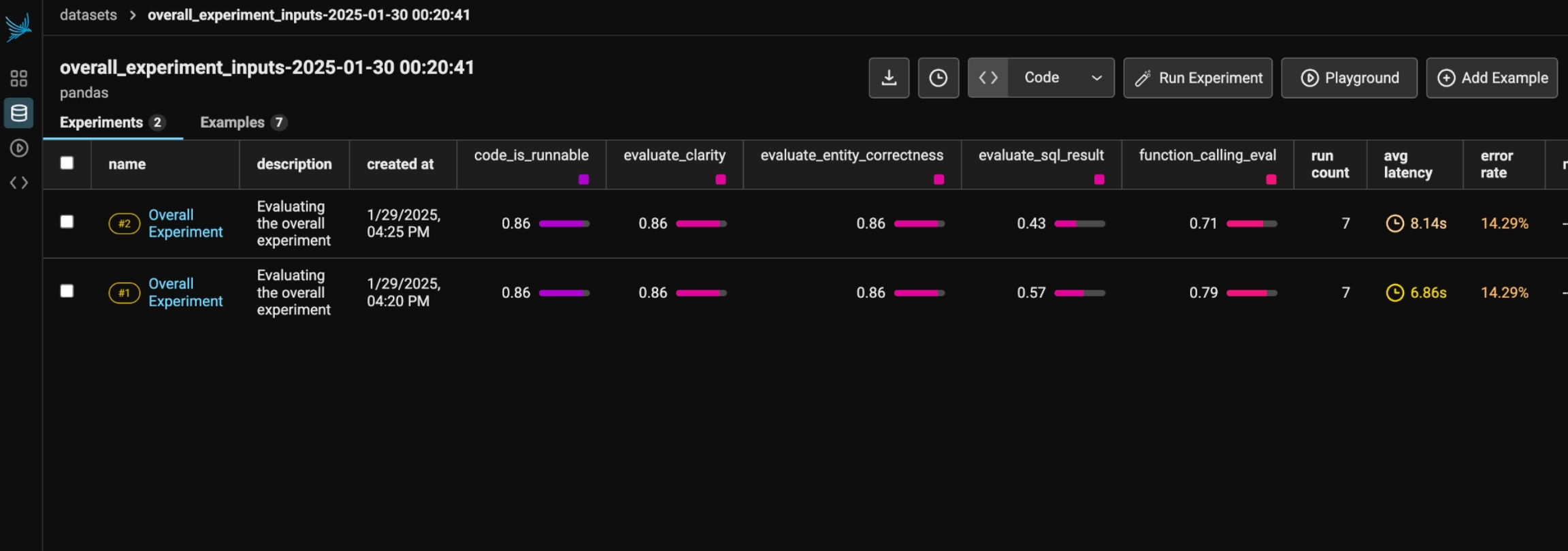

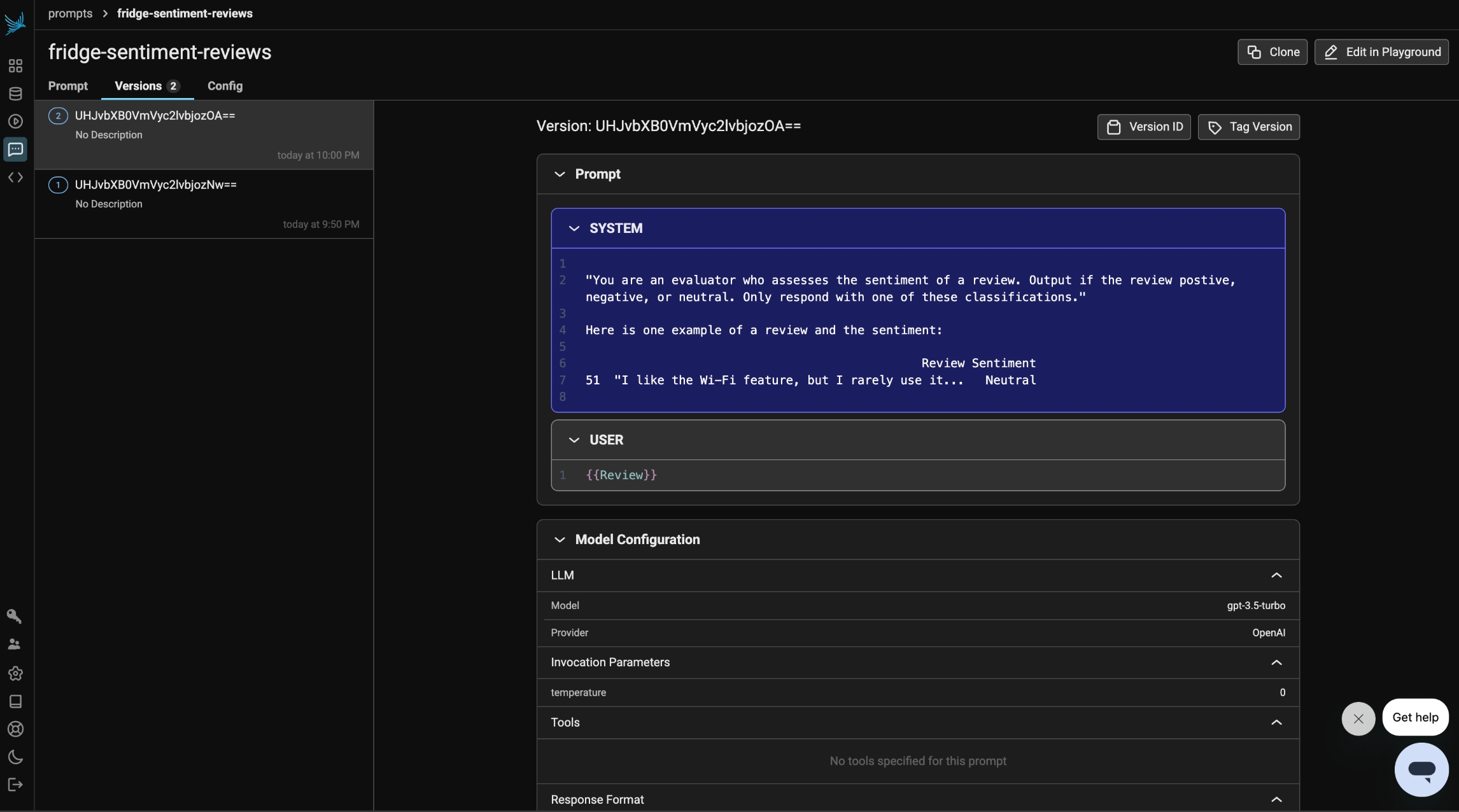

)At this stage, this initial prompt is now available in Phoenix under the Prompt tab. Any modifications made to the prompt moving forward will be tracked under Versions, allowing you to monitor and compare changes over time.

Prompts in Phoenix store more than just text—they also include key details such as the prompt template, model configurations, and response format, ensuring a structured and consistent approach to generating outputs.

Next we will define a task and evaluator for the experiment.

Because our dataset has ground truth labels, we can use a simple function to check if the output of the task matches the expected output.

def zero_shot_prompt(input):

client = OpenAI()

resp = client.chat.completions.create(**prompt.format(variables={"Review": input["Review"]}))

return resp.choices[0].message.content.strip()

def evaluate_response(output, expected):

return output.lower() == expected["Sentiment"].lower()If you’d like to instrument your code, you can run the cell below. While this step isn’t required for running prompts and evaluations, it enables trace visualization for deeper insights into the model’s behavior.

from openinference.instrumentation.openai import OpenAIInstrumentor

from phoenix.otel import register

tracer_provider = register(project_name="few-shot-examples")

OpenAIInstrumentor().instrument(tracer_provider=tracer_provider)Finally, we run our experiment. We can view the results of the experiment in Phoenix.

import nest_asyncio

from phoenix.experiments import run_experiment

nest_asyncio.apply()

initial_experiment = run_experiment(

dataset,

task=zero_shot_prompt,

evaluators=[evaluate_response],

experiment_description="Zero-Shot Prompt",

experiment_name="zero-shot-prompt",

experiment_metadata={"prompt": "prompt_id=" + prompt.id},

)In the following sections, we refine the prompt to enhance the model's performance and improve the evaluation results on our dataset.

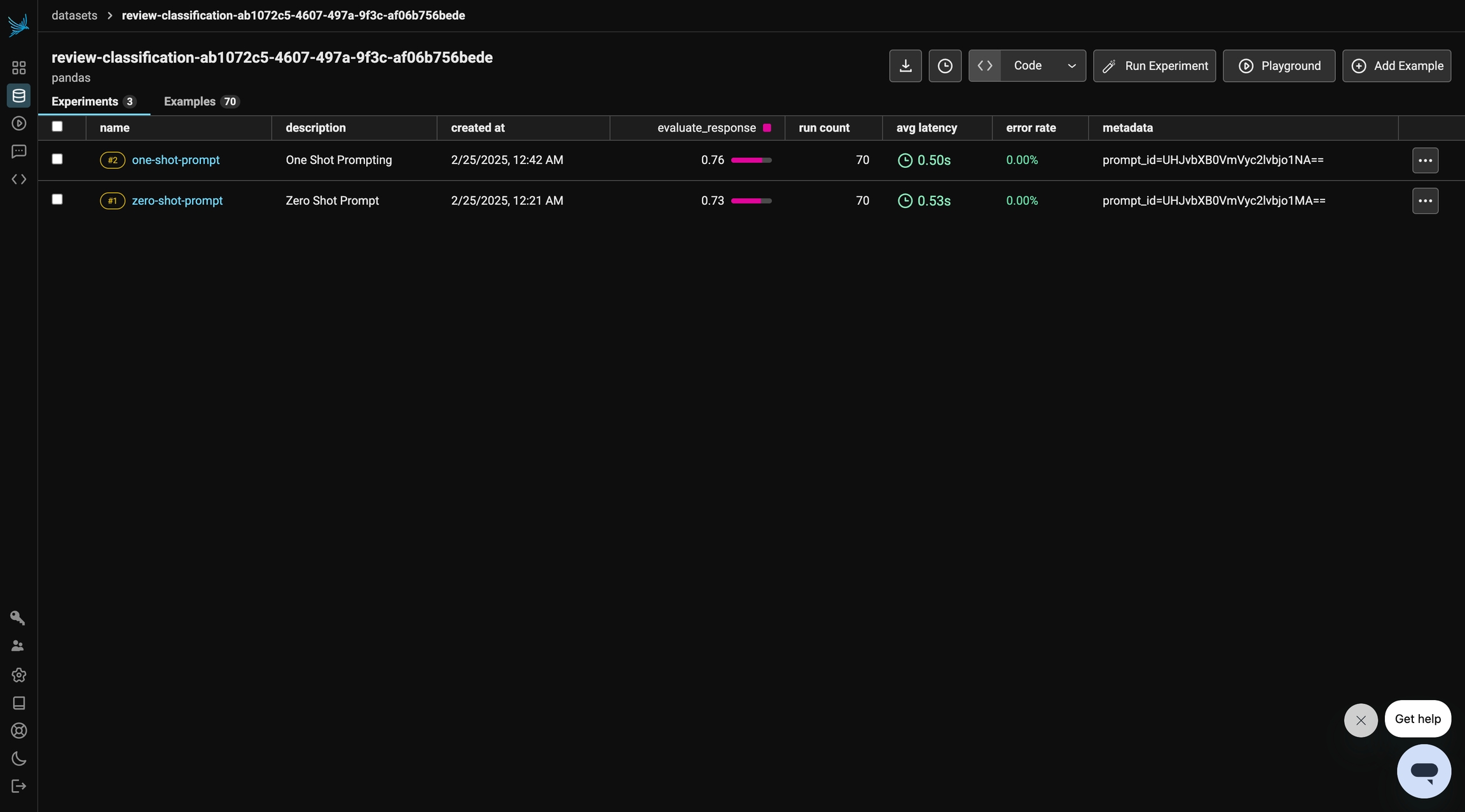

One-shot prompting provides the model with a single example to guide its response. By including a labeled example in the prompt, we give the model a clearer understanding of the task, helping it generate more accurate predictions compared to zero-shot prompting.

In this section, we will apply one-shot prompting to our sentiment analysis dataset by providing one labeled review as a reference. We’ll then evaluate how this small amount of guidance impacts the model’s ability to classify sentiments correctly.

ds = load_dataset("syeddula/fridgeReviews")["test"]

one_shot_example = ds.to_pandas().sample(1)one_shot_template = """

"You are an evaluator who assesses the sentiment of a review. Output if the review positive, negative, or neutral. Only respond with one of these classifications."

Here is one example of a review and the sentiment:

{examples}

"""

params = CompletionCreateParamsBase(

model="gpt-3.5-turbo",

temperature=0,

messages=[

{"role": "system", "content": one_shot_template.format(examples=one_shot_example)},

{"role": "user", "content": "{{Review}}"},

],

)

one_shot_prompt = PhoenixClient().prompts.create(

name=prompt_identifier,

prompt_description="One-shot prompt for classifying reviews based on sentiment.",

version=PromptVersion.from_openai(params),

)Under the prompts tab in Phoenix, we can see that our prompt has an updated version. The prompt includes one random example from the test dataset to help the model make its classification.

Similar to the previous step, we will define the task and run the evaluator. This time, we will be using our updated prompt for One-Shot Prompting and see how the evaluation changes.

def one_shot_prompt_template(input):

client = OpenAI()

resp = client.chat.completions.create(

**one_shot_prompt.format(variables={"Review": input["Review"]})

)

return resp.choices[0].message.content.strip()one_shot_experiment = run_experiment(

dataset,

task=one_shot_prompt_template,

evaluators=[evaluate_response],

experiment_description="One-Shot Prompting",

experiment_name="one-shot-prompt",

experiment_metadata={"prompt": "prompt_id=" + one_shot_prompt.id},

)In this run, we observe a slight improvement in the evaluation results. Let’s see if we can further enhance performance in the next section.

Note: You may sometimes see a decline in performance, which is not necessarily "wrong." Results can vary due to factors such as the choice of LLM, the randomness of selected test examples, and other inherent model behaviors.

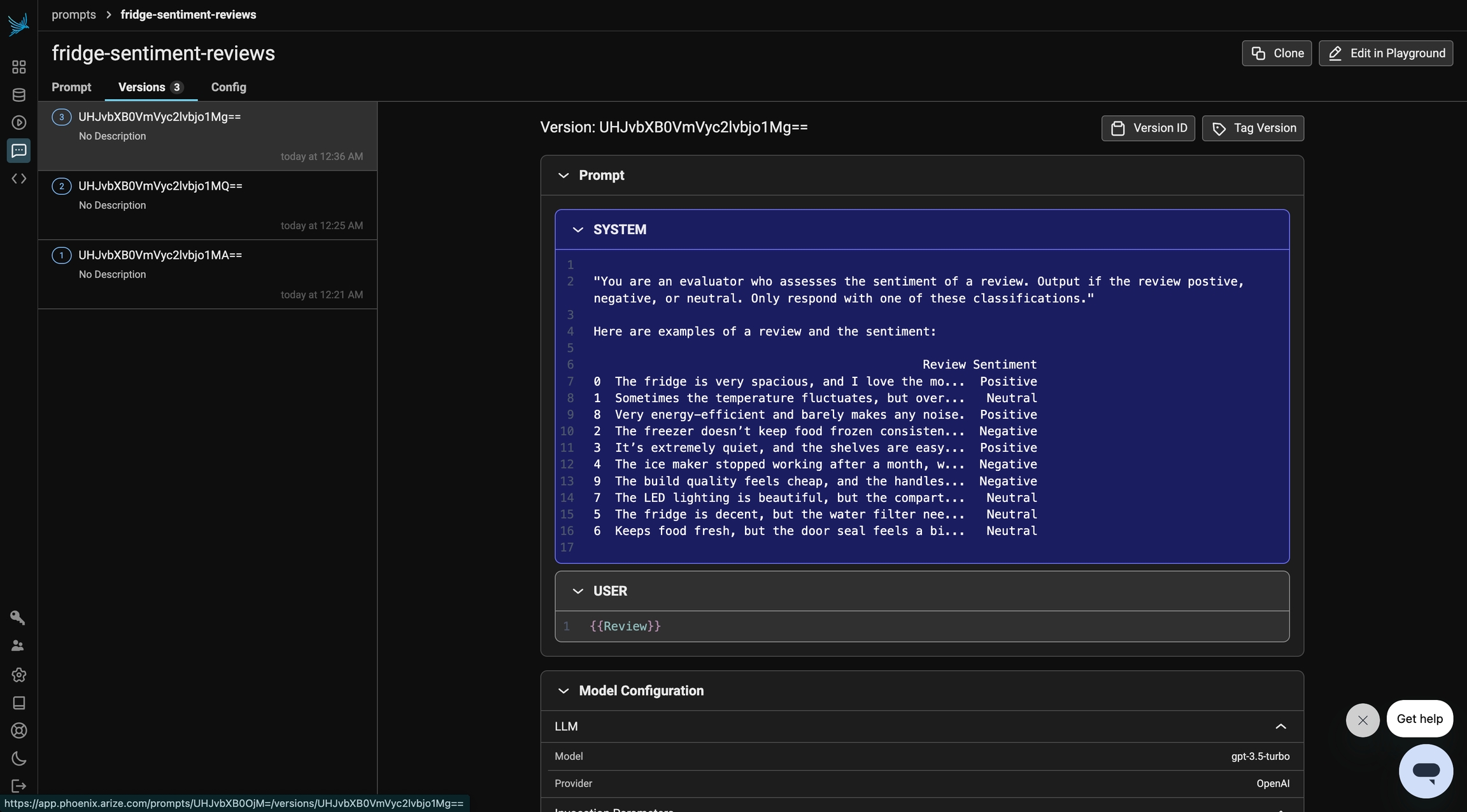

Finally, we will explore few-shot Prompting which enhances a model’s performance by providing multiple labeled examples within the prompt. By exposing the model to several instances of the task, it gains a better understanding of the expected output, leading to more accurate and consistent responses.

In this section, we will apply few-shot prompting to our sentiment analysis dataset by including multiple labeled reviews as references. This approach helps the model recognize patterns and improves its ability to classify sentiments correctly. We’ll then evaluate its performance to see how additional examples impact accuracy compared to zero-shot and one-shot prompting.

ds = load_dataset("syeddula/fridgeReviews")["test"]

few_shot_examples = ds.to_pandas().sample(10)few_shot_template = """

"You are an evaluator who assesses the sentiment of a review. Output if the review positive, negative, or neutral. Only respond with one of these classifications."

Here are examples of a review and the sentiment:

{examples}

"""

params = CompletionCreateParamsBase(

model="gpt-3.5-turbo",

temperature=0,

messages=[

{"role": "system", "content": few_shot_template.format(examples=few_shot_examples)},

{"role": "user", "content": "{{Review}}"},

],

)

few_shot_prompt = PhoenixClient().prompts.create(

name=prompt_identifier,

prompt_description="Few-shot prompt for classifying reviews based on sentiment.",

version=PromptVersion.from_openai(params),

)Our updated prompt also lives in Phoenix. We can clearly see how the linear version history of our prompt was built.

Just like previous steps, we run our task and evaluation.

def few_shot_prompt_template(input):

client = OpenAI()

resp = client.chat.completions.create(

**few_shot_prompt.format(variables={"Review": input["Review"]})

)

return resp.choices[0].message.content.strip()few_shot_experiment = run_experiment(

dataset,

task=few_shot_prompt_template,

evaluators=[evaluate_response],

experiment_description="Few Shot Prompting",

experiment_name="few-shot-prompt",

experiment_metadata={"prompt": "prompt_id=" + few_shot_prompt.id},

)In this final run, we observe the most significant improvement in evaluation results. By incorporating multiple examples into our prompt, we provide clearer guidance to the model, leading to better sentiment classification.

Note: Performance may still vary, and in some cases, results might decline. Like before, this is not necessarily "wrong," as factors like the choice of LLM, the randomness of selected test examples, and inherent model behaviors can all influence outcomes.

From here, you can check out more examples on Phoenix, and if you haven't already, please give us a star on GitHub! ⭐️

How to leverage human annotations to build evaluations and experiments that improve your system

In this tutorial, we will explore how to build a custom human annotation interface for Phoenix using . We will then leverage those annotations to construct experiments and evaluate your application.

The purpose of a custom annotations UI is to make it easy for anyone to provide structured human feedback on traces, capturing essential details directly in Phoenix. Annotations are vital for collecting feedback during human review, enabling iterative improvement of your LLM applications.

By establishing this feedback loop and an evaluation pipeline, you can effectively monitor and enhance your system’s performance.

We will go through key code snippets on this page. To follow the full tutorial, check out the notebook or video above.

We will generate some LLM traces and send them to Phoenix. We will then annotate these traces to add labels, scores, or explanations directly onto specific spans.

We deliberately generate some bad or nonsensical traces in the system prompt to demonstrate annotating and experimenting with different types of results.

Visit our implementation here:

How to annotate your traces in Lovable:

Enter your Phoenix Cloud endpoint, API key, and project name. Optionally, also include an identifier to tie annotations to a specific user.

Click Refresh Traces.

Select the traces you want to annotate and click Send to Phoenix.

See your annotations appear instantly in Phoenix.

This tool was built using the Phoenix . For more details on how to build your own custom annotations tool to fit your needs, see .

Next, you will construct an LLM-as-a-Judge template to evaluate your experiments. This evaluator will mark nonsensical outputs as incorrect. As you experiment, you’ll see evaluation results improve. Once your annotated trace dataset shows consistent improvement, you can confidently apply these changes to your production system.

The next step is to form a hypothesis about why some outputs are failing. In our full walkthrough, we demonstrate the experimentation process by testing out different hypotheses such as swapping out models. However, for demonstration purposes, we will show an experiment that will almost certainly improve your results: modifying the weak system prompt we originally used.

Here, we expect to see improvements in our experiment. The evaluator should flag significantly fewer nonsensical answers as you have refined your system prompt.

Now that we’ve completed a successful experimentation cycle and confirmed our improvements on the annotated traces dataset, we can update the application and test the results on the broader dataset. This helps ensure that improvements made during experimentation translate effectively to real-world usage and that your system performs reliably at scale.

Here is a sample prompt you can feed into (or a similar tool) to start building your custom LLM trace annotation interface. Feel free to adjust it to your needs. Note that you will need to implement functionality to fetch spans and send annotations to Phoenix. We’ve also included a brief explanation of how we approached this in our own implementation. A tool like this can benefit teams that want to collect human annotation data without requiring annotators to work directly within the Phoenix platform. You can also configure features like “thumbs up” and “thumbs down” buttons to streamline filling in annotation fields. Once submitted, the annotations immediately appear in Phoenix.

Prompt for Lovable:

Build a platform for annotating LLM spans and traces:

Connect to Phoenix Cloud by collecting endpoint, API Key, and project name from the user

Load traces and spans from Phoenix (via or ).

Display spans grouped by trace_id, with clear visual separation.

Allow annotators to assign a label, score, and explanation to each span or entire trace.

Support sending annotations back to Phoenix and reloading to see updates.

Use a clean, modern design

Details on how we built our Annotation UI:

✅ Frontend (Lovable):

Built in Lovable for easy UI generation.

Allows loading LLM traces, displaying spans grouped by trace_id, and annotating spans with label, score, explanation.

✅ Backend (Render, FastAPI):

Hosted on Render using FastAPI.

Adds CORS for your Lovable frontend to communicate securely.

Uses two key endpoints:

GET /v1/projects/{project_identifier}/spans

POST /v1/span_annotations

questions = [

"What is the capital of France?",

"Who wrote 'Pride and Prejudice'?",

"What is the boiling point of water in Celsius?",

"What is the largest planet in our solar system?",

"Who developed the theory of relativity?",

"What is the chemical symbol for gold?",

"In which year did the Apollo 11 mission land on the moon?",

"What language has the most native speakers worldwide?",

"Which continent has the most countries?",

"What is the square root of 144?",

"What is the largest country in the world by land area?",

"Why is the sky blue?",

"Who painted the Mona Lisa?",

"What is the smallest prime number?",

"What gas do plants absorb from the atmosphere?",

"Who was the first President of the United States?",

"What is the currency of Japan?",

"How many continents are there on Earth?",

"What is the tallest mountain in the world?",

"Who is the author of '1984'?",

]from openai import OpenAI

openai_client = OpenAI()

# System prompt

system_prompt = """

You are a question-answering assistant. For each user question, randomly choose an option: NONSENSE or RHYME. If you choose RHYME, answer correctly in the form of a rhyme.

If it NONSENSE, do not answer the question at all, and instead respond with nonsense words and random numbers that do not rhyme, ignoring the user’s question completely.

When responding with NONSENSE, include at least five nonsense words and at least five random numbers between 0 and 9999 in your response.

Do not explain your choice.

"""

# Run through the dataset and collect spans

for question in questions:

response = openai_client.chat.completions.create(

model="gpt-4o",

messages=[

{"role": "system", "content": system_prompt},

{"role": "user", "content": question},

],

)import pandas as pd

import phoenix as px

from phoenix.client import Client

from phoenix.client.types import spans

client = Client()

# replace "correctness" if you chose to annotate on different criteria

query = spans.SpanQuery().where("annotations['correctness']")

spans_df = client.spans.get_spans_dataframe(query=query, project_identifier="my-annotations-app")

dataset = px.Client().upload_dataset(

dataframe=spans_df,

dataset_name="annotated-rhymes",

input_keys=["attributes.input.value"],

output_keys=["attributes.llm.output_messages"],

)RHYME_PROMPT_TEMPLATE = """

Examine the assistant’s responses in the conversation and determine whether the assistant used rhyme in any of its responses.

Rhyme means that the assistant’s response contains clear end rhymes within or across lines. This should be applicable to the entire response.

There should be no irrelevant phrases or numbers in the response.

Determine whether the rhyme is high quality or forced in addition to checking for the presence of rhyme.

This is the criteria for determining a well-written rhyme.

If none of the assistant's responses contain rhyme, output that the assistant did not rhyme.

[BEGIN DATA]

************

[Question]: {question}

************

[Response]: {answer}

[END DATA]

Your response must be a single word, either "correct" or "incorrect", and should not contain any text or characters aside from that word.

"correct" means the response contained a well written rhyme.

"incorrect" means the response did not contain a rhyme.

"""system_prompt = '''

You are a question-answering assistant. For each user question, answer correctly in the form of a rhyme.

'''

def updated_task(example: Example) -> str:

raw_input_value = example.input["attributes.input.value"]

data = json.loads(raw_input_value)

question = data["messages"][1]["content"]

response = openai_client.chat.completions.create(

model="gpt-4o",

messages=[

{"role": "system", "content": system_prompt},

{"role": "user", "content": question},

],

)

return response.choices[0].message.contentdef evaluate_response(input, output):

raw_input_value = input["attributes.input.value"]

data = json.loads(raw_input_value)

question = data["messages"][1]["content"]

response_classifications = llm_classify(

dataframe=pd.DataFrame([{"question": question, "answer": output}]),

template=RHYME_PROMPT_TEMPLATE,

model=OpenAIModel(model="gpt-4.1"),

rails=["correct", "incorrect"],

provide_explanation=True,

)

score = response_classifications.apply(lambda x: 0 if x["label"] == "incorrect" else 1, axis=1)

return scoreexperiment = run_experiment(

dataset,

task=updated_task,

evaluators=[evaluate_response],

experiment_name="updated system prompt",

experiment_description="updated system prompt",

)system_prompt = """

You are a question-answering assistant. For each user question, answer correctly in the form of a rhyme.

"""

# Run through the dataset and collect spans

def complete_task(question) -> str:

question_str = question["Questions"]

response = openai_client.chat.completions.create(

model="gpt-4o",

messages=[

{"role": "system", "content": system_prompt},

{"role": "user", "content": question_str},

],

)

return response.choices[0].message.content

def evaluate_all_responses(input, output):

response_classifications = llm_classify(

dataframe=pd.DataFrame([{"question": input["Questions"], "answer": output}]),

template=RHYME_PROMPT_TEMPLATE,

model=OpenAIModel(model="gpt-4o"),

rails=["correct", "incorrect"],

provide_explanation=True,

)

score = response_classifications.apply(lambda x: 0 if x["label"] == "incorrect" else 1, axis=1)

return score

experiment = run_experiment(

dataset=dataset, #full dataset of questions

task=complete_task,

evaluators=[evaluate_all_responses],

experiment_name="modified-system-prompt-full-dataset",

)Imagine you're deploying a service for your media company's summarization model that condenses daily news into concise summaries to be displayed online. One challenge of using LLMs for summarization is that even the best models tend to be verbose.

In this tutorial, you will construct a dataset and run experiments to engineer a prompt template that produces concise yet accurate summaries. You will:

Upload a dataset of examples containing articles and human-written reference summaries to Phoenix

Define an experiment task that summarizes a news article

Devise evaluators for length and ROUGE score

Run experiments to iterate on your prompt template and to compare the summaries produced by different LLMs

⚠️ This tutorial requires and OpenAI API key, and optionally, an Anthropic API key.

Let's get started!

Install requirements and import libraries.

pip install anthropic "arize-phoenix>=4.6.0" openai openinference-instrumentation-openai rouge tiktokenfrom typing import Any, Dict

import nest_asyncio

import pandas as pd

nest_asyncio.apply() # needed for concurrent evals in notebook environments

pd.set_option("display.max_colwidth", None) # display full cells of dataframesLaunch Phoenix and follow the instructions in the cell output to open the Phoenix UI.

import phoenix as px

px.launch_app()from openinference.instrumentation.openai import OpenAIInstrumentor

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

from opentelemetry.sdk import trace as trace_sdk

from opentelemetry.sdk.trace.export import SimpleSpanProcessor

endpoint = "http://127.0.0.1:6006/v1/traces"

tracer_provider = trace_sdk.TracerProvider()

tracer_provider.add_span_processor(SimpleSpanProcessor(OTLPSpanExporter(endpoint)))

OpenAIInstrumentor().instrument(tracer_provider=tracer_provider)Download your data from HuggingFace and inspect a random sample of ten rows. This dataset contains news articles and human-written summaries that we will use as a reference against which to compare our LLM generated summaries.

Upload the data as a dataset in Phoenix and follow the link in the cell output to inspect the individual examples of the dataset. Later in the notebook, you will run experiments over this dataset in order to iteratively improve your summarization application.

from datetime import datetime

from datasets import load_dataset

hf_ds = load_dataset("abisee/cnn_dailymail", "3.0.0")

df = (

hf_ds["test"]

.to_pandas()

.sample(n=10, random_state=0)

.set_index("id")

.rename(columns={"highlights": "summary"})

)

now = datetime.now().strftime("%Y-%m-%d %H:%M:%S")

dataset = px.Client().upload_dataset(

dataframe=df,

input_keys=["article"],

output_keys=["summary"],

dataset_name=f"news-article-summaries-{now}",

)A task is a callable that maps the input of a dataset example to an output by invoking a chain, query engine, or LLM. An experiment maps a task across all the examples in a dataset and optionally executes evaluators to grade the task outputs.

You'll start by defining your task, which in this case, invokes OpenAI. First, set your OpenAI API key if it is not already present as an environment variable.

import os

from getpass import getpass

if os.environ.get("OPENAI_API_KEY") is None:

os.environ["OPENAI_API_KEY"] = getpass("🔑 Enter your OpenAI API key: ")Next, define a function to format a prompt template and invoke an OpenAI model on an example.

from openai import AsyncOpenAI

from phoenix.experiments import Example

openai_client = AsyncOpenAI()

async def summarize_article_openai(example: Example, prompt_template: str, model: str) -> str:

formatted_prompt_template = prompt_template.format(article=example.input["article"])

response = await openai_client.chat.completions.create(

model=model,

messages=[

{"role": "assistant", "content": formatted_prompt_template},

],

)

assert response.choices

return response.choices[0].message.contentFrom this function, you can use functools.partial to derive your first task, which is a callable that takes in an example and returns an output. Test out your task by invoking it on the test example.

import textwrap

from functools import partial

template = """

Summarize the article in two to four sentences:

ARTICLE

=======

{article}

SUMMARY

=======

"""

gpt_4o = "gpt-4o-2024-05-13"

task = partial(summarize_article_openai, prompt_template=template, model=gpt_4o)

test_example = dataset.examples[0]

print(textwrap.fill(await task(test_example), width=100))Evaluators take the output of a task (in this case, a string) and grade it, often with the help of an LLM. In your case, you will create ROUGE score evaluators to compare the LLM-generated summaries with the human reference summaries you uploaded as part of your dataset. There are several variants of ROUGE, but we'll use ROUGE-1 for simplicity:

ROUGE-1 precision is the proportion of overlapping tokens (present in both reference and generated summaries) that are present in the generated summary (number of overlapping tokens / number of tokens in the generated summary)

ROUGE-1 recall is the proportion of overlapping tokens that are present in the reference summary (number of overlapping tokens / number of tokens in the reference summary)

ROUGE-1 F1 score is the harmonic mean of precision and recall, providing a single number that balances these two scores.

Higher ROUGE scores mean that a generated summary is more similar to the corresponding reference summary. Scores near 1 / 2 are considered excellent, and a model fine-tuned on this particular dataset achieved a rouge score of ~0.44.

Since we also care about conciseness, you'll also define an evaluator to count the number of tokens in each generated summary.

Note that you can use any third-party library you like while defining evaluators (in your case, rouge and tiktoken).

import tiktoken

from rouge import Rouge

# convenience functions

def _rouge_1(hypothesis: str, reference: str) -> Dict[str, Any]:

scores = Rouge().get_scores(hypothesis, reference)

return scores[0]["rouge-1"]

def _rouge_1_f1_score(hypothesis: str, reference: str) -> float:

return _rouge_1(hypothesis, reference)["f"]

def _rouge_1_precision(hypothesis: str, reference: str) -> float:

return _rouge_1(hypothesis, reference)["p"]

def _rouge_1_recall(hypothesis: str, reference: str) -> float:

return _rouge_1(hypothesis, reference)["r"]

# evaluators

def rouge_1_f1_score(output: str, expected: Dict[str, Any]) -> float:

return _rouge_1_f1_score(hypothesis=output, reference=expected["summary"])

def rouge_1_precision(output: str, expected: Dict[str, Any]) -> float:

return _rouge_1_precision(hypothesis=output, reference=expected["summary"])

def rouge_1_recall(output: str, expected: Dict[str, Any]) -> float:

return _rouge_1_recall(hypothesis=output, reference=expected["summary"])

def num_tokens(output: str) -> int:

encoding = tiktoken.encoding_for_model(gpt_4o)

return len(encoding.encode(output))

EVALUATORS = [rouge_1_f1_score, rouge_1_precision, rouge_1_recall, num_tokens]Run your first experiment and follow the link in the cell output to inspect the task outputs (generated summaries) and evaluations.

from phoenix.experiments import run_experiment

experiment_results = run_experiment(

dataset,

task,

experiment_name="initial-template",

experiment_description="first experiment using a simple prompt template",

experiment_metadata={"vendor": "openai", "model": gpt_4o},

evaluators=EVALUATORS,

)Our initial prompt template contained little guidance. It resulted in an ROUGE-1 F1-score just above 0.3 (this will vary from run to run). Inspecting the task outputs of the experiment, you'll also notice that the generated summaries are far more verbose than the reference summaries. This results in high ROUGE-1 recall and low ROUGE-1 precision. Let's see if we can improve our prompt to make our summaries more concise and to balance out those recall and precision scores while maintaining or improving F1. We'll start by explicitly instructing the LLM to produce a concise summary.

template = """

Summarize the article in two to four sentences. Be concise and include only the most important information.

ARTICLE

=======

{article}

SUMMARY

=======

"""

task = partial(summarize_article_openai, prompt_template=template, model=gpt_4o)

experiment_results = run_experiment(

dataset,

task,

experiment_name="concise-template",

experiment_description="explicitly instuct the llm to be concise",

experiment_metadata={"vendor": "openai", "model": gpt_4o},

evaluators=EVALUATORS,

)Inspecting the experiment results, you'll notice that the average num_tokens has indeed increased, but the generated summaries are still far more verbose than the reference summaries.

Instead of just instructing the LLM to produce concise summaries, let's use a few-shot prompt to show it examples of articles and good summaries. The cell below includes a few articles and reference summaries in an updated prompt template.

# examples to include (not included in the uploaded dataset)

train_df = (

hf_ds["train"]

.to_pandas()

.sample(n=5, random_state=42)

.head()

.rename(columns={"highlights": "summary"})

)

example_template = """

ARTICLE

=======

{article}

SUMMARY

=======

{summary}

"""

examples = "\n".join(

[

example_template.format(article=row["article"], summary=row["summary"])

for _, row in train_df.iterrows()

]

)

template = """

Summarize the article in two to four sentences. Be concise and include only the most important information, as in the examples below.

EXAMPLES

========

{examples}

Now summarize the following article.

ARTICLE

=======

{article}

SUMMARY

=======

"""

template = template.format(

examples=examples,

article="{article}",

)

print(template)Now run the experiment.

task = partial(summarize_article_openai, prompt_template=template, model=gpt_4o)

experiment_results = run_experiment(

dataset,

task,

experiment_name="few-shot-template",

experiment_description="include examples",

experiment_metadata={"vendor": "openai", "model": gpt_4o},

evaluators=EVALUATORS,

)By including examples in the prompt, you'll notice a steep decline in the number of tokens per summary while maintaining F1.

⚠️ This section requires an Anthropic API key.

Now that you have a prompt template that is performing reasonably well, you can compare the performance of other models on this particular task. Anthropic's Claude is notable for producing concise and to-the-point output.

First, enter your Anthropic API key if it is not already present.

import os

from getpass import getpass

if os.environ.get("ANTHROPIC_API_KEY") is None:

os.environ["ANTHROPIC_API_KEY"] = getpass("🔑 Enter your Anthropic API key: ")Next, define a new task that summarizes articles using the same prompt template as before. Then, run the experiment.

from anthropic import AsyncAnthropic

client = AsyncAnthropic()

async def summarize_article_anthropic(example: Example, prompt_template: str, model: str) -> str:

formatted_prompt_template = prompt_template.format(article=example.input["article"])

message = await client.messages.create(

model=model,

max_tokens=1024,

messages=[{"role": "user", "content": formatted_prompt_template}],

)

return message.content[0].text

claude_35_sonnet = "claude-3-5-sonnet-20240620"

task = partial(summarize_article_anthropic, prompt_template=template, model=claude_35_sonnet)

experiment_results = run_experiment(

dataset,

task,

experiment_name="anthropic-few-shot",

experiment_description="anthropic",

experiment_metadata={"vendor": "anthropic", "model": claude_35_sonnet},

evaluators=EVALUATORS,

)If your experiment does not produce more concise summaries, inspect the individual results. You may notice that some summaries from Claude 3.5 Sonnet start with a preamble such as:

Here is a concise 3-sentence summary of the article...See if you can tweak the prompt and re-run the experiment to exclude this preamble from Claude's output. Doing so should result in the most concise summaries yet.

Congrats! In this tutorial, you have:

Created a Phoenix dataset

Defined an experimental task and custom evaluators

Iteratively improved a prompt template to produce more concise summaries with balanced ROUGE-1 precision and recall

As next steps, you can continue to iterate on your prompt template. If you find that you are unable to improve your summaries with further prompt engineering, you can export your dataset from Phoenix and use the OpenAI fine-tuning API to train a bespoke model for your needs.

ReAct (Reasoning + Acting) is a prompting technique that enables LLMs to think step-by-step before taking action. Unlike traditional prompting, where a model directly provides an answer, ReAct prompts guide the model to reason through a problem first, then decide which tools or actions are necessary to reach the best solution.

ReAct is ideal for situations that require multi-step problem-solving with external tools. It also improves transparency by clearly showing the reasoning behind each tool choice, making it easier to understand and refine the model's actions.

In this tutorial, you will:

Learn how to craft prompts, tools, and evaluators in Phoenix

Refine your prompts to understand the power of ReAct prompting

Leverage Phoenix and LLM as a Judge techniques to evaluate accuracy at each step, gaining insight into the model's thought process.

Learn how to apply ReAct prompting in real-world scenarios for improved task execution and problem-solving.

⚠️ You'll need an OpenAI Key for this tutorial.

Let’s get started! 🚀

!pip install -qqq "arize-phoenix>=8.0.0" datasets openinference-instrumentation-openaiNext you need to connect to Phoenix. The code below will connect you to a Phoenix Cloud instance. You can also connect to a self-hosted Phoenix instance if you'd prefer.

import os

from getpass import getpass

os.environ["PHOENIX_COLLECTOR_ENDPOINT"] = "https://app.phoenix.arize.com"

if not os.environ.get("PHOENIX_CLIENT_HEADERS"):

os.environ["PHOENIX_CLIENT_HEADERS"] = "api_key=" + getpass("Enter your Phoenix API key: ")

if not os.environ.get("OPENAI_API_KEY"):

os.environ["OPENAI_API_KEY"] = getpass("Enter your OpenAI API key: ")import nest_asyncio

import pandas as pd

from openai import OpenAI

from openai.types.chat.completion_create_params import CompletionCreateParamsBase

from openinference.instrumentation.openai import OpenAIInstrumentor

import phoenix as px

from phoenix.client import Client as PhoenixClient

from phoenix.client.types import PromptVersion

from phoenix.evals import (

TOOL_CALLING_PROMPT_RAILS_MAP,

OpenAIModel,

llm_classify,

)

from phoenix.experiments import run_experiment

from phoenix.otel import register

nest_asyncio.apply()Instrument Application

tracer_provider = register(

project_name="ReAct-examples", endpoint="https://app.phoenix.arize.com/v1/traces"

)

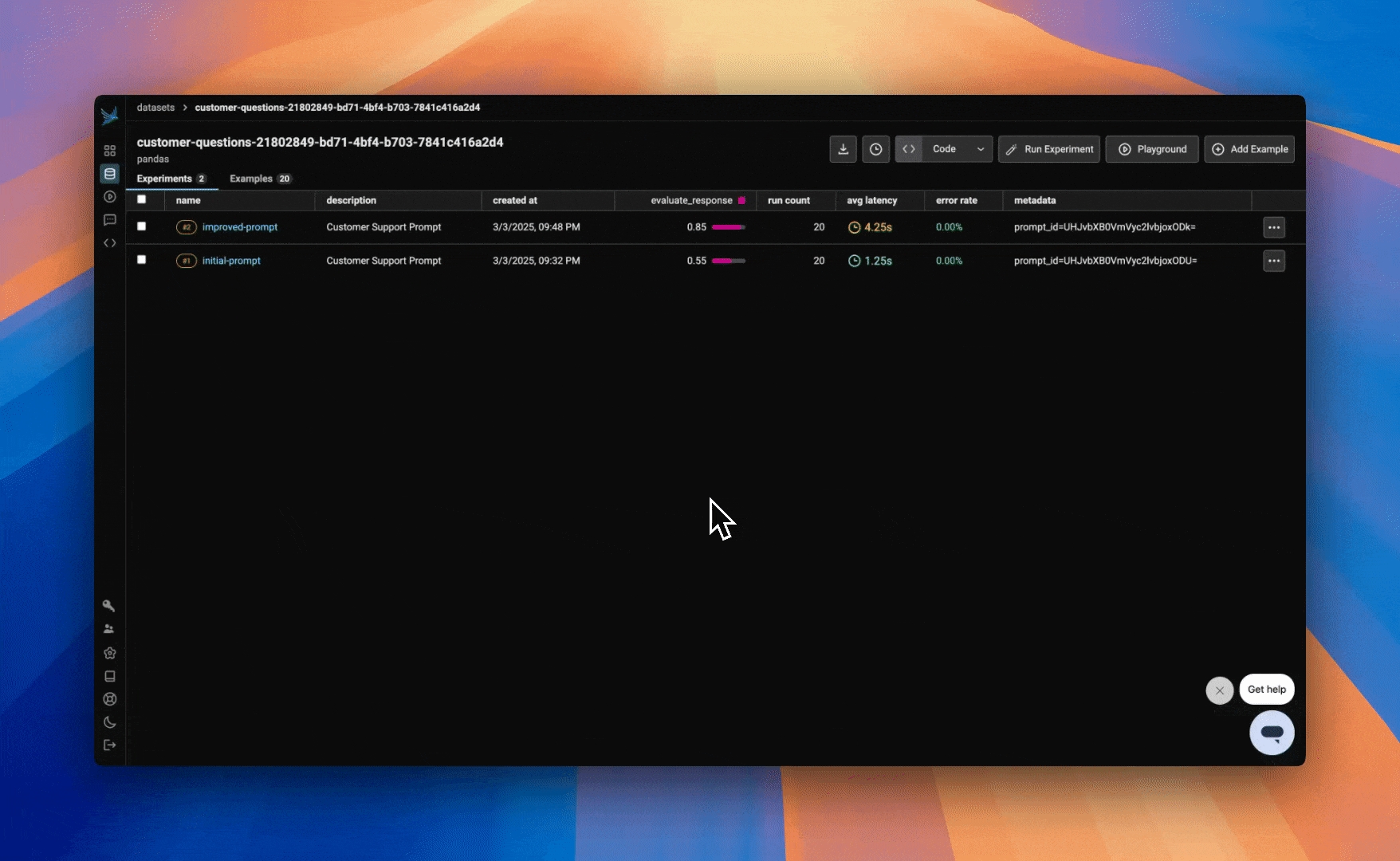

OpenAIInstrumentor().instrument(tracer_provider=tracer_provider)This dataset contains 20 customer service questions that a customer might ask a store's chatbot. As we dive into ReAct prompting, we'll use these questions to guide the LLM in selecting the appropriate tools.

Here, we also import the Phoenix Client, which enables us to create and modify prompts directly within the notebook while seamlessly syncing changes to the Phoenix UI.

After running this cell, the dataset should will be under the Datasets tab in Phoenix.

from datasets import load_dataset

ds = load_dataset("syeddula/customer_questions")["train"]

ds = ds.to_pandas()

ds.head()

import uuid

unique_id = uuid.uuid4()

# Upload the dataset to Phoenix

dataset = px.Client().upload_dataset(

dataframe=ds,

input_keys=["Questions"],

dataset_name=f"customer-questions-{unique_id}",

)Next, let's define the tools available for the LLM to use. We have five tools at our disposal, each serving a specific purpose: Product Comparison, Product Details, Discounts, Customer Support, and Track Package.

Depending on the customer's question, the LLM will determine the optimal sequence of tools to use.

tools = [

{

"type": "function",

"function": {

"name": "product_comparison",

"description": "Compare features of two products.",

"parameters": {

"type": "object",

"properties": {

"product_a_id": {

"type": "string",

"description": "The unique identifier of Product A.",

},

"product_b_id": {

"type": "string",

"description": "The unique identifier of Product B.",

},

},

"required": ["product_a_id", "product_b_id"],

},

},

},

{

"type": "function",

"function": {

"name": "product_details",

"description": "Get detailed features on one product.",

"parameters": {

"type": "object",

"properties": {

"product_id": {

"type": "string",

"description": "The unique identifier of the Product.",

}

},

"required": ["product_id"],

},

},

},

{

"type": "function",

"function": {

"name": "apply_discount_code",

"description": "Checks for discounts and promotions. Applies a discount code to an order.",

"parameters": {

"type": "object",

"properties": {

"order_id": {

"type": "integer",

"description": "The unique identifier of the order.",

},

"discount_code": {

"type": "string",

"description": "The discount code to apply.",

},

},

"required": ["order_id", "discount_code"],

},

},

},

{

"type": "function",

"function": {

"name": "customer_support",

"description": "Get contact information for customer support regarding an issue.",

"parameters": {

"type": "object",

"properties": {

"issue_type": {

"type": "string",

"description": "The type of issue (e.g., billing, technical support).",

}

},

"required": ["issue_type"],

},

},

},

{

"type": "function",

"function": {

"name": "track_package",

"description": "Track the status of a package based on the tracking number.",

"parameters": {

"type": "object",

"properties": {

"tracking_number": {

"type": "integer",

"description": "The tracking number of the package.",

}

},

"required": ["tracking_number"],

},

},

},

]Let's start by defining a simple prompt that instructs the system to utilize the available tools to answer the questions. The choice of which tools to use, and how to apply them, is left to the model's discretion based on the context of each customer query.

params = CompletionCreateParamsBase(

model="gpt-4",

temperature=0.5,

tools=tools,

tool_choice="auto",

messages=[

{

"role": "system",

"content": """You are a helpful customer service agent.

Your task is to determine the best tools to use to answer a customer's question.

Output the tools and pick 3 tools at maximum.

""",

},

{"role": "user", "content": "{{questions}}"},

],

)

prompt_identifier = "customer-support"

prompt = PhoenixClient().prompts.create(

name=prompt_identifier,

prompt_description="Customer Support",

version=PromptVersion.from_openai(params),

)At this stage, this initial prompt is now available in Phoenix under the Prompt tab. Any modifications made to the prompt moving forward will be tracked under Versions, allowing you to monitor and compare changes over time.

Prompts in Phoenix store more than just text—they also include key details such as the prompt template, model configurations, and response format, ensuring a structured and consistent approach to generating outputs.

Next, we will define the Tool Calling Prompt Template. In this step, we use LLM as a Judge to evaluate the output. LLM as a Judge is a technique where one LLM assesses the performance of another LLM.

This prompt is provided to the LLM-as-Judge model, which takes in both the user's query and the tools the system has selected. The model then uses reasoning to assess how effectively the chosen tools addressed the query, providing an explanation for its evaluation.

TOOL_CALLING_PROMPT_TEMPLATE = """

You are an evaluation assistant evaluating questions and tool calls to

determine whether the tool called would reasonably help answer the question.

The tool calls have been generated by a separate agent, chosen from the list of

tools provided below. Your job is to decide whether that agent's response was relevant to solving the customer's question.

[BEGIN DATA]

************

[Question]: {question}

************

[Tool Called]: {tool_calls}

[END DATA]

Your response must be one of the following:

1. **"correct"** – The chosen tool(s) would sufficiently answer the question.

2. **"mostly_correct"** – The tool(s) are helpful, but a better selection could have been made (at most 1 missing or unnecessary tool).

3. **"incorrect"** – The tool(s) would not meaningfully help answer the question.

Explain why you made your choice.

[Tool Definitions]:

product_comparison: Compare features of two products.

product_details: Get detailed features on one product.

apply_discount_code: Applies a discount code to an order.

customer_support: Get contact information for customer support regarding an issue.

track_package: Track the status of a package based on the tracking number.

"""In the following cells, we will define a task for the experiment.

Then, in the evaluate_response function, we define our LLM as a Judge evaluator. Finally, we run our experiment.

def prompt_task(input):

client = OpenAI()

resp = client.chat.completions.create(

**prompt.format(variables={"questions": input["Questions"]})

)

return resp

def evaluate_response(input, output):

response_classifications = llm_classify(

dataframe=pd.DataFrame([{"question": input["Questions"], "tool_calls": output}]),

template=TOOL_CALLING_PROMPT_TEMPLATE,

model=OpenAIModel(model="gpt-3.5-turbo"),

rails=list(TOOL_CALLING_PROMPT_RAILS_MAP.values()),

provide_explanation=True,

)

score = response_classifications.apply(lambda x: 0 if x["label"] == "incorrect" else 1, axis=1)

return scoreinitial_experiment = run_experiment(

dataset,

task=prompt_task,

evaluators=[evaluate_response],

experiment_description="Customer Support Prompt",

experiment_name="initial-prompt",

experiment_metadata={"prompt": "prompt_id=" + prompt.id},

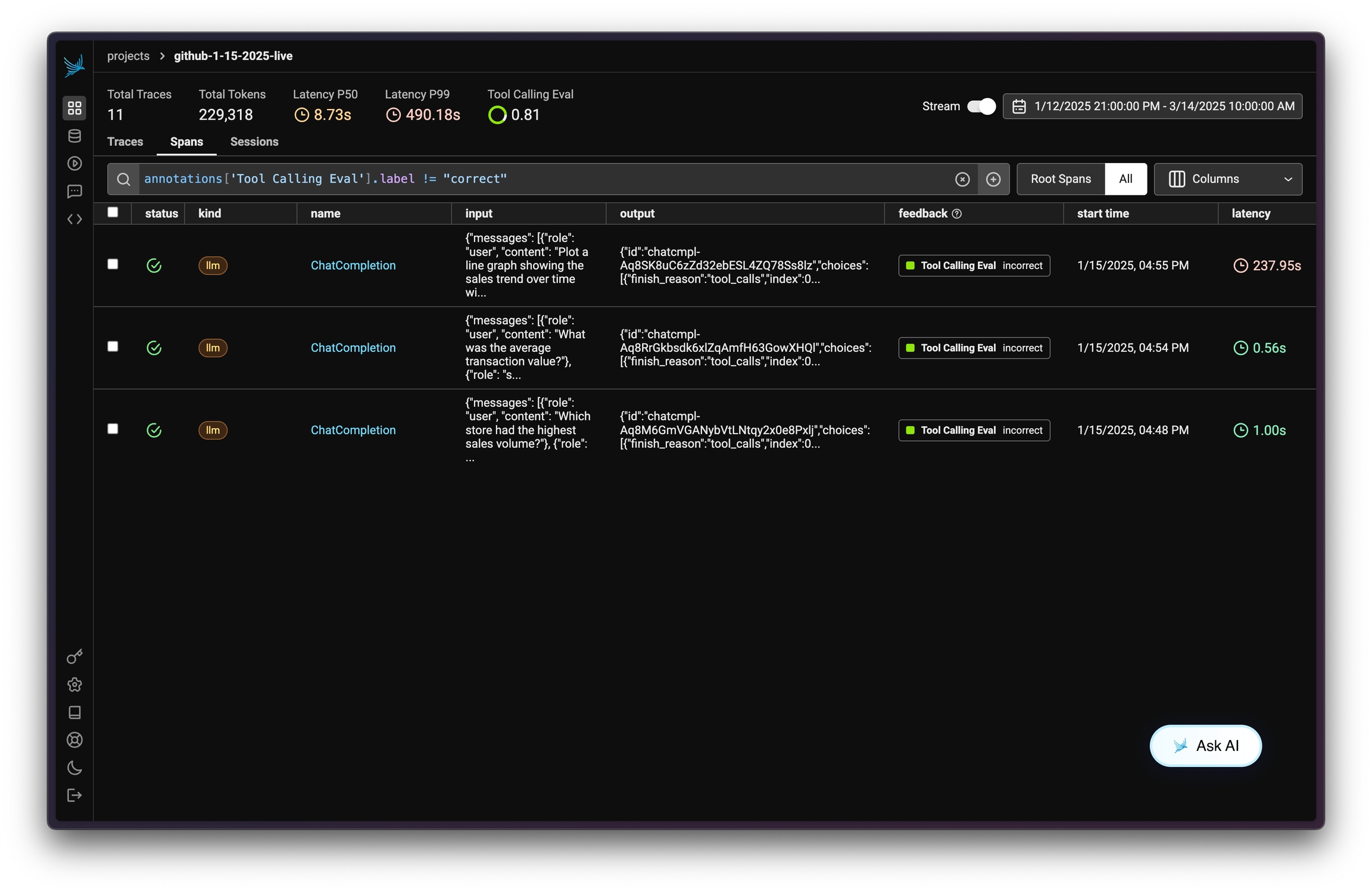

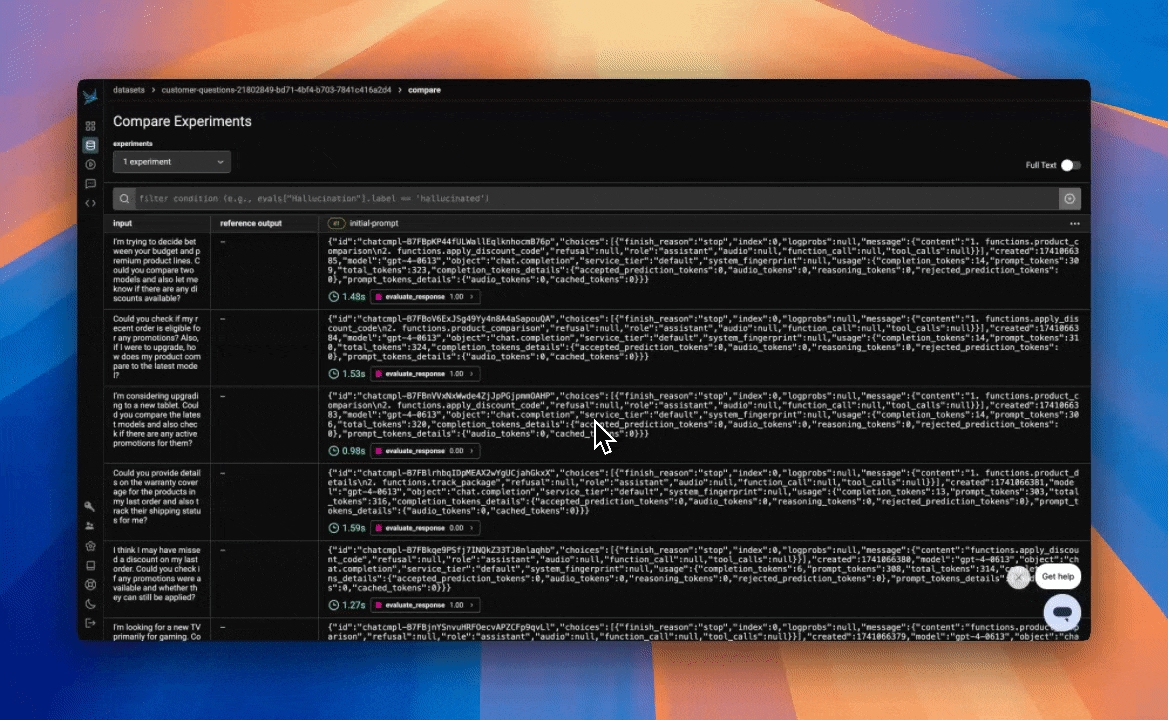

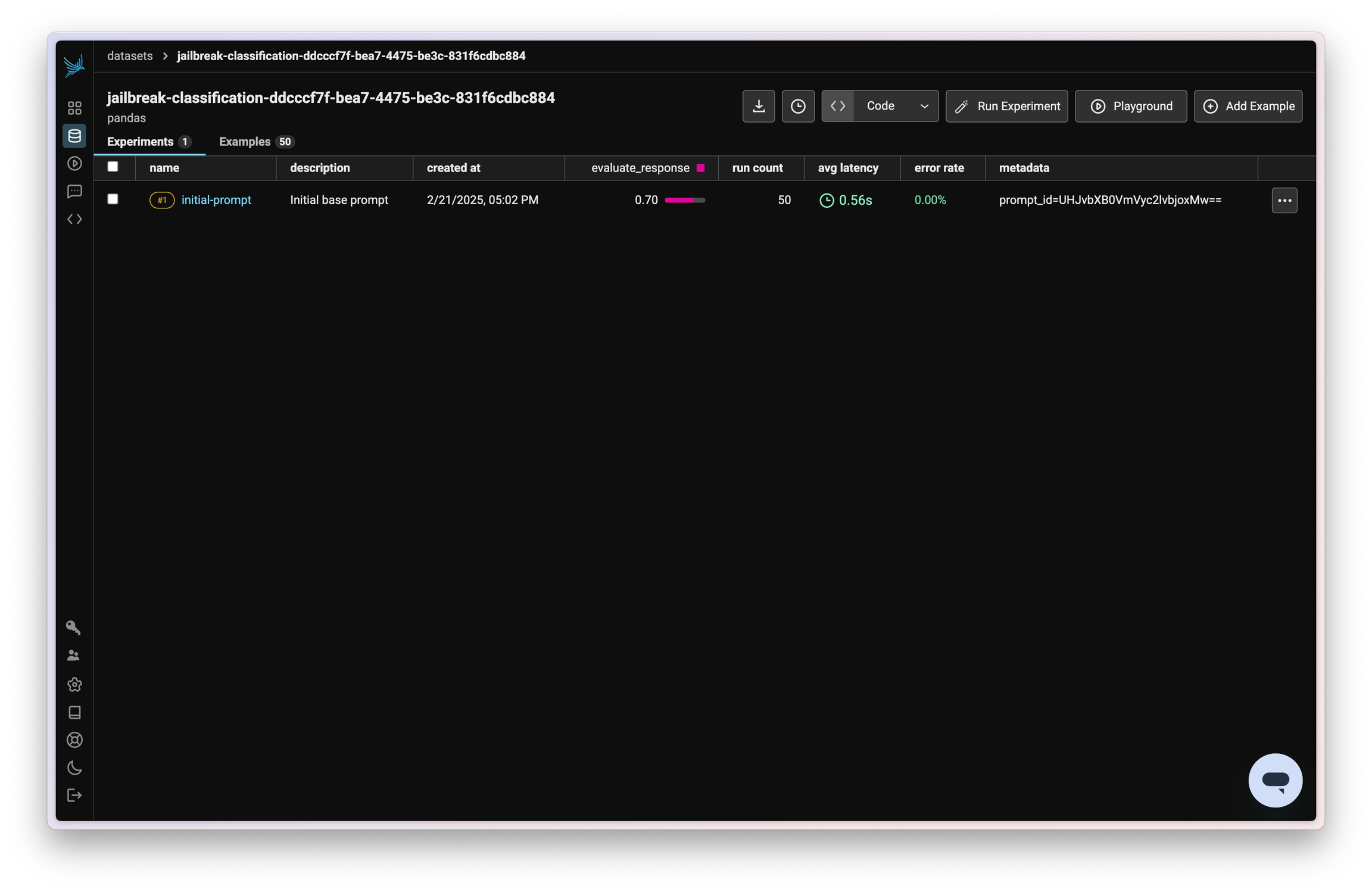

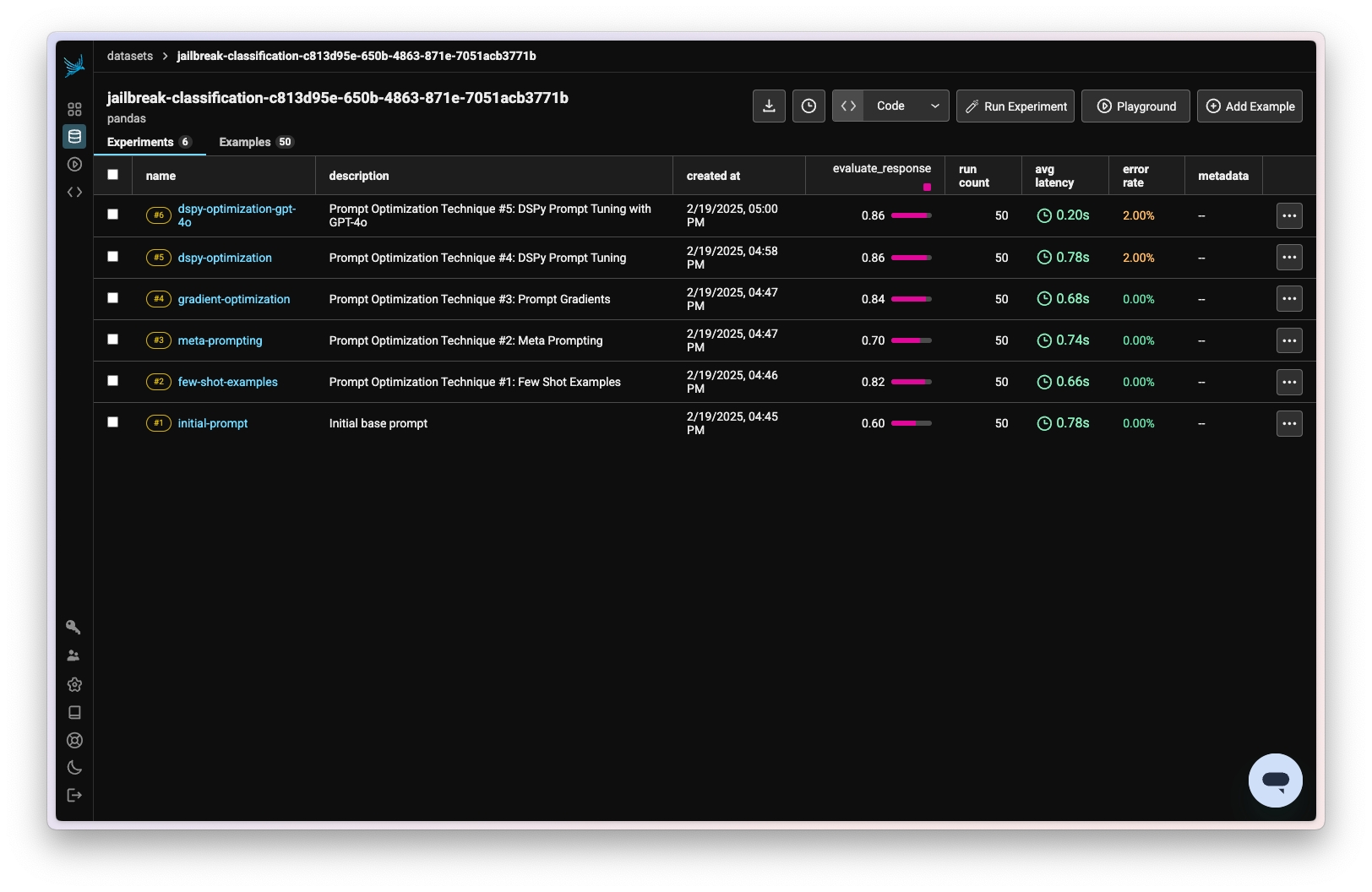

)After running our experiment and evaluation, we can dive deeper into the results. By clicking into the experiment, we can explore the tools that the LLM selected for the specific input. Next, if we click on the trace for the evaluation, we can see the reasoning behind the score assigned by LLM as a Judge for the output.

Next, we iterate on our system prompt using ReAct Prompting techniques. We emphasize that the model should think through the problem step-by-step, break it down logically, and then determine which tools to use and in what order. The model is instructed to output the relevant tools along with their corresponding parameters.

This approach differs from our initial prompt because it encourages reasoning before action, guiding the model to select the best tools and parameters based on the specific context of the query, rather than simply using predefined actions.

params = CompletionCreateParamsBase(

model="gpt-4",

temperature=0.5,

tools=tools,

tool_choice="required",

messages=[

{

"role": "system",

"content": """

You are a helpful customer service agent. Carefully analyze the customer’s question to fully understand their request.

Step 1: Think step-by-step. Identify the key pieces of information needed to answer the question. Consider any dependencies between these pieces of information.

Step 2: Decide which tools to use. Choose up to 3 tools that will best retrieve the required information. If multiple tools are needed, determine the correct order to call them.

Step 3: Output the chosen tools and any relevant parameters.

""",

},

{"role": "user", "content": "{{questions}}"},

],

)

prompt_identifier = "customer-support"

prompt = PhoenixClient().prompts.create(

name=prompt_identifier,

prompt_description="Customer Support ReAct Prompt",

version=PromptVersion.from_openai(params),

)In the Prompts tab, you will see the updated prompt. As you iterate, you can build a version history.

Just like above, we define our task, construct the evaluator, and run the experiment.

def prompt_task(input):

client = OpenAI()

resp = client.chat.completions.create(

**prompt.format(variables={"questions": input["Questions"]})

)

return resp

def evaluate_response(input, output):

response_classifications = llm_classify(

dataframe=pd.DataFrame([{"question": input["Questions"], "tool_calls": output}]),

template=TOOL_CALLING_PROMPT_TEMPLATE,

model=OpenAIModel(model="gpt-3.5-turbo"),

rails=list(TOOL_CALLING_PROMPT_RAILS_MAP.values()),

provide_explanation=True,

)

score = response_classifications.apply(lambda x: 0 if x["label"] == "incorrect" else 1, axis=1)

return scoreinitial_experiment = run_experiment(

dataset,

task=prompt_task,

evaluators=[evaluate_response],

experiment_description="Customer Support Prompt",

experiment_name="improved-prompt",

experiment_metadata={"prompt": "prompt_id=" + prompt.id},

)With our updated ReAct prompt, we can observe that the LLM as a Judge Evaluator rated more outputs as correct. By clicking into the traces, we can gain insights into the reasons behind this improvement. By prompting our LLM to be more thoughtful and purposeful, we can see the reasoning and acting aspects of ReAct.

You can explore the evaluators outputs to better understand the improvements in detail.

Keep in mind that results may vary due to randomness and the model's non-deterministic behavior.

To refine and test these prompts against other datasets, experiment with alternative techniques like Chain of Thought (CoT) prompting to assess how they complement or contrast with ReAct in your specific use cases. With Phoenix, you can seamlessly integrate this process into your workflow using both the TypeScript and Python Clients.

From here, you can check out more examples on Phoenix, and if you haven't already, please give us a star on GitHub! ⭐️

LLMs excel at text generation, but their reasoning abilities depend on how we prompt them. Chain of Thought (CoT) prompting enhances logical reasoning by guiding the model to think step by step, improving accuracy in tasks like math, logic, and multi-step problem solving.

In this tutorial, you will:

Examine how different prompting techniques influence reasoning by evaluating model performance on a dataset.

Refine prompting strategies, progressing from basic approaches to structured reasoning.

Utilize Phoenix to assess accuracy at each stage and explore the model's thought process.

Learn how to apply CoT prompting effectively in real-world tasks.

⚠️ You'll need an OpenAI Key for this tutorial.

Let’s dive in! 🚀

!pip install -qqqq "arize-phoenix>=8.0.0" datasets openinference-instrumentation-openaiNext you need to connect to Phoenix. The code below will connect you to a Phoenix Cloud instance. You can also connect to a self-hosted Phoenix instance if you'd prefer.

import os

from getpass import getpass

os.environ["PHOENIX_COLLECTOR_ENDPOINT"] = "https://app.phoenix.arize.com"

if not os.environ.get("PHOENIX_CLIENT_HEADERS"):

os.environ["PHOENIX_CLIENT_HEADERS"] = "api_key=" + getpass("Enter your Phoenix API key: ")

if not os.environ.get("OPENAI_API_KEY"):

os.environ["OPENAI_API_KEY"] = getpass("Enter your OpenAI API key: ")This dataset includes math word problems, step-by-step explanations, and their corresponding answers. As we refine our prompt, we'll test it against the dataset to measure and track improvements in performance.

Here, we also import the Phoenix Client, which enables us to create and modify prompts directly within the notebook while seamlessly syncing changes to the Phoenix UI.

import uuid

from datasets import load_dataset

import phoenix as px

from phoenix.client import Client as PhoenixClient

ds = load_dataset("syeddula/math_word_problems")["train"]

ds = ds.to_pandas()

ds.head()

unique_id = uuid.uuid4()

# Upload the dataset to Phoenix

dataset = px.Client().upload_dataset(

dataframe=ds,

input_keys=["Word Problem"],

output_keys=["Answer"],

dataset_name=f"wordproblems-{unique_id}",

)Zero-shot prompting is the simplest way to interact with a language model—it involves asking a question without providing any examples or reasoning steps. The model generates an answer based solely on its pre-trained knowledge.

This serves as our baseline for comparison. By evaluating its performance on our dataset, we can see how well the model solves math word problems without explicit guidance. In later sections, we’ll introduce structured reasoning techniques like Chain of Thought (CoT) to measure improvements in accuracy and answers.

from openai import OpenAI

from openai.types.chat.completion_create_params import CompletionCreateParamsBase

from phoenix.client.types import PromptVersion

params = CompletionCreateParamsBase(

model="gpt-3.5-turbo",

temperature=0,

messages=[

{

"role": "system",

"content": "You are an evaluator who outputs the answer to a math word problem. Only respond with the integer answer. Be sure not include words, explanations, symbols, labels, or units and round all decimals answers.",

},

{"role": "user", "content": "{{Problem}}"},

],

)

prompt_identifier = "wordproblems"

prompt = PhoenixClient().prompts.create(

name=prompt_identifier,

prompt_description="A prompt for computing answers to word problems.",

version=PromptVersion.from_openai(params),

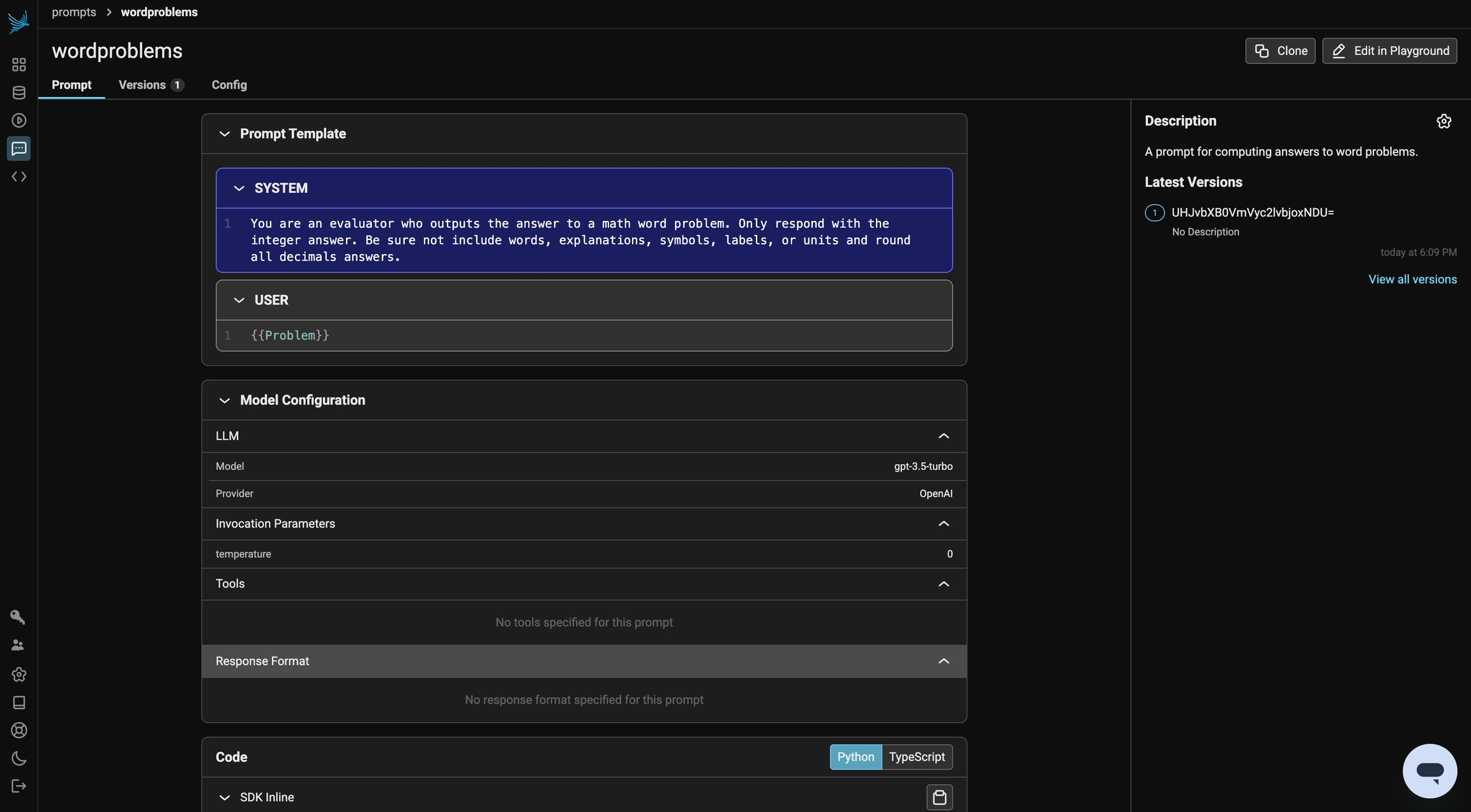

)At this stage, this initial prompt is now available in Phoenix under the Prompt tab. Any modifications made to the prompt moving forward will be tracked under Versions, allowing you to monitor and compare changes over time.

Prompts in Phoenix store more than just text—they also include key details such as the prompt template, model configurations, and response format, ensuring a structured and consistent approach to generating outputs.

Next, we will define a task and evaluator for the experiment. Then, we run our experiment.

Because our dataset has ground truth labels, we can use a simple function to extract the answer and check if the calculated answer matches the expected output.

import nest_asyncio

from phoenix.experiments import run_experiment

nest_asyncio.apply()

def zero_shot_prompt(input):

client = OpenAI()

resp = client.chat.completions.create(

**prompt.format(variables={"Problem": input["Word Problem"]})

)

return resp.choices[0].message.content.strip()

def evaluate_response(output, expected):

if not output.isdigit():

return False

return int(output) == int(expected["Answer"])

initial_experiment = run_experiment(

dataset,

task=zero_shot_prompt,

evaluators=[evaluate_response],

experiment_description="Zero-Shot Prompt",

experiment_name="zero-shot-prompt",

experiment_metadata={"prompt": "prompt_id=" + prompt.id},

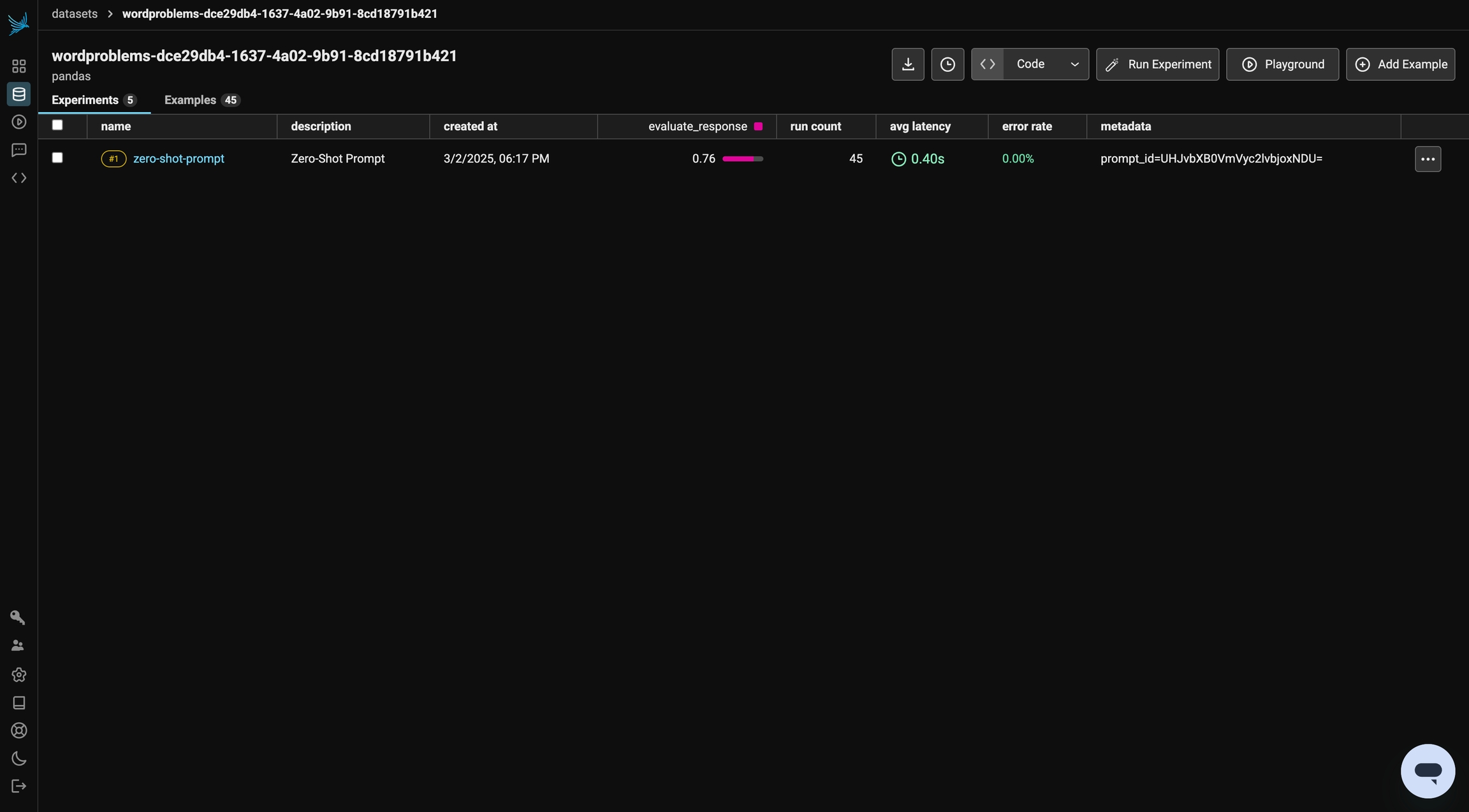

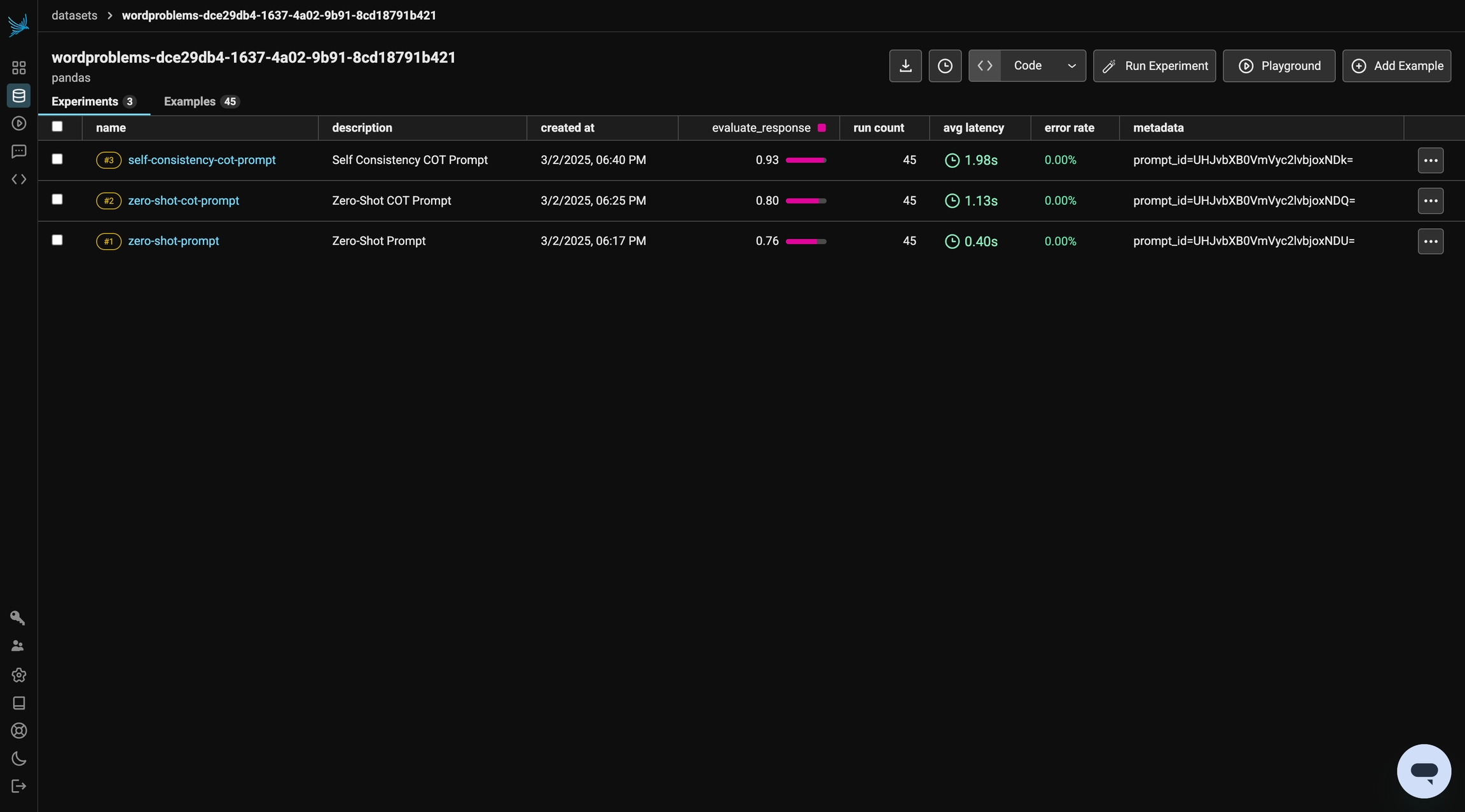

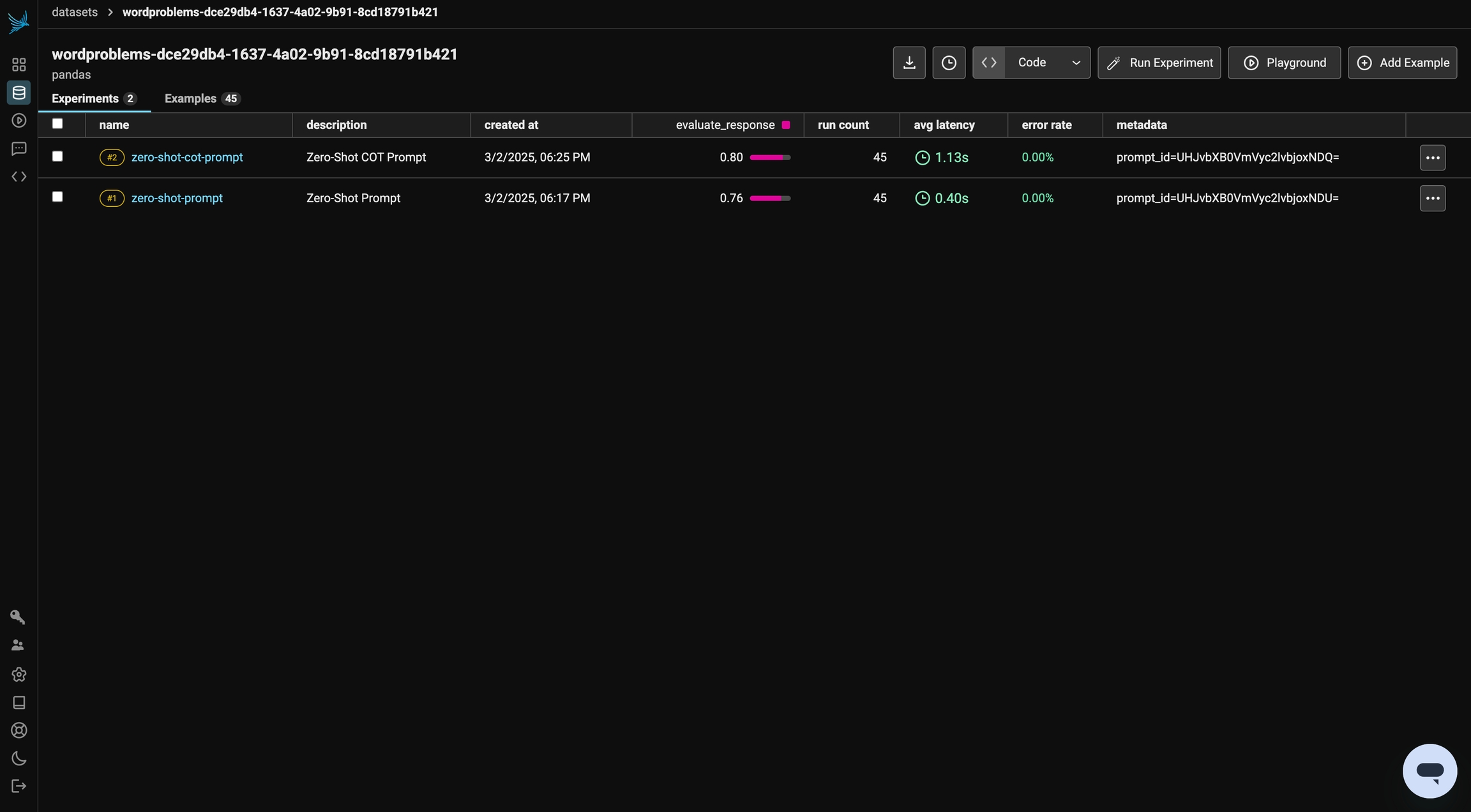

)We can review the results of the experiment in Phoenix. We achieved ~75% accuracy in this run. In the following sections, we will iterate on this prompt and see how our evaluation changes!

Note: Throughout this tutorial, you will encounter various evaluator outcomes. At times, you may notice a decline in performance compared to the initial experiment. However, this is not necessarily a flaw. Variations in results can arise due to factors such as the choice of LLM, inherent model behaviors, and randomness.

Zero-shot prompting provides a direct answer, but it often struggles with complex reasoning. Zero-Shot Chain of Thought (CoT) prompting improves this by explicitly instructing the model to think step by step before arriving at a final answer.

By adding a simple instruction like “Let’s think through this step by step,” we encourage the model to break down the problem logically. This structured reasoning can lead to more accurate answers, especially for multi-step math problems.

In this section, we'll compare Zero-Shot CoT against our baseline to evaluate its impact on performance. First, let's create the prompt.

zero_shot_COT_template = """

You are an evaluator who outputs the answer to a math word problem.

You must always think through the problem logically before providing an answer.

First, show some of your reasoning.

Then output the integer answer ONLY on a final new line. In this final answer, be sure not include words, commas, labels, or units and round all decimals answers.

"""

params = CompletionCreateParamsBase(

model="gpt-3.5-turbo",

temperature=0,

messages=[

{"role": "system", "content": zero_shot_COT_template},

{"role": "user", "content": "{{Problem}}"},

],

)

zero_shot_COT = PhoenixClient().prompts.create(

name=prompt_identifier,

prompt_description="Zero Shot COT prompt",

version=PromptVersion.from_openai(params),

)This updated prompt is now lives in Phoenix as a new prompt version.

Next, we run our task and evaluation by extracting the answer from the output of our LLM.

import re

def zero_shot_COT_prompt(input):

client = OpenAI()

resp = client.chat.completions.create(

**zero_shot_COT.format(variables={"Problem": input["Word Problem"]})

)

response_text = resp.choices[0].message.content.strip()

lines = response_text.split("\n")

final_answer = lines[-1].strip()

final_answer = re.sub(r"^\*\*(\d+)\*\*$", r"\1", final_answer)

return {"full_response": response_text, "final_answer": final_answer}

def evaluate_response(output, expected):

final_answer = output["final_answer"]

if not final_answer.isdigit():

return False

return int(final_answer) == int(expected["Answer"])

initial_experiment = run_experiment(

dataset,

task=zero_shot_COT_prompt,

evaluators=[evaluate_response],

experiment_description="Zero-Shot COT Prompt",

experiment_name="zero-shot-cot-prompt",

experiment_metadata={"prompt": "prompt_id=" + zero_shot_COT.id},

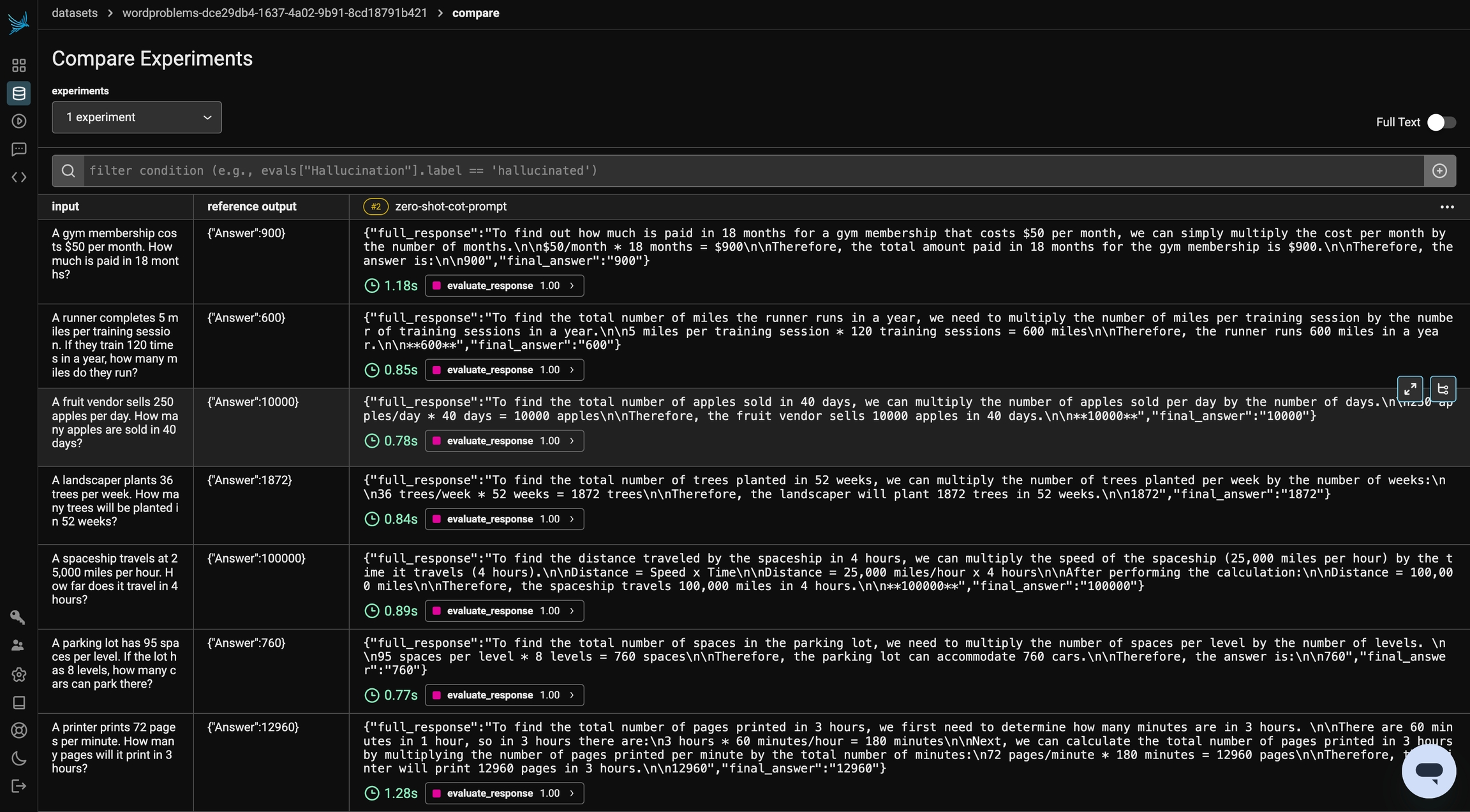

)By clicking into the experiment in Phoenix, you can take a look at the steps the model took the reach the answer. By telling the model to think through the problem and output reasoning, we see a performance improvement.

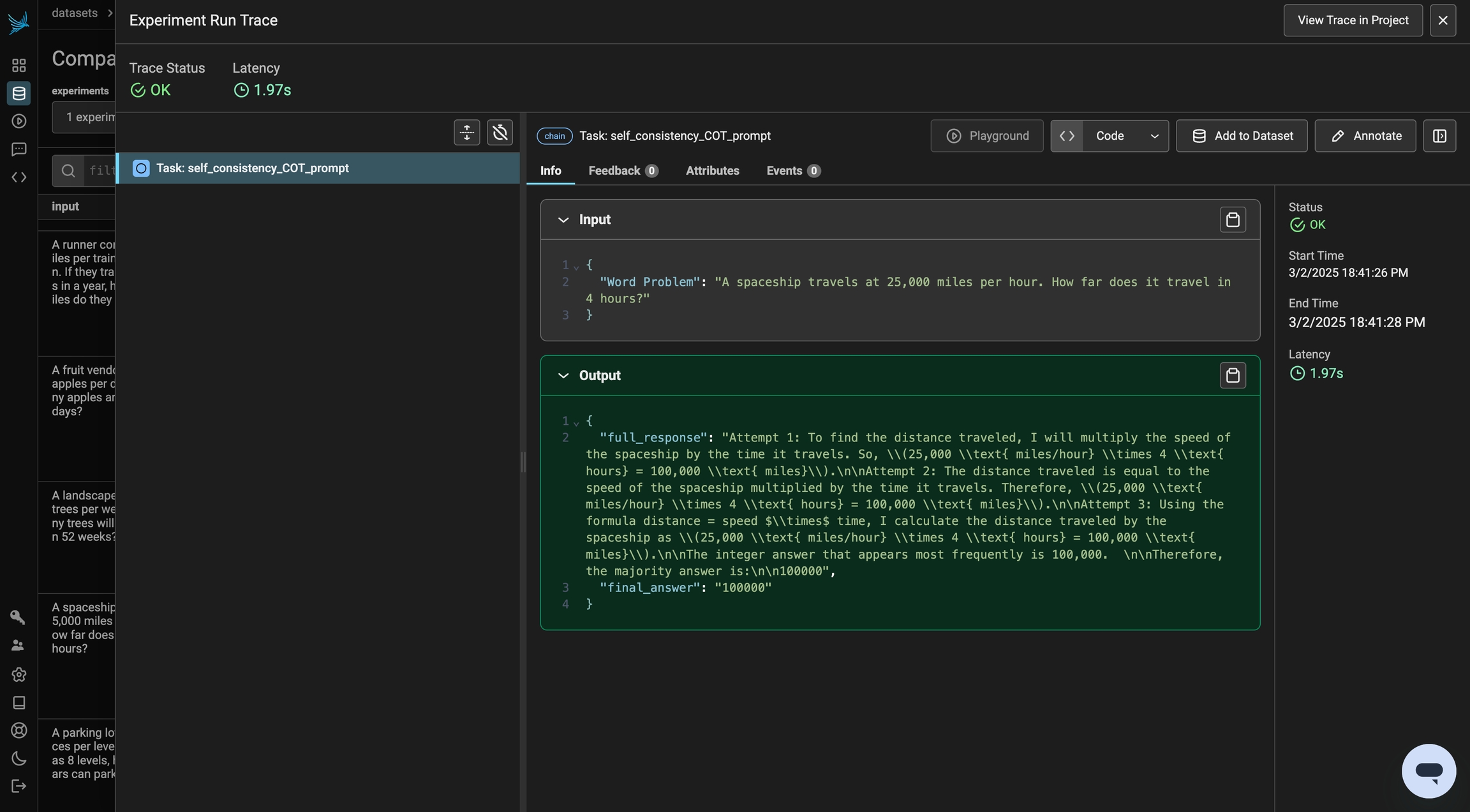

Even with Chain of Thought prompting, a single response may not always be reliable. Self-Consistency CoT enhances accuracy by generating multiple reasoning paths and selecting the most common answer. Instead of relying on one response, we sample multiple outputs and aggregate them, reducing errors caused by randomness or flawed reasoning steps.

This method improves robustness, especially for complex problems where initial reasoning steps might vary. In this section, we'll compare Self-Consistency CoT to our previous prompts to see how using multiple responses impacts overall performance.

Let's repeat the same process as above with a new prompt and evaluate the outcome.

consistency_COT_template = """

You are an evaluator who outputs the answer to a math word problem.

Follow these steps:

1. Solve the problem **multiple times independently**, thinking through the solution carefully each time.

2. Show some of your reasoning for each independent attempt.

3. Identify the integer answer that appears most frequently across your attempts.

4. On a **new line**, output only this majority answer as a plain integer with **no words, commas, labels, units, or special characters**.

"""

params = CompletionCreateParamsBase(

model="gpt-3.5-turbo",

temperature=0,

messages=[

{"role": "system", "content": consistency_COT_template},

{"role": "user", "content": "{{Problem}}"},

],

)

self_consistency_COT = PhoenixClient().prompts.create(

name=prompt_identifier,

prompt_description="self consistency COT prompt",

version=PromptVersion.from_openai(params),

)def self_consistency_COT_prompt(input):

client = OpenAI()

resp = client.chat.completions.create(

**self_consistency_COT.format(variables={"Problem": input["Word Problem"]})

)

response_text = resp.choices[0].message.content.strip()

lines = response_text.split("\n")

final_answer = lines[-1].strip()

final_answer = re.sub(r"^\*\*(\d+)\*\*$", r"\1", final_answer)

return {"full_response": response_text, "final_answer": final_answer}

def evaluate_response(output, expected):

final_answer = output["final_answer"]

if not final_answer.isdigit():

return False

return int(final_answer) == int(expected["Answer"])

initial_experiment = run_experiment(

dataset,

task=self_consistency_COT_prompt,

evaluators=[evaluate_response],

experiment_description="Self Consistency COT Prompt",

experiment_name="self-consistency-cot-prompt",

experiment_metadata={"prompt": "prompt_id=" + self_consistency_COT.id},

)We've observed a significant improvement in performance! Since the prompt instructs the model to compute the answer multiple times independently, you may notice that the experiment takes slightly longer to run. You can click into the experiment explore to view the independent computations the model performed for each problem.

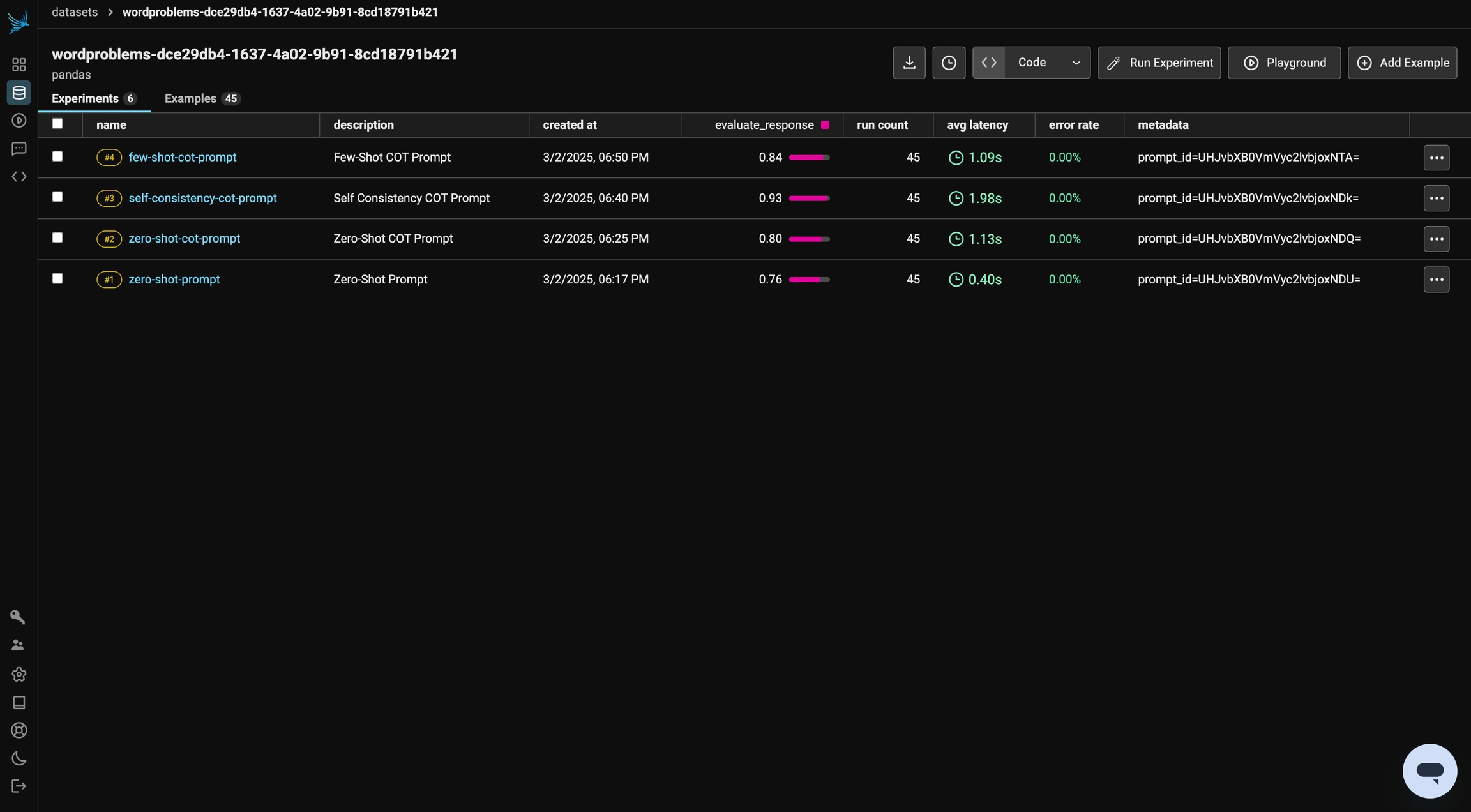

Few-shot CoT prompting enhances reasoning by providing worked examples before asking the model to solve a new problem. By demonstrating step-by-step solutions, the model learns to apply similar logical reasoning to unseen questions.

This method leverages in-context learning, allowing the model to generalize patterns from the examples.

In this final section, we’ll compare Few-Shot CoT against our previous prompts.

First, let's construct our prompt by sampling examples from a test dataset.

ds = load_dataset("syeddula/math_word_problems")["test"]

few_shot_examples = ds.to_pandas().sample(5)

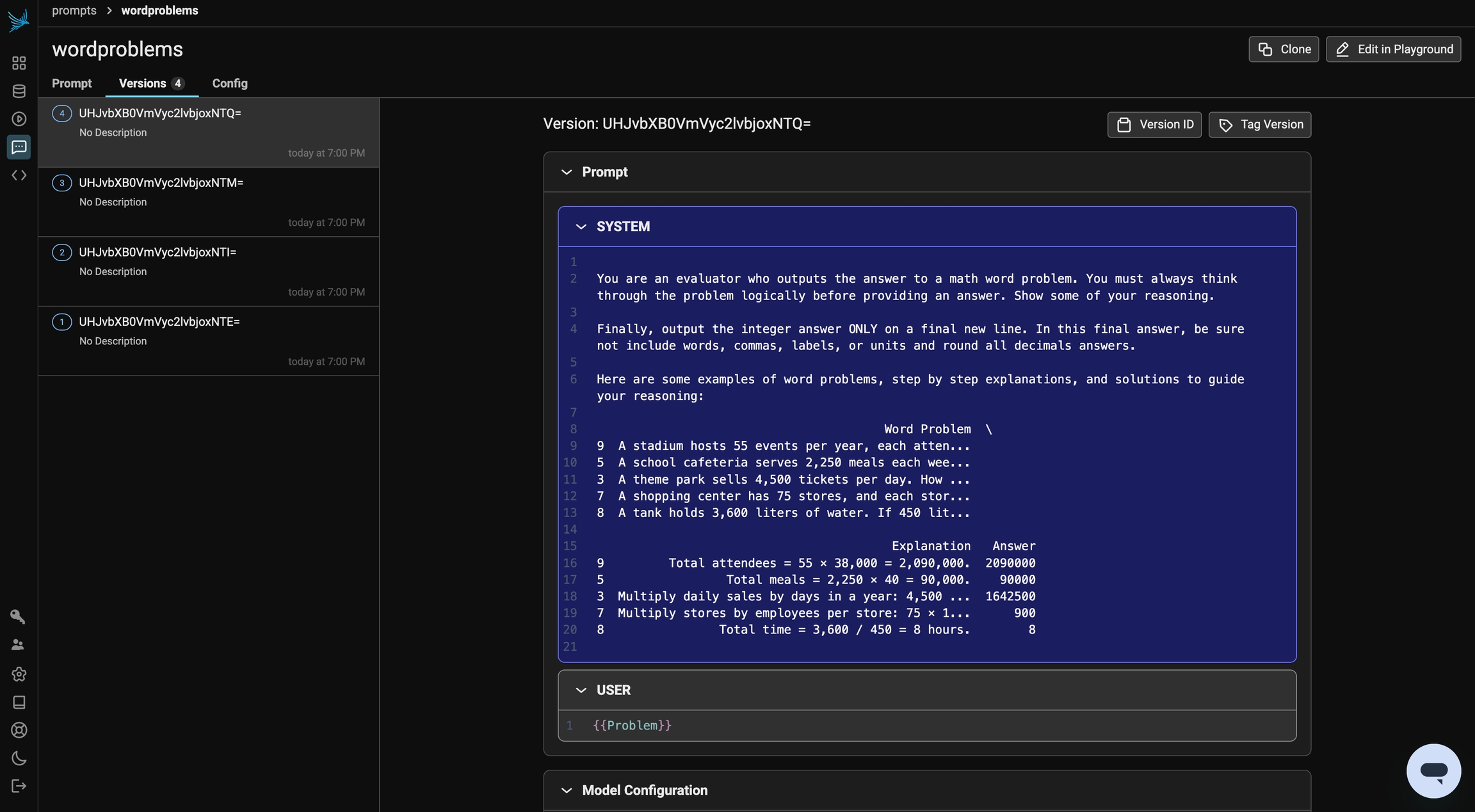

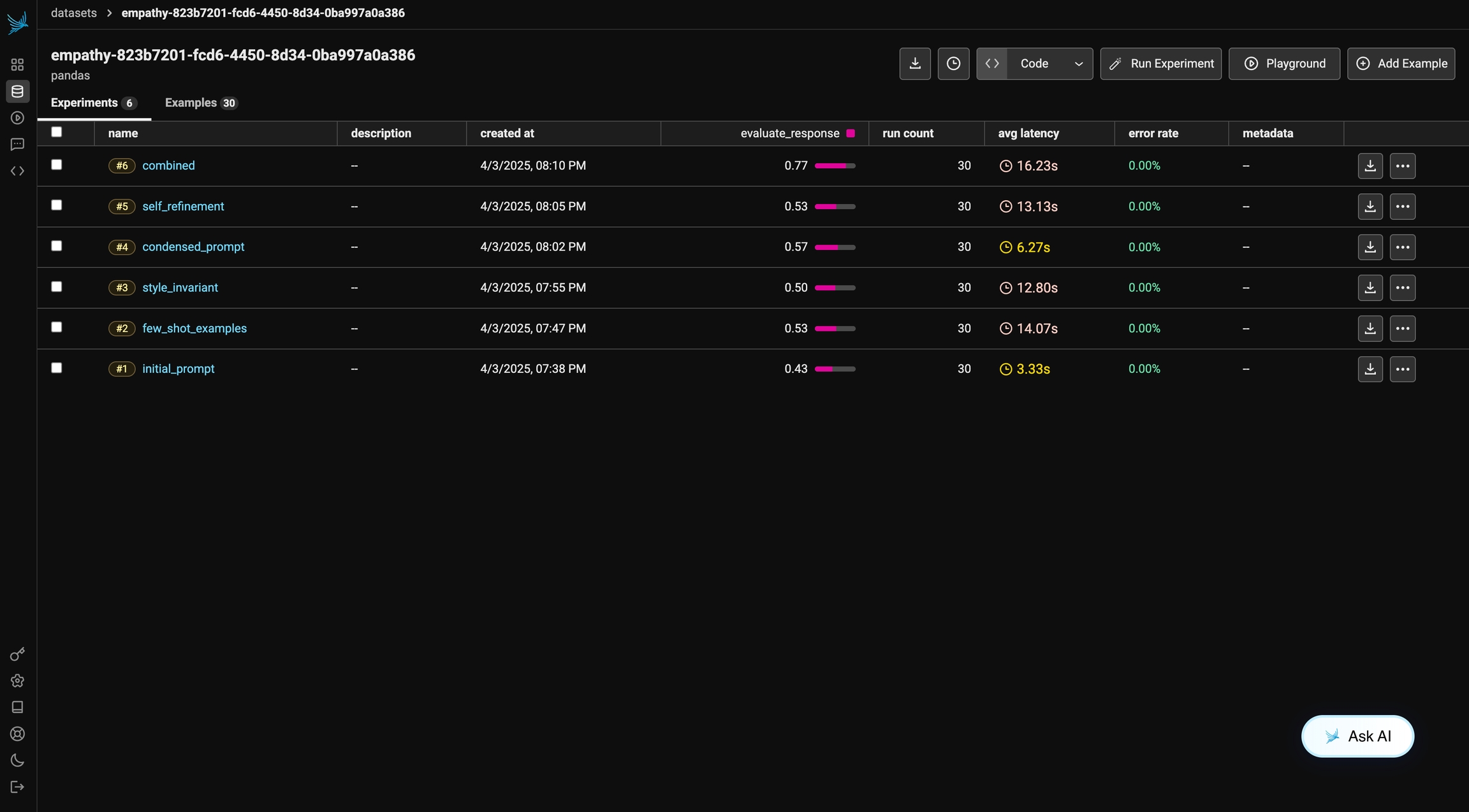

few_shot_examplesWe now will construct our final prompt, run the experiment, and view the results. Under the Prompts tab in Phoenix, you can track the version history of your prompt and see what random examples were chosen.

few_shot_COT_template = """

You are an evaluator who outputs the answer to a math word problem. You must always think through the problem logically before providing an answer. Show some of your reasoning.

Finally, output the integer answer ONLY on a final new line. In this final answer, be sure not include words, commas, labels, or units and round all decimals answers.

Here are some examples of word problems, step by step explanations, and solutions to guide your reasoning:

{examples}

"""

params = CompletionCreateParamsBase(

model="gpt-3.5-turbo",

temperature=0,

messages=[

{"role": "system", "content": few_shot_COT_template.format(examples=few_shot_examples)},

{"role": "user", "content": "{{Problem}}"},

],

)

few_shot_COT = PhoenixClient().prompts.create(

name=prompt_identifier,

prompt_description="Few Shot COT prompt",

version=PromptVersion.from_openai(params),

)def few_shot_COT_prompt(input):

client = OpenAI()

resp = client.chat.completions.create(

**few_shot_COT.format(variables={"Problem": input["Word Problem"]})

)

response_text = resp.choices[0].message.content.strip()

lines = response_text.split("\n")

final_answer = lines[-1].strip()

final_answer = re.sub(r"^\*\*(\d+)\*\*$", r"\1", final_answer)

return {"full_response": response_text, "final_answer": final_answer}

def evaluate_response(output, expected):

final_answer = output["final_answer"]

if not final_answer.isdigit():

return False

return int(final_answer) == int(expected["Answer"])

import nest_asyncio

from phoenix.experiments import run_experiment

nest_asyncio.apply()

initial_experiment = run_experiment(

dataset,

task=few_shot_COT_prompt,

evaluators=[evaluate_response],

experiment_description="Few-Shot COT Prompt",

experiment_name="few-shot-cot-prompt",

experiment_metadata={"prompt": "prompt_id=" + few_shot_COT.id},

)After running all of your experiments, you can compare the performance of different prompting techniques. Keep in mind that results may vary due to randomness and the model's non-deterministic behavior.

You can review your prompt version history in the Prompts tab and explore the Playground to iterate further and run additional experiments.

To refine and test these prompts against other datasets, experiment with Chain of Thought (CoT) prompting to see its relevance to your specific use cases. With Phoenix, you can seamlessly integrate this process into your workflow using the TypeScript and Python Clients.

From here, you can check out more examples on Phoenix, and if you haven't already, please give us a star on GitHub! ⭐️

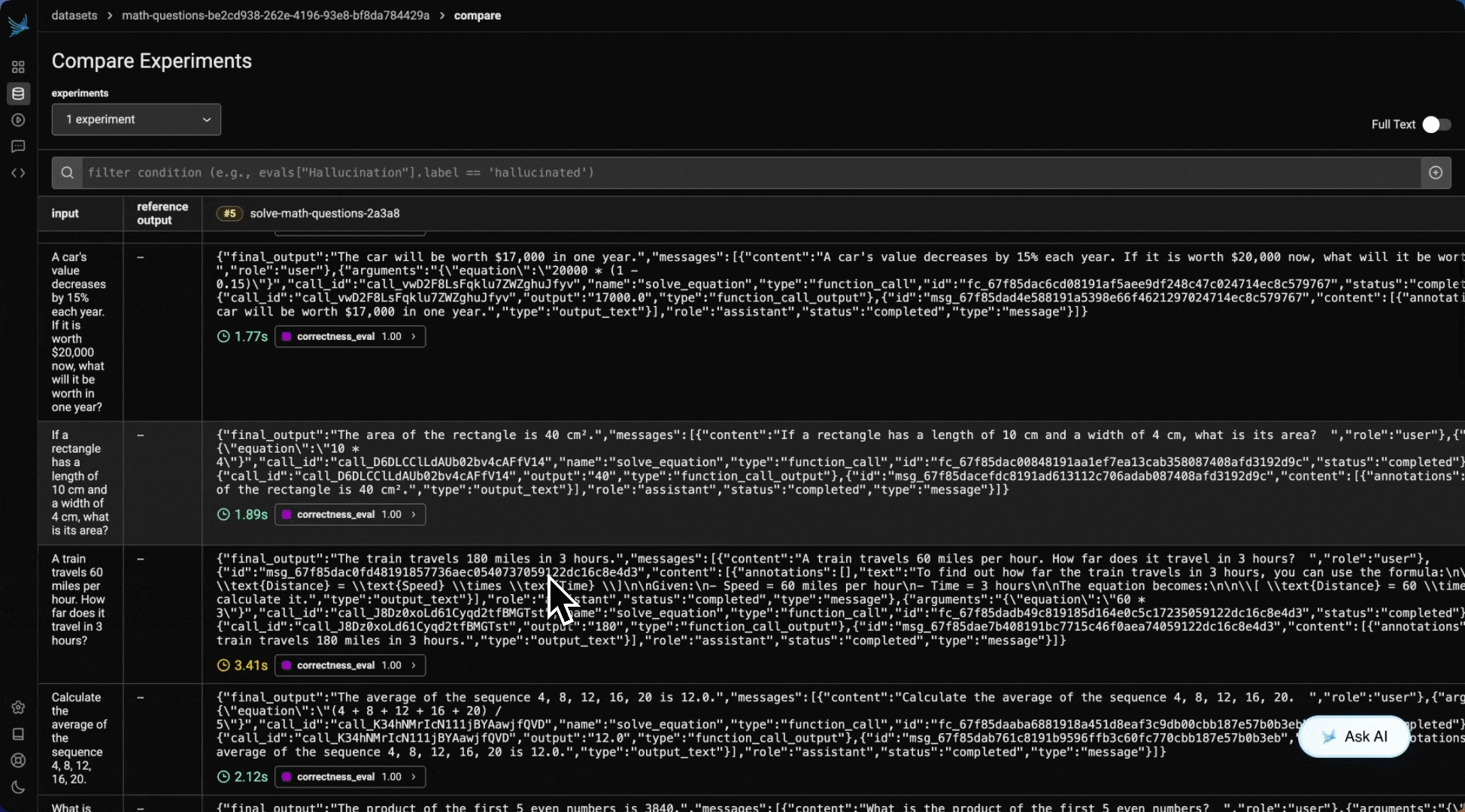

This guide shows you how to create and evaluate agents with Phoenix to improve performance. We'll go through the following steps:

Create an agent using the OpenAI agents SDK

Trace the agent activity

Create a dataset to benchmark performance

Run an experiment to evaluate agent performance using LLM as a judge

Learn how to evaluate traces in production

!pip install -q "arize-phoenix>=8.0.0" openinference-instrumentation-openai-agents openinference-instrumentation-openai --upgrade

!pip install -q openai nest_asyncio openai-agentsNext you need to connect to Phoenix. The code below will connect you to a Phoenix Cloud instance. You can also connect to a self-hosted Phoenix instance if you'd prefer.

import os

import nest_asyncio

nest_asyncio.apply()

from getpass import getpass

os.environ["PHOENIX_COLLECTOR_ENDPOINT"] = "https://app.phoenix.arize.com"

if not os.environ.get("PHOENIX_CLIENT_HEADERS"):

os.environ["PHOENIX_CLIENT_HEADERS"] = "api_key=" + getpass("Enter your Phoenix API key: ")

if not os.environ.get("OPENAI_API_KEY"):

os.environ["OPENAI_API_KEY"] = getpass("Enter your OpenAI API key: ")from phoenix.otel import register

# Setup Tracing

tracer_provider = register(

project_name="openai-agents-cookbook",

endpoint="https://app.phoenix.arize.com/v1/traces",

auto_instrument=True,

)Here we've setup a basic agent that can solve math problems. We have a function tool that can solve math equations, and an agent that can use this tool.

We'll use the Runner class to run the agent and get the final output.

from agents import Runner, function_tool

@function_tool

def solve_equation(equation: str) -> str:

"""Use python to evaluate the math equation, instead of thinking about it yourself.

Args:

equation: string which to pass into eval() in python

"""

return str(eval(equation))from agents import Agent

agent = Agent(

name="Math Solver",

instructions="You solve math problems by evaluating them with python and returning the result",

tools=[solve_equation],

)result = await Runner.run(agent, "what is 15 + 28?")

# Run Result object

print(result)

# Get the final output

print(result.final_output)

# Get the entire list of messages recorded to generate the final output

print(result.to_input_list())Now we have a basic agent, let's evaluate whether the agent responded correctly!

Agents can go awry for a variety of reasons.

Tool call accuracy - did our agent choose the right tool with the right arguments?

Tool call results - did the tool respond with the right results?

Agent goal accuracy - did our agent accomplish the stated goal and get to the right outcome?

We'll setup a simple evaluator that will check if the agent's response is correct, you can read about different types of agent evals here.

Let's setup our evaluation by defining our task function, our evaluator, and our dataset.

import asyncio

# This is our task function. It takes a question and returns the final output and the messages recorded to generate the final output.

async def solve_math_problem(dataset_row: dict):

result = await Runner.run(agent, dataset_row.get("question"))

return {

"final_output": result.final_output,

"messages": result.to_input_list(),

}

dataset_row = {"question": "What is 15 + 28?"}

result = asyncio.run(solve_math_problem(dataset_row))

print(result)Next, we create our evaluator.

import pandas as pd

from phoenix.evals import OpenAIModel, llm_classify

def correctness_eval(input, output):

# Template for evaluating math problem solutions

MATH_EVAL_TEMPLATE = """

You are evaluating whether a math problem was solved correctly.

[BEGIN DATA]

************

[Question]: {question}

************

[Response]: {response}

[END DATA]

Assess if the answer to the math problem is correct. First work out the correct answer yourself,

then compare with the provided response. Consider that there may be different ways to express the same answer

(e.g., "43" vs "The answer is 43" or "5.0" vs "5").

Your answer must be a single word, either "correct" or "incorrect"

"""

# Run the evaluation

rails = ["correct", "incorrect"]

eval_df = llm_classify(

data=pd.DataFrame([{"question": input["question"], "response": output["final_output"]}]),

template=MATH_EVAL_TEMPLATE,

model=OpenAIModel(model="gpt-4.1"),

rails=rails,

provide_explanation=True,

)

label = eval_df["label"][0]

score = 1 if label == "correct" else 0

return scoreUsing the template below, we're going to generate a dataframe of 25 questions we can use to test our math problem solving agent.

MATH_GEN_TEMPLATE = """