Learn how to use the phoenix.otel library

Learn how you can use basic OpenTelemetry to instrument your application.

Learn how to use Phoenix's decorators to easily instrument specific methods or code blocks in your application.

Set up tracing for your TypeScript application.

Learn about Projects in Phoenix, and how to use them.

Understand Sessions and how they can be used to group user conversations.

Learn how to load a file of traces into Phoenix

Learn how to export trace data from Phoenix

Tracing can be augmented and customized by adding Metadata. Metadata includes your own custom attributes, user ids, session ids, prompt templates, and more.

Add Attributes, Metadata, Users

Learn how to add custom metadata and attributes to your traces

Instrument Prompt Templates and Prompt Variables

Learn how to define custom prompt templates and variables in your tracing.

Tracing can be paused temporarily or disabled permanently.

If there is a section of your code for which tracing is not desired, e.g. the document chunking process, it can be put inside the suppress_tracing context manager as shown below.

Calling .uninstrument() on the auto-instrumentors will remove tracing permanently. Below are examples for LangChain, LlamaIndex, and OpenAI, respectively.

Learn how to block PII from logging to Phoenix

Learn how to selectively block or turn off tracing

Learn how to send only certain spans to Phoenix

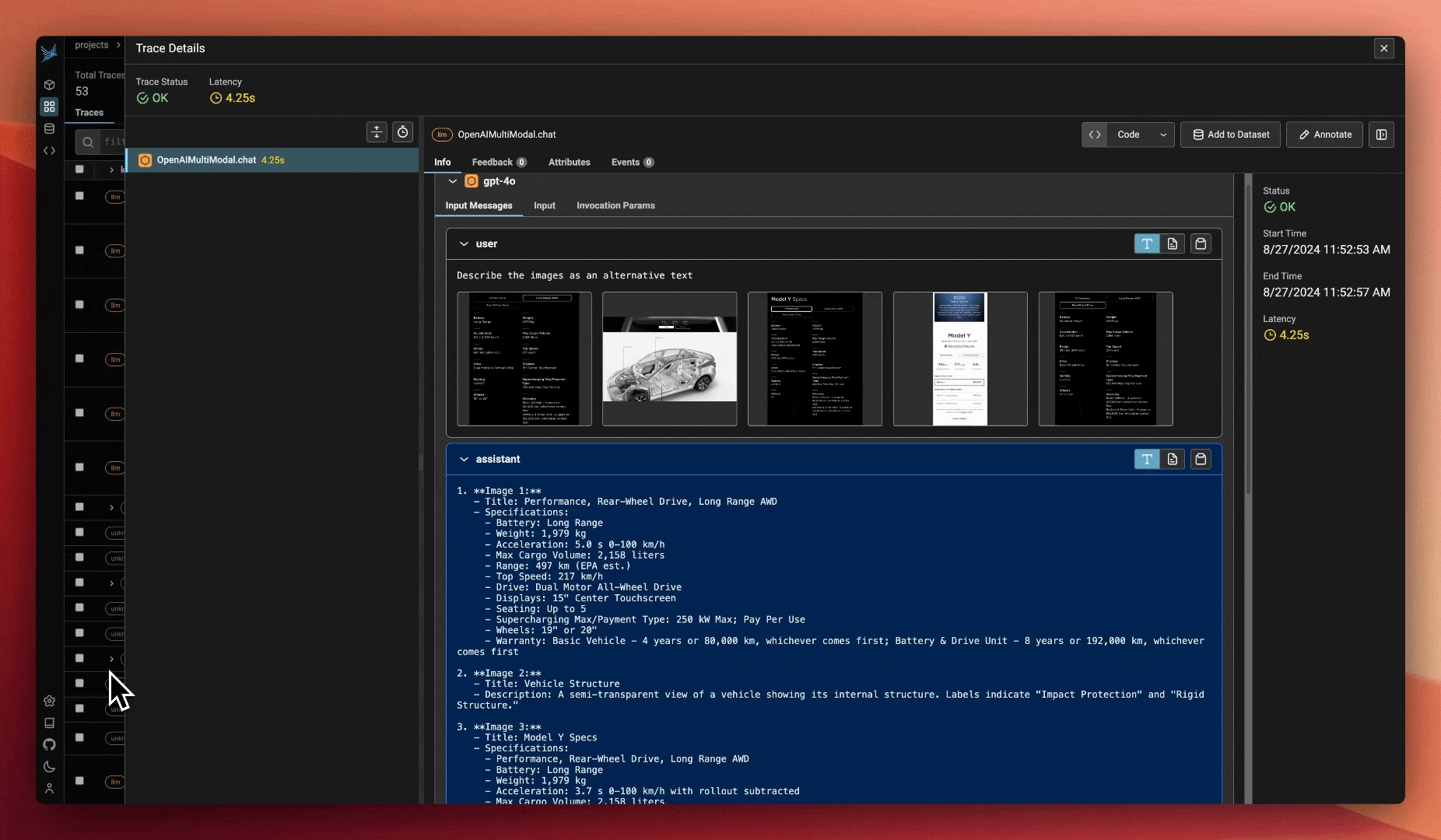

Learn how to trace images

Not sure where to start? Try a quickstart:

AI Observability and Evaluation

Running Phoenix for the first time? Select a quickstart below.

Check out a comprehensive list of example notebooks for LLM Traces, Evals, RAG Analysis, and more.

Add instrumentation for popular packages and libraries such as OpenAI, LangGraph, Vercel AI SDK and more.

Join the Phoenix Slack community to ask questions, share findings, provide feedback, and connect with other developers.

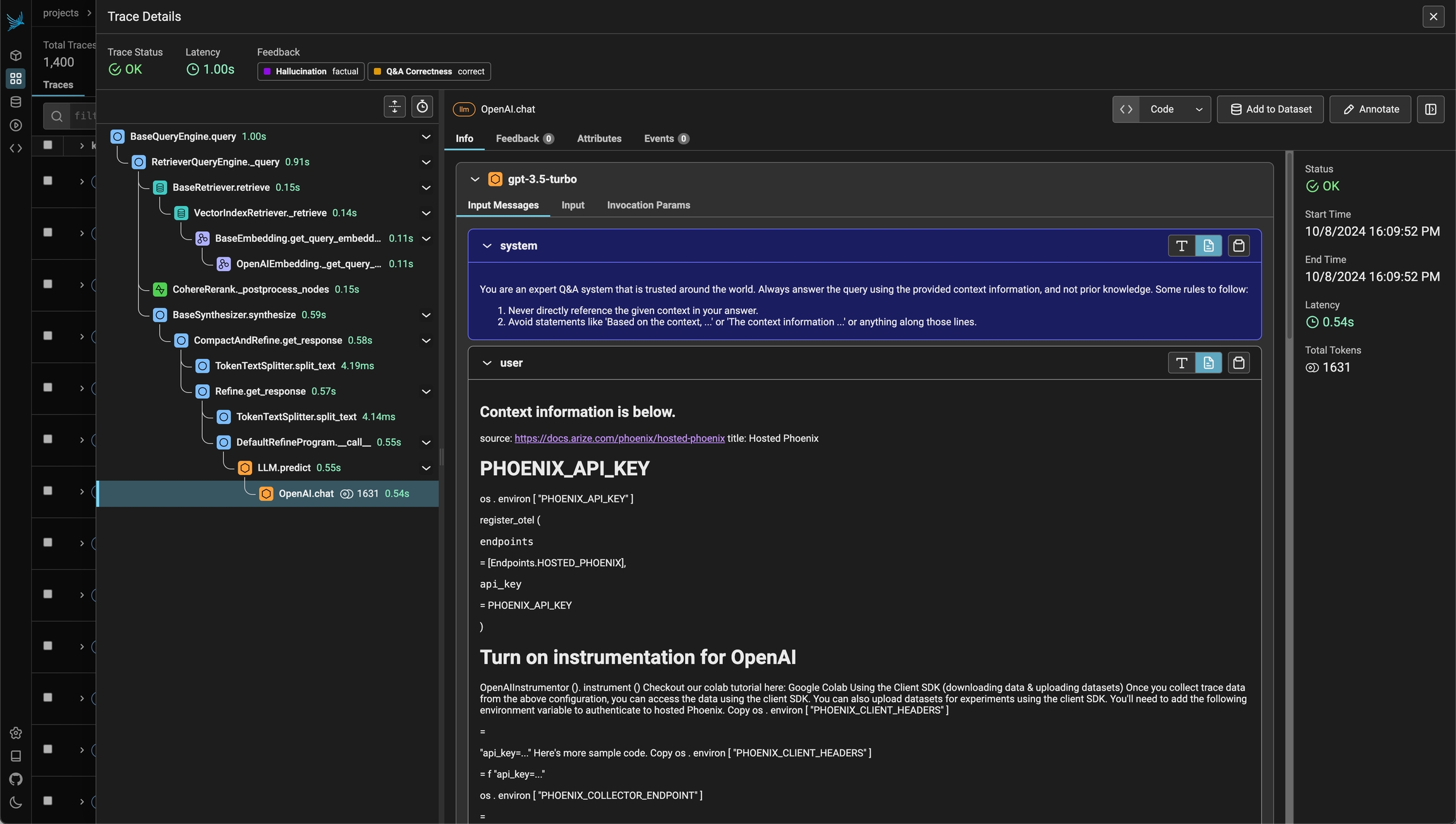

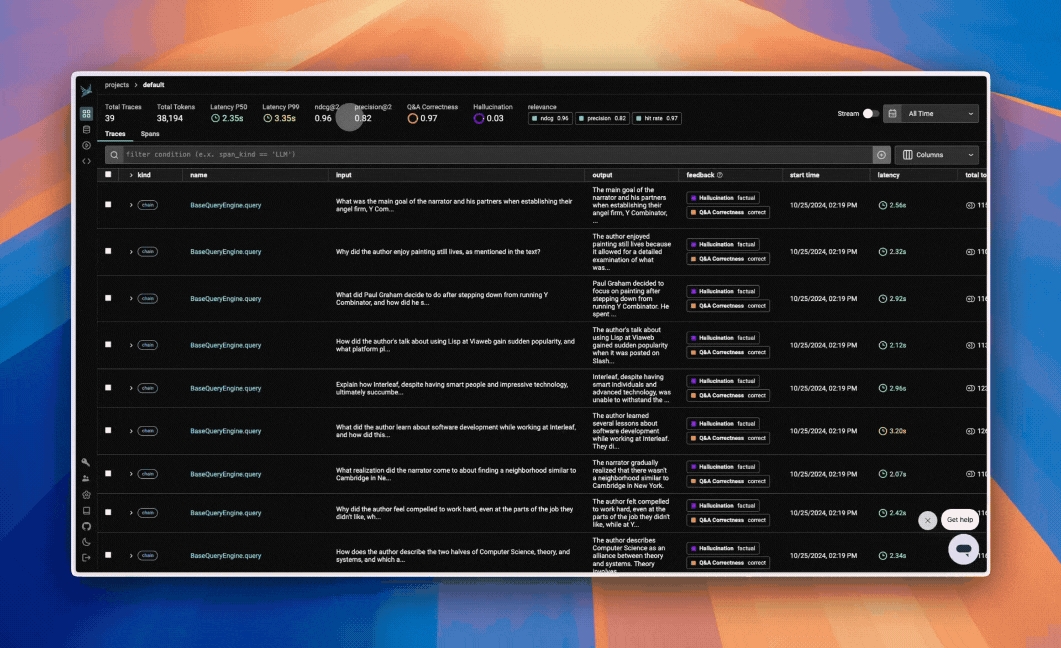

Tracing is a critical part of AI Observability and should be used both in production and development

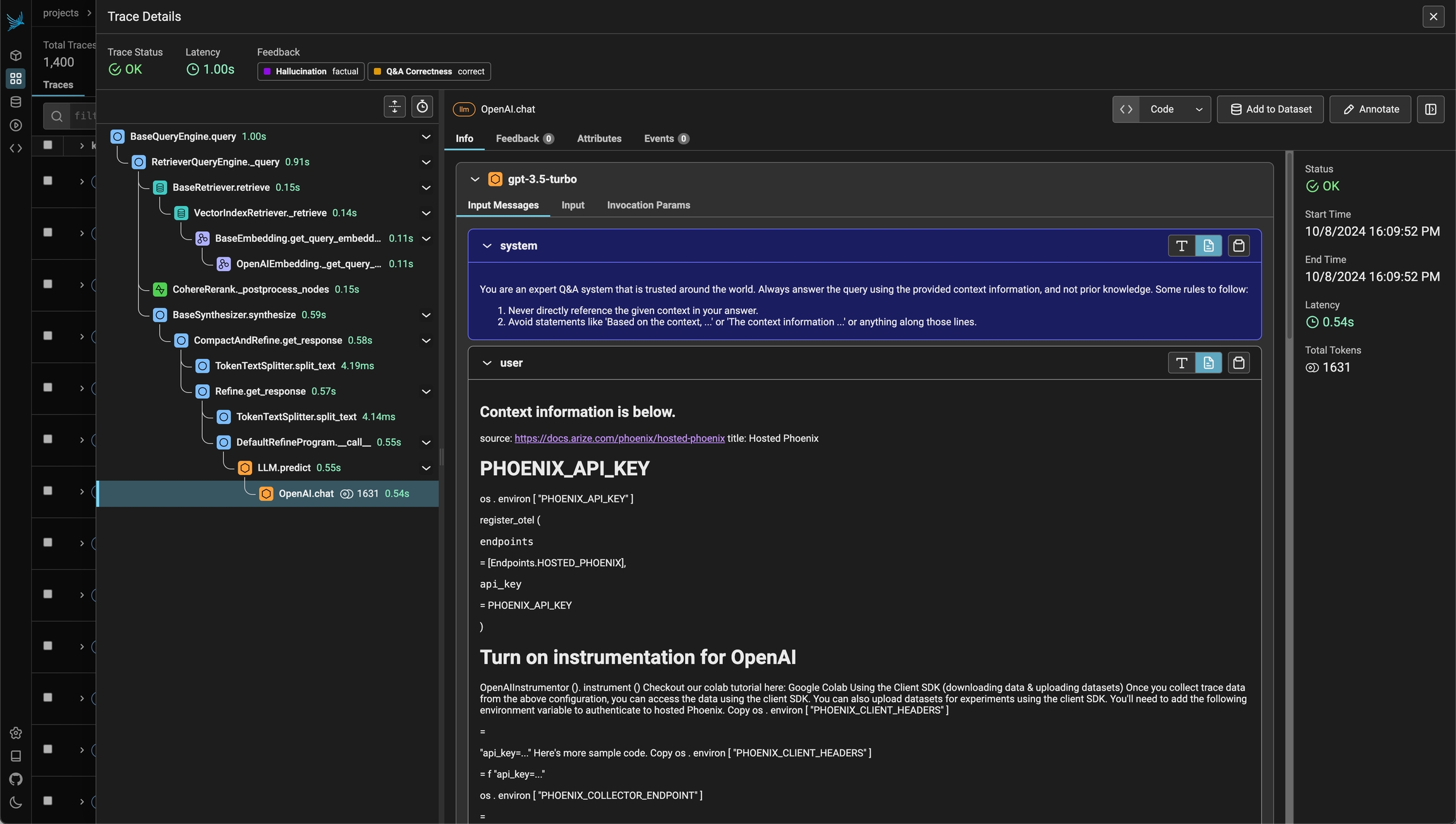

Phoenix's tracing and span analysis capabilities are invaluable during the prototyping and debugging stages. By instrumenting application code with Phoenix, teams gain detailed insights into the execution flow, making it easier to identify and resolve issues. Developers can drill down into specific spans, analyze performance metrics, and access relevant logs and metadata to streamline debugging efforts.

This section contains details on Tracing features:

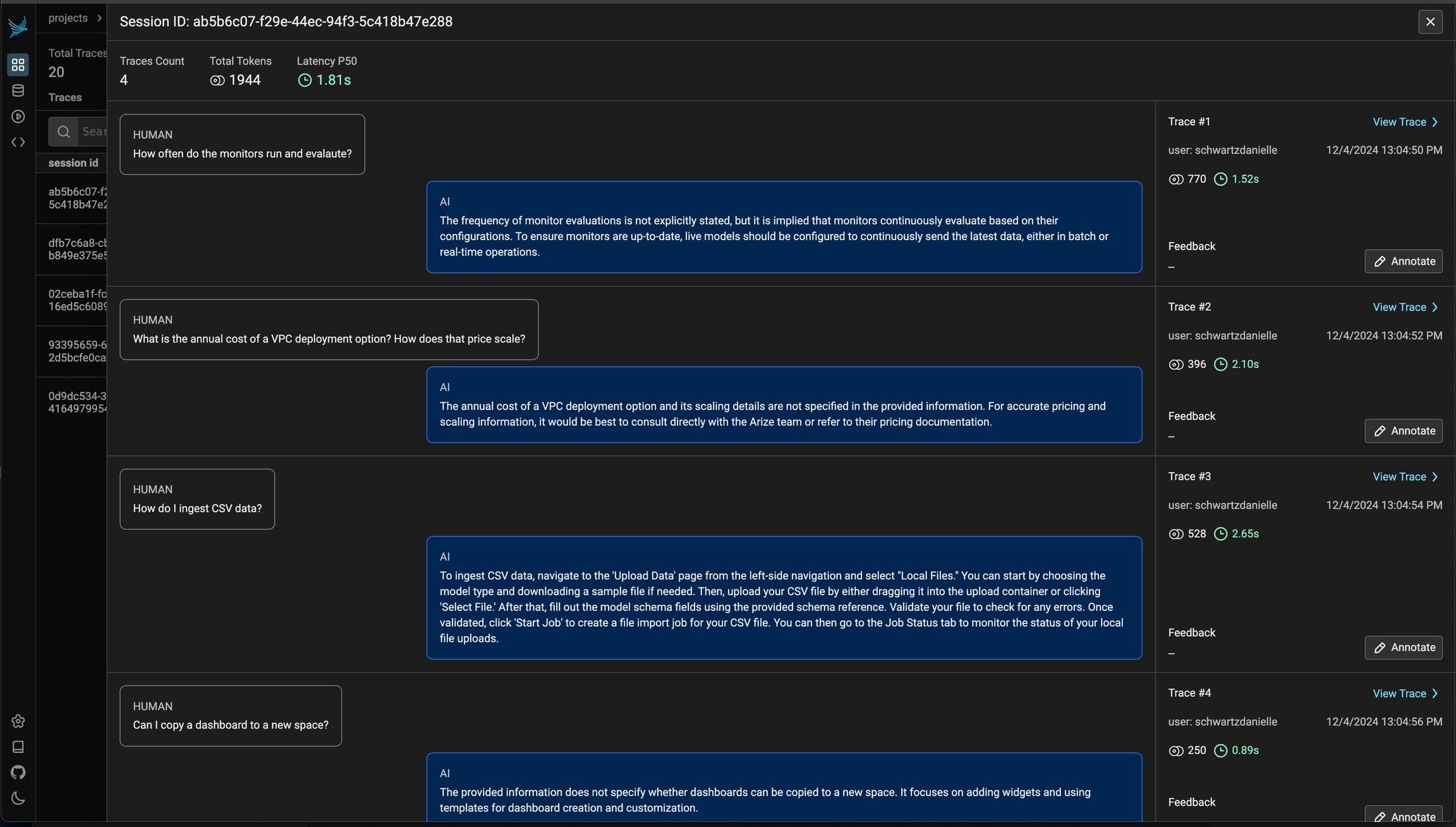

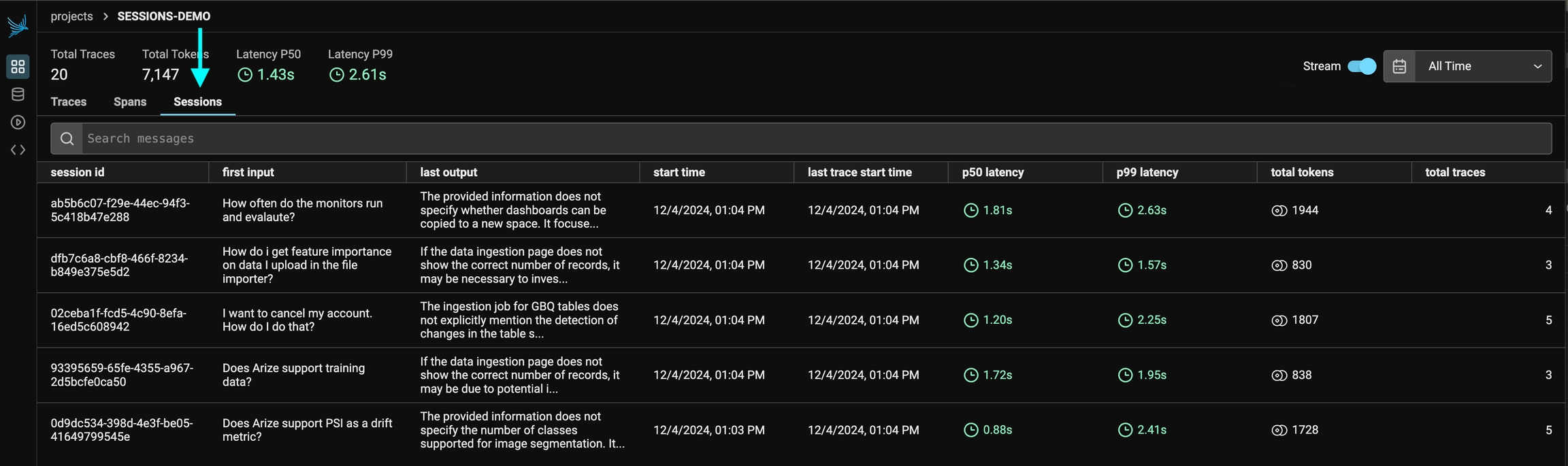

Track and analyze multi-turn conversations

Sessions enable tracking and organizing related traces across multi-turn conversations with your AI application. When building conversational AI, maintaining context between interactions is critical - Sessions make this possible from an observability perspective.

With Sessions in Phoenix, you can:

Track the entire history of a conversation in a single thread

View conversations in a chatbot-like UI showing inputs and outputs of each turn

Search through sessions to find specific interactions

Track token usage and latency per conversation

This feature is particularly valuable for applications where context builds over time, like chatbots, virtual assistants, or any other multi-turn interaction. By tagging spans with a consistent session ID, you create a connected view that reveals how your application performs across an entire user journey.

Check out how to Setup Sessions

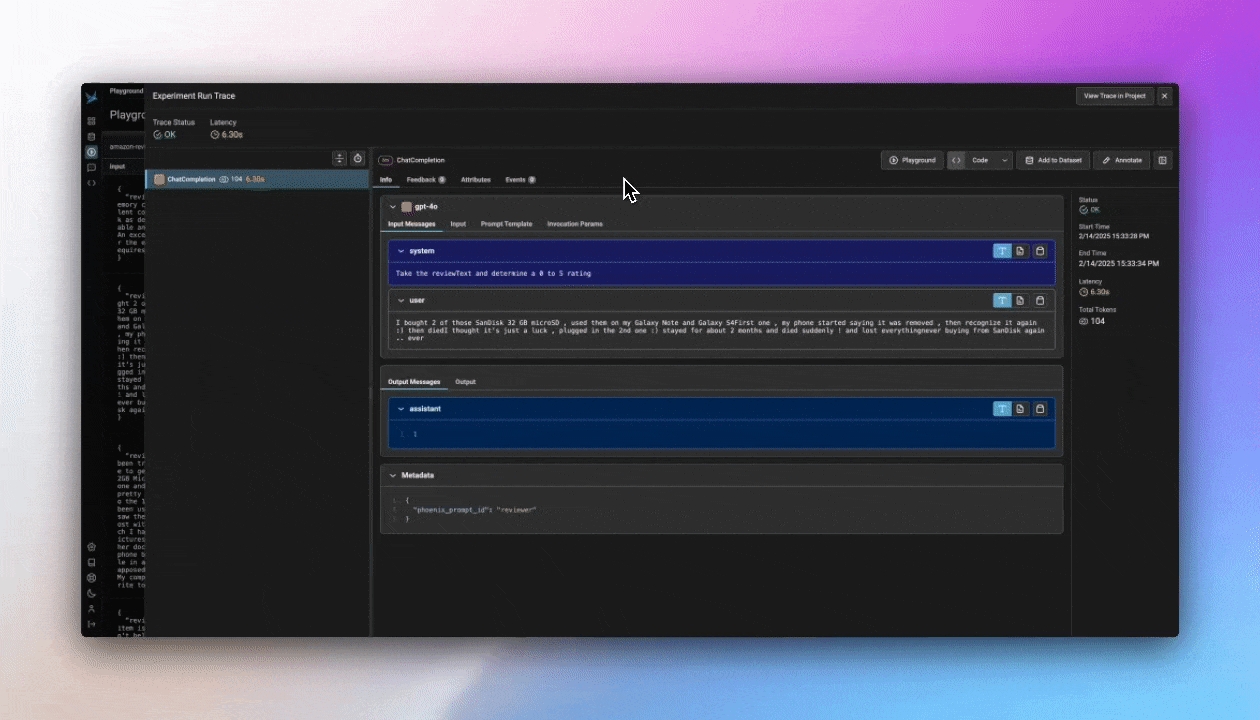

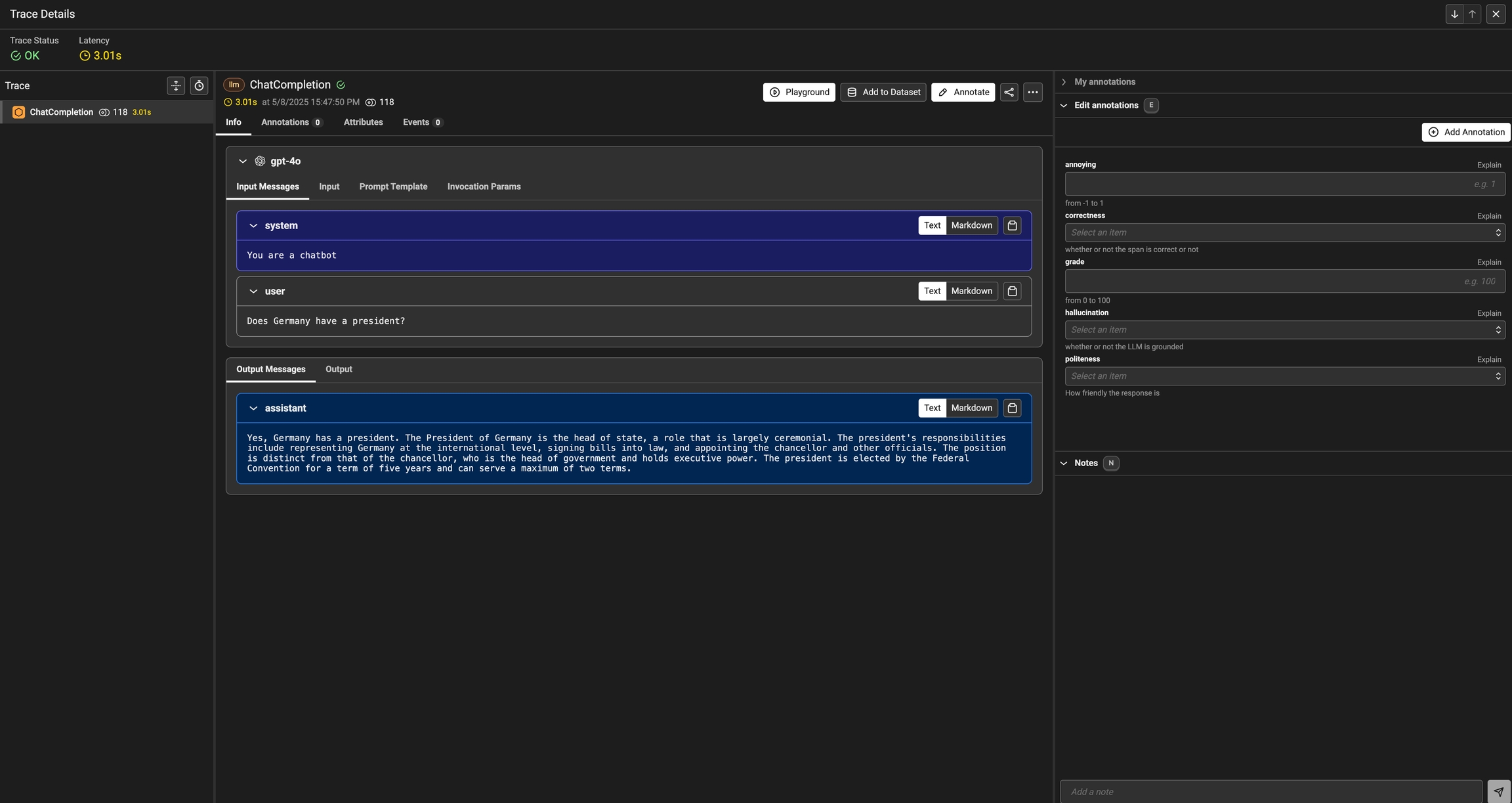

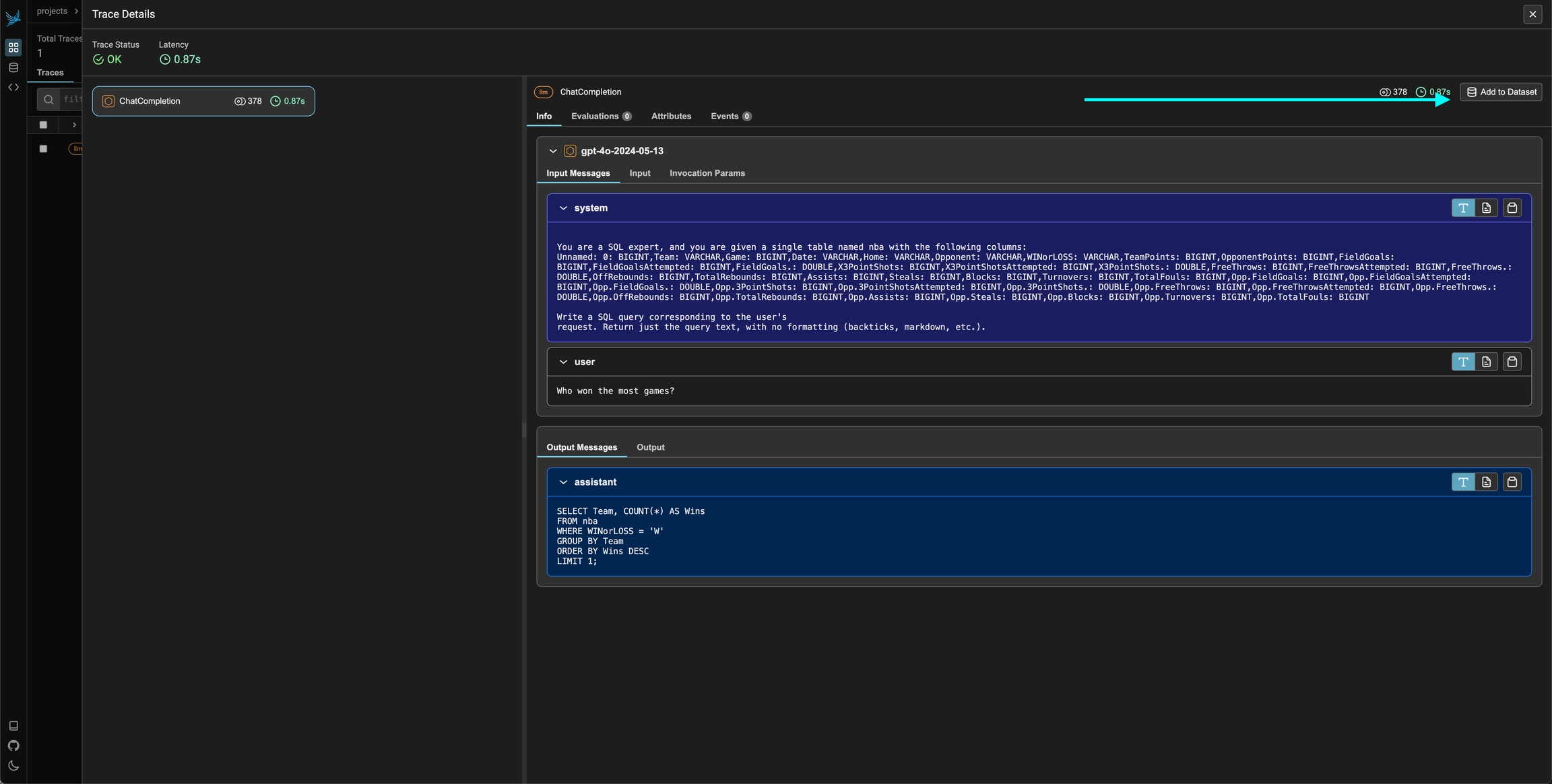

Replay LLM spans traced in your application directly in the playground

Have you ever wanted to go back into a multi-step LLM chain and just replay one step to see if you could get a better outcome? Well you can with Phoenix's Span Replay. LLM spans that are stored within Phoenix can be loaded into the Prompt Playground and replayed. Replaying spans inside of Playground enables you to debug and improve the performance of your LLM systems by comparing LLM provider outputs, tweaking model parameters, changing prompt text, and more.

Chat completions generated inside of Playground are automatically instrumented, and the recorded spans are immediately available to be replayed inside of Playground.

Datasets are critical assets for building robust prompts, evals, fine-tuning, and much more. Phoenix allows you to build datasets manually, programmatically, or from files.

Export datasets for offline analysis, evals, and fine-tuning.

Exporting to CSV - how to quickly download a dataset to use elsewhere

Want to just use the contents of your dataset in another context? Simply click on the export to CSV button on the dataset page and you are good to go!

Fine-tuning lets you get more out of the models available by providing:

Higher quality results than prompting

Ability to train on more examples than can fit in a prompt

Token savings due to shorter prompts

Lower latency requests

Fine-tuning improves on few-shot learning by training on many more examples than can fit in the prompt, letting you achieve better results on a wide number of tasks. Once a model has been fine-tuned, you won't need to provide as many examples in the prompt. This saves costs and enables lower-latency requests. Phoenix natively exports OpenAI Fine-Tuning JSONL as long as the dataset contains compatible inputs and outputs.
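As a rough illustration of what "compatible inputs and outputs" can look like, the sketch below turns input/output pairs into the chat-style JSONL that OpenAI fine-tuning accepts (one JSON object per line with a `messages` list). This is not Phoenix's exporter; the `input` and `output` keys and the `to_finetuning_jsonl` helper are hypothetical names chosen for the example.

```python
import json


def to_finetuning_jsonl(examples):
    """Serialize input/output pairs as chat-format JSONL, one record per line."""
    lines = []
    for example in examples:
        record = {
            "messages": [
                {"role": "user", "content": example["input"]},
                {"role": "assistant", "content": example["output"]},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines)


# Hypothetical dataset examples; real ones would come from a Phoenix dataset.
examples = [
    {"input": "What does OTLP stand for?", "output": "OpenTelemetry Protocol."},
    {"input": "What groups traces in Phoenix?", "output": "Projects."},
]

jsonl = to_finetuning_jsonl(examples)
print(jsonl)
```

Each line of the result parses on its own, which is what lets fine-tuning tooling stream a large file one example at a time.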

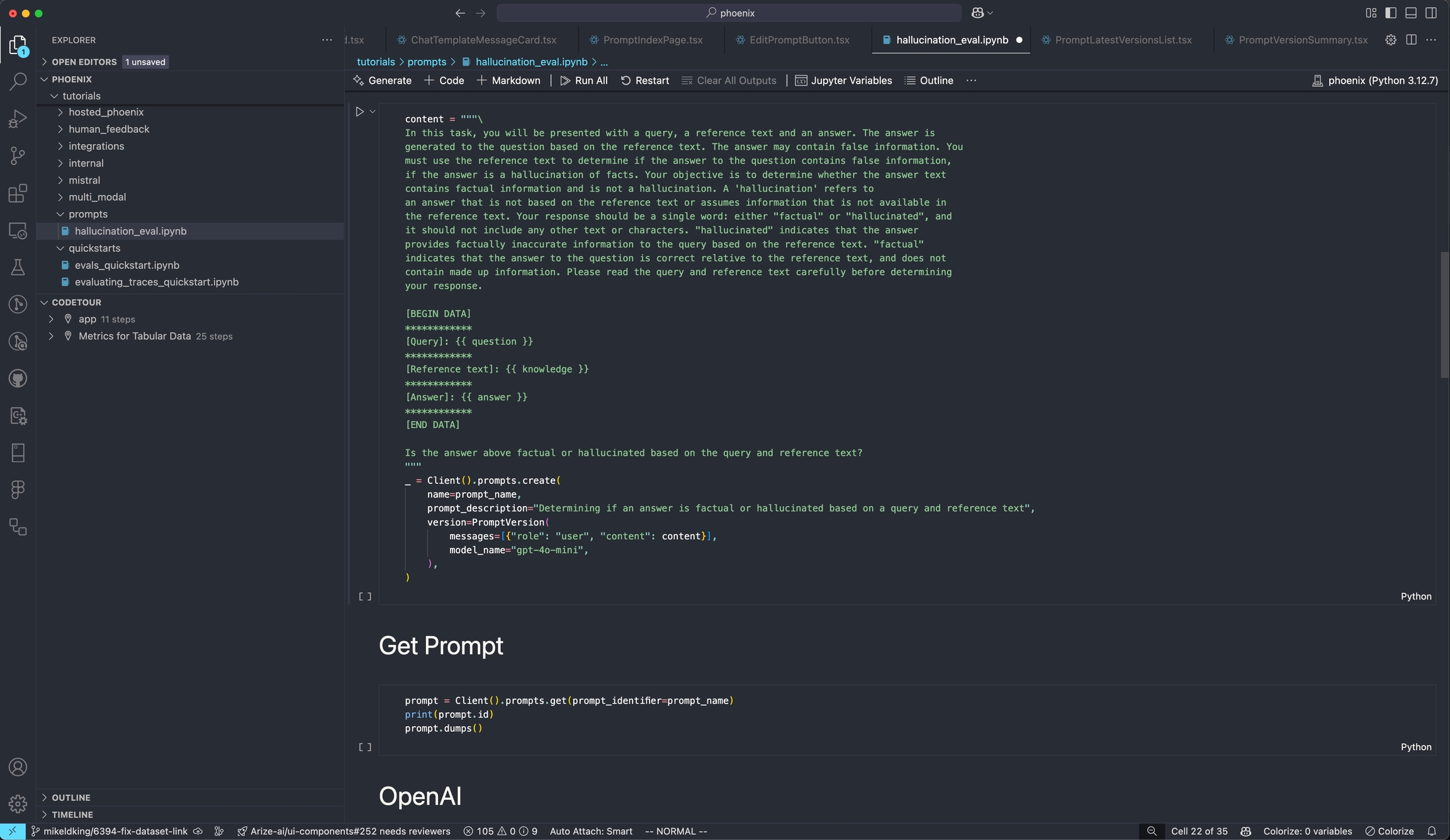

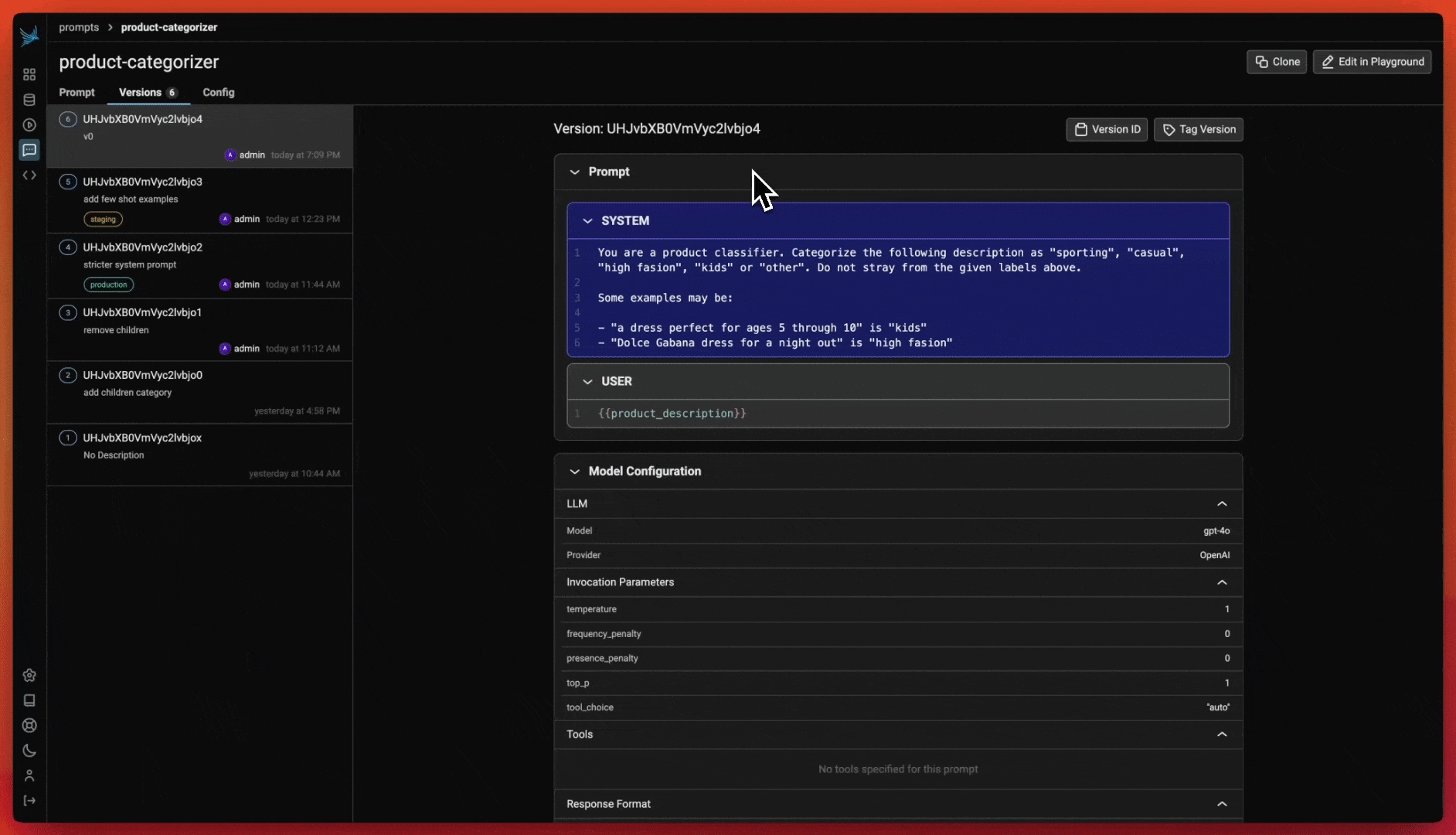

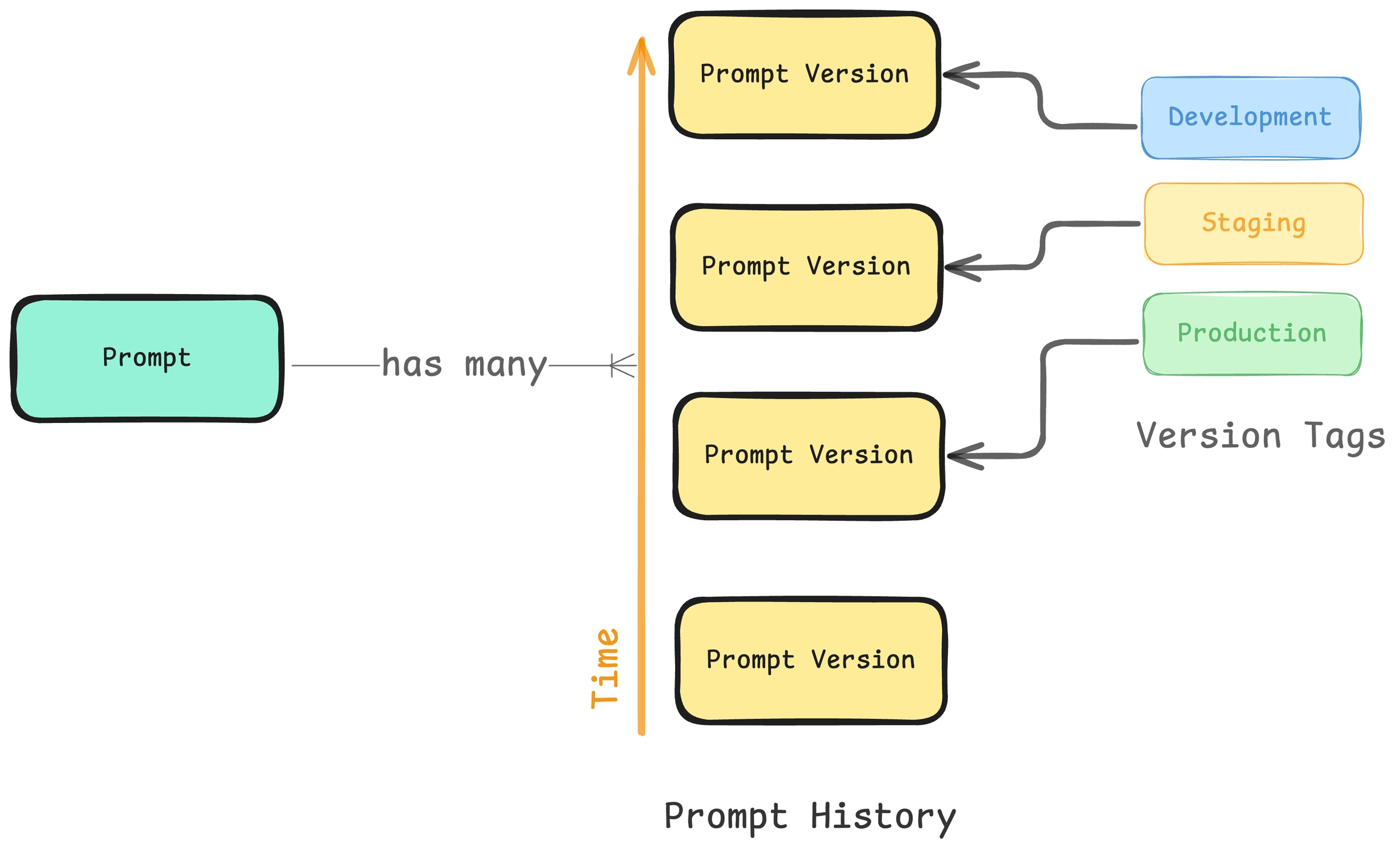

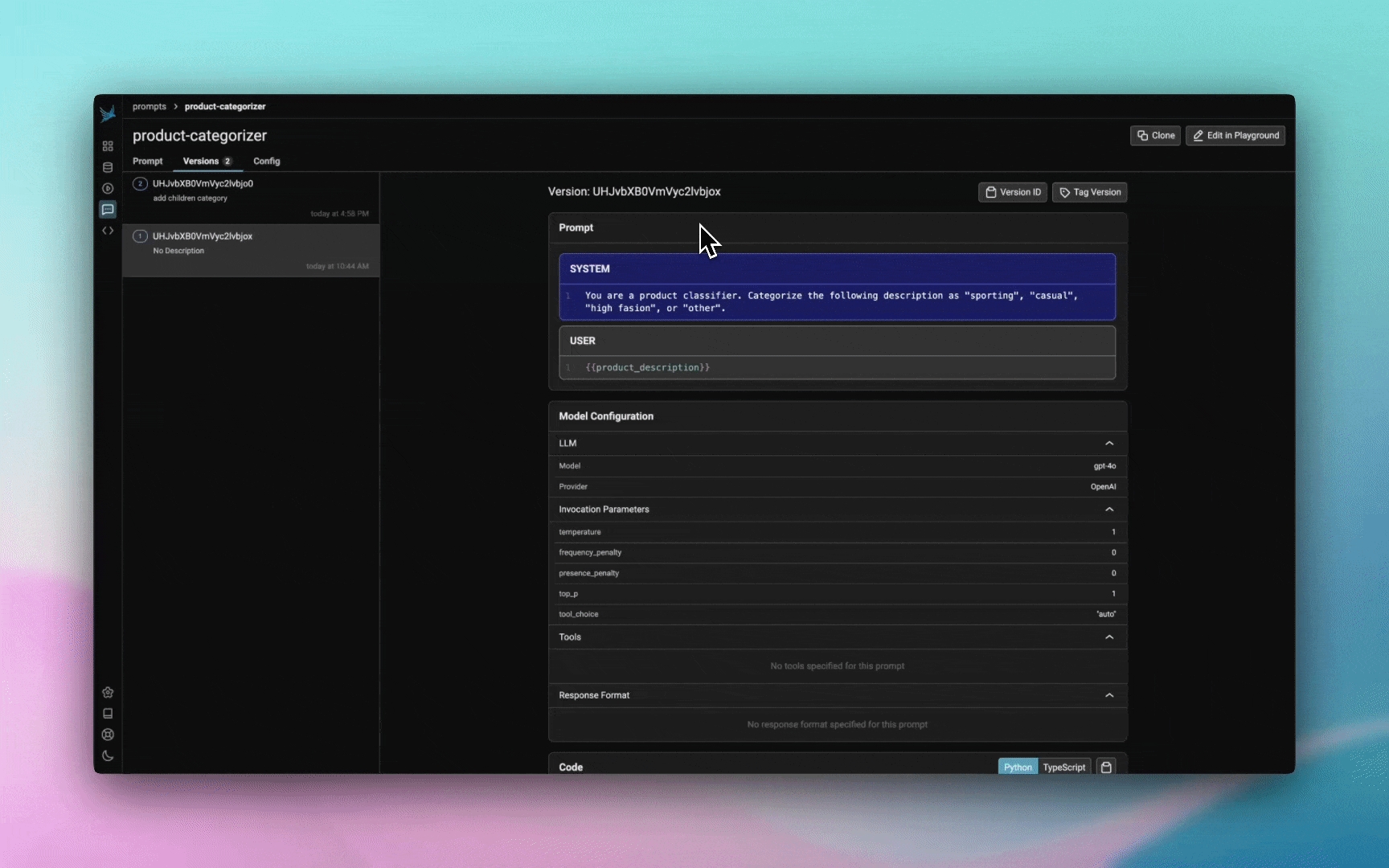

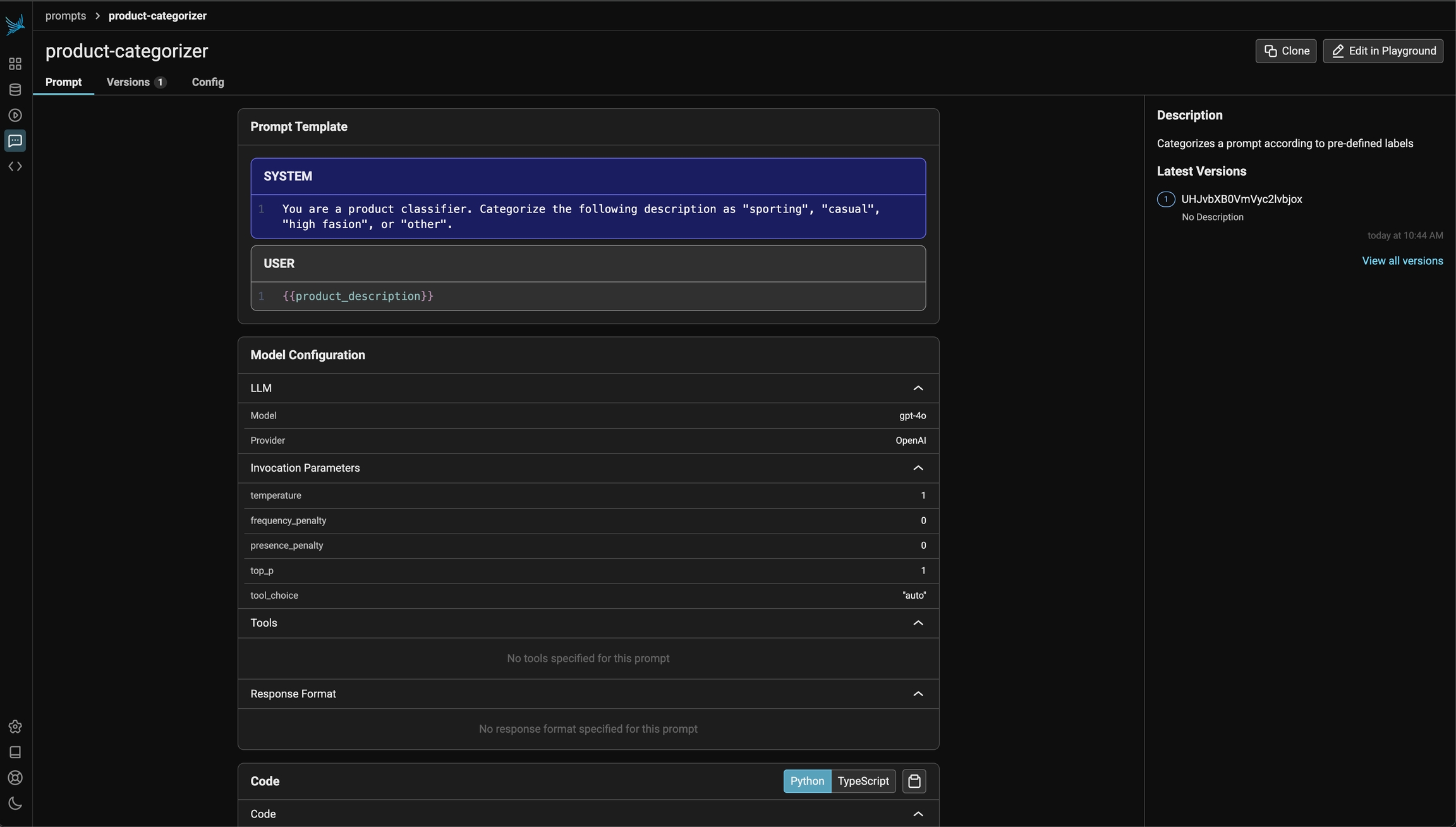

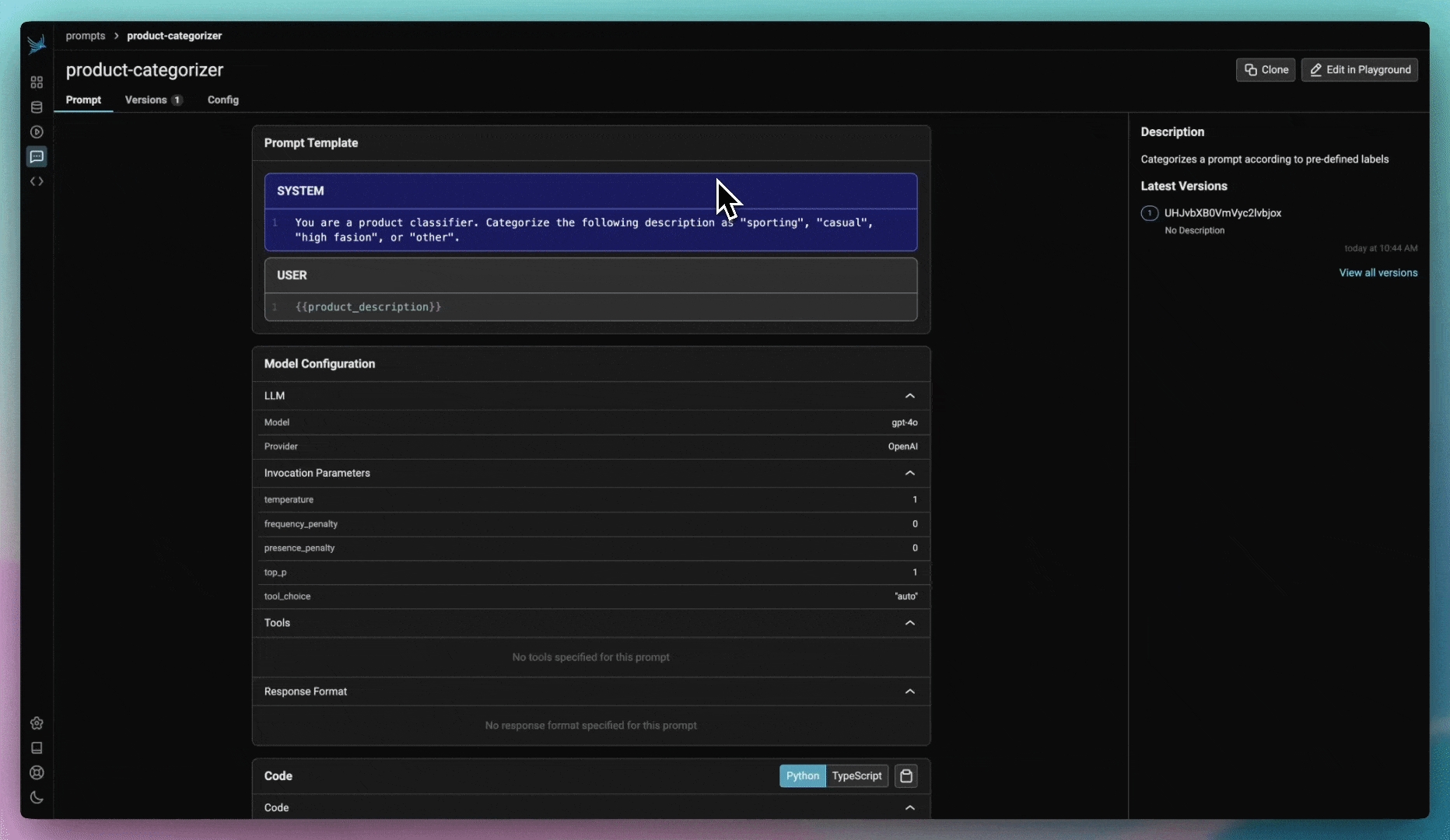

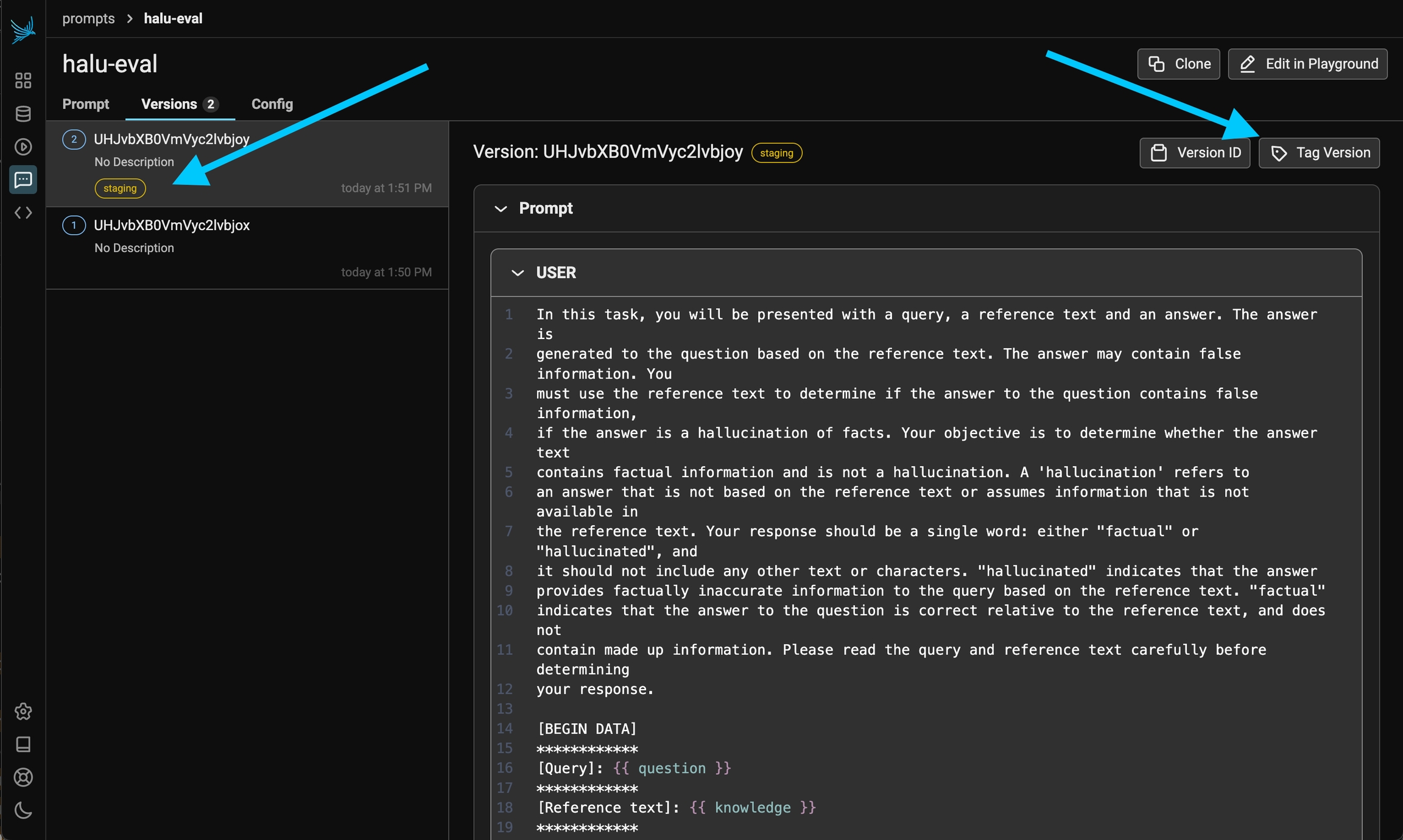

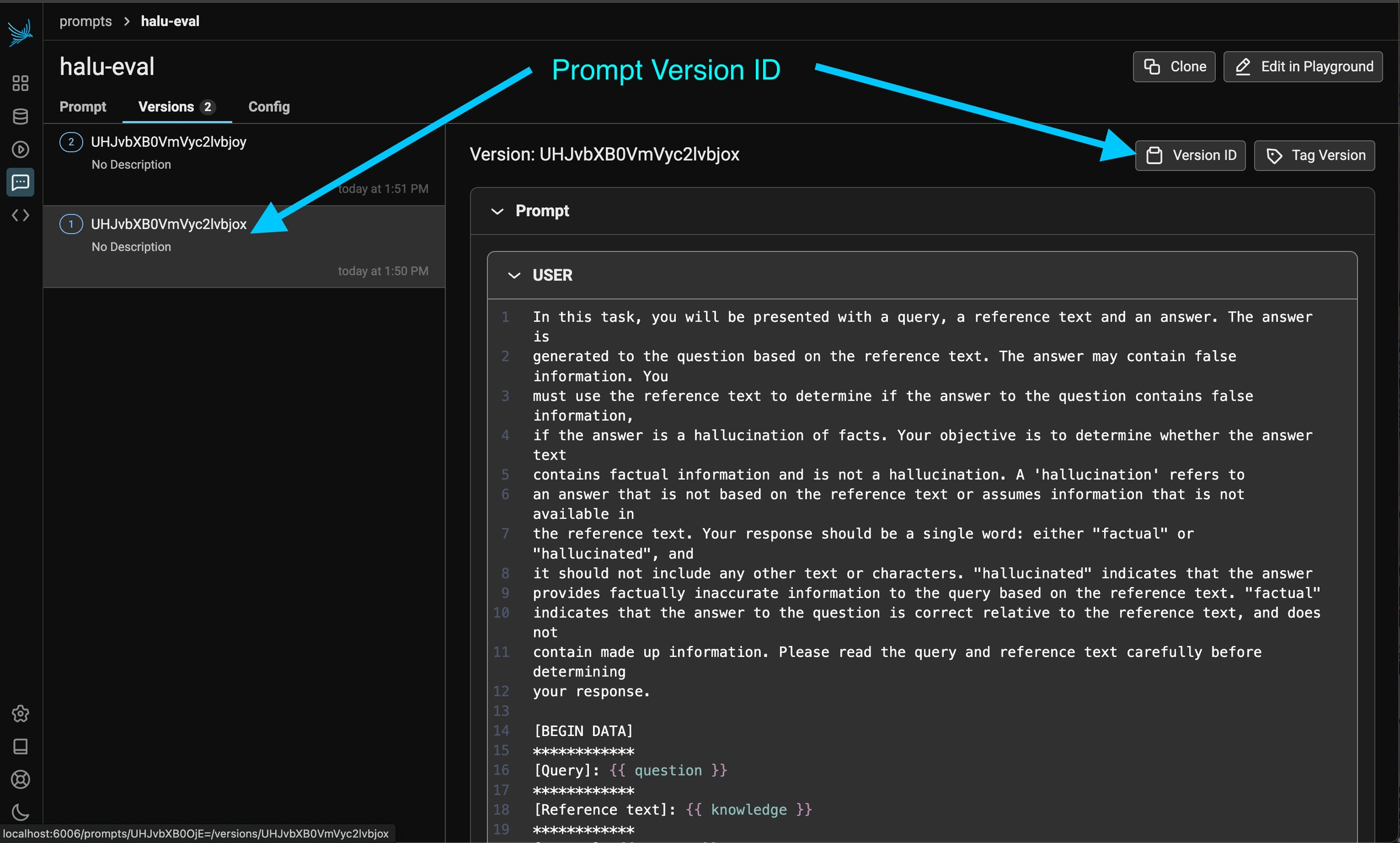

Version and track changes made to prompt templates

Prompt management allows you to create, store, and modify prompts for interacting with LLMs. By managing prompts systematically, you can improve reuse, consistency, and experiment with variations across different models and inputs.

Key benefits of prompt management include:

Reusability: Store and load prompts across different use cases.

Versioning: Track changes over time to ensure that the best performing version is deployed for use in your application.

Collaboration: Share prompts with others to maintain consistency and facilitate iteration.

To learn how to get started with prompt management, see Create a prompt

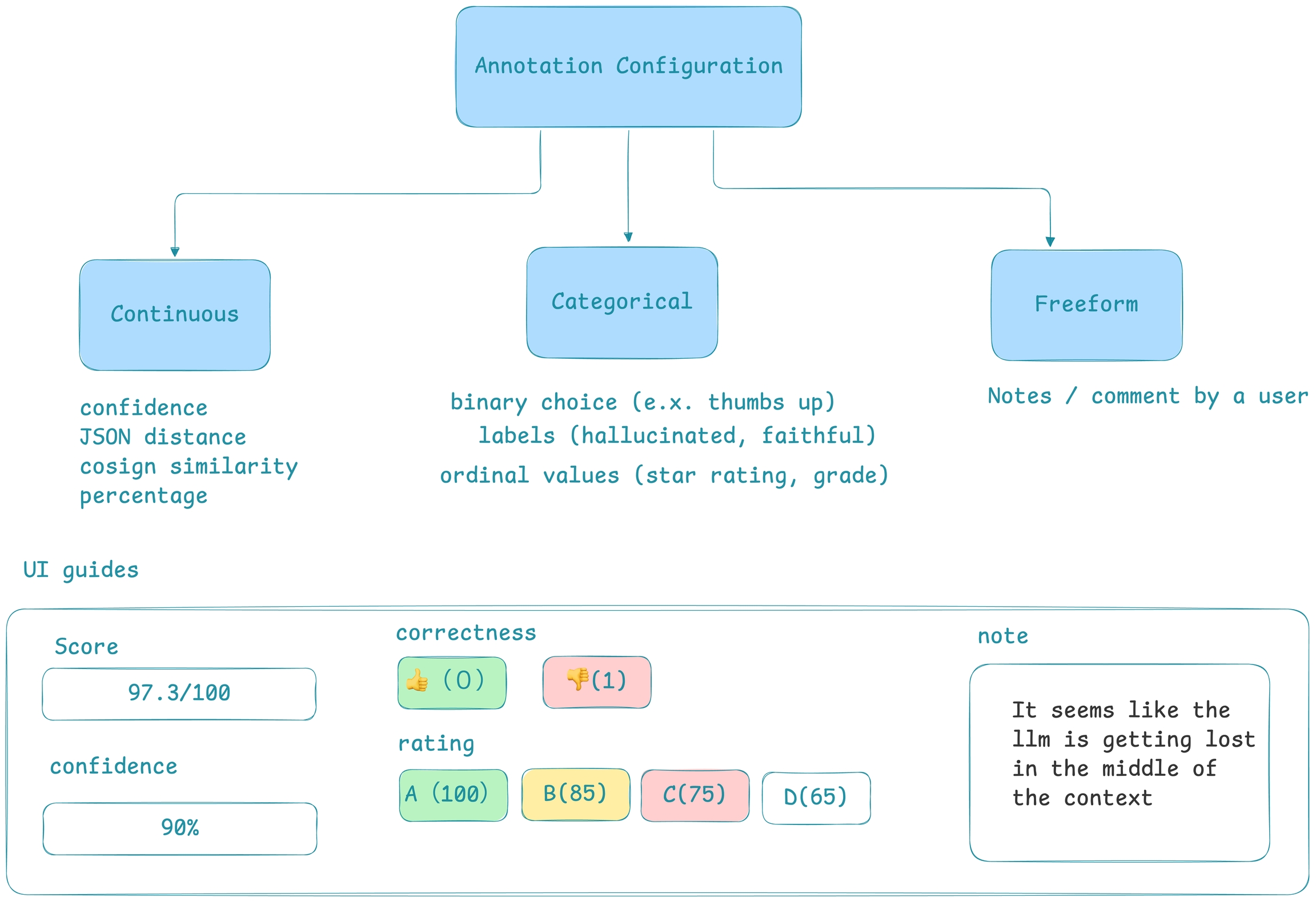

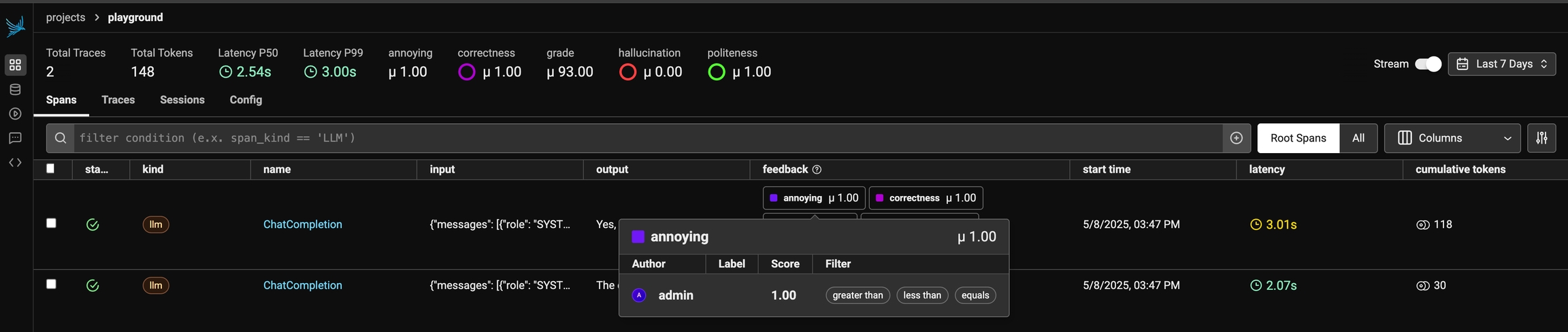

To improve your LLM application iteratively, it's vital to collect feedback, annotate data during human review, and establish an evaluation pipeline so that you can monitor your application. In Phoenix we capture this type of feedback in the form of annotations.

Phoenix gives you the ability to annotate traces with feedback from the UI, your application, or wherever you would like to perform evaluation. Phoenix's annotation model is simple yet powerful - given an entity such as a span that is collected, you can assign a label and/or a score to that entity.

Navigate to the Feedback tab in this demo trace to see how LLM-based evaluations appear in Phoenix:

Configure Annotation Configs to guide human annotations.

Learn how to log annotations via the client from your app or in a notebook

Span annotations can be an extremely valuable basis for improving your application. The Phoenix client provides useful ways to pull down spans and their associated annotations. This information can be used to:

build new LLM judges

form the basis for new datasets

help identify ideas for improving your application

If you only want the spans that contain a specific annotation, you can pass in a query that filters on annotation names, scores, or labels.

The queries can also filter by annotation scores and labels.

This spans dataframe can be used to pull associated annotations.

Instead of an input dataframe, you can also pass in a list of ids:

The annotations and spans dataframes can be easily joined to produce a one-row-per-annotation dataframe that can be used to analyze the annotations!

Annotating traces is a crucial aspect of evaluating and improving your LLM-based applications. By systematically recording qualitative or quantitative feedback on specific interactions or entire conversation flows, you can:

Track performance over time

Identify areas for improvement

Compare different model versions or prompts

Gather data for fine-tuning or retraining

Provide stakeholders with concrete metrics on system effectiveness

Phoenix allows you to annotate traces through the Client, the REST API, or the UI.
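For the REST route, a minimal sketch of what an annotation request might look like is shown here. The `/v1/span_annotations` path, the payload field names, the local base URL, and the span id are all assumptions for illustration and should be checked against the REST API reference for your Phoenix version. The request is only constructed, not sent, so the sketch runs without a server.

```python
import json
import urllib.request

PHOENIX_BASE_URL = "http://localhost:6006"  # assumption: local Phoenix on the default port
SPAN_ID = "67f6740bbe1ddc3f"  # hypothetical span id copied from a trace

# One annotation: a name, who produced it, and a label and/or score.
payload = {
    "data": [
        {
            "span_id": SPAN_ID,
            "name": "user feedback",
            "annotator_kind": "HUMAN",
            "result": {
                "label": "thumbs-up",
                "score": 1,
                "explanation": "The answer matched the retrieved documents.",
            },
        }
    ]
}

request = urllib.request.Request(
    url=f"{PHOENIX_BASE_URL}/v1/span_annotations",  # assumed endpoint path
    data=json.dumps(payload).encode("utf-8"),
    headers={"Content-Type": "application/json"},
    method="POST",
)

# urllib.request.urlopen(request) would send it; omitted so this runs offline.
print(request.get_method(), request.full_url)
```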

Before accessing px.Client(), be sure you've set the following environment variables:

If you're self-hosting Phoenix, ignore the client headers and change the collector endpoint to your endpoint.
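A minimal sketch of that configuration from Python follows. The variable names `PHOENIX_CLIENT_HEADERS` and `PHOENIX_COLLECTOR_ENDPOINT` are the ones Phoenix reads; the API key value is a placeholder and the endpoint URL is an assumption, so substitute the values for your own instance.

```python
import os

# Placeholder key: replace with the API key for your Phoenix instance.
# Skip this line entirely when self-hosting without authentication.
os.environ["PHOENIX_CLIENT_HEADERS"] = "api_key=YOUR_PHOENIX_API_KEY"

# Assumed hosted endpoint; when self-hosting, point this at your own server,
# for example http://127.0.0.1:6006.
os.environ["PHOENIX_COLLECTOR_ENDPOINT"] = "https://app.phoenix.arize.com"
```

Set these before the client is created, since the values are read from the environment at that point.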

You can also launch a temporary version of Phoenix in your local notebook to quickly view the traces. But be warned, this Phoenix instance will only last as long as your notebook environment is running.

Sometimes while instrumenting your application, you may want to filter out or modify certain spans before they are sent to Phoenix. For example, you may want to filter out spans that contain sensitive or redundant information.

To do this, you can use a custom SpanProcessor and attach it to the OpenTelemetry TracerProvider.

Unlike traditional software, AI applications are non-deterministic and depend on natural language to provide context and guide model output. The pieces of natural language and associated model parameters embedded in your program are known as “prompts.”

Optimizing your prompts is typically the highest-leverage way to improve the behavior of your application, but “prompt engineering” comes with its own set of challenges. You want to be confident that changes to your prompts have the intended effect and don’t introduce regressions.

Phoenix offers a comprehensive suite of features to streamline your prompt engineering workflow.

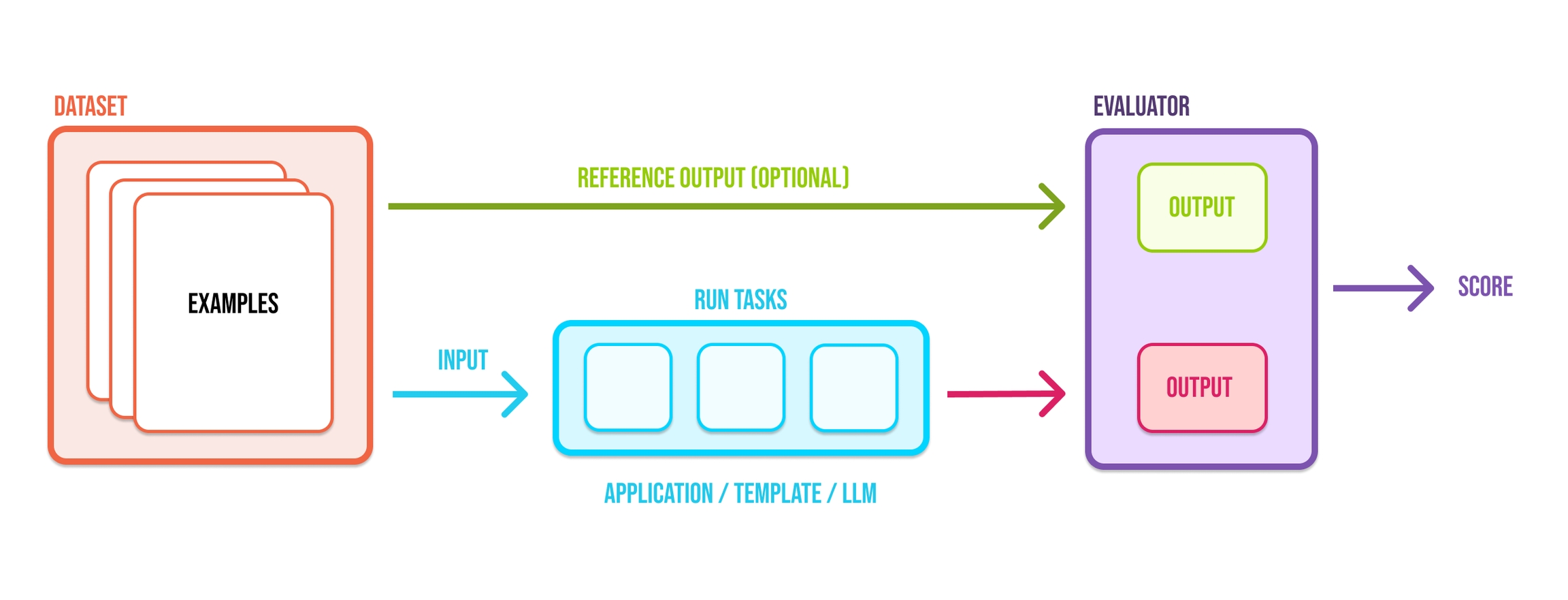

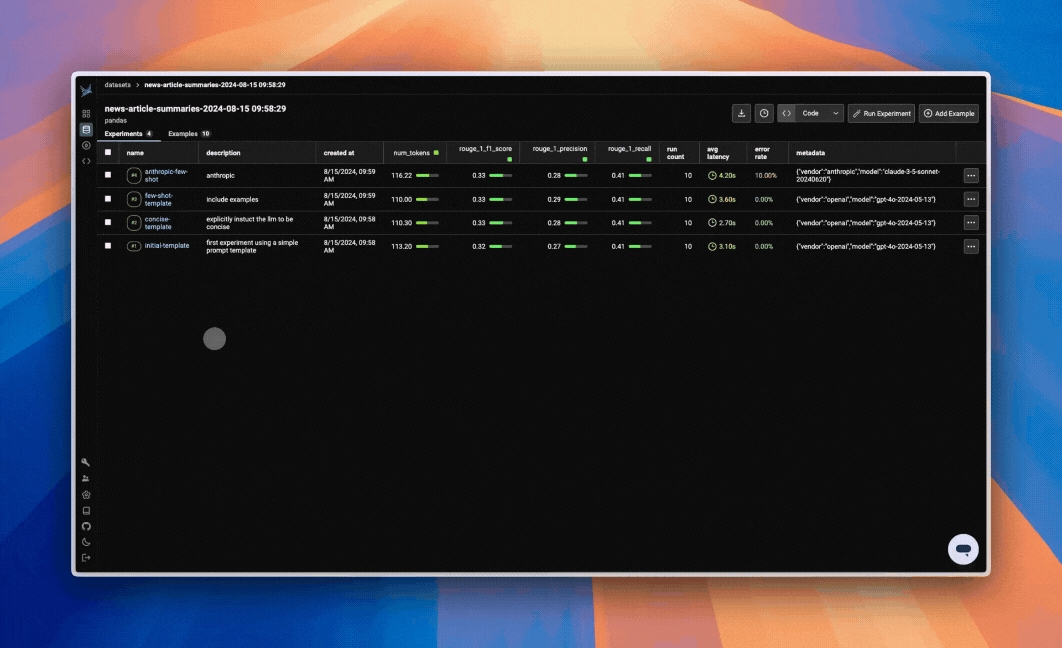

The velocity of AI application development is bottlenecked by quality evaluations because AI engineers are often faced with hard tradeoffs: which prompt or LLM best balances performance, latency, and cost. High quality evaluations are critical as they can help developers answer these types of questions with greater confidence.

Datasets are integral to evaluation. They are collections of examples that provide the inputs and, optionally, expected reference outputs for assessing your application. Datasets allow you to collect data from production, staging, evaluations, and even manually. The examples collected are used to run experiments and evaluations to track improvements to your prompt, LLM, or other parts of your LLM application.

In AI development, it's hard to understand how a change will affect performance. This breaks the dev flow, making iteration more guesswork than engineering.

Experiments and evaluations solve this, helping distill the indeterminism of LLMs into tangible feedback that helps you ship a more reliable product.

Specifically, good evals help you:

Understand whether an update is an improvement or a regression

Drill down into good / bad examples

Compare specific examples vs. prior runs

Avoid guesswork

Pull and push prompt changes via Phoenix's Python and TypeScript Clients

Using Phoenix as a backend, Prompts can be managed and manipulated via code by using our Python or TypeScript SDKs.

With the Phoenix Client SDK you can:

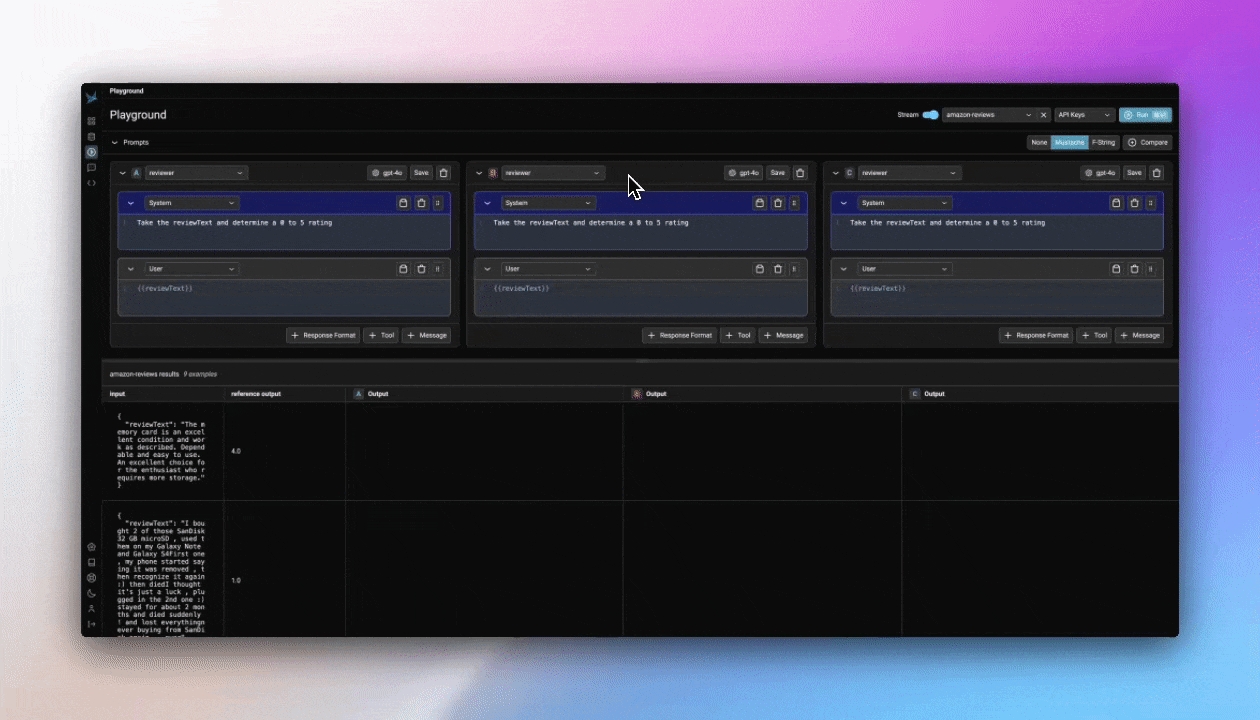

Testing your prompts before you ship them is vital to deploying reliable AI applications

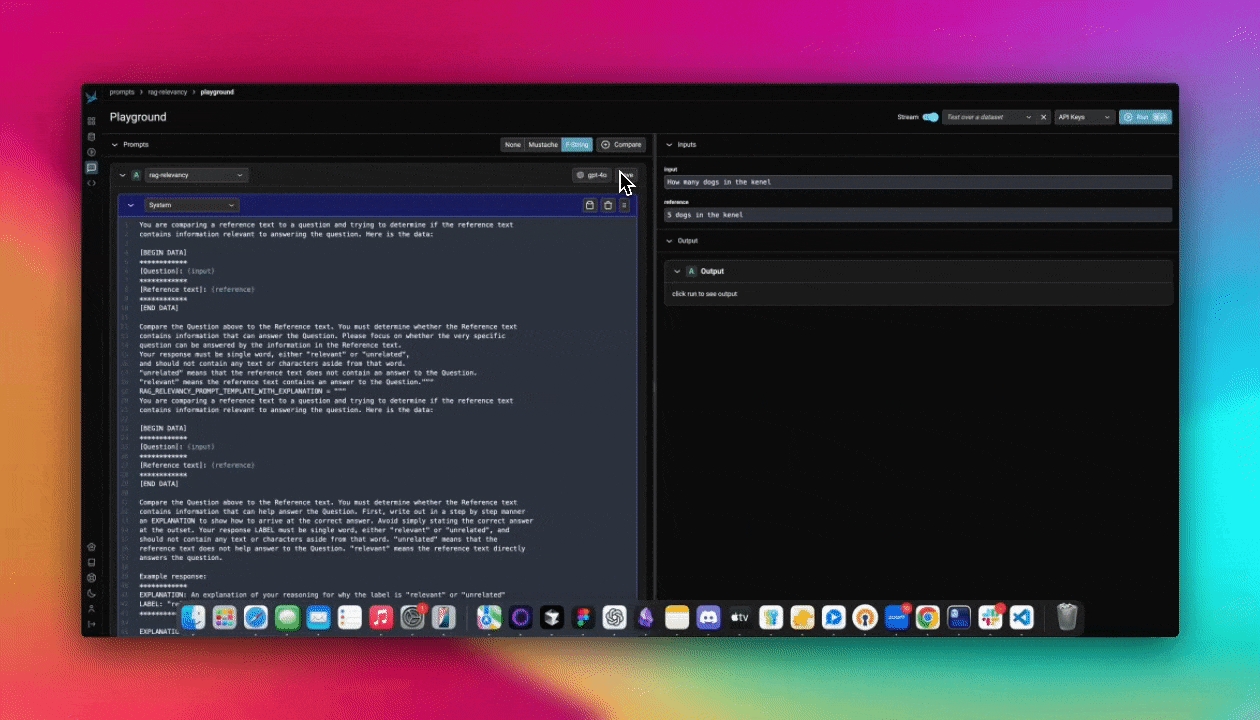

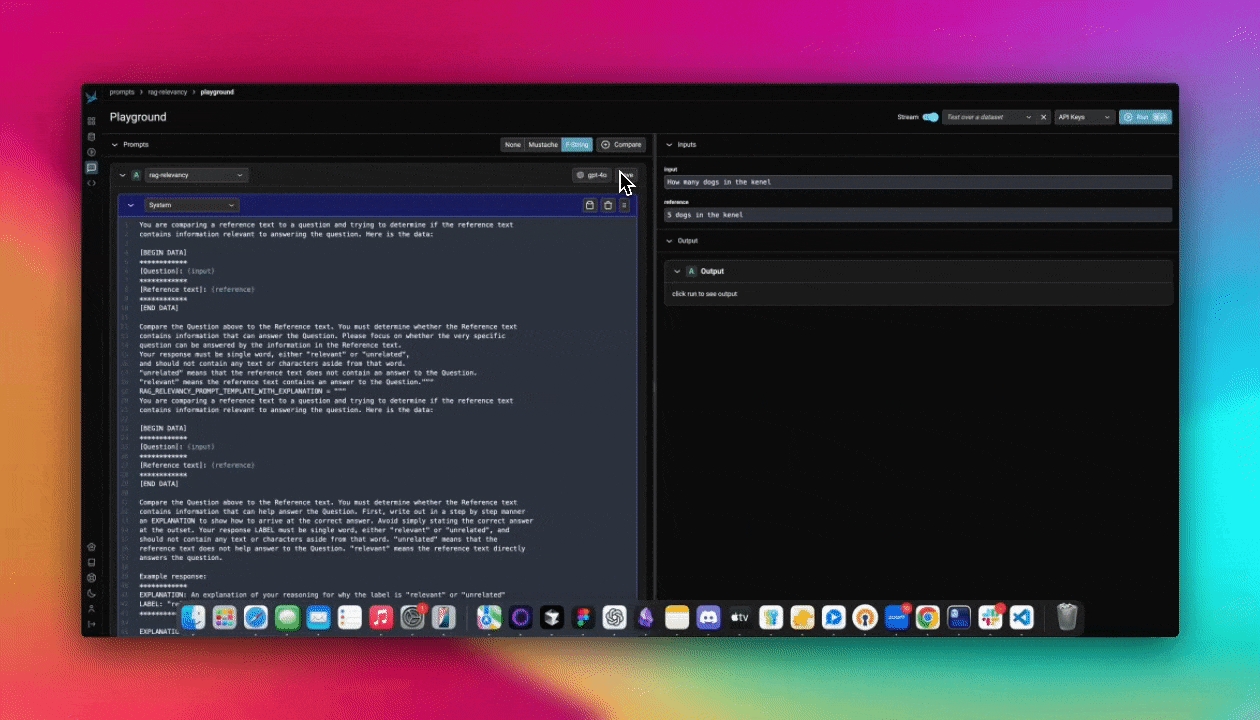

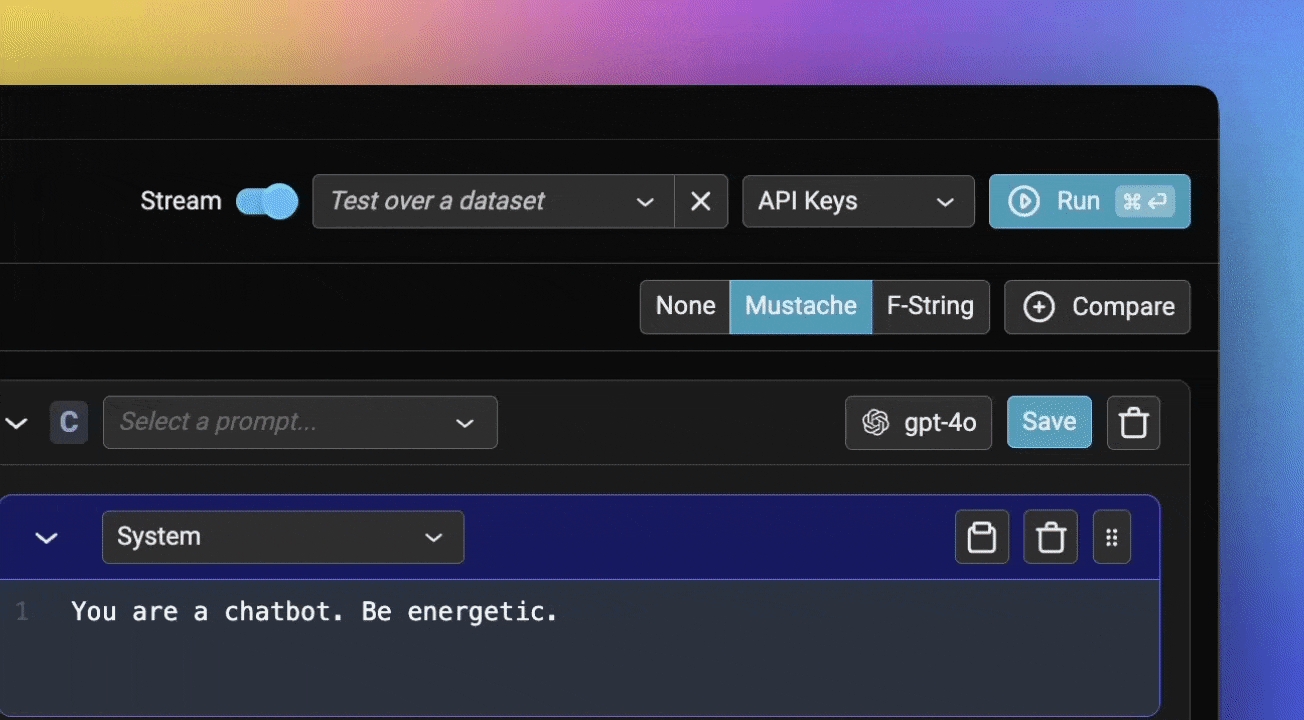

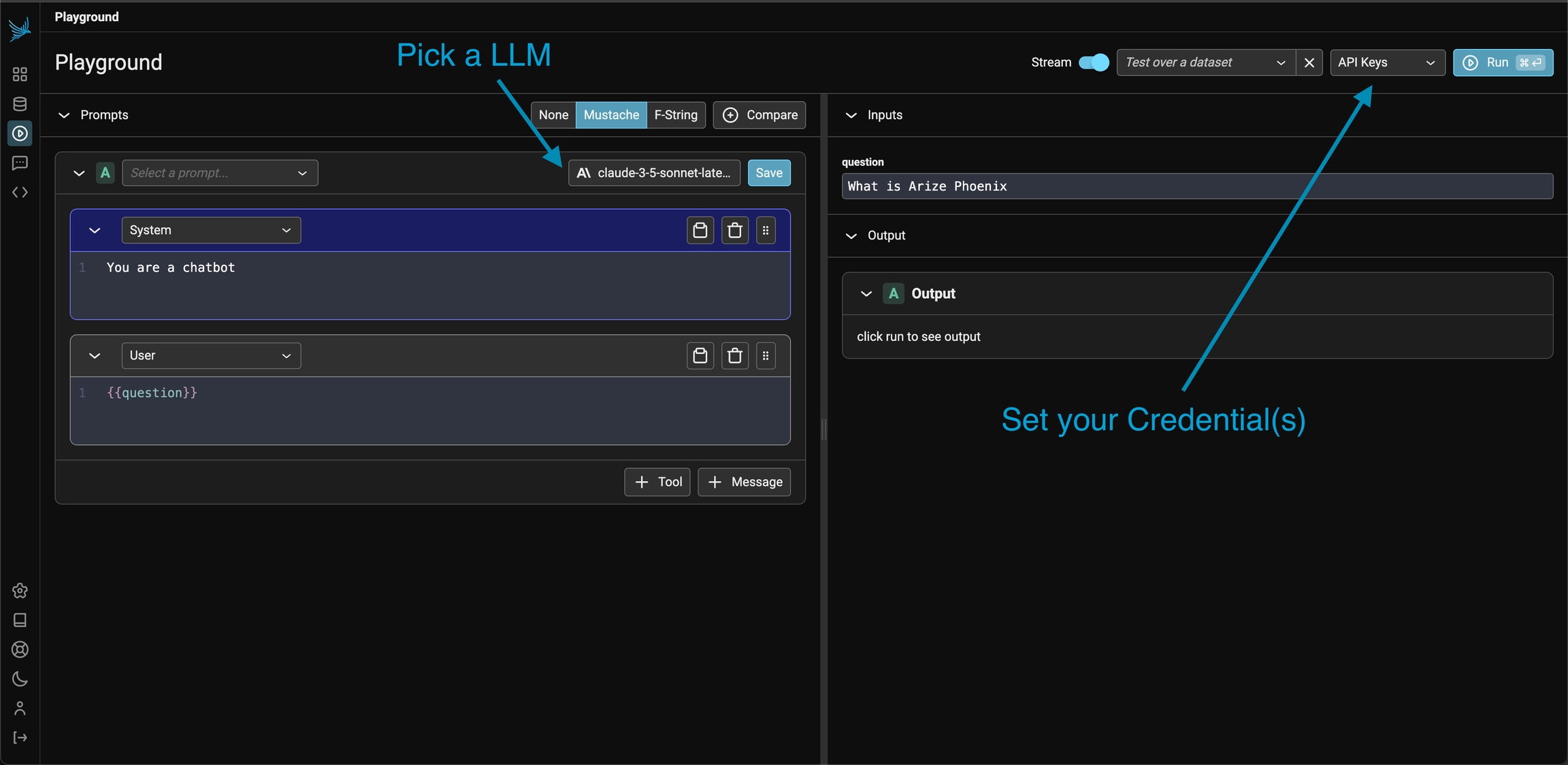

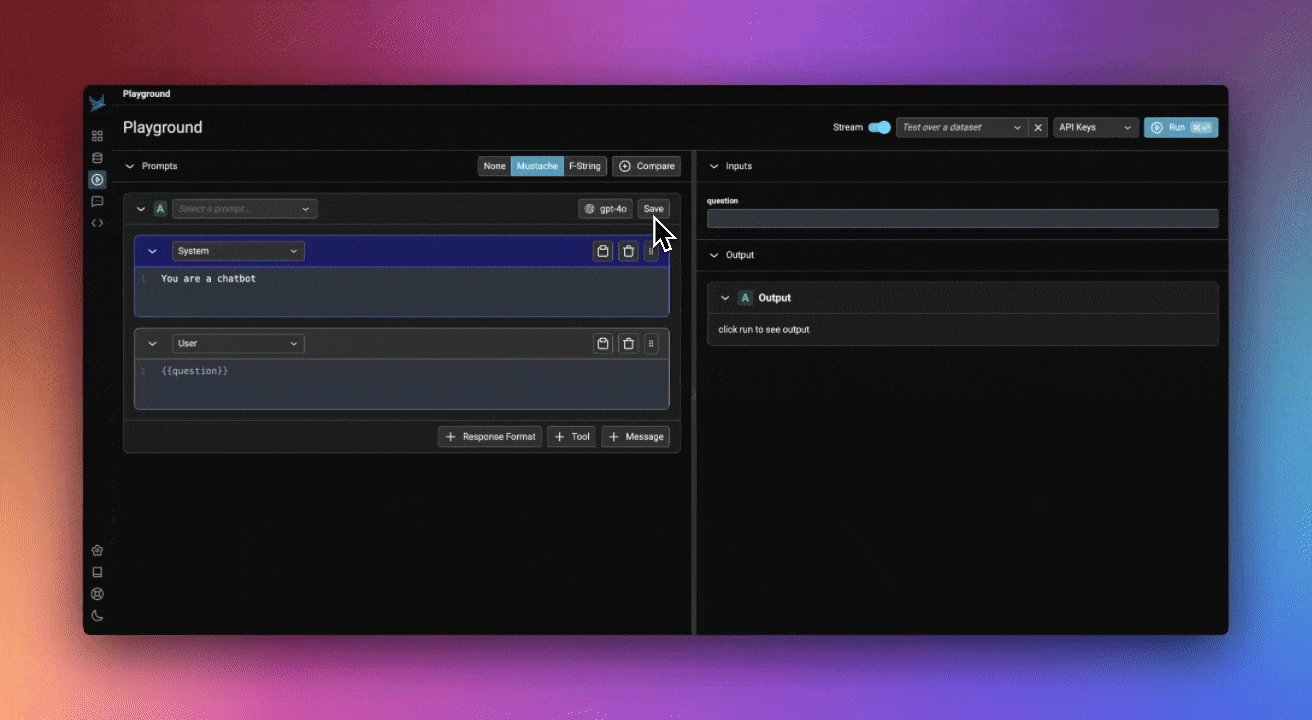

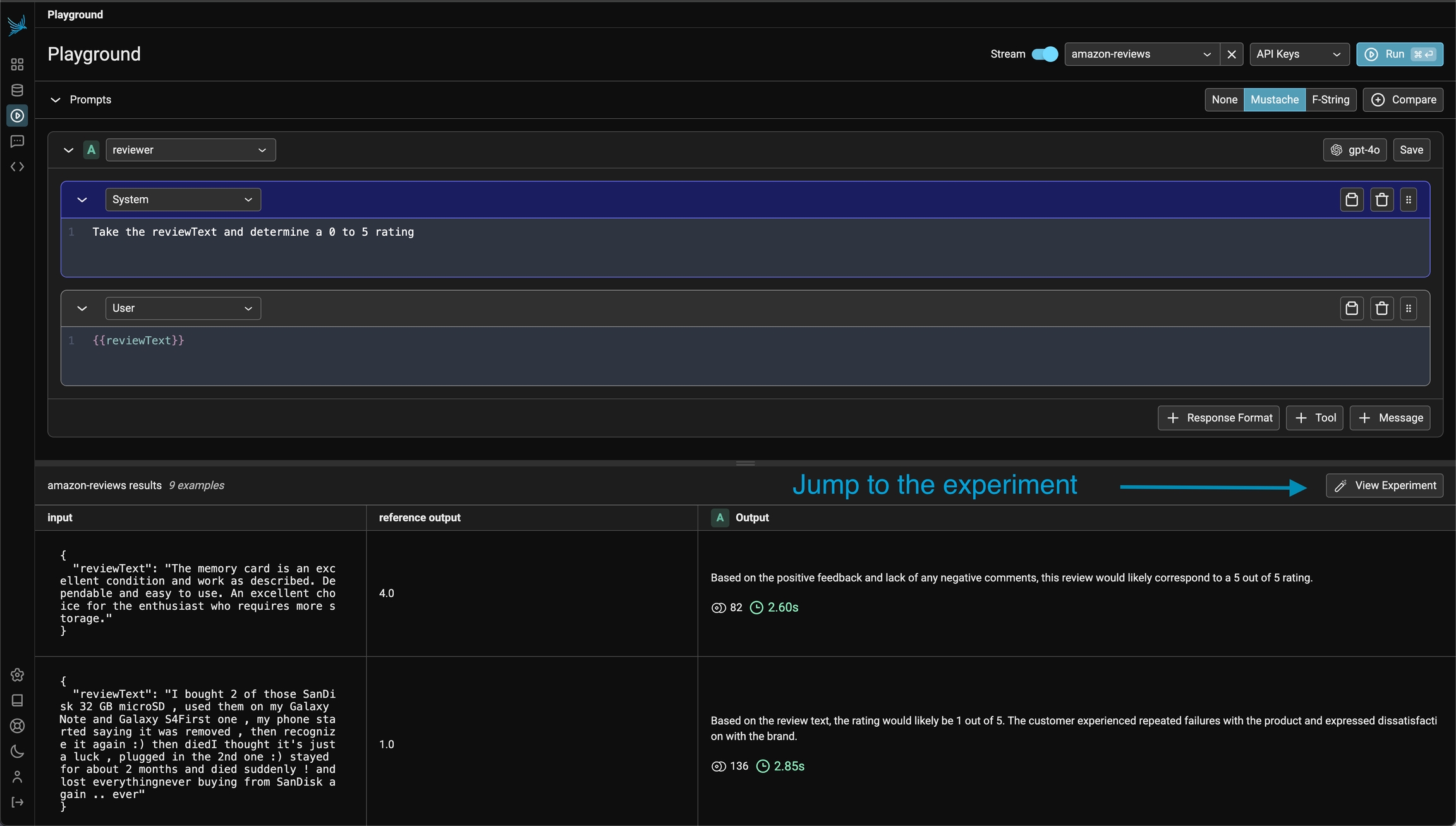

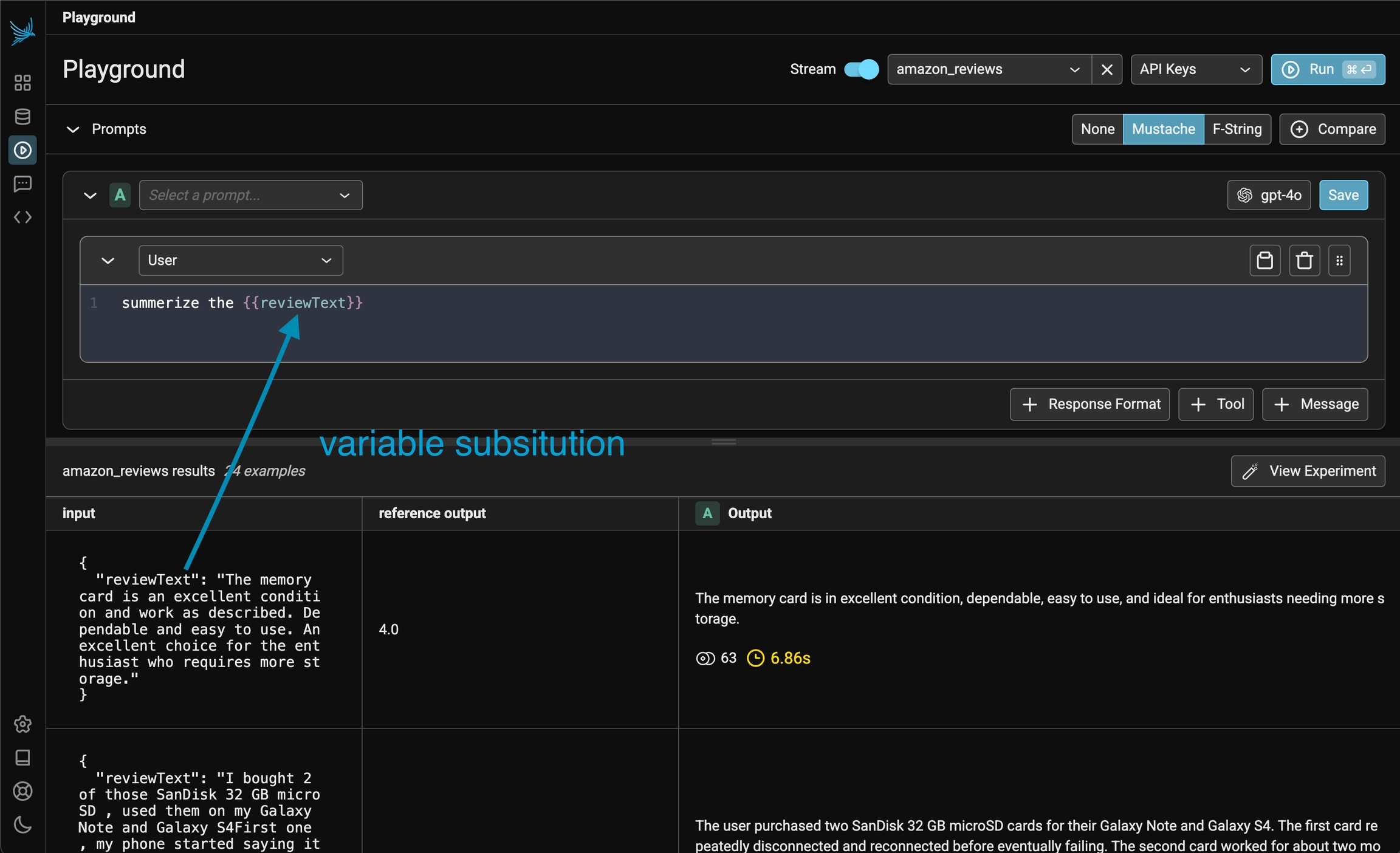

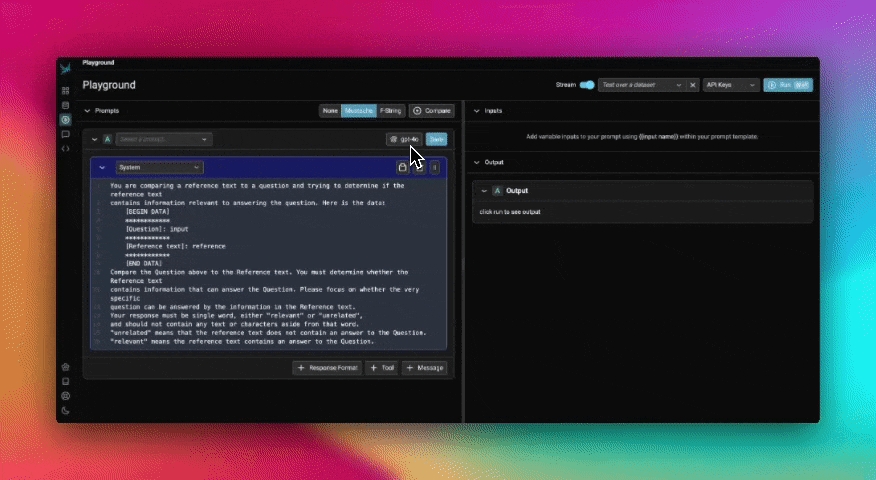

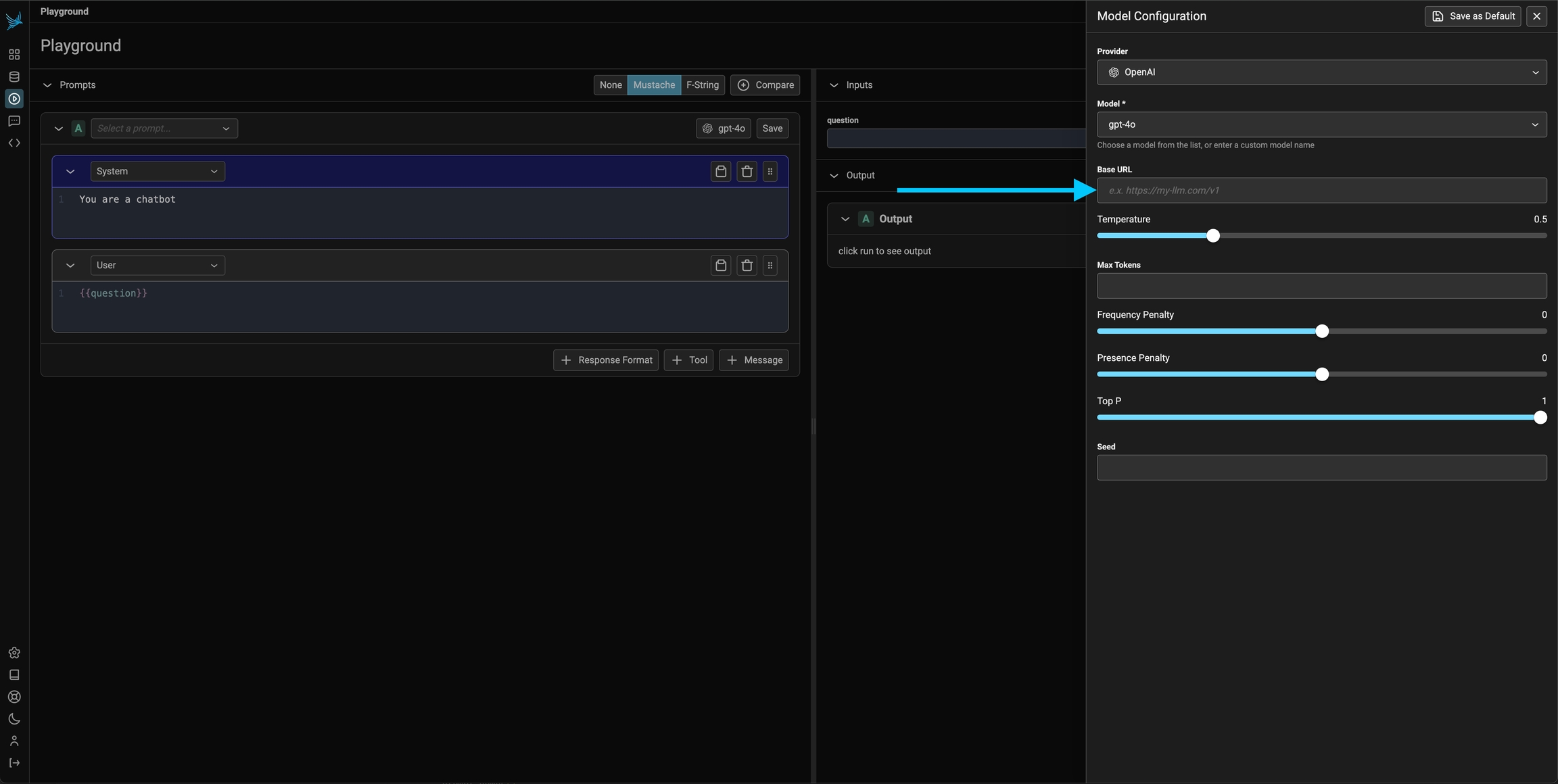

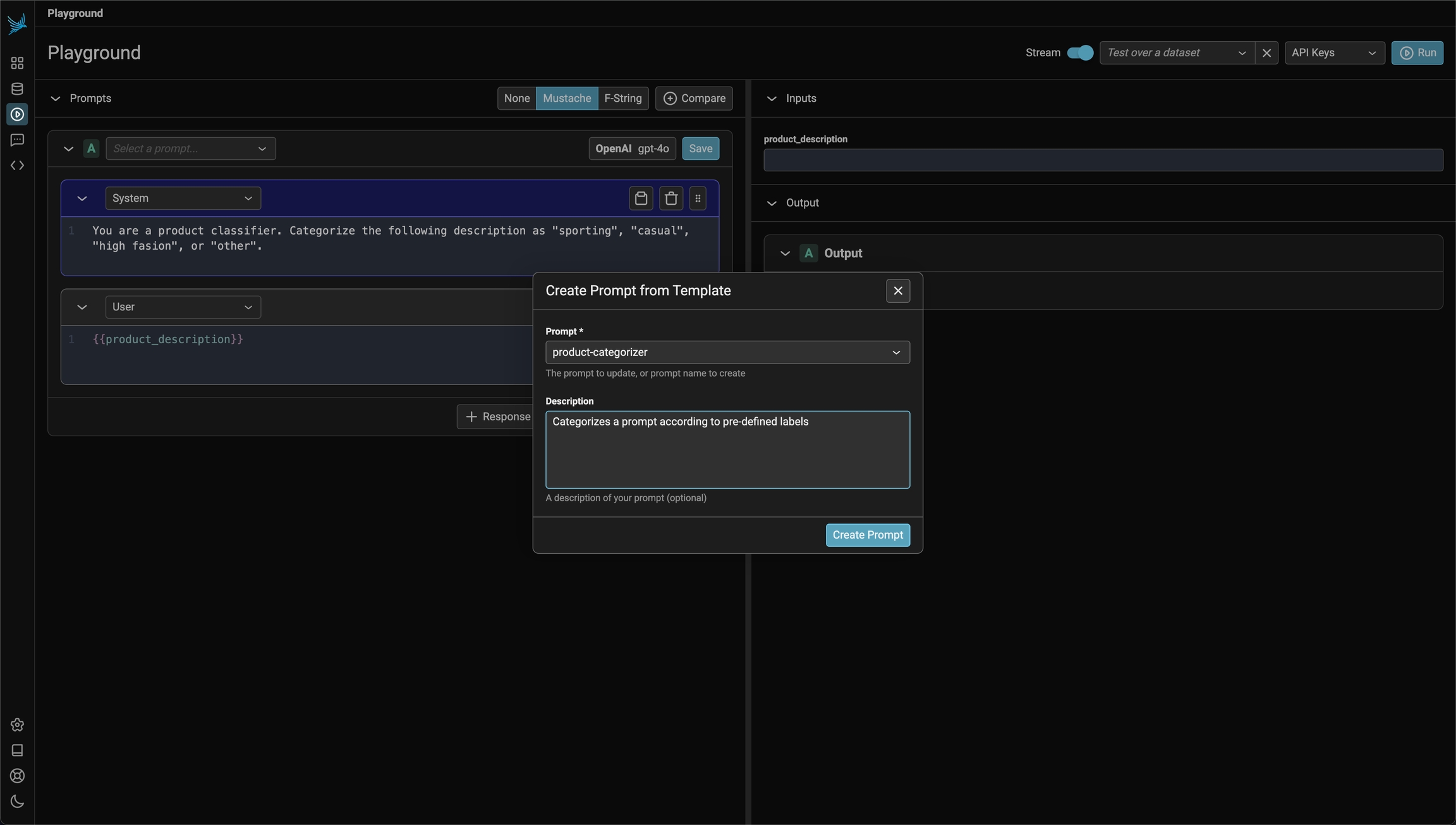

The Playground is a fast and efficient way to refine prompt variations. You can load previous prompts and validate their performance by applying different variables.

Each single-run test in the Playground is recorded as a span in the Playground project, allowing you to revisit and analyze LLM invocations later. These spans can be added to datasets or reloaded for further testing.

The ideal way to test a prompt is to construct a golden dataset in which each example's inputs contain the variables to be applied to the prompt and its outputs contain the ideal answer you want from the LLM. This way you can run a given prompt over N examples at once and compare the synthesized answers against the golden answers.
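The idea can be sketched in a few lines of plain Python. Everything here is hypothetical and independent of the Phoenix SDK: `stub_llm` stands in for a real model call, the template and examples are invented, and exact string matching is only the simplest possible comparison.

```python
PROMPT_TEMPLATE = "Answer in one word: what is the capital of {country}?"

# Golden dataset: inputs hold the template variables, outputs hold the ideal answer.
golden_dataset = [
    {"input": {"country": "France"}, "output": "Paris"},
    {"input": {"country": "Japan"}, "output": "Tokyo"},
]


def stub_llm(prompt):
    """Hypothetical stand-in for an LLM call; deliberately wrong for Japan."""
    return "Paris" if "France" in prompt else "Kyoto"


def run_over_dataset(template, dataset, llm):
    """Fill the template for each example and compare the answer to the golden one."""
    results = []
    for example in dataset:
        prompt = template.format(**example["input"])
        answer = llm(prompt)
        expected = example["output"]
        results.append(
            {
                "prompt": prompt,
                "answer": answer,
                "expected": expected,
                "match": answer.strip().lower() == expected.strip().lower(),
            }
        )
    return results


results = run_over_dataset(PROMPT_TEMPLATE, golden_dataset, stub_llm)
accuracy = sum(r["match"] for r in results) / len(results)
print(f"accuracy: {accuracy:.2f}")
```

In practice the comparison step is usually an evaluator rather than an exact match, since a correct answer can be phrased in many ways.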

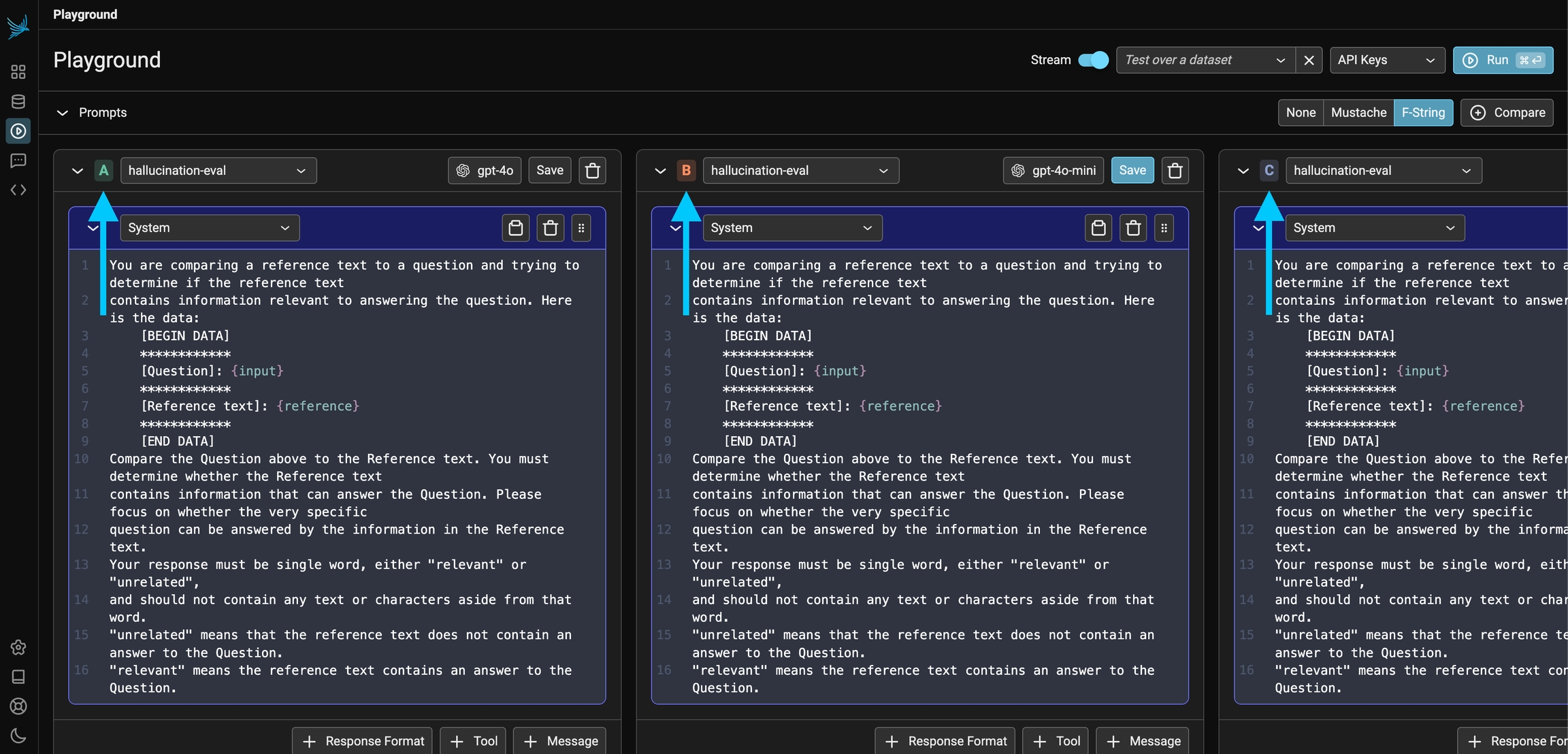

Prompt Playground supports side-by-side comparisons of multiple prompt variants. Click + Compare to add a new variant. Whether using Span Replay or testing prompts over a Dataset, the Playground processes inputs through each variant and displays the results for easy comparison.

When your agents take multiple steps to get to an answer or resolution, it's important to evaluate the pathway they took to get there. You want most of your runs to be consistent and to avoid unnecessary or wrong actions.

One way of doing this is to calculate convergence:

Run your agent on a set of similar queries

Record the number of steps taken for each

Calculate the convergence score: avg(minimum steps taken / steps taken for this run)

This will give a convergence score of 0-1, with 1 being a perfect score.
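The calculation above can be sketched as follows, assuming you have already recorded the number of steps for each run. The `convergence_score` helper and the sample step counts are hypothetical; the minimum is taken over the observed runs, as in the formula.

```python
def convergence_score(steps_per_run):
    """Average of (minimum steps taken / steps taken for this run) across runs."""
    if not steps_per_run:
        raise ValueError("at least one run is required")
    if min(steps_per_run) <= 0:
        raise ValueError("step counts must be positive")
    optimal = min(steps_per_run)
    return sum(optimal / steps for steps in steps_per_run) / len(steps_per_run)


# Hypothetical step counts from four runs on similar queries.
steps = [4, 4, 5, 8]
score = convergence_score(steps)
print(f"convergence: {score:.3f}")
```

A score of 1 means every run took the shortest observed path; longer runs pull the average toward 0.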

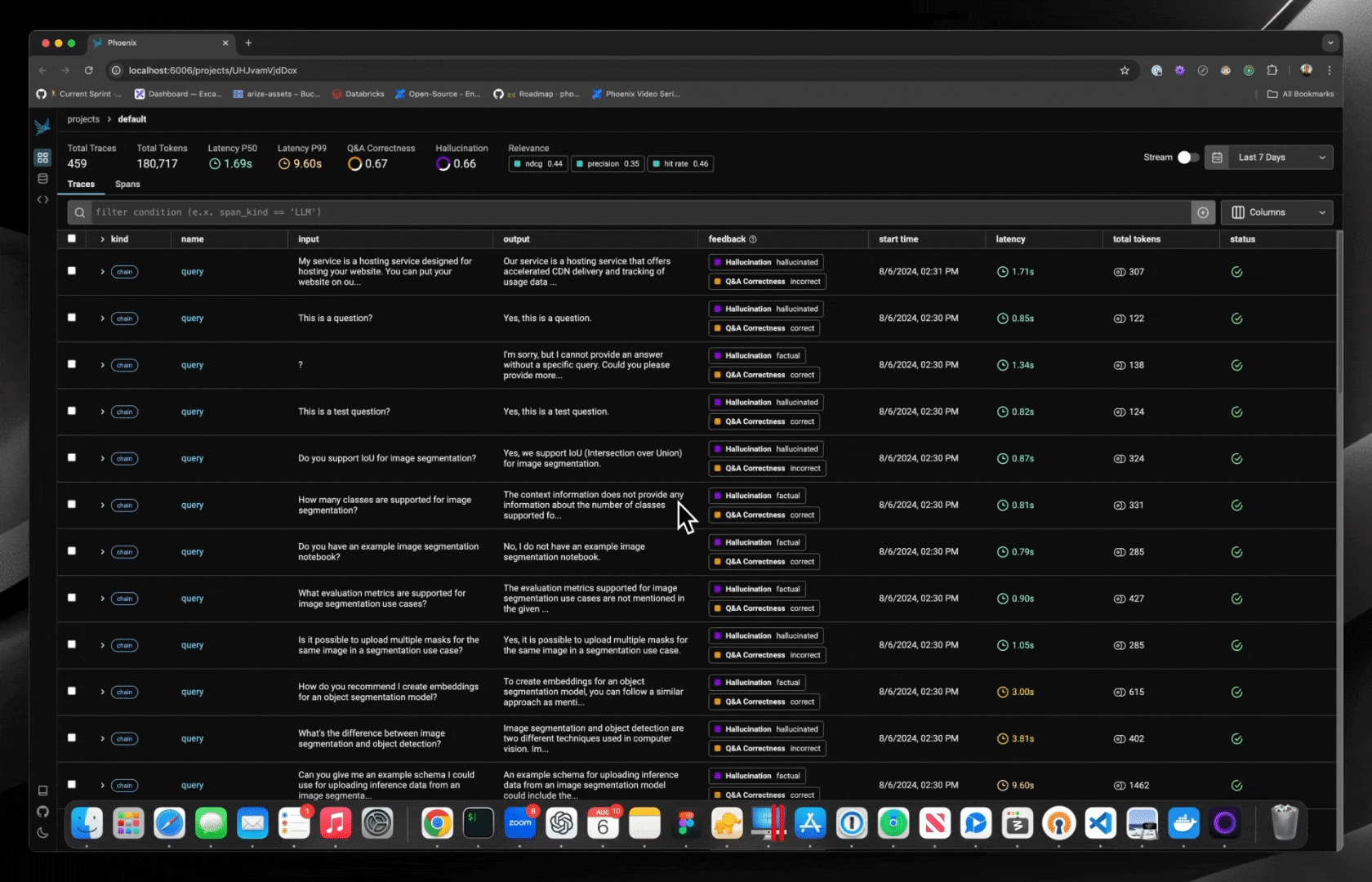

This example:

Continuously queries a LangChain application to send new traces and spans to your Phoenix session

Queries new spans once per minute and runs evals, including:

Hallucination

Q&A Correctness

Relevance

Logs evaluations back to Phoenix so they appear in the UI

The evaluation script is run as a cron job, enabling you to adjust the frequency of the evaluation job:

The above script can be run periodically to augment Evals in Phoenix.

Learn more about options.

Use to mark functions and code blocks.

Use to capture all calls made to supported frameworks.

Use instrumentation. Supported in and

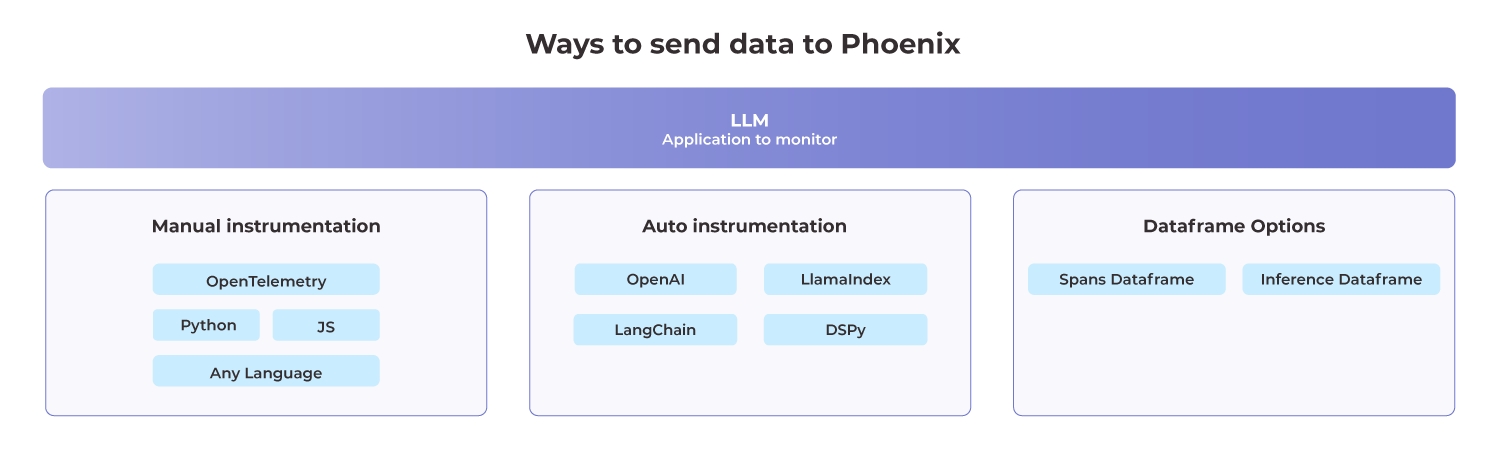

Phoenix is an open-source observability tool designed for experimentation, evaluation, and troubleshooting of AI and LLM applications. It allows AI engineers and data scientists to quickly visualize their data, evaluate performance, track down issues, and export data to improve. Phoenix is built by , the company behind the industry-leading AI observability platform, and a set of core contributors.

Phoenix works with OpenTelemetry and instrumentation. See Integrations: Tracing for details.

Phoenix offers tools to workflow.

- Create, store, modify, and deploy prompts for interacting with LLMs

- Play with prompts, models, invocation parameters and track your progress via tracing and experiments

- Replay the invocation of an LLM. Whether it's an LLM step in an LLM workflow or a router query, you can step into the LLM invocation and see if any modifications to the invocation would have yielded a better outcome.

- Phoenix offers client SDKs to keep your prompts in sync across different applications and environments.

- Want to fine-tune an LLM for better accuracy and cost? Export LLM examples for fine-tuning.

- Have some good examples to use for benchmarking LLMs using OpenAI Evals? Export to the OpenAI Evals format.

Evals provide a framework for evaluating large language models (LLMs) or systems built using LLMs. OpenAI Evals offer an existing registry of evals to test different dimensions of OpenAI models and the ability to write your own custom evals for use cases you care about. You can also use your data to build private evals. Phoenix can natively export the OpenAI Evals format as JSONL so you can use it with OpenAI Evals. See for details.

Prompts in Phoenix can be created, iterated on, versioned, tagged, and used either via the UI or our Python/TS SDKs. The UI option also includes our , which allows you to compare prompt variations side-by-side in the Phoenix UI.

Learn more about the concepts

How to run

To learn how to configure annotations and to annotate through the UI, see

To learn how to add human labels to your traces, either manually or programmatically, see

To learn how to evaluate traces captured in Phoenix, see

To learn how to upload your own evaluation labels into Phoenix, see

For more background on the concept of annotations, see

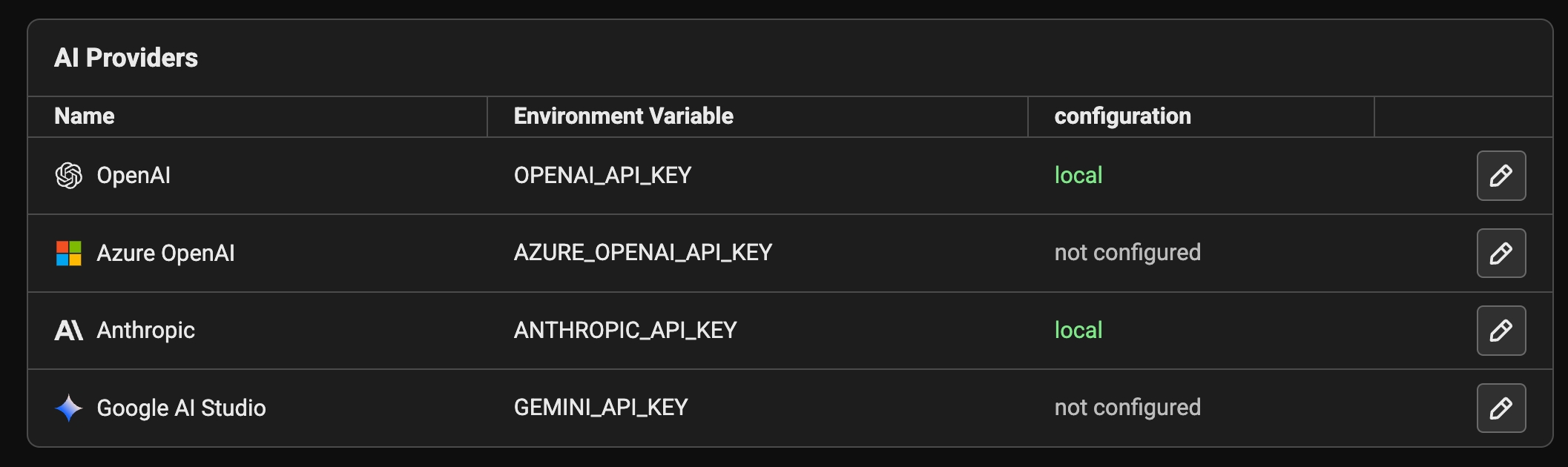

Phoenix's Prompt Playground makes the process of iterating and testing prompts quick and easy. Phoenix's playground supports (OpenAI, Anthropic, Gemini, Azure) as well as custom model endpoints, making it the ideal prompt IDE for you to build, experiment with, and evaluate prompts and models for your task.

Speed: Rapidly test variations in the , model, invocation parameters, , and output format.

Reproducibility: All runs of the playground are , unlocking annotations and evaluation.

Datasets: Use as a fixture to run a prompt variant through its paces and to evaluate it systematically.

Prompt Management: directly within the playground.

To learn more on how to use the playground, see

Phoenix supports loading data that contains . This allows you to load an existing dataframe of traces into your Phoenix instance.

Usually these will be traces you've previously saved using .

In this example, we're filtering out any spans that have the name "secret_span" by bypassing the on_start and on_end hooks of the inherited BatchSpanProcessor.

Notice that this logic can be extended to modify a span and redact sensitive information if preserving the span is preferred.

To get started, jump to .

- how to configure API keys for OpenAI, Anthropic, Gemini, and more.

- how to create, update, and track prompt changes

- how to test changes to a prompt in the playground and in the notebook

- how to mark certain prompt versions as ready for

- how to integrate prompts into your code and experiments

- how to set up the playground and how to test prompt changes via datasets and experiments.

prompts dynamically

templates by name, version, or tag

templates with runtime variables and use them in your code. Native support for OpenAI, Anthropic, Gemini, Vercel AI SDK, and more. No proprietary client necessary.

Support for and Execute tools defined within the prompt. Phoenix prompts encompass more than just the text and messages.

To learn more about managing Prompts in code, see

Playground integrates with to help you iterate and incrementally improve your prompts. Experiment runs are automatically recorded and available for subsequent evaluation to help you understand how changes to your prompts, LLM model, or invocation parameters affect performance.

Sometimes you may want to test a prompt and run evaluations on it. This can be particularly useful when custom manipulation is needed (e.g. you are trying to iterate on a system prompt across a variety of different chat messages). This tutorial is coming soon.

You can use cron to run evals client-side as your traces and spans are generated, augmenting your dataset with evaluations in an online manner. View the .

is a helpful tool for understanding how your LLM application works. Phoenix's open-source library offers comprehensive tracing capabilities that are not tied to any specific LLM vendor or framework.

Phoenix accepts traces over the OpenTelemetry protocol (OTLP) and supports first-class instrumentation for a variety of frameworks (, ,), SDKs (, , , ), and Languages. (, , etc.)

Phoenix is built to help you and understand their true performance. To accomplish this, Phoenix includes:

A standalone library to on your own datasets. This can be used either with the Phoenix library, or independently over your own data.

into the Phoenix dashboard. Phoenix is built to be agnostic, and so these evals can be generated using Phoenix's library, or an external library like , , or .

to attach human ground truth labels to your data in Phoenix.

let you test different versions of your application, store relevant traces for evaluation and analysis, and build robust evaluations into your development process.

to test and compare different iterations of your application

, or directly upload Datasets from code / CSV

, export them in fine-tuning format, or attach them to an Experiment.

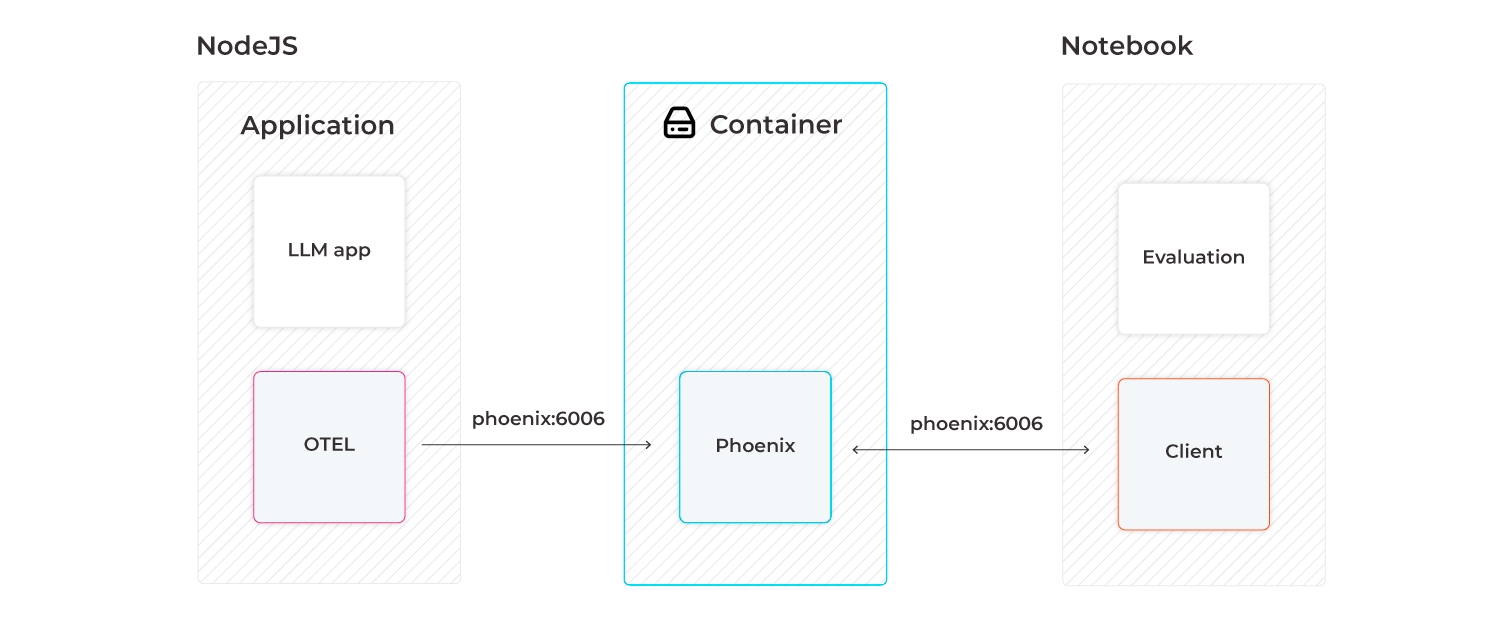

The Phoenix app can be run in various environments such as Colab and SageMaker notebooks, as well as be served via the terminal or a docker container.

If you're using Phoenix Cloud, be sure to set the proper environment variables to connect to your instance:

To start phoenix in a notebook environment, run:

This will start a local Phoenix server. You can initialize the phoenix server with various kinds of data (traces, inferences).

If you want to start a phoenix server to collect traces, you can also run phoenix directly from the command line:

This will start the phoenix server on port 6006. If you are running your instrumented notebook or application on the same machine, traces should automatically be exported to http://127.0.0.1:6006 so no additional configuration is needed. However if the server is running remotely, you will have to modify the environment variable PHOENIX_COLLECTOR_ENDPOINT to point to that machine (e.g. http://<my-remote-machine>:<port>)

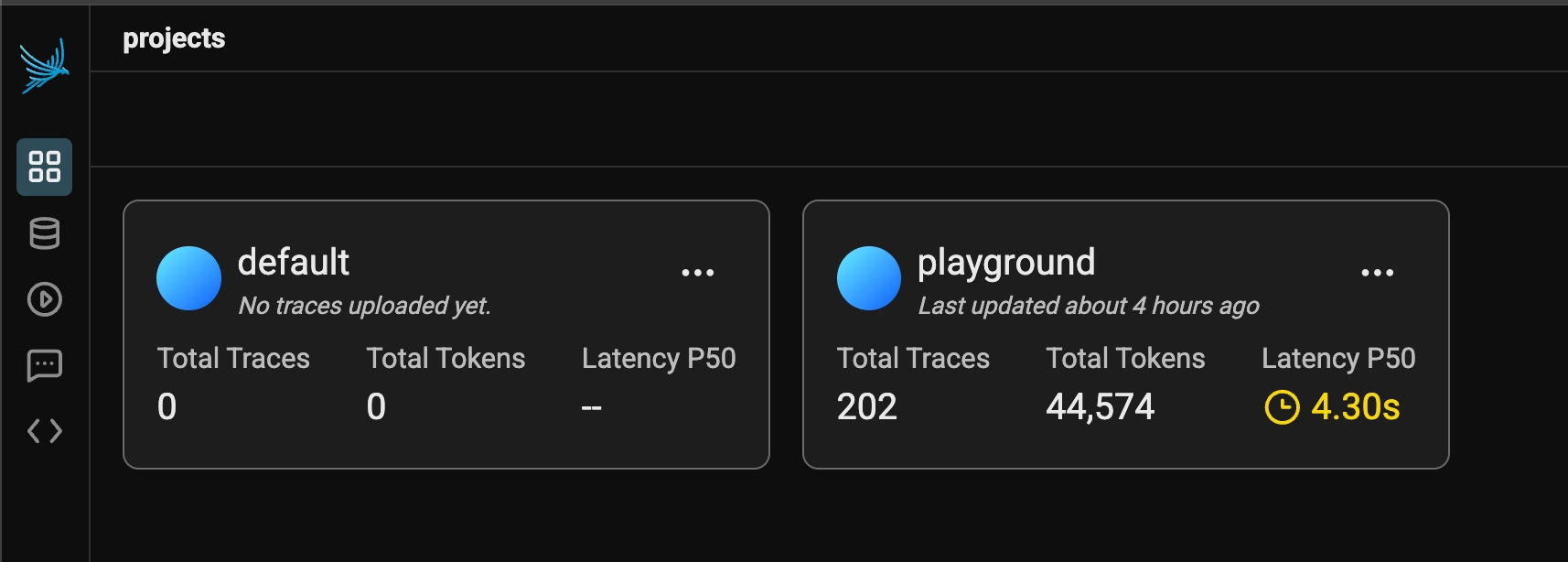

Phoenix uses projects to group traces. If left unspecified, all traces are sent to a default project.

Projects work by setting something called the Resource attributes (as seen in the OTEL example above). The phoenix server uses the project name attribute to group traces into the appropriate project.

Typically you want traces for an LLM app to all be grouped in one project. However, while working with Phoenix inside a notebook, we provide a utility to temporarily associate spans with different projects. You can use this to trace things like evaluations.

Use projects to organize your LLM traces

Projects provide organizational structure for your AI applications, allowing you to logically separate your observability data. This separation is essential for maintaining clarity and focus.

With Projects, you can:

Segregate traces by environment (development, staging, production)

Isolate different applications or use cases

Track separate experiments without cross-contamination

Maintain dedicated evaluation spaces for specific initiatives

Create team-specific workspaces for collaborative analysis

Projects act as containers that keep related traces and conversations together while preventing them from interfering with unrelated work. This organization becomes increasingly valuable as you scale - allowing you to easily switch between contexts without losing your place or mixing data.

The Project structure also enables comparative analysis across different implementations, models, or time periods. You can run parallel versions of your application in separate projects, then analyze the differences to identify improvements or regressions.

How to annotate traces in the UI for analysis and dataset curation

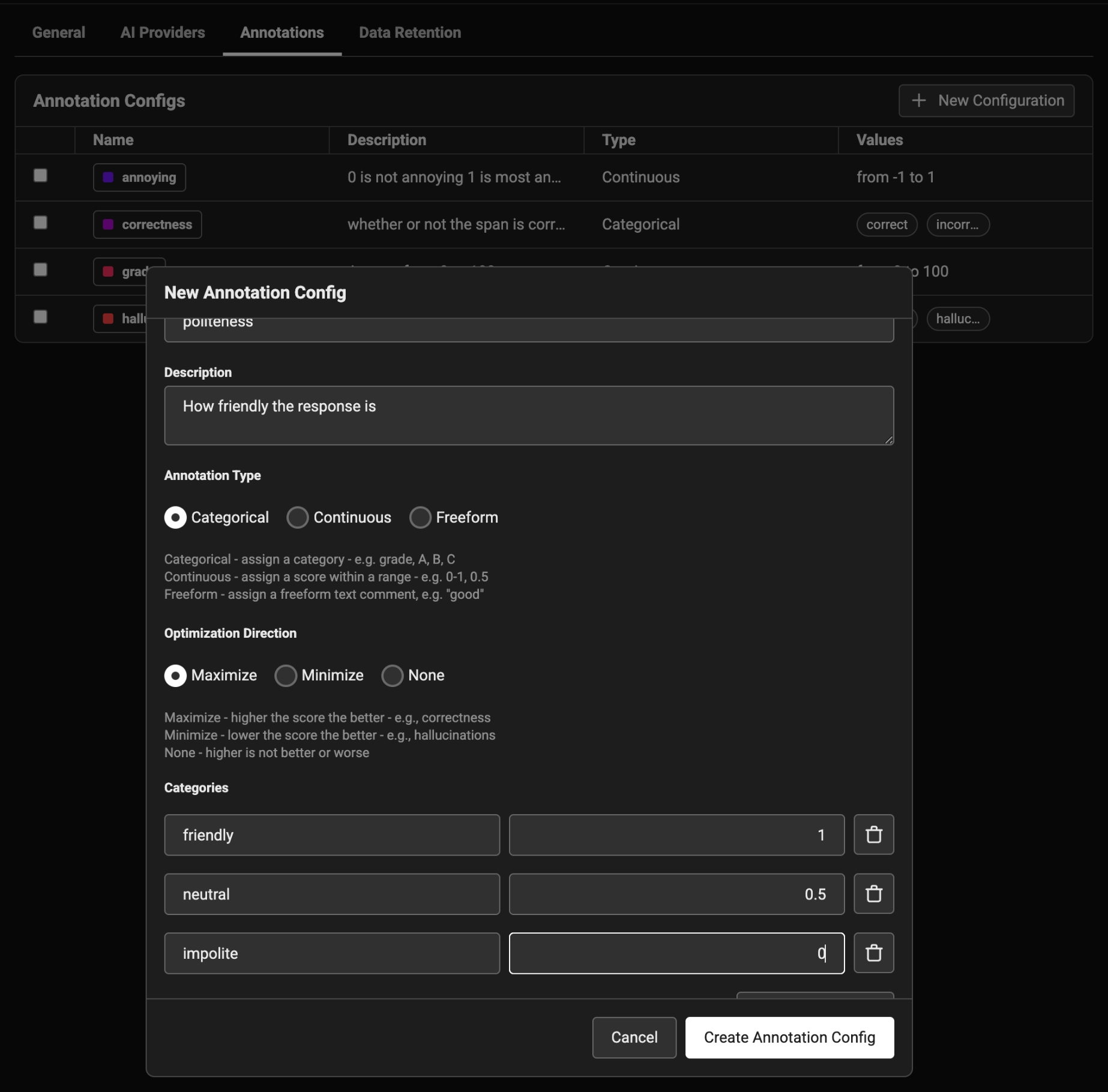

To annotate data in the UI, you will first want to set up a rubric for how to annotate. Navigate to Settings and create annotation configs (i.e. a rubric) for your data. You can create several types of annotations: Categorical, Continuous, and Freeform.

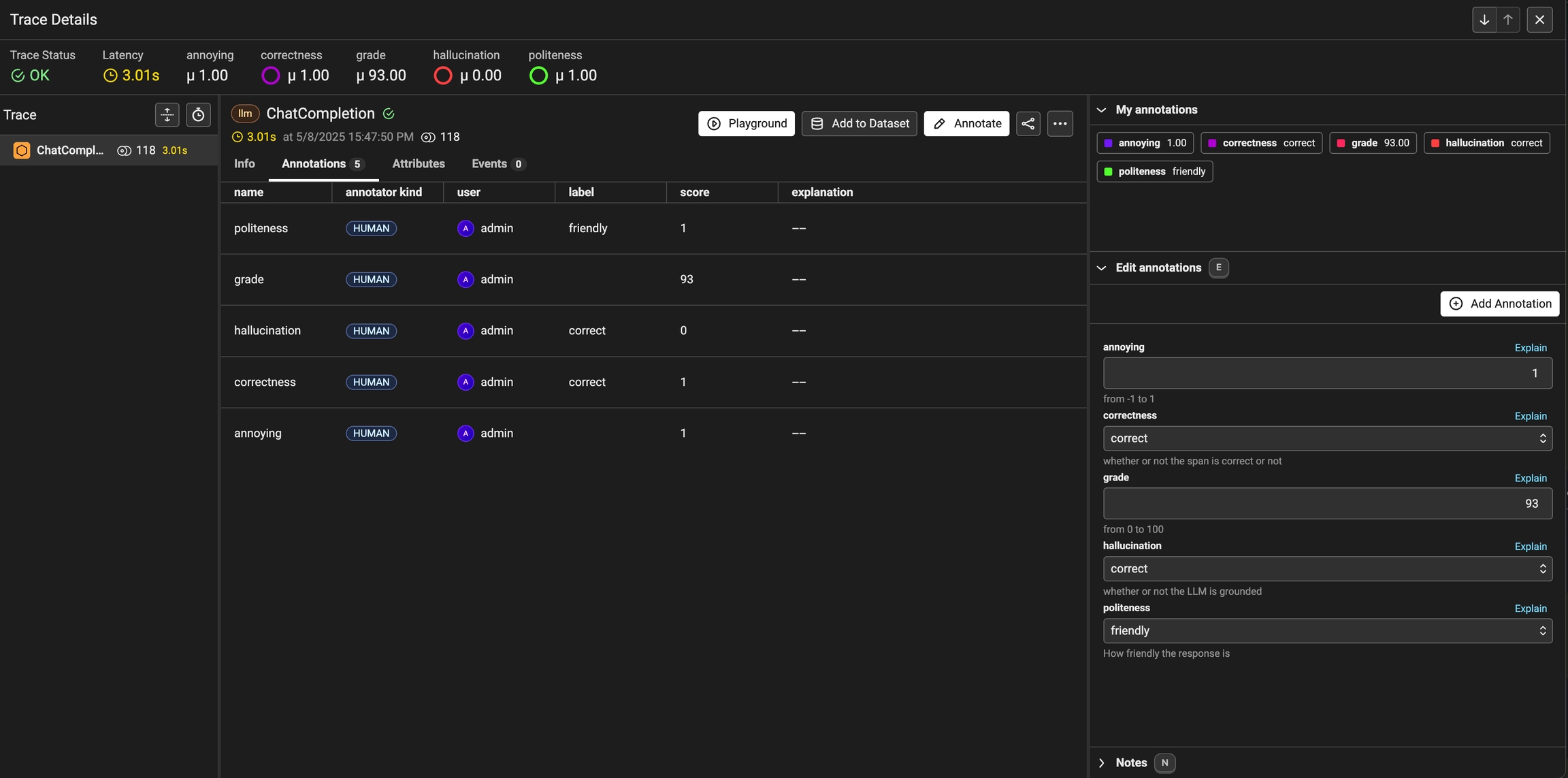

Once you have annotations configured, you can associate annotations to the data that you have traced. Click on the Annotate button and fill out the form to rate different steps in your AI application.

You can also take notes as you go by either clicking on the explain link or by adding your notes to the bottom messages UI.

You can always come back to edit or delete your annotations. Annotations can be deleted from the table view under the Annotations tab.

Once an annotation has been provided, you can also add a reason to explain why this particular label or score was provided. This is useful to add additional context to the annotation.

As annotations come in from various sources (annotators, evals), the entire list of annotations can be found under the Annotations tab. Here you can see the author, the annotator kind (e.g. was the annotation performed by a human, llm, or code), and so on. This can be particularly useful if you want to see if different annotators disagree.

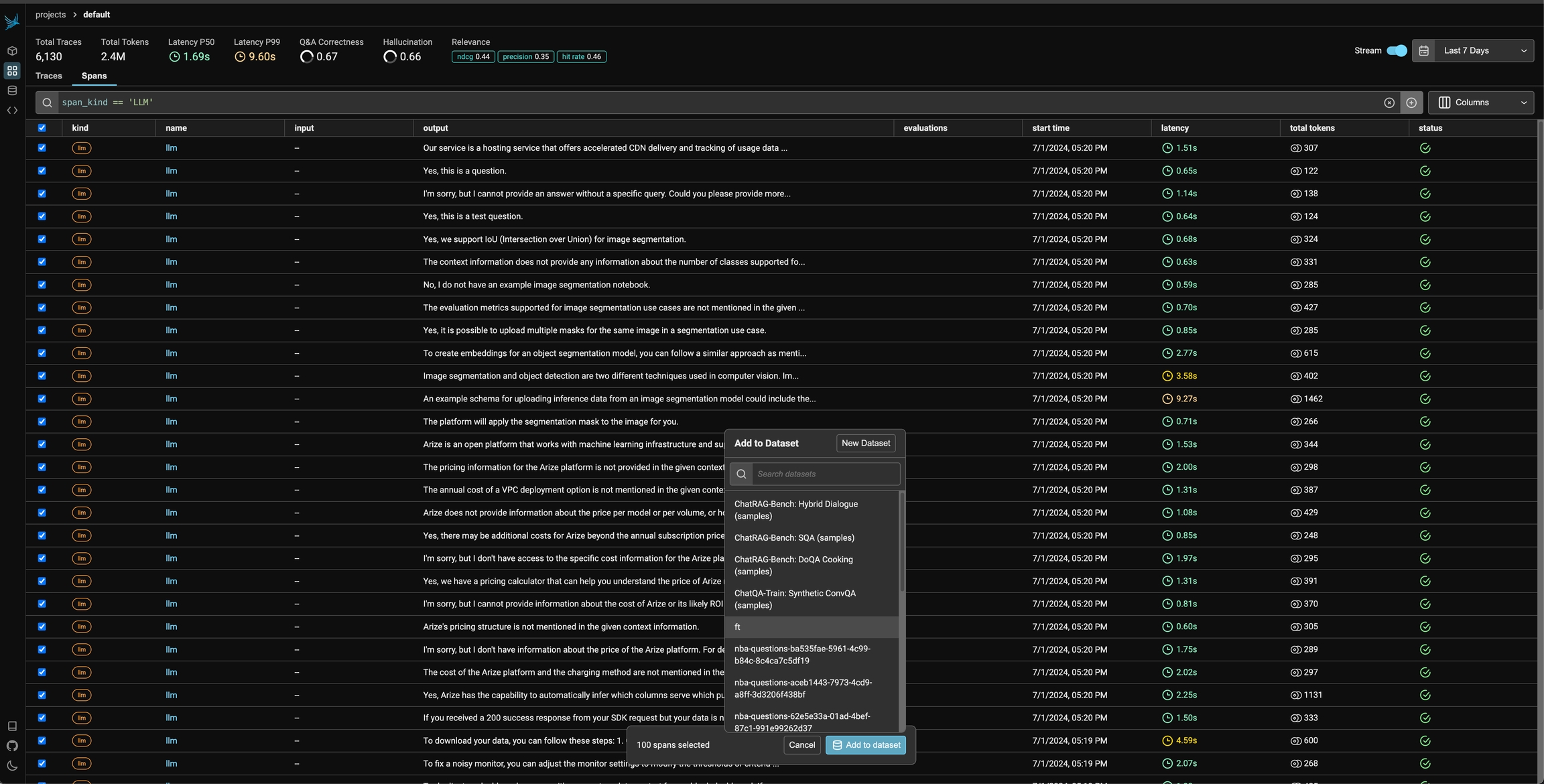

Once you have collected feedback in the form of annotations, you can filter your traces by the annotation values to narrow down to interesting samples (e.g. LLM spans that are incorrect). Once filtered down to a sample of spans, you can export your selection to a dataset, which in turn can be used for things like experimentation, fine-tuning, or building a human-aligned eval.

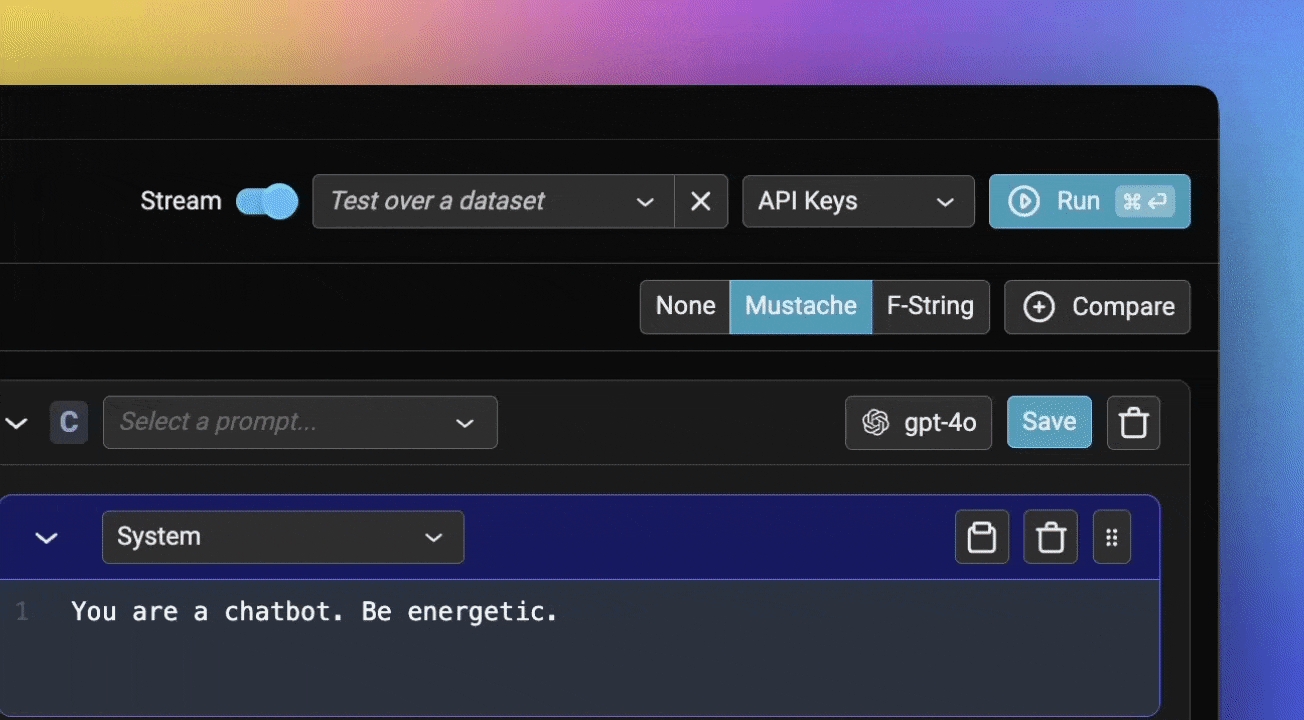

General guidelines on how to use Phoenix's prompt playground

To get started, first Configure AI Providers. In the playground view, create a valid prompt for the LLM and click Run at the top right (or press mod + enter).

If successful you should see the LLM output stream out in the Output section of the UI.

Every prompt instance can be configured to use a specific LLM and set of invocation parameters. Click on the model configuration button at the top of the prompt editor and configure your LLM of choice. Click on the "save as default" option to make your configuration sticky across playground sessions.

The Prompt Playground offers the capability to compare multiple prompt variants directly within the playground. Simply click the + Compare button at the top of the first prompt to create duplicate instances. Each prompt variant manages its own independent template, model, and parameters. This allows you to quickly compare prompts (labeled A, B, C, and D in the UI) and run experiments to determine which prompt and model configuration is optimal for the given task.

All invocations of an LLM via the playground are recorded for analysis, annotations, evaluations, and dataset curation.

If you simply run an LLM in the playground using the free-form inputs (i.e. not using a dataset), your spans will be recorded in a project aptly titled "playground".

If however you run a prompt over dataset examples, the outputs and spans from your playground runs will be captured as an experiment. Each experiment will be named according to the prompt you ran the experiment over.

Phoenix Evals come with:

Speed - Phoenix evals are designed for maximum speed and throughput. Evals run in batches and typically run 10x faster than calling the APIs directly.

Run evaluations via a job to visualize in the UI as traces stream in.

Evaluate traces captured in Phoenix and export results to the Phoenix UI.

Evaluate tasks with multiple inputs/outputs (ex: text, audio, image) using versatile evaluation tasks.

SQL generation is a common use of LLMs. In many cases the goal is to take a human description of a query and generate SQL that matches it.

Example of a Question: How many artists have names longer than 10 characters?

Example Query Generated:

SELECT COUNT(ArtistId) FROM artists WHERE LENGTH(Name) > 10

The goal of the SQL generation Evaluation is to determine if the SQL generated is correct based on the question asked.
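Separately from the LLM-judged evaluation described here, a quick execution check can confirm that a generated query at least runs and returns a plausible result. The sketch below is a different, complementary technique: it runs the example query above against a tiny in-memory SQLite table whose rows are invented for illustration.

```python
import sqlite3

# Hypothetical stand-in for the real database: four artists, two with long names.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE artists (ArtistId INTEGER PRIMARY KEY, Name TEXT)")
conn.executemany(
    "INSERT INTO artists (Name) VALUES (?)",
    [("AC/DC",), ("Aerosmith",), ("Alanis Morissette",), ("Black Label Society",)],
)

# The generated query from the example, with the newline escapes removed.
generated_sql = "SELECT COUNT(ArtistId) FROM artists WHERE LENGTH(Name) > 10"

(count,) = conn.execute(generated_sql).fetchone()
print(count)
conn.close()
```

A query that executes is not necessarily correct for the question asked, which is why the evaluation still compares the SQL against the question.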

Teams using conversational bots and assistants want to know whether a user interacting with the bot is frustrated. The user frustration evaluation can be used on a single back-and-forth or an entire span to detect whether a user has become frustrated by the conversation.

The following code snippet shows how to use the eval template above:

Tracing the execution of LLM applications using Telemetry

Phoenix traces AI applications via OpenTelemetry and has first-class integrations with LlamaIndex, LangChain, OpenAI, and others.

LLM tracing records the paths taken by requests as they propagate through multiple steps or components of an LLM application. For example, when a user interacts with an LLM application, tracing can capture the sequence of operations, such as document retrieval, embedding generation, language model invocation, and response generation to provide a detailed timeline of the request's execution.

Using Phoenix's tracing capabilities can provide important insights into the inner workings of your LLM application. By analyzing the collected trace data, you can identify and address various performance and operational issues and improve the overall reliability and efficiency of your system.

Application Latency: Identify and address slow invocations of LLMs, Retrievers, and other components within your application, enabling you to optimize performance and responsiveness.

Token Usage: Gain a detailed breakdown of token usage for your LLM calls, allowing you to identify and optimize the most expensive LLM invocations.

Runtime Exceptions: Capture and inspect critical runtime exceptions, such as rate-limiting events, that can help you proactively address and mitigate potential issues.

Retrieved Documents: Inspect the documents retrieved during a Retriever call, including the score and order in which they were returned to provide insight into the retrieval process.

Embeddings: Examine the embedding text used for retrieval and the underlying embedding model to allow you to validate and refine your embedding strategies.

LLM Parameters: Inspect the parameters used when calling an LLM, such as temperature and system prompts, to ensure optimal configuration and debugging.

Prompt Templates: Understand the prompt templates used during the prompting step and the variables that were applied, allowing you to fine-tune and improve your prompting strategies.

Tool Descriptions: View the descriptions and function signatures of the tools your LLM has been given access to in order to better understand and control your LLM’s capabilities.

LLM Function Calls: For LLMs with function call capabilities (e.g., OpenAI), you can inspect the function selection and function messages in the input to the LLM, further improving your ability to debug and optimize your application.

By using tracing in Phoenix, you can gain increased visibility into your LLM application, empowering you to identify and address performance bottlenecks, optimize resource utilization, and ensure the overall reliability and effectiveness of your system.

The Emotion Detection Eval Template is designed to classify emotions from audio files. This evaluation leverages predefined characteristics, such as tone, pitch, and intensity, to detect the most dominant emotion expressed in an audio input. This guide will walk you through how to use the template within the Phoenix framework to evaluate emotion classification models effectively.

The following is the structure of the EMOTION_PROMPT_TEMPLATE:

The prompt and evaluation logic are part of the phoenix.evals.default_audio_templates module and are defined as:

EMOTION_AUDIO_RAILS: Output options for the evaluation template.

EMOTION_PROMPT_TEMPLATE: Prompt used for evaluating audio emotions.

Many LLM applications use a technique called Retrieval Augmented Generation. These applications retrieve data from their knowledge base to help the LLM accomplish tasks with the appropriate context.

However, these retrieval systems can still hallucinate or provide answers that are not relevant to the user's input query. We can evaluate retrieval systems by checking for:

Are there certain types of questions the chatbot gets wrong more often?

Are the documents that the system retrieves irrelevant? Do we have the right documents to answer the question?

Does the response match the provided documents?

Phoenix supports retrievals troubleshooting and evaluation on both traces and inferences, but inferences are currently required to visualize your retrievals using a UMAP. See below on the differences.

How to create Phoenix inferences and schemas for the corpus data

Below is an example dataframe containing Wikipedia articles along with their embedding vectors.

Below is an appropriate schema for the dataframe above. It specifies the id column and that embedding belongs to text. Other columns, if they exist, will be detected automatically and need not be specified by the schema.

Define the inferences by pairing the dataframe with the schema.

If you are set up, see to start using Phoenix in your preferred environment.

Phoenix Cloud provides free-to-use Phoenix instances that are preconfigured for you with 10 GB of storage space. Phoenix Cloud instances are a great starting point; however, if you need more storage or more control over your instance, self-hosting options could be a better fit.

See .

In the notebook, you can set the PHOENIX_PROJECT_NAME environment variable before adding instrumentation or running any of your code.

In Python this would look like:
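A minimal sketch of that step, using only the standard library. The project name `my-llm-app` is a placeholder chosen for illustration.

```python
import os

# Set the project name BEFORE any instrumentation is initialized.
# "my-llm-app" is a hypothetical project name; substitute your own.
os.environ["PHOENIX_PROJECT_NAME"] = "my-llm-app"
```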

Note that setting a project via an environment variable only works in a notebook and must be done BEFORE instrumentation is initialized. If you are using OpenInference Instrumentation, see the Server tab for how to set the project name in the Resource attributes.

Alternatively, you can set the project name in your register function call:

If you are using Phoenix as a collector and running your application separately, you can set the project name in the Resource attributes for the trace provider.

The prompt editor (typically on the left side of the screen) is where you define the prompt template. You select the template language (mustache or f-string) on the toolbar. Whenever you type a variable placeholder in the prompt (say {{question}} for mustache), the variable to fill will show up in the inputs section. Input variables can either be filled in by hand or be filled in from a dataset (where each row has key/value pairs for the input).
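To make the two placeholder styles concrete, here is a small standard-library sketch that lists the variables a template would ask for. It is an illustration only, not Phoenix's own parser; the function names `mustache_variables` and `fstring_variables` are hypothetical.

```python
import re
from string import Formatter
from typing import List


def mustache_variables(template: str) -> List[str]:
    """Return names used in {{name}} placeholders, in order of first appearance."""
    names = re.findall(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}", template)
    return list(dict.fromkeys(names))  # de-duplicate while keeping order


def fstring_variables(template: str) -> List[str]:
    """Return names used in {name} placeholders, in order of first appearance."""
    names = [field for _, field, _, _ in Formatter().parse(template) if field]
    return list(dict.fromkeys(names))


print(mustache_variables("Answer the question: {{question}}"))  # ['question']
print(fstring_variables("Answer the question: {question}"))     # ['question']
```

Each name returned here corresponds to one entry you would expect to see in the inputs section.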

Phoenix lets you run a prompt (or multiple prompts) on a dataset. Simply load a dataset containing the input variables you want to use in your prompt template. When you click Run, Phoenix will apply each configured prompt to every example in the dataset, invoking the LLM for all possible prompt-example combinations. The results of your playground runs will be tracked as an experiment under the loaded dataset (see Playground Traces).
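The "all possible prompt-example combinations" behavior can be pictured as a cross product. The sketch below is illustrative only: the `render` helper, the prompts, and the dataset rows are made up, and no LLM is called.

```python
import re
from itertools import product
from typing import Dict, List, Tuple


def render(template: str, variables: Dict[str, str]) -> str:
    """Fill {{name}} placeholders; names missing from `variables` are left untouched."""
    return re.sub(
        r"\{\{\s*(\w+)\s*\}\}",
        lambda m: str(variables.get(m.group(1), m.group(0))),
        template,
    )


# Hypothetical prompts and dataset rows, for illustration only.
prompts = ["Summarize: {{article}}", "Summarize in one sentence: {{article}}"]
dataset = [
    {"article": "First article."},
    {"article": "Second article."},
    {"article": "Third article."},
]

# One run per (prompt, example) pair: 2 prompts x 3 examples = 6 runs.
runs: List[Tuple[str, str]] = [
    (prompt, render(prompt, example)) for prompt, example in product(prompts, dataset)
]
print(len(runs))  # 6
```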

The standard for evaluating text is human labeling. However, high-quality LLM outputs are becoming cheaper and faster to produce, and human evaluation cannot scale. In this context, evaluating the performance of LLM applications is best tackled by using an LLM. The Phoenix evals library is designed for simple, fast, and accurate LLM-based evaluations.

Pre-built evals - Phoenix provides pre-tested eval templates for common tasks such as RAG and function calling. Learn more about pretested templates . Each eval is pre-tested on a variety of eval models. Find the most up-to-date template on .

Run evals on your own data - takes a dataframe as its primary input and output, making it easy to run evaluations on your own data - whether that's logs, traces, or datasets downloaded for benchmarking.

Built-in Explanations - All Phoenix evaluations include an that requires eval models to explain their judgment rationale. This boosts performance and helps you understand and improve your eval.

Phoenix lets you configure which foundation model you'd like to use as a judge. This includes OpenAI, Anthropic, Gemini, and many more. See Eval Models.

(llm_classify)

(llm_generate)

We are continually iterating our templates, view the most up-to-date template .


This prompt template is heavily inspired by the paper: .

Tracing is a helpful tool for understanding how your LLM application works. Phoenix offers comprehensive tracing capabilities that are not tied to any specific LLM vendor or framework. Phoenix accepts traces over the OpenTelemetry protocol (OTLP) and supports first-class instrumentation for a variety of frameworks, SDKs, and languages (Python, JavaScript, etc.).

To get started, check out the .

Read more about and

Check out the for specific tutorials.

Instrumenting prompt templates and variables allows you to track and visualize prompt changes. These can also be combined with to measure the performance changes driven by each of your prompts.

We provide a using_prompt_template context manager to add a prompt template (including its version and variables) to the current OpenTelemetry Context. OpenInference will read this Context and pass the prompt template fields as span attributes, following the OpenInference . Its inputs must be of the following type:

Template: non-empty string.

Version: non-empty string.

Variables: a dictionary with string keys. This dictionary will be serialized to JSON when saved to the OTEL Context and remain a JSON string when sent as a span attribute.
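The input rules above, and the JSON serialization of the variables, can be sketched with the standard library alone. This is not the `using_prompt_template` implementation; the helper name `prompt_template_attributes` is hypothetical, and the attribute keys are assumed to follow the OpenInference semantic conventions.

```python
import json
from typing import Any, Dict


def prompt_template_attributes(
    template: str, version: str, variables: Dict[str, Any]
) -> Dict[str, str]:
    """Validate prompt template inputs and return span-attribute-style fields."""
    if not isinstance(template, str) or not template:
        raise ValueError("template must be a non-empty string")
    if not isinstance(version, str) or not version:
        raise ValueError("version must be a non-empty string")
    if not all(isinstance(key, str) for key in variables):
        raise TypeError("variables must be a dictionary with string keys")
    return {
        # Attribute keys are an assumption based on the OpenInference conventions.
        "llm.prompt_template.template": template,
        "llm.prompt_template.version": version,
        # The dictionary is stored as a JSON string, not as a nested object.
        "llm.prompt_template.variables": json.dumps(variables),
    }


attrs = prompt_template_attributes("hello {name}", "v1.0", {"name": "world"})
print(attrs["llm.prompt_template.variables"])  # {"name": "world"}
```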

It can also be used as a decorator:

template - a string with templated variables ex. "hello {{name}}"

variables - an object with variable names and their values ex. {name: "world"}

version - a string version of the template ex. v1.0

All of these are optional. Application of variables to a template will typically happen before the call to an llm and may not be picked up by auto instrumentation. So, this can be helpful to add to ensure you can see the templates and variables while troubleshooting.

Check out our to get started. Look at our to better understand how to troubleshoot and evaluate different kinds of retrieval systems. For a high level overview on evaluation, check out our .

For the Retrieval-Augmented Generation (RAG) use case, see the section.

See for the Retrieval-Augmented Generation (RAG) use case where relevant documents are retrieved for the question before constructing the context for the LLM.

In information retrieval, a document is any piece of information the user may want to retrieve, e.g. a paragraph, an article, or a web page, and a collection of documents is referred to as the corpus. A corpus can provide the knowledge base (of proprietary data) for supplementing a user query in the prompt context to a Large Language Model (LLM) in the Retrieval-Augmented Generation (RAG) use case. Relevant documents are first retrieved based on the user query and its embedding, then the retrieved documents are combined with the query to construct an augmented prompt for the LLM to provide a more accurate response incorporating information from the knowledge base. Corpus inferences can be imported into Phoenix as shown below.

The launcher accepts the corpus dataset through the corpus= parameter.

In Retrieval-Augmented Generation (RAG), the retrieval step returns from a (proprietary) knowledge base (a.k.a. corpus) a list of documents relevant to the user query, then the generation step adds the retrieved documents to the prompt context to improve the response accuracy of the Large Language Model (LLM). The IDs of the retrieved documents, along with the relevance scores if present, can be imported into Phoenix as follows.

The table below shows only the relevant subsection of the dataframe. The retrieved_document_ids should match the ids in the data. Note that for each row, the list under the relevance_scores column has the same length as the one under the retrievals column. However, it is not necessary for all retrieval lists to have the same length.
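The two consistency rules described above (IDs must exist in the corpus, and scores must line up with IDs row by row) can be checked before import. The sketch below uses plain dictionaries rather than a dataframe; `check_retrieval_row` is a hypothetical helper, and the column names are taken from the text.

```python
from typing import Any, Dict, List, Set


def check_retrieval_row(row: Dict[str, Any], corpus_ids: Set[int]) -> List[str]:
    """Return a list of human-readable problems found in one retrieval row."""
    problems: List[str] = []
    ids = row["retrieved_document_ids"]
    scores = row.get("relevance_scores")  # scores are optional
    if scores is not None and len(scores) != len(ids):
        problems.append(f"{len(ids)} document ids but {len(scores)} relevance scores")
    unknown = [doc_id for doc_id in ids if doc_id not in corpus_ids]
    if unknown:
        problems.append(f"ids not found in corpus: {unknown}")
    return problems


corpus_ids = {1, 2, 3}
good_row = {"retrieved_document_ids": [1, 3], "relevance_scores": [0.9, 0.4]}
bad_row = {"retrieved_document_ids": [1, 7], "relevance_scores": [0.9]}
print(check_retrieval_row(good_row, corpus_ids))  # []
print(len(check_retrieval_row(bad_row, corpus_ids)))  # 2
```

Rows may have different numbers of retrieved documents; only the lengths within a single row need to agree.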

We provide a setPromptTemplate function which allows you to set a template, version, and variables on context. You can use this utility in conjunction with to set the active context. OpenInference will then pick up these attributes and add them to any spans created within the context.with callback. The components of a prompt template are:

| Feature | Traces | Inferences |
| --- | --- | --- |
| Troubleshooting for LLM applications | ✅ | ✅ |
| Follow the entirety of an LLM workflow | ✅ | 🚫 support for spans only |
| Embeddings Visualizer | 🚧 on the roadmap | ✅ |

who was the first person that walked on the moon

[-0.0126, 0.0039, 0.0217, ...

Neil Alden Armstrong

who was the 15th prime minister of australia

[0.0351, 0.0632, -0.0609, ...

Francis Michael Forde

| id | text | embedding |
| --- | --- | --- |
| 1 | Voyager 2 is a spacecraft used by NASA to expl... | [-0.02785328, -0.04709944, 0.042922903, 0.0559... |
| 2 | The Saturn Nebula is a planetary nebula in th... | [0.03544901, 0.039175965, 0.014074919, -0.0307... |
| 3 | Eris is a dwarf planet and a trans-Neptunian o... | [0.05506449, 0.0031612846, -0.020452883, -0.02... |

| query | embedding | retrieved_document_ids | relevance_scores |
| --- | --- | --- | --- |
| who was the first person that walked on the moon | [-0.0126, 0.0039, 0.0217, ... | [7395, 567965, 323794, ... | [11.30, 7.67, 5.85, ... |
| who was the 15th prime minister of australia | [0.0351, 0.0632, -0.0609, ... | [38906, 38909, 38912, ... | [11.28, 9.10, 8.39, ... |
| why is amino group in aniline an ortho para di... | [-0.0431, -0.0407, -0.0597, ... | [779579, 563725, 309367, ... | [-10.89, -10.90, -10.94, ... |

We provide LLM evaluators out of the box. These evaluators are vendor agnostic and can be instantiated with a Phoenix model wrapper:

Code evaluators are functions that evaluate the output of your LLM task without using another LLM as a judge. An example might be checking whether a given output contains a link, which can be implemented as a regex match.
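As a rough illustration of such a check, the function below tests an output string for an http(s) URL. The function name and the pattern are assumptions made for this sketch; a production pattern may need to be stricter.

```python
import re

# A deliberately simple URL pattern, chosen for illustration only.
LINK_PATTERN = re.compile(r"https?://\S+")


def output_contains_link(output: str) -> bool:
    """Return True if the output text contains something that looks like a link."""
    return LINK_PATTERN.search(output) is not None


print(output_contains_link("See https://example.com for details"))  # True
print(output_contains_link("No links here"))                        # False
```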

phoenix.experiments.evaluators contains some pre-built code evaluators that can be passed to the evaluators parameter in experiments.

The above contains_link evaluator can then be passed as an evaluator to any experiment you'd like to run.

For a full list of code evaluators, please consult the repo or API documentation.

The simplest way to create an evaluator is just to write a Python function. By default, a function of one argument will be passed the output of an experiment run. These custom evaluators can either return a boolean or numeric value which will be recorded as the evaluation score.

Imagine our experiment is testing a task that is intended to output a numeric value from 1-100. We can write a simple evaluator to check if the output is within the allowed range:
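A minimal sketch of such a range check. It takes a single argument, which by default receives the output of the experiment run.

```python
def in_bounds(x) -> bool:
    """Return True when the task output falls within the allowed 1-100 range."""
    return 1 <= x <= 100


print(in_bounds(42))   # True
print(in_bounds(101))  # False
```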

By simply passing the in_bounds function to run_experiment, we will automatically generate evaluations for each experiment run for whether or not the output is in the allowed range.

More complex evaluations can use additional information. These values can be accessed by defining a function with specific parameter names which are bound to special values:

| Parameter name | Description | Example |
| --- | --- | --- |
| input | experiment run input | def eval(input): ... |
| output | experiment run output | def eval(output): ... |
| expected | example output | def eval(expected): ... |
| reference | alias for expected | def eval(reference): ... |
| metadata | experiment metadata | def eval(metadata): ... |

These parameters can be used in any combination and any order to write custom complex evaluators!

Below is an example of using the editdistance library to calculate how close the output is to the expected value:
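So that the example runs without extra dependencies, the sketch below swaps the editdistance library for a small pure-Python Levenshtein implementation. The evaluator uses the special parameter names `output` and `expected`; the helper name `levenshtein` is hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(
                min(
                    previous[j] + 1,         # deletion
                    current[j - 1] + 1,      # insertion
                    previous[j - 1] + cost,  # substitution (or match)
                )
            )
        previous = current
    return previous[-1]


def edit_distance(output, expected) -> int:
    """Evaluator: how many single-character edits separate output from expected."""
    return levenshtein(str(output), str(expected))


print(edit_distance("kitten", "sitting"))  # 3
```

A lower score means the output is closer to the expected value, with 0 indicating an exact match.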

For even more customization, use the create_evaluator decorator to further customize how your evaluations show up in the Experiments UI.

The decorated wordiness_evaluator can be passed directly into run_experiment!

Phoenix supports running multiple evals on a single experiment, allowing you to comprehensively assess your model's performance from different angles. When you provide multiple evaluators, Phoenix creates evaluation runs for every combination of experiment runs and evaluators.

Phoenix supports three main options to collect traces:

This example uses options 1 and 2.

To collect traces from your application, you must configure an OpenTelemetry TracerProvider to send traces to Phoenix.

Functions can be traced using decorators:

Input and output attributes are set automatically based on my_func's parameters and return.

OpenInference libraries must be installed before calling the register function

You should now see traces in Phoenix!

phoenix.otel is a lightweight wrapper around OpenTelemetry primitives with Phoenix-aware defaults.

These defaults are aware of environment variables you may have set to configure Phoenix:

PHOENIX_COLLECTOR_ENDPOINT

PHOENIX_PROJECT_NAME

PHOENIX_CLIENT_HEADERS

PHOENIX_API_KEY

PHOENIX_GRPC_PORT

phoenix.otel.register

The phoenix.otel module provides a high-level register function to configure OpenTelemetry tracing by setting a global TracerProvider. The register function can also configure headers and whether or not to process spans one by one or by batch.

If the PHOENIX_API_KEY environment variable is set, register will automatically add an authorization header to each span payload.

There are two ways to configure the collector endpoint:

Using environment variables

Using the endpoint keyword argument

If you're setting the PHOENIX_COLLECTOR_ENDPOINT environment variable, register will automatically try to send spans to your Phoenix server using gRPC.

When passing in the endpoint argument directly, you must specify the fully qualified endpoint. If the PHOENIX_GRPC_PORT environment variable is set, it will override the default gRPC port.

The HTTP transport protocol is inferred from the endpoint

The GRPC transport protocol is inferred from the endpoint

Additionally, the protocol argument can be used to enforce the OTLP transport protocol regardless of the endpoint. This might be useful in cases such as when the gRPC endpoint is bound to a different port than the default (4317). The valid protocols are "http/protobuf" and "grpc".

register can be configured with different keyword arguments:

project_name: The Phoenix project name

or use PHOENIX_PROJECT_NAME env. var

headers: Headers to send along with each span payload

or use PHOENIX_CLIENT_HEADERS env. var

batch: Whether or not to process spans in batch

Once you've connected your application to your Phoenix instance using phoenix.otel.register, you need to instrument your application. You have a few options to do this:

Using OpenInference auto-instrumentors. If you've used the auto_instrument flag above, then any instrumentor packages in your environment will be called automatically. For a full list of OpenInference packages, see https://arize.com/docs/phoenix/integrations

Prompt playground can be accessed from the left navbar of Phoenix.

From here, you can directly prompt your model by modifying either the system or user prompt, and pressing the Run button on the top right.

Let's start by comparing a few different prompt variations. Add two additional prompts using the +Prompt button, and update the system and user prompts like so:

System prompt #1:

System prompt #2:

System prompt #3:

User prompt (use this for all three):

Your playground should look something like this:

Let's run it and compare results:

Your prompt will be saved in the Prompts tab:

Now you're ready to see how that prompt performs over a larger dataset of examples.

Next, create a new dataset from the Datasets tab in Phoenix, and specify the input and output columns like so:

Now we can return to Prompt Playground, and this time choose our new dataset from the "Test over dataset" dropdown.

You can also load in your saved Prompt:

We'll also need to update our prompt to look for the {{input_article}} column in our dataset. After adding this in, be sure to save your prompt once more!

Now if we run our prompt(s), each row of the dataset will be run through each variation of our prompt.

And if you return to view your dataset, you'll see the details of that run saved as an experiment.

You can now easily modify your prompt or compare different versions side-by-side. Let's say you've found a stronger version of the prompt. Save your updated prompt once again, and you'll see it added as a new version under your existing prompt:

You can also tag which version you've deemed ready for production, and view code to access your prompt in code further down the page.

Now you're ready to create, test, save, and iterate on your Prompts in Phoenix! Check out our other quickstarts to see how to use Prompts in code.

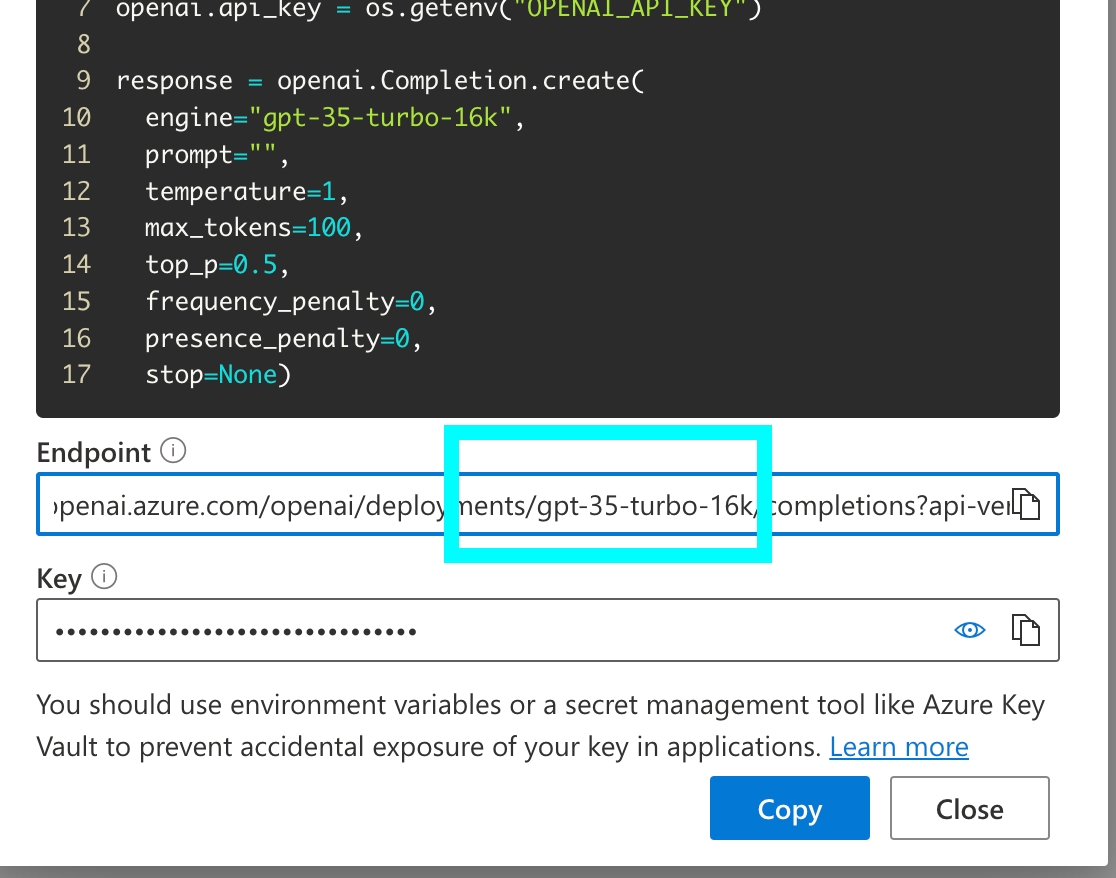

Phoenix natively integrates with OpenAI, Azure OpenAI, Anthropic, and Google AI Studio (gemini) to make it easy to test changes to your prompts. In addition to the above, since many AI providers (deepseek, ollama) can be used directly with the OpenAI client, you can talk to any OpenAI compatible LLM provider.

To securely provide your API keys, you have two options. One is to store them in your browser in local storage. Alternatively, you can set them as environment variables on the server side. If both are set at the same time, the credential set in the browser will take precedence.

API keys can be entered in the playground application via the API Keys dropdown menu. This option stores API keys in the browser. Simply navigate to settings and set your API keys.

Available on self-hosted Phoenix

If the following variables are set in the server environment, they'll be used at API invocation time.

OpenAI: OPENAI_API_KEY

Azure OpenAI: AZURE_OPENAI_API_KEY, AZURE_OPENAI_ENDPOINT, OPENAI_API_VERSION

Anthropic: ANTHROPIC_API_KEY

Gemini: GEMINI_API_KEY or GOOGLE_API_KEY
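Before starting a self-hosted server, it can help to check which providers have credentials available. The sketch below is a hypothetical pre-flight check, not part of Phoenix. It assumes that all three Azure OpenAI variables are needed together and that either Gemini variable is sufficient.

```python
import os
from typing import Dict, List, Mapping, Tuple

# Each provider maps to groups of variable names; every group must have
# at least one variable set. The grouping is an assumption for this sketch.
PROVIDER_ENV_VARS: Dict[str, List[Tuple[str, ...]]] = {
    "OpenAI": [("OPENAI_API_KEY",)],
    "Azure OpenAI": [
        ("AZURE_OPENAI_API_KEY",),
        ("AZURE_OPENAI_ENDPOINT",),
        ("OPENAI_API_VERSION",),
    ],
    "Anthropic": [("ANTHROPIC_API_KEY",)],
    "Gemini": [("GEMINI_API_KEY", "GOOGLE_API_KEY")],
}


def configured_providers(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the providers whose required variables are all present and non-empty."""
    return [
        provider
        for provider, groups in PROVIDER_ENV_VARS.items()
        if all(any(env.get(name) for name in group) for group in groups)
    ]


# Example with an explicit mapping instead of the real environment.
example_env = {"OPENAI_API_KEY": "sk-placeholder", "GOOGLE_API_KEY": "placeholder"}
print(configured_providers(example_env))  # ['OpenAI', 'Gemini']
```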

Since you can configure the base URL for the OpenAI client, you can use the prompt playground with a variety of OpenAI Client compatible LLMs such as Ollama, DeepSeek, and more.

OpenAI Client compatible providers include:

DeepSeek

Ollama

Optionally, the server can be configured with the OPENAI_BASE_URL environment variable to target any OpenAI-compatible REST API.

For app.phoenix.arize.com, this may fail due to security reasons. In that case, you'd see a Connection Error appear.

How to deploy prompts to different environments safely

Prompts in Phoenix are versioned in a linear history, creating a comprehensive audit trail of all modifications. Each change is tracked, allowing you to:

Review the complete history of a prompt

Understand who made specific changes

Revert to previous versions if needed

When you are ready to deploy a prompt to a certain environment (let's say staging), the best thing to do is to tag a specific version of your prompt as ready. By default Phoenix offers three tags (production, staging, and development), but you can create your own tags as well.

Each tag can include an optional description to provide additional context about its purpose or significance. Tags are unique per prompt, meaning you cannot have two tags with the same name for the same prompt.

It can be helpful to have custom tags to track different versions of a prompt. For example if you wanted to tag a certain prompt as the one that was used in your v0 release, you can create a custom tag with that name to keep track!

When creating a custom tag, you can provide:

A name for the tag (must be a valid identifier)

An optional description to provide context about the tag's purpose

Once a prompt version is tagged, you can pull this version of the prompt into any environment that you would like (an application, an experiment). Similar to git tags, prompt version tags let you create a "release" of a prompt (e.g. pushing a prompt to staging).

You can retrieve a prompt version by:

Using the tag name directly (e.g., "production", "staging", "development")

Using a custom tag name

Using the latest version (which will return the most recent version regardless of tags)

For full details on how to use prompts in code, see Using a prompt

You can list all tags associated with a specific prompt version. The list is paginated, allowing you to efficiently browse through large numbers of tags. Each tag in the list includes:

The tag's unique identifier

The tag's name

The tag's description (if provided)

This is particularly useful when you need to:

Review all tags associated with a prompt version

Verify which version is currently tagged for a specific environment

Track the history of tag changes for a prompt version

Tag names must be valid identifiers: lowercase letters, numbers, hyphens, and underscores, starting and ending with a letter or number.

Examples: staging, production-v1, release-2024
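The naming rule above can be approximated with a regular expression. This is a sketch of the stated rule, not Phoenix's own validator; in particular, accepting a single-character name is an assumption.

```python
import re

# Lowercase letters, digits, hyphens, and underscores; must start and end
# with a letter or digit. Single-character names are assumed to be allowed.
TAG_NAME_PATTERN = re.compile(r"[a-z0-9](?:[a-z0-9_-]*[a-z0-9])?")


def is_valid_tag_name(name: str) -> bool:
    """Return True if `name` satisfies the tag naming rule sketched above."""
    return TAG_NAME_PATTERN.fullmatch(name) is not None


for candidate in ["staging", "production-v1", "release-2024", "-draft", "Prod"]:
    print(candidate, is_valid_tag_name(candidate))
```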

This Eval evaluates whether a retrieved chunk contains an answer to the query. It's extremely useful for evaluating retrieval systems.

The above runs the RAG relevancy LLM template against the dataframe df.

| Metric | | |
| --- | --- | --- |
| Precision | 0.60 | 0.70 |
| Recall | 0.77 | 0.88 |
| F1 | 0.67 | 0.78 |
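The F1 values reported above are the harmonic mean of the precision and recall values listed with them, which can be verified with a few lines of Python:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(0.60, 0.77), 2))  # 0.67
print(round(f1_score(0.70, 0.88), 2))  # 0.78
```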

100 Samples

113 Sec

This quickstart guide will walk you through the basics of evaluating data from your LLM application.

The first thing you'll need is a dataset to evaluate. This could be your own collected or generated set of examples, or data you've exported from Phoenix traces. If you've already collected some trace data, this makes a great starting point.

For the sake of this guide however, we'll download some pre-existing data to evaluate. Feel free to sub this with your own data, just be sure it includes the following columns:

reference

query

response

Set up evaluators (in this case for hallucinations and Q&A correctness), run the evaluations, and log the results to visualize them in Phoenix. We'll use OpenAI as our evaluation model for this example, but Phoenix also supports a number of other models. First, we need to add our OpenAI API key to our environment.

Explanation of the parameters used in run_evals above:

dataframe - a pandas dataframe that includes the data you want to evaluate. This could be spans exported from Phoenix, or data you've brought in from elsewhere. This dataframe must include the columns expected by the evaluators you are using. To see the columns expected by each built-in evaluator, check the corresponding page in the Using Phoenix Evaluators section.

evaluators - a list of built-in Phoenix evaluators to use.

provide_explanations - a binary flag that instructs the evaluators to generate explanations for their choices.

Combine your evaluation results and explanations with your original dataset:

Note: You'll only be able to log evaluations to the Phoenix UI if you used a trace or span dataset exported from Phoenix as your dataset in this quickstart. If you've used your own outside dataset, you won't be able to log these results to Phoenix.

Phoenix is a comprehensive platform designed to enable observability across every layer of an LLM-based system, empowering teams to build, optimize, and maintain high-quality applications and agents efficiently.

During the development phase, Phoenix offers essential tools for debugging, experimentation, evaluation, prompt tracking, and search and retrieval.

Phoenix's tracing and span analysis capabilities are invaluable during the prototyping and debugging stages. By instrumenting application code with Phoenix, teams gain detailed insights into the execution flow, making it easier to identify and resolve issues. Developers can drill down into specific spans, analyze performance metrics, and access relevant logs and metadata to streamline debugging efforts.

Leverage experiments to measure prompt and model performance. Typically during this early stage, you'll focus on gathering a robust set of test cases and evaluation metrics to test initial iterations of your application. Experiments at this stage may resemble unit tests, as they're geared towards ensuring your application performs correctly.

Either as a part of experiments or a standalone feature, evaluations help you understand how your app is performing at a granular level. Typical evaluations might be correctness evals compared against a ground truth data set, or LLM-as-a-judge evals to detect hallucinations or relevant RAG output.

Prompt engineering is critical to how a model behaves. While there are other methods such as fine-tuning to change behavior, prompt engineering is the simplest way to get started and often has the best ROI.

Instrument prompt and prompt variable collection to associate iterations of your app with the performance measured through evals and experiments. Phoenix tracks prompt templates, variables, and versions during execution to help you identify improvements and degradations.

Phoenix's search and retrieval optimization tools include an embeddings visualizer that helps teams understand how their data is being represented and clustered. This visual insight can guide decisions on indexing strategies, similarity measures, and data organization to improve the relevance and efficiency of search results.

In the testing and staging environment, Phoenix supports comprehensive evaluation, benchmarking, and data curation. Traces, experimentation, prompt tracking, and embedding visualizer remain important in the testing and staging phase, helping teams identify and resolve issues before deployment.

Phoenix's flexible evaluation framework supports thorough testing of LLM outputs. Teams can define custom metrics, collect user feedback, and leverage separate LLMs for automated assessment. Phoenix offers tools for analyzing evaluation results, identifying trends, and tracking improvements over time.

In production, Phoenix works hand-in-hand with Arize, which focuses on the production side of the LLM lifecycle. The integration ensures a smooth transition from development to production, with consistent tooling and metrics across both platforms.

Phoenix and Arize together help teams identify data points for fine-tuning based on production performance and user feedback. This targeted approach ensures that fine-tuning efforts are directed towards the most impactful areas, maximizing the return on investment.

Phoenix, in collaboration with Arize, empowers teams to build, optimize, and maintain high-quality LLM applications throughout the entire lifecycle. By providing a comprehensive observability platform and seamless integration with production monitoring tools, Phoenix and Arize enable teams to deliver exceptional LLM-driven experiences with confidence and efficiency.

In some situations, you may need to modify the observability level of your tracing. For instance, you may want to keep sensitive information from being logged for security reasons, or you may want to limit the size of the base64 encoded images logged to reduce payload size.

The OpenInference Specification defines a set of environment variables you can configure to suit your observability needs. In addition, the OpenInference auto-instrumentors accept a trace config which allows you to set these values in code without having to set environment variables, if that's what you prefer.

The possible settings are:

To set up this configuration you can either:

Set environment variables as specified above

Define the configuration in code as shown below

Do nothing and fall back to the default values

Use a combination of the three. The order of precedence is:

Values set in the TraceConfig in code

Environment variables

default values
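The precedence order above can be sketched as a small resolver. This is an illustration of the rule, not the TraceConfig implementation. The function name is hypothetical, the variable name `OPENINFERENCE_HIDE_INPUTS` is used as an example setting, and the way boolean strings are parsed is an assumption.

```python
import os
from typing import Mapping, Optional


def resolve_setting(
    code_value: Optional[bool],
    env_name: str,
    default: bool,
    env: Mapping[str, str] = os.environ,
) -> bool:
    """Pick a value using: code value, then environment variable, then default."""
    if code_value is not None:
        return code_value  # 1. value set in code wins
    raw = env.get(env_name)
    if raw is not None:
        return raw.strip().lower() == "true"  # 2. environment variable (assumed parsing)
    return default  # 3. fall back to the default


example_env = {"OPENINFERENCE_HIDE_INPUTS": "true"}
print(resolve_setting(False, "OPENINFERENCE_HIDE_INPUTS", False, example_env))  # False
print(resolve_setting(None, "OPENINFERENCE_HIDE_INPUTS", False, example_env))   # True
print(resolve_setting(None, "OPENINFERENCE_HIDE_INPUTS", False, {}))            # False
```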

Below is an example of how to set these values in code using our OpenAI Python and JavaScript instrumentors, however, the config is respected by all of our auto-instrumentors.

How to use an LLM judge to label and score your application

This guide will walk you through the process of evaluating traces captured in Phoenix, and exporting the results to the Phoenix UI.

Note: if you're self-hosting Phoenix, swap your collector endpoint variable in the snippet below, and remove the Phoenix Client Headers variable.

Now that we have Phoenix configured, we can register that instance with OpenTelemetry, which will allow us to collect traces from our application here.

For the sake of making this guide fully runnable, we'll briefly generate some traces and track them in Phoenix. Typically, you would have already captured traces in Phoenix and would skip to "Download trace dataset from Phoenix"

Now that we have our trace dataset, we can generate evaluations for each trace. Evaluations can be generated in many different ways. Ultimately, we want to end up with a set of labels and/or scores for our traces.

You can generate evaluations using:

Plain code

Other evaluation packages

As long as you format your evaluation results properly, you can upload them to Phoenix and visualize them in the UI.

Let's start with a simple example of generating evaluations using plain code. OpenAI has a habit of repeating jokes, so we'll generate evaluations to label whether a joke is a repeat of a previous joke.
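A dependency-free sketch of that idea follows. It works on a list of dictionaries rather than a DataFrame, and the `output` field name, the span ids, and the label and score values are all assumptions made for illustration; the resulting rows could be loaded into a DataFrame afterwards.

```python
from typing import Dict, List


def label_repeated_jokes(spans: List[Dict[str, str]]) -> List[Dict[str, object]]:
    """Label each span as a repeat if its joke text was already seen earlier."""
    seen = set()
    rows = []
    for span in spans:
        # Normalize case and whitespace so trivially different copies still match.
        normalized = " ".join(span["output"].lower().split())
        is_repeat = normalized in seen
        seen.add(normalized)
        rows.append(
            {
                "span_id": span["span_id"],
                "label": "repeat" if is_repeat else "new",
                "score": 1 if is_repeat else 0,
            }
        )
    return rows


# Placeholder span ids and jokes, for illustration only.
spans = [
    {"span_id": "a1", "output": "Why did the chicken cross the road?"},
    {"span_id": "b2", "output": "I told my computer a joke."},
    {"span_id": "c3", "output": "why did the chicken  cross the road?"},
]
for row in label_repeated_jokes(spans):
    print(row)
```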

We now have a DataFrame with a column for whether each joke is a repeat of a previous joke. Let's upload this to Phoenix.

Our evals_df has a column for the span_id and a column for the evaluation result. The span_id is what allows us to connect the evaluation to the correct trace in Phoenix. Phoenix will also automatically look for columns named "label" and "score" to display in the UI.

You should now see evaluations in the Phoenix UI!

From here you can continue collecting and evaluating traces, or move on to one of these other guides:

This guide shows how LLM evaluation results in dataframes can be sent to Phoenix.

Before accessing px.Client(), be sure you've set the following environment variables:

A dataframe of span evaluations would look similar to the table below. It must contain span_id as an index or as a column. Once ingested, Phoenix uses the span_id to associate the evaluation with its target span.

The evaluations dataframe can be sent to Phoenix as follows. Note that the name of the evaluation must be supplied through the eval_name= parameter. In this case we name it "Q&A Correctness".

A dataframe of document evaluations would look something like the table below. It must contain span_id and document_position as either indices or columns. document_position is the document's (zero-based) index in the span's list of retrieved documents. Once ingested, Phoenix uses the span_id and document_position to associate the evaluation with its target span and document.
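Because document_position is zero-based, `enumerate` is a natural way to produce it. The sketch below builds plain rows for one span; the helper name, the span id, and the labels are placeholders, and the rows would still need to be converted to a dataframe before logging.

```python
from typing import Dict, List


def document_eval_rows(span_id: str, labels: List[str]) -> List[Dict[str, object]]:
    """Build one evaluation row per retrieved document, in retrieval order."""
    return [
        {"span_id": span_id, "document_position": position, "label": label}
        for position, label in enumerate(labels)  # positions start at 0
    ]


rows = document_eval_rows("span-placeholder", ["relevant", "irrelevant", "relevant"])
for row in rows:
    print(row)
```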

The evaluations dataframe can be sent to Phoenix as follows. Note that the name of the evaluation must be supplied through the eval_name= parameter. In this case we name it "Relevance".

Multiple sets of Evaluations can be logged by the same px.Client().log_evaluations() function call.

By default the client will push traces to the project specified in the PHOENIX_PROJECT_NAME environment variable or to the default project. If you want to specify the destination project explicitly, you can pass the project name as a parameter.

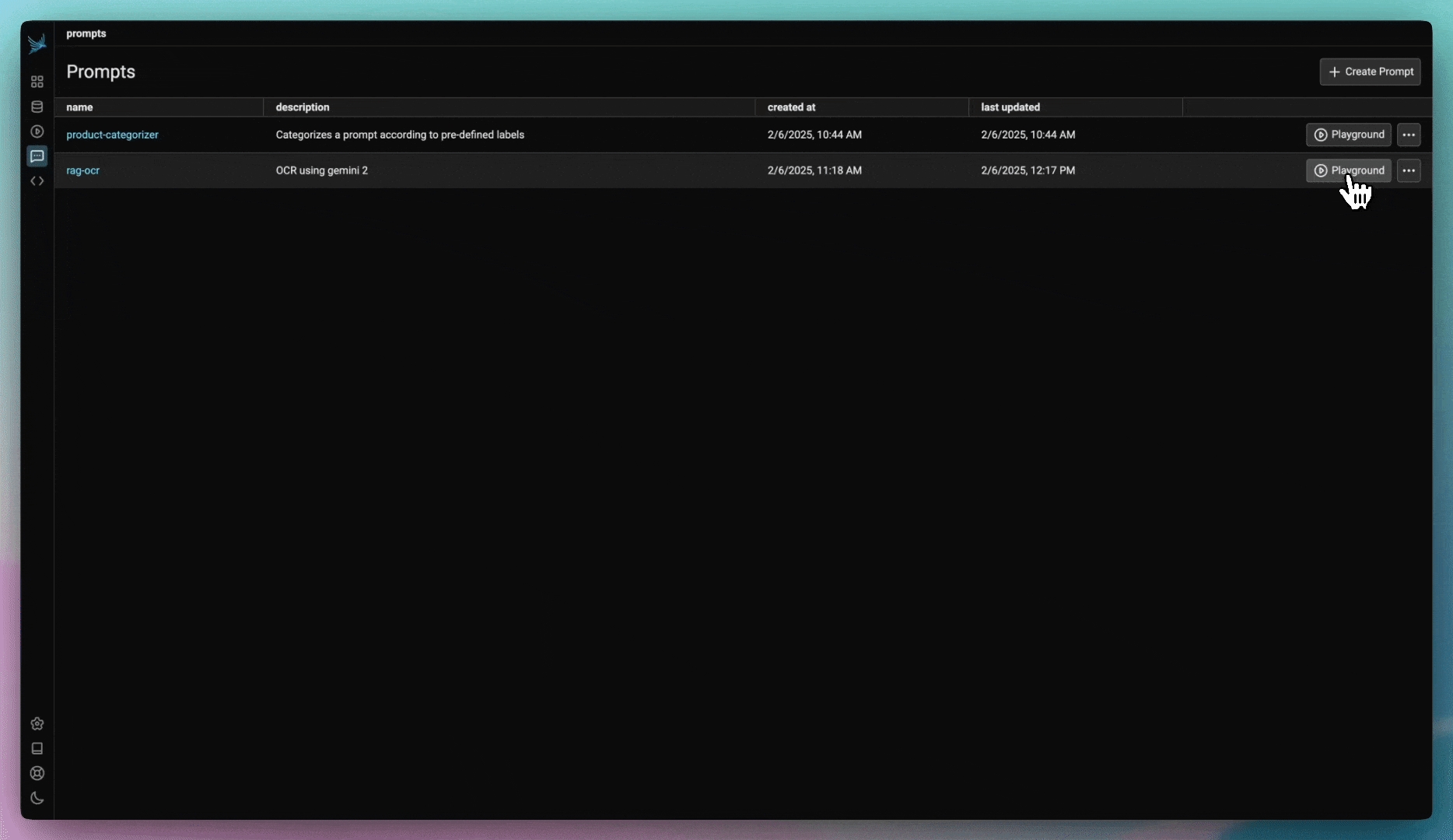

Store and track prompt versions in Phoenix

Prompts in Phoenix can be created using the Playground as well as via the phoenix-clients.

Navigate to Prompts in the navigation and click the add prompt button in the top right. This will take you to the Playground.

To the right you can enter sample inputs for your prompt variables and run your prompt against a model. Make sure that you have an API key set for the LLM provider of your choosing.

To save the prompt, click the save button in the header of the prompt on the right. Name the prompt using alphanumeric characters (e.g. `my-first-prompt`) with no spaces. The model configuration you selected in the Playground will be saved with the prompt. When you re-open the prompt, the model and configuration will be loaded along with the prompt.

You just created your first prompt in Phoenix! You can view and search for prompts by navigating to Prompts in the UI.

Prompts can be loaded back into the Playground at any time by clicking on "open in playground".

To make edits to a prompt, click "edit in Playground" in the top right of the prompt details view.

When you are happy with your prompt, click save. You will be asked to provide a description of the changes you made to the prompt. This description will show up in the history of the prompt for others to understand what you did.

In some cases, you may need to modify a prompt without altering its original version. To achieve this, you can clone a prompt, similar to forking a repository in Git.

Cloning a prompt allows you to experiment with changes while preserving the history of the main prompt. Once you have made and reviewed your modifications, you can choose to either keep the cloned version as a separate prompt or merge your changes back into the main prompt. To do this, simply load the cloned prompt in the playground and save it as the main prompt.

This approach ensures that your edits are flexible and reversible, preventing unintended modifications to the original prompt.

Creating a prompt in code can be useful if you want a programmatic way to sync prompts with the Phoenix server.

Below is an example prompt for summarizing articles as bullet points. Use the Phoenix client to store the prompt in the Phoenix server. The name of the prompt is an identifier with lowercase alphanumeric characters plus hyphens and underscores (no spaces).
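Before sending a prompt to the server, it can help to check the name locally. The sketch below is only a reading of the naming rule described above (lowercase alphanumerics, hyphens, underscores, no spaces); the helper `is_valid_prompt_name` is hypothetical, and the server's actual validation may be stricter.

```python
import re

# Assumed pattern: one or more lowercase letters, digits, hyphens, or underscores.
_PROMPT_NAME = re.compile(r"[a-z0-9_-]+")


def is_valid_prompt_name(name: str) -> bool:
    """Return True if the whole name matches the assumed identifier rule."""
    return _PROMPT_NAME.fullmatch(name) is not None


candidate_ok = is_valid_prompt_name("article-bullet-summarizer")
candidate_bad = is_valid_prompt_name("Article Summarizer")
```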

A prompt stored in the database can be retrieved later by its name. By default the latest version is fetched. A version ID or a tag can also be used to retrieve a specific version.

Sometimes you just want to upload datasets using plain objects, as CSVs and DataFrames can be too restrictive about the keys.

One of the quickest ways of getting started is to produce synthetic queries using an LLM.

One use case for synthetic data creation is when you want to test your RAG pipeline. You can leverage an LLM to synthesize hypothetical questions about your knowledge base.

In the example below we will use Phoenix's built-in llm_generate, but you can leverage any synthetic dataset creation tool you'd like.

Imagine you have a knowledge-base that contains the following documents:

Once your synthetic data has been created, this data can be uploaded to Phoenix for later re-use.

Once we've constructed a collection of synthetic questions, we can upload them to a Phoenix dataset.

If you have an application that is traced using instrumentation, you can quickly add any span or group of spans using the Phoenix UI.

To add a single span to a dataset, simply select the span in the trace details view. You should see an add to dataset button on the top right. From there you can select the dataset you would like to add it to and make any changes you might need to make before saving the example.

You can also use the filters on the spans table and select multiple spans to add to a specific dataset.

This Eval helps evaluate the summarization results of a summarization task. The template variables are:

document: The document text to summarize

summary: The summary of the document

The above shows how to use the summarization Eval template.

This LLM Eval detects if the output of a model is a hallucination based on contextual data.

This Eval is specifically designed to detect hallucinations in generated answers from private or retrieved data. The Eval detects if an AI answer to a question is a hallucination based on the reference data used to generate the answer.

The above Eval shows how to use the hallucination template for hallucination detection.

This Eval evaluates whether a question was correctly answered by the system based on the retrieved data. In contrast to retrieval Evals that are checks on chunks of data returned, this check is a system level check of a correct Q&A.

question: This is the question the Q&A system is running against

sampled_answer: This is the answer from the Q&A system.

context: This is the context to be used to answer the question, and is what Q&A Eval must use to check the correct answer

The above Eval uses the QA template for Q&A analysis on retrieved data.

Supplemental Data to Squad 2: In order to check the case of detecting incorrect answers, we created wrong answers based on the context data. The wrong answers are intermixed with right answers.

Each example in the dataset was evaluated using the QA_PROMPT_TEMPLATE above, then the resulting labels were compared against the ground truth in the benchmarking dataset.
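The comparison of predicted labels against ground truth can be sketched in a few lines of plain Python. The labels below are invented for illustration and are not benchmark results; the helper `binary_metrics` is hypothetical and simply computes precision, recall, and F1 for one positive class.

```python
def binary_metrics(predicted, truth, positive):
    """Compute precision, recall, and F1 for the given positive label."""
    pairs = list(zip(predicted, truth))
    tp = sum(1 for p, t in pairs if p == positive and t == positive)
    fp = sum(1 for p, t in pairs if p == positive and t != positive)
    fn = sum(1 for p, t in pairs if p != positive and t == positive)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Made-up labels: what the eval produced vs. the ground truth.
predicted = ["correct", "correct", "incorrect", "correct"]
truth = ["correct", "incorrect", "incorrect", "correct"]
metrics = binary_metrics(predicted, truth, positive="correct")
```

With these four made-up examples there are two true positives, one false positive, and no false negatives, so precision is 2/3, recall is 1.0, and F1 is 0.8.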

This LLM evaluation is used to compare AI answers to human answers. It's very useful in RAG system benchmarking to compare against the human-generated ground truth.

A workflow we see for high quality RAG deployments is generating a golden dataset of questions and a high quality set of answers. These can be in the range of 100-200 but provide a strong check for the AI generated answers. This Eval checks that the human ground truth matches the AI generated answer. It's designed to catch missing data in "half" answers and differences of substance.

Question:

What Evals are supported for LLMs on generative models?

Human:

Arize supports a suite of Evals available from the Phoenix Evals library. They include both pre-tested Evals and the ability to configure custom Evals. Some of the pre-tested LLM Evals are listed below:

Retrieval Relevance, Question and Answer, Toxicity, Human Groundtruth vs AI, Citation Reference Link Relevancy, Code Readability, Code Execution, Hallucination Detection and Summarization

AI:

Arize supports LLM Evals.

Eval:

Incorrect

Explanation of Eval:

The AI answer is very brief and lacks the specific details that are present in the human ground truth answer. While the AI answer is not incorrect in stating that Arize supports LLM Evals, it fails to mention the specific types of Evals that are supported, such as Retrieval Relevance, Question and Answer, Toxicity, Human Groundtruth vs AI, Citation Reference Link Relevancy, Code Readability, Hallucination Detection, and Summarization. Therefore, the AI answer does not fully capture the substance of the human answer.

Overview of template:

GPT-4 Results

The Agent Function Call eval can be used to determine how well a model selects a tool to use, extracts the right parameters from the user query, and generates the tool call code.

Parameters:

df - a dataframe of cases to evaluate. The dataframe must have these columns to match the default template:

This template instead evaluates only the parameter extraction step of a router:

Use to mark functions and code blocks.

Use to capture all calls made to supported frameworks.

Use instrumentation. Supported in and , among many other languages.

Sign up for an Arize Phoenix account at

Grab your API key from the Keys option on the left bar.

In your code, set your endpoint and API key:
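A minimal sketch of this step is shown below. It assumes the endpoint and key are read from the `PHOENIX_COLLECTOR_ENDPOINT` and `PHOENIX_API_KEY` environment variables; both the variable names and the values are assumptions here (the values are placeholders), so check them against the settings shown for your own Phoenix account and version.

```python
import os

# Assumed variable names; the values are placeholders, not real credentials.
os.environ["PHOENIX_COLLECTOR_ENDPOINT"] = "https://your-phoenix-endpoint.example"
os.environ["PHOENIX_API_KEY"] = "YOUR_API_KEY"

# Collect what was set so it can be inspected before starting the app.
configured = {
    name: os.environ[name]
    for name in ("PHOENIX_COLLECTOR_ENDPOINT", "PHOENIX_API_KEY")
}
```

Setting these before any tracing or client code is imported keeps the configuration in one place. In a real deployment the key would normally come from a secret store instead of being written in source.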

Having trouble finding your endpoint? Check out

Run Phoenix using Docker, local terminal, Kubernetes etc. For more information, .

In your code, set your endpoint:

Having trouble finding your endpoint? Check out

Phoenix can also capture all calls made to supported libraries automatically. Just install the :

Explore tracing

View use cases to see

Using .

Using .

It looks like the second option is doing the most concise summary. Go ahead and .

Prompt playground can be used to run a series of dataset rows through your prompts. To start off, we'll need a dataset. Phoenix has many options to , to keep things simple here, we'll directly upload a CSV. Download the articles summaries file linked below:

From here, you could to test its performance, or add complexity to your prompts by including different tools, output schemas, and models to test against.

If there is an LLM endpoint you would like to use, reach out to

We are continually iterating our templates, view the most up-to-date template .

This benchmark was obtained using the notebook below. It was run using the WikiQA dataset as a ground truth dataset. Each example in the dataset was evaluated using the RAG_RELEVANCY_PROMPT_TEMPLATE above, then the resulting labels were compared against the ground truth label in the WikiQA dataset to generate the confusion matrices below.

Provided you started from a trace dataset, you can log your evaluation results to Phoenix using .

This process is similar to the , but instead of creating your own dataset or using an existing external one, you'll export a trace dataset from Phoenix and log the evaluation results to Phoenix.

Phoenix's

Your own

If you're interested in more complex evaluation and evaluators, start with

If you're ready to start testing your application in a more rigorous manner, check out

An evaluation must have a name (e.g. "Q&A Correctness") and its DataFrame must contain identifiers for the subject of evaluation, e.g. a span or a document (more on that below), and values under either the score, label, or explanation columns. See for more information.

The Playground is like the IDE where you will develop your prompt. The prompt section on the right lets you add more messages, change the template format (f-string or mustache), and set an output schema (JSON mode).
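The two template formats differ mainly in how variables are delimited. The sketch below illustrates this with plain Python and is not Phoenix's renderer: `render_fstring` uses `str.format` with single braces, and `render_mustache` is a deliberately tiny stand-in that only handles simple `{{ variable }}` substitution. Both helper names and the sample template are hypothetical.

```python
import re


def render_fstring(template: str, variables: dict) -> str:
    """Fill single-brace placeholders such as {topic}."""
    return template.format(**variables)


def render_mustache(template: str, variables: dict) -> str:
    """Fill double-brace placeholders such as {{ topic }} (variables only)."""

    def substitute(match: re.Match) -> str:
        return str(variables[match.group(1)])

    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)


variables = {"topic": "tracing"}
f_out = render_fstring("Summarize {topic} in one line.", variables)
m_out = render_mustache("Summarize {{ topic }} in one line.", variables)
```

One practical difference: with f-string style templates, literal braces (for example in a JSON snippet inside the prompt) have to be doubled, while the mustache style leaves single braces untouched.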

To view the details of a prompt, click on the prompt name. You will be taken to the prompt details view. The prompt details view shows all the that has been saved (e.g. the model used, the invocation parameters, etc.).

Once you've created a prompt, you will probably need to make tweaks over time. The best way to make tweaks to a prompt is using the Playground. Depending on how destructive a change you are making, you might want to create a new version or clone the prompt.

Prompt labels and metadata is still

Starting with prompts, Phoenix has a dedicated client that lets you programmatically. Make sure you have installed the appropriate before proceeding.

phoenix-client for both Python and TypeScript is very early in its development and may not have every feature you might be looking for. Please drop us an issue if there's an enhancement you'd like to see.

If a version is tagged with, e.g., "production", it can be retrieved as follows.

When manually creating a dataset (let's say collecting hypothetical questions and answers), the easiest way to start is by using a spreadsheet. Once you've collected the information, you can simply upload the CSV of your data to the Phoenix platform using the UI. You can also programmatically upload tabular data using Pandas as

This benchmark was obtained using the notebook below. It was run using a summarization dataset as a ground truth dataset. Each example in the dataset was evaluated using the SUMMARIZATION_PROMPT_TEMPLATE above, then the resulting labels were compared against the ground truth label in the summarization dataset to generate the confusion matrices below.

This benchmark was obtained using the notebook below. It was run using the HaluEval dataset as a ground truth dataset. Each example in the dataset was evaluated using the HALLUCINATION_PROMPT_TEMPLATE above, then the resulting labels were compared against the is_hallucination label in the HaluEval dataset to generate the confusion matrices below.

The benchmarking dataset used was created based on:

Squad 2: The 2.0 version of the large-scale dataset Stanford Question Answering Dataset (SQuAD 2.0) allows researchers to design AI models for reading comprehension tasks under challenging constraints.

All evals templates are tested against golden data that are available as part of the LLM eval library's and target precision at 70-90% and F1 at 70-85%.

We currently support a growing set of models for LLM Evals, please check out the .
