Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Workflows are the backbone of many successful LLM applications. They define how language models interact with tools, data, and users—often through a sequence of clearly orchestrated steps. Unlike fully autonomous agents, workflows offer structure and predictability, making them a practical choice for many real-world tasks.

In this guide, we share practical workflows using a variety of agent frameworks, including:

Each section highlights how to use these tools effectively—showing what’s possible, where they shine, and where a simpler solution might serve you better. Whether you're orchestrating deterministic workflows or building dynamic agentic systems, the goal is to help you choose the right tool for your context and build with confidence.

For a deeper dive into the principles behind agentic systems and when to use them, see Anthropic’s “Building Effective Agents”.

Agent Routing is the process of directing a task, query, or request to the most appropriate agent based on context or capabilities. In multi-agent systems, it helps determine which agent is best suited to handle a specific input based on skills, domain expertise, or available tools. This enables more efficient, accurate, and specialized handling of complex tasks.

Prompt Chaining is the technique of breaking a complex task into multiple steps, where the output of one prompt becomes the input for the next. This allows a system to reason more effectively, maintain context across steps, and handle tasks that would be too difficult to solve in a single prompt. It's often used to simulate multi-step thinking or workflows.

Parallelization is the process of dividing a task into smaller, independent parts that can be executed simultaneously to speed up processing. It’s used to handle multiple inputs, computations, or agent responses at the same time rather than sequentially. This improves efficiency and speed, especially for large-scale or time-sensitive tasks.

An orchestrator is a central controller that manages and coordinates multiple components, agents, or processes to ensure they work together smoothly.

It decides what tasks need to be done, who or what should do them, and in what order. An orchestrator can handle things like scheduling, routing, error handling, and result aggregation. It might also manage prompt chains, route tasks to agents, and oversee parallel execution.

An evaluator assesses the quality or correctness of outputs, such as ranking responses, checking for factual accuracy, or scoring performance against a metric. An optimizer uses that evaluation to improve future outputs, either by fine-tuning models, adjusting parameters, or selecting better strategies. Together, they form a feedback loop that helps a system learn what works and refine itself over time.

Self-hosted Phoenix supports multiple user with authentication, roles, and more.

Phoenix Cloud is no longer limited to single-developer use—teams can manage access and share traces easily across their organization.

`The new Phoenix Cloud supports team management and collaboration. You can spin up multiple, customized Phoenix Spaces for different teams and use cases, manage individual user access and permissions for each space, and seamlessly collaborate with additional team members on your projects.

gRPC and HTTP are communication protocols used to transfer data between client and server applications.

HTTP (Hypertext Transfer Protocol) is a stateless protocol primarily used for website and web application requests over the internet.

gRPC (gRemote Procedure Call) is a modern, open-source communication protocol from Google that uses HTTP/2 for transport, protocol buffers as the interface description language, and provides features like bi-directional streaming, multiplexing, and flow control.

gRPC is more efficient in a tracing context than HTTP, but HTTP is more widely supported.

Phoenix can send traces over either HTTP or gRPC.

Phoenix does natively support gRPC for trace collection post 4.0 release. See Configuration for details.

Yes, in fact this is probably the preferred way to interact with OpenAI if your enterprise requires data privacy. Getting the parameters right for Azure can be a bit tricky so check out the models section for details.

We update the Phoenix version used by Phoenix Cloud on a weekly basis.

You can persist data in the notebook by either setting the use_temp_dir flag to false in px.launch_app which will persist your data in SQLite on your disk at the PHOENIX_WORKING_DIR. Alternatively you can deploy a phoenix instance and point to it via PHOENIX_COLLECTOR_ENDPOINT.

Use Phoenix to trace and evaluate AutoGen agents

AutoGen is an open-source framework by Microsoft for building multi-agent workflows. The AutoGen agent framework provides tools to define, manage, and orchestrate agents, including customizable behaviors, roles, and communication protocols.

Phoenix can be used to trace AutoGen agents by instrumenting their workflows, allowing you to visualize agent interactions, message flows, and performance metrics across multi-agent chains.

UserProxyAgent: Acts on behalf of the user to initiate tasks, guide the conversation, and relay feedback between agents. It can operate in auto or human-in-the-loop mode and control the flow of multi-agent interactions.

AssisstantAgent: Performs specialized tasks such as code generation, review, or analysis. It supports role-specific prompts, memory of prior turns, and can be equipped with tools to enhance its capabilities.

GroupChat: Coordinates structured, turn-based conversations among multiple agents. It maintains shared context, controls agent turn-taking, and stops the chat when completion criteria are met.

GroupChatManager: Manages the flow and logic of the GroupChat, including termination rules, turn assignment, and optional message routing customization.

Tool Integration: Agents can use external tools (e.g. Python, web search, RAG retrievers) to perform actions beyond text generation, enabling more grounded or executable outputs.

Memory and Context Tracking: Agents retain and access conversation history, enabling coherent and stateful dialogue over multiple turns.

Agent Roles

Poorly defined responsibilities can cause overlap or miscommunication, especially between multi-agent workflows.

Termination Conditions

GroupChat may continue even after a logical end, as UserProxyAgent can exhaust all allowed turns before stopping unless termination is explicitly triggered.

Human-in-the-Loop

Fully autonomous mode may miss important judgment calls without user oversight.

State Management

Excessive context can exceed token limits, while insufficient context breaks coherence.

Prompt chaining is a method where a complex task is broken into smaller, linked subtasks, with the output of one step feeding into the next. This workflow is ideal when a task can be cleanly decomposed into fixed subtasks, making each LLM call simpler and more accurate — trading off latency for better overall performance.

AutoGen makes it easy to build these chains by coordinating multiple agents. Each AssistantAgent focuses on a specialized task, while a UserProxyAgent manages the conversation flow and passes key outputs between steps. With Phoenix tracing, we can visualize the entire sequence, monitor individual agent calls, and debug the chain easily.

Notebook: Market Analysis Prompt Chaining Agent The agent conducts a multi-step market analysis workflow, starting with identifying general trends and culminating in an evaluation of company strengths.

How to evaluate: Ensure outputs are moved into inputs for the next step and logically build across steps (e.g., do identified trends inform the company evaluation?)

Confirm that each prompt step produces relevant and distinct outputs that contribute to the final analysis

Track total latency and token counts to see which steps cause inefficiencies

Ensure there are no redundant outputs or hallucinations in multi-step reasoning

Routing is a pattern designed to handle incoming requests by classifying them and directing them to the single most appropriate specialized agent or workflow.

AutoGen simplifies implementing this pattern by enabling a dedicated 'Router Agent' to analyze incoming messages and signal its classification decision. Based on this classification, the workflow explicitly directs the query to the appropriate specialist agent for a focused, separate interaction. The specialist agent is equipped with tools to carry out the request.

Notebook: Customer Service Routing Agent

We will build an intelligent customer service system, designed to efficiently handle diverse user queries directing them to a specialized AssistantAgent .

How to evaluate: Ensure the Router Agent consistently classifies incoming queries into the correct category (e.g., billing, technical support, product info)

Confirm that each query is routed to the appropriate specialized AssistantAgent without ambiguity or misdirection

Test with edge cases and overlapping intents to assess the router’s ability to disambiguate accurately

Watch for routing failures, incorrect classifications, or dropped queries during handoff between agents

The Evaluator-Optimizer pattern employs a loop where one agent acts as a generator, creating an initial output (like text or code), while a second agent serves as an evaluator, providing critical feedback against criteria. This feedback guides the generator through successive revisions, enabling iterative refinement. This approach trades increased interactions for a more polished & accurate final result.

AutoGen's GroupChat architecture is good for implementing this pattern because it can manage the conversational turns between the generator and evaluator agents. The GroupChatManager facilitates the dialogue, allowing the agents to exchange the evolving outputs and feedback.

Notebook: Code Generator with Evaluation Loop

We'll use a Code_Generator agent to write Python code from requirements, and a Code_Reviewer agent to assess it for correctness, style, and documentation. This iterative GroupChat process improves code quality through a generation and review loop.

How to evaluate: Ensure the evaluator provides specific, actionable feedback aligned with criteria (e.g., correctness, style, documentation)

Confirm that the generator incorporates feedback into meaningful revisions with each iteration

Track the number of iterations required to reach an acceptable or final version to assess efficiency

Watch for repetitive feedback loops, regressions, or ignored suggestions that signal breakdowns in the refinement process

Orchestration enables collaboration among multiple specialized agents, activating only the most relevant one based on the current subtask context. Instead of relying on a fixed sequence, agents dynamically participate depending on the state of the conversation.

Agent orchestrator workflows simplifies this routing pattern through a central orchestrator (GroupChatManager) that selectively delegates tasks to the appropriate agents. Each agent monitors the conversation but only contributes when their specific expertise is required.

Notebook: Trip Planner Orchestrator Agent

We will build a dynamic travel planning assistant. A GroupChatManager coordinates specialized agents to adapt to the user's evolving travel needs.

How to evaluate: Ensure the orchestrator activates only relevant agents based on the current context or user need.

(e.g., flights, hotels, local activities)

Confirm that agents contribute meaningfully and only when their domain expertise is required

Track the conversation flow to verify smooth handoffs and minimal overlap or redundancy among agents

Test with evolving and multi-intent queries to assess the orchestrator’s ability to adapt and reassign tasks dynamically

Parallelization is a powerful agent pattern where multiple tasks are run concurrently, significantly speeding up the overall process. Unlike purely sequential workflows, this approach is suitable when tasks are independent and can be processed simultaneously.

AutoGen doesn't have a built-in parallel execution manager, but its core agent capabilities integrate seamlessly with standard Python concurrency libraries. We can use these libraries to launch multiple agent interactions concurrently.

Notebook: Product Description Parallelization Agent We'll generate different components of a product description for a smartwatch (features, value proposition, target customer, tagline) by calling a marketing agent. At the end, results are synthesized together.

How to evaluate: Ensure each parallel agent call produces a distinct and relevant component (e.g., features, value proposition, target customer, tagline)

Confirm that all outputs are successfully collected and synthesized into a cohesive final product description

Track per-task runtime and total execution time to measure parallel speedup vs. sequential execution

Test with varying product types to assess generality and stability of the parallel workflow

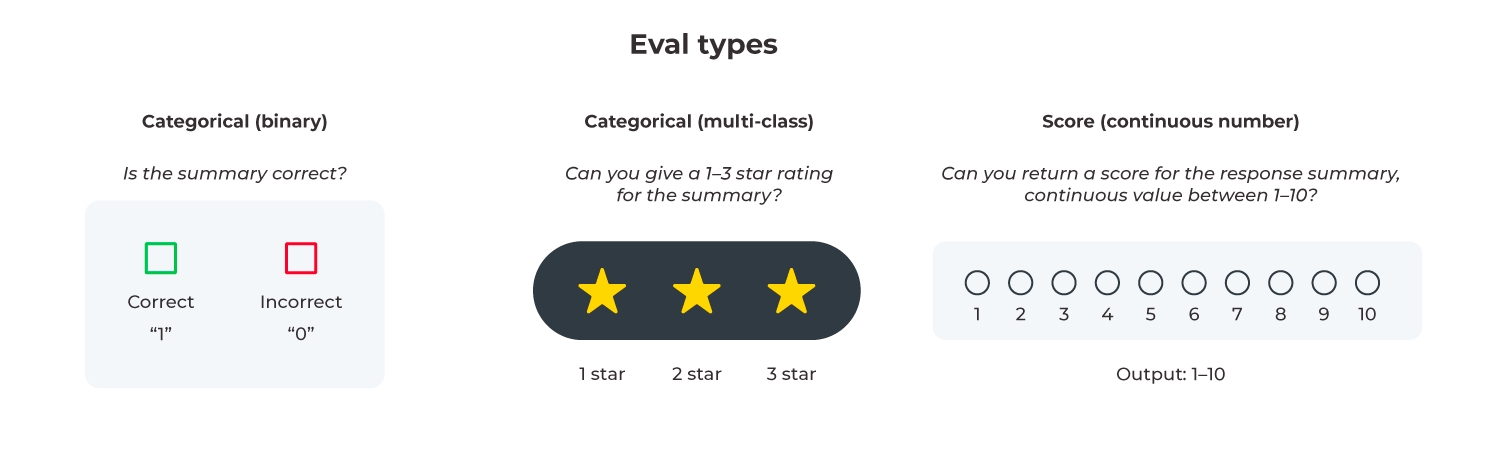

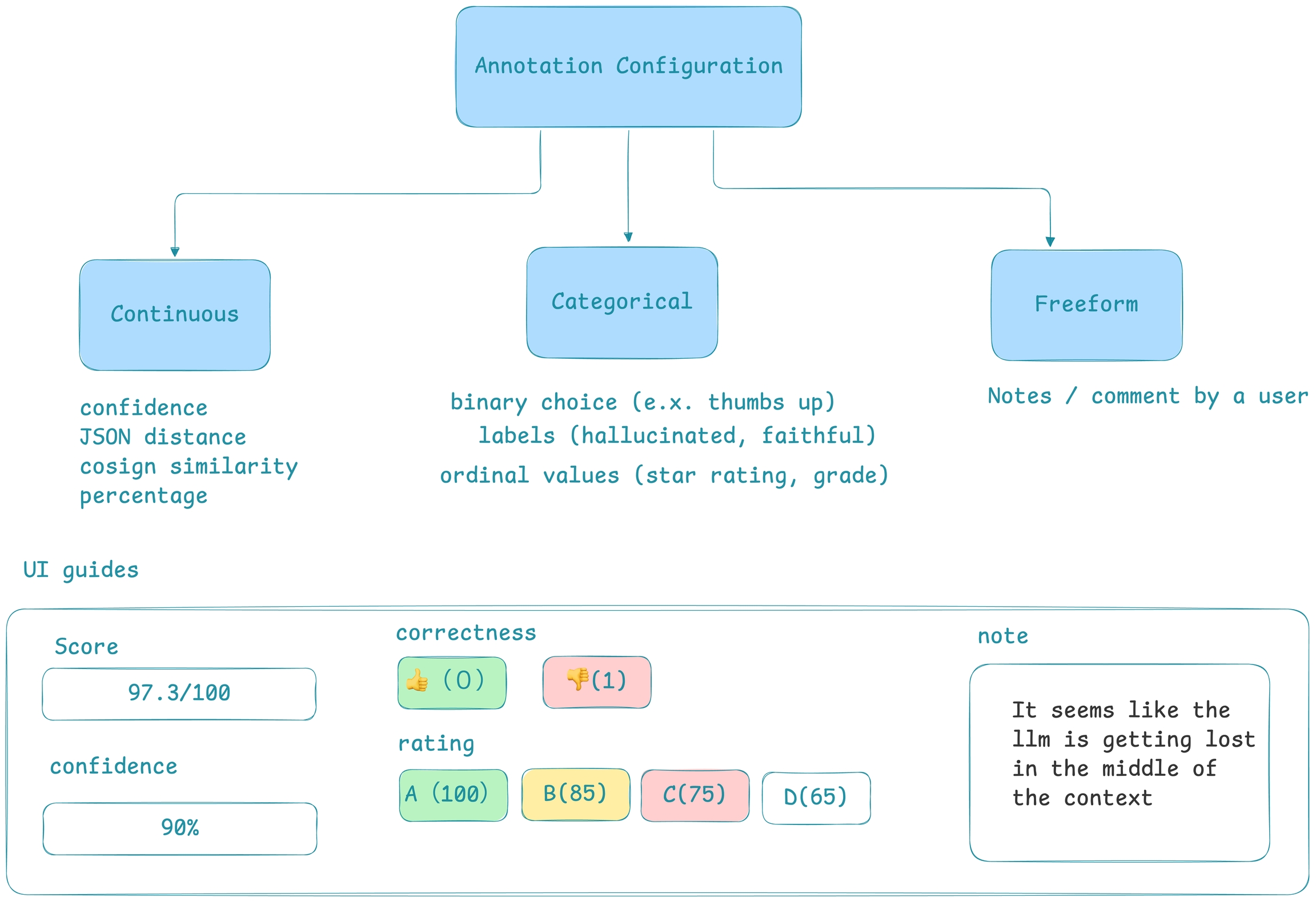

There are a multiple types of evaluations supported by the Phoenix Library. Each category of evaluation is categorized by its output type.

Categorical (binary) - The evaluation results in a binary output, such as true/false or yes/no, which can be easily represented as 1/0. This simplicity makes it straightforward for decision-making processes but lacks the ability to capture nuanced judgements.

Categorical (Multi-class) - The evaluation results in one of several predefined categories or classes, which could be text labels or distinct numbers representing different states or types.

Score - The evaluation results is a numeric value within a set range (e.g. 1-10), offering a scale of measurement.

Although score evals are an option in Phoenix, we recommend using categorical evaluations in production environments. LLMs often struggle with the subtleties of continuous scales, leading to inconsistent results even with slight prompt modifications or across different models. Repeated tests have shown that scores can fluctuate significantly, which is problematic when evaluating at scale.

Categorical evals, especially multi-class, strike a balance between simplicity and the ability to convey distinct evaluative outcomes, making them more suitable for applications where precise and consistent decision-making is important.

To explore the full analysis behind our recommendation and understand the limitations of score-based evaluations, check out our research on LLM eval data types.

It can be hard to understand in many cases why an LLM responds in a specific way. The explanation feature of Phoneix allows you to get a Eval output and an explanation from the LLM at the same time. We have found this incredibly useful for debugging LLM Evals.

from phoenix.evals import (

RAG_RELEVANCY_PROMPT_RAILS_MAP,

RAG_RELEVANCY_PROMPT_TEMPLATE,

OpenAIModel,

download_benchmark_dataset,

llm_classify,

)

model = OpenAIModel(

model_name="gpt-4",

temperature=0.0,

)

#The rails are used to hold the output to specific values based on the template

#It will remove text such as ",,," or "..."

#Will ensure the binary value expected from the template is returned

rails = list(RAG_RELEVANCY_PROMPT_RAILS_MAP.values())

relevance_classifications = llm_classify(

dataframe=df,

template=RAG_RELEVANCY_PROMPT_TEMPLATE,

model=model,

rails=rails,

)

#relevance_classifications is a Dataframe with columns 'label' and 'explanation'The flag above can be set with any of the templates or your own custom templates. The example below is from a relevance Evaluation.

Retrieval Evals are designed to evaluate the effectiveness of retrieval systems. The retrieval systems typically return list of chunks of length k ordered by relevancy. The most common retrieval systems in the LLM ecosystem are vector DBs.

The retrieval Eval is designed to assess the relevance of each chunk and its ability to answer the question. More information on the Retrieval Eval can be found here

The picture above shows a single query returning chunks as a list. The retrieval Eval runs across each chunk returning a value of relevance in a list highlighting its relevance for the specific chunk. Phoenix provides helper functions that take in a dataframe, with query column that has lists of chunks and produces a column that is a list of equal length with an Eval for each chunk.

Use Phoenix to trace and evaluate different CrewAI agent patterns

is an open-source framework for building and orchestrating collaborative AI agents that act like a team of specialized virtual employees. Built on LangChain, it enables users to define roles, goals, and workflows for each agent, allowing them to work together autonomously on complex tasks with minimal setup.

Agents are autonomous, role-driven entities designed to perform specific functions—like a Researcher, Writer, or Support Rep. They can be richly customized with goals, backstories, verbosity settings, delegation permissions, and access to tools. This flexibility makes agents expressive and task-aware, helping model real-world team dynamics.

Tasks are the atomic units of work in CrewAI. Each task includes a description, expected output, responsible agent, and optional tools. Tasks can be executed solo or collaboratively, and they serve as the bridge between high-level goals and actionable steps.

Tools give agents capabilities beyond language generation—such as browsing the web, fetching documents, or performing calculations. Tools can be native or developer-defined using the BaseTool class, and each must have a clear name and purpose so agents can invoke them appropriately.Tools must include clear descriptions to help agents use them effectively.

CrewAI supports multiple orchestration strategies:

Sequential: Tasks run in a fixed order—simple and predictable.

Hierarchical: A manager agent or LLM delegates tasks dynamically, enabling top-down workflows.

Consensual (planned): Future support for democratic, collaborative task routing. Each process type shapes how coordination and delegation unfold within a crew.

A crew is a collection of agents and tasks governed by a defined process. It represents a fully operational unit with an execution strategy, internal collaboration logic, and control settings for verbosity and output formatting. Think of it as the operating system for multi-agent workflows.

Pipelines chain multiple crews together, enabling multi-phase workflows where the output of one crew becomes the input to the next. This allows developers to modularize complex applications into reusable, composable segments of logic.

With planning enabled, CrewAI generates a task-by-task strategy before execution using an AgentPlanner. This enriches each task with context and sequencing logic, improving coordination—especially in multi-step or loosely defined workflows.

Prompt chaining decomposes a complex task into a sequence of smaller steps, where each LLM call operates on the output of the previous one. This workflow introduces the ability to add programmatic checks (such as “gates”) between steps, validating intermediate outputs before continuing. The result is higher control, accuracy, and debuggability—at the cost of increased latency.

CrewAI makes it straightforward to build prompt chaining workflows using a sequential process. Each step is modeled as a Task, assigned to a specialized Agent, and executed in order using Process.sequential. You can insert validation logic between tasks or configure agents to flag issues before passing outputs forward.

Notebook: Research-to-Content Prompt Chaining Workflow

Routing is a pattern designed to classify incoming requests and dispatch them to the single most appropriate specialist agent or workflow, ensuring each input is handled by a focused, expert-driven routine.

In CrewAI, you implement routing by defining a Router Agent that inspects each input, emits a category label, and then dynamically delegates to downstream agents (or crews) tailored for that category—each equipped with its own tools and prompts. This separation of concerns delivers more accurate, maintainable pipelines.

Notebook: Research-Content Routing Workflow

Parallelization is a powerful agent workflow where multiple tasks are executed simultaneously, enabling faster and more scalable LLM pipelines. This pattern is particularly effective when tasks are independent and don’t depend on each other’s outputs.

While CrewAI does not enforce true multithreaded execution, it provides a clean and intuitive structure for defining parallel logic through multiple agents and tasks. These can be executed concurrently in terms of logic, and then gathered or synthesized by a downstream agent.

Notebook: Parallel Research Agent

The Orchestrator-Workers workflow centers around a primary agent—the orchestrator—that dynamically decomposes a complex task into smaller, more manageable subtasks. Rather than relying on a fixed structure or pre-defined subtasks, the orchestrator decides what needs to be done based on the input itself. It then delegates each piece to the most relevant worker agent, often specialized in a particular domain like research, content synthesis, or evaluation.

CrewAI supports this pattern using the Process.hierarchical setup, where the orchestrator (as the manager agent) generates follow-up task specifications at runtime. This enables dynamic delegation and coordination without requiring the workflow to be rigidly structured up front. It's especially useful for use cases like multi-step research, document generation, or problem-solving workflows where the best structure only emerges after understanding the initial query.

Notebook: Research & Writing Delegation Agents

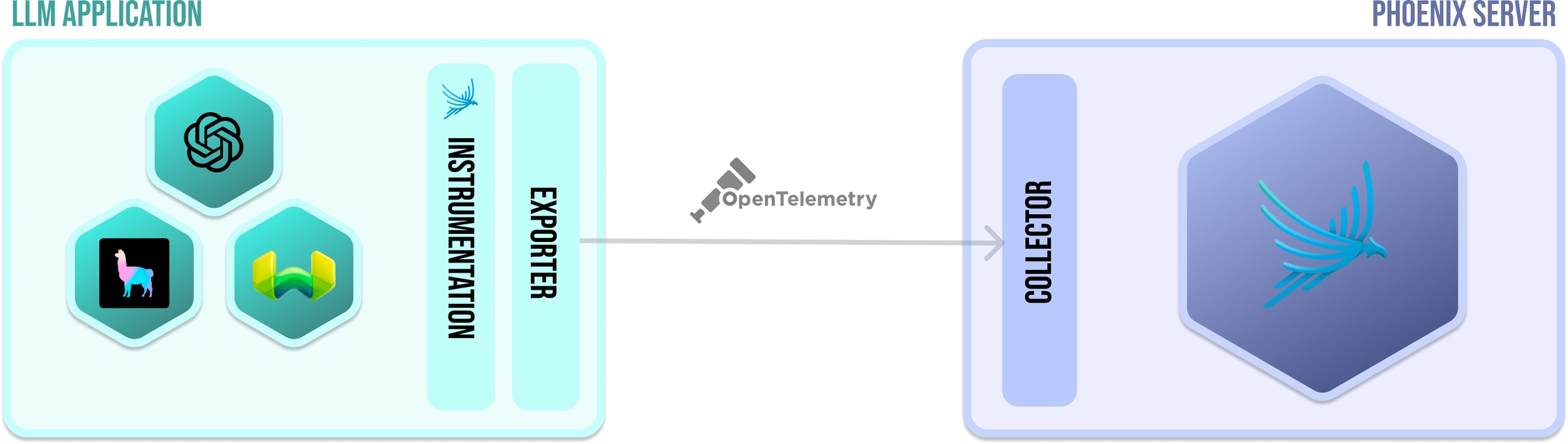

The components behind tracing

In order for an application to emit traces for analysis, the application must be instrumented. Your application can be manually instrumented or be automatically instrumented. With phoenix, there a set of plugins (instrumentors) that can be added to your application's startup process that perform auto-instrumentation. These plugins collect spans for your application and export them for collection and visualization. For phoenix, all the instrumentors are managed via a single repository called . The comprehensive list of instrumentors can be found in the how-to guide.

An exporter takes the spans created via instrumentation and exports them to a collector. In simple terms, it just sends the data to the Phoenix. When using Phoenix, most of this is completely done under the hood when you call instrument on an instrumentor.

The Phoenix server is a collector and a UI that helps you troubleshoot your application in real time. When you run or run phoenix (e.x. px.launch_app(), container), Phoenix starts receiving spans from any application(s) that is exporting spans to it.

OpenTelemetry Protocol (or OTLP for short) is the means by which traces arrive from your application to the Phoenix collector. Phoenix currently supports OTLP over HTTP.

Evaluating tasks performed by LLMs can be difficult due to their complexity and the diverse criteria involved. Traditional methods like rule-based assessment or similarity metrics (e.g., ROUGE, BLEU) often fall short when applied to the nuanced and varied outputs of LLMs.

For instance, an AI assistant’s answer to a question can be:

not grounded in context

repetitive, repetitive, repetitive

grammatically incorrect

excessively lengthy and characterized by an overabundance of words

incoherent

The list of criteria goes on. And even if we had a limited list, each of these would be hard to measure

To overcome this challenge, the concept of "LLM as a Judge" employs an LLM to evaluate another's output, combining human-like assessment with machine efficiency.

Here’s the step-by-step process for using an LLM as a judge:

Identify Evaluation Criteria - First, determine what you want to evaluate, be it hallucination, toxicity, accuracy, or another characteristic. See our for examples of what can be assessed.

Craft Your Evaluation Prompt - Write a prompt template that will guide the evaluation. This template should clearly define what variables are needed from both the initial prompt and the LLM's response to effectively assess the output.

Select an Evaluation LLM - Choose the most suitable LLM from our available options for conducting your specific evaluations.

Generate Evaluations and View Results - Execute the evaluations across your data. This process allows for comprehensive testing without the need for manual annotation, enabling you to iterate quickly and refine your LLM's prompts.

Using an LLM as a judge significantly enhances the scalability and efficiency of the evaluation process. By employing this method, you can run thousands of evaluations across curated data without the need for human annotation.

This capability will not only speed up the iteration process for refining your LLM's prompts but will also ensure that you can deploy your models to production with confidence.

Arize is the company that makes Phoenix. Phoenix is an open source LLM observability tool offered by Arize. It can be access in its Cloud form online, or self-hosted and run on your own machine or server.

"Arize" can also refer to Arize's enterprise platform, often called Arize AX, available on arize.com. Arize AX is the enterprise SaaS version of Phoenix that comes with additional features like Copilot, ML and CV support, HIPAA compliance, Security Reviews, a customer success team, and more. See of the two tools.

With SageMaker notebooks, phoenix leverages the to host the server under proxy/6006.Note, that phoenix will automatically try to detect that you are running in SageMaker but you can declare the notebook runtime via a parameter to launch_app or an environment variable

Learn about options to migrate your legacy Phoenix Cloud instance to the latest version

To move to the new Phoenix Cloud, simply with a different email address. From there, you can start using a new Phoenix instance immediately. Your existing projects in your old (legacy) account will remain intact and independent, ensuring a clean transition.

Since most users don’t use Phoenix Cloud for data storage, this straightforward approach works seamlessly for migrating to the latest version.

If you need to migrate data from the legacy version to the latest version, .

The easiest way to determine which version of Phoenix Cloud you’re using is by checking the URL in your browser:

The new Phoenix Cloud version will have a hostname structure like: app.arize.phoenix.com/s/[your-space-name]

If your Phoenix Cloud URL does not include /s/ followed by your space name, you are on the legacy version.

import os

os.environ["PHOENIX_NOTEBOOK_ENV"] = "sagemaker"If you are working on an API whose endpoints perform RAG, but would like the phoenix server not to be launched as another thread.

You can do this by configuring the following the environment variable PHOENIX_COLLECTOR_ENDPOINT to point to the server running in a different process or container.

LlamaTrace and Phoenix Cloud are the same tool. They are the hosted version of Phoenix provided on app.phoenix.arize.com.

NOT_PARSABLE errors often occur when LLM responses exceed the max_tokens limit or produce incomplete JSON. Here's how to fix it:

Increase max_tokens: Update the model configuration as follows:

pythonCopy codellm_judge_model = OpenAIModel(

api_key=getpass("Enter your OpenAI API key..."),

model="gpt-4o-2024-08-06",

temperature=0.2,

max_tokens=1000, # Increase token limit

)Update Phoenix: Use version ≥0.17.4, which removes token limits for OpenAI and increases defaults for other APIs.

Check Logs: Look for finish_reason="length" to confirm token limits caused the issue.

If the above doesn't work, it's possible the llm-as-a-judge output might not fit into the defined rails for that particular custom Phoenix eval. Double check the prompt output matches the rail expectations.

If you want to contribute to the cutting edge of LLM and ML Observability, you've come to the right place!

To get started, please check out the following:

We encourage you to start with an issue labeled with the tag good first issue on theGitHub issue board, to get familiar with our codebase as a first-time contributor.

To submit your code, fork the Phoenix repository, create a new branch on your fork, and open a Pull Request (PR) once your work is ready for review.

In the PR template, please describe the change, including the motivation/context, test coverage, and any other relevant information. Please note if the PR is a breaking change or if it is related to an open GitHub issue.

A Core reviewer will review your PR in around one business day and provide feedback on any changes it requires to be approved. Once approved and all the tests pass, the reviewer will click the Squash and merge button in Github 🥳.

Your PR is now merged into Phoenix! We’ll shout out your contribution in the release notes.

Agent Roles

Explicit role configuration gives flexibility, but poor design can cause overlap or miscommunication

State Management

Stateless by default. Developers must implement external state or context passing for continuity across tasks

Task Planning

Supports sequential and branching workflows, but all logic must be manually defined—no built-in planning

Tool Usage

Agents support tools via config. No automatic selection; all tool-to-agent mappings are manual

Termination Logic

No auto-termination handling. Developers must define explicit conditions to break recursive or looping behavior

Memory

No built-in memory layer. Integration with vector stores or databases must be handled externally

Benchmarking Chunk Size, K and Retrieval Approach

The advent of LLMs is causing a rethinking of the possible architectures of retrieval systems that have been around for decades.

The core use case for RAG (Retrieval Augmented Generation) is the connecting of an LLM to private data, empower an LLM to know your data and respond based on the private data you fit into the context window.

As teams are setting up their retrieval systems understanding performance and configuring the parameters around RAG (type of retrieval, chunk size, and K) is currently a guessing game for most teams.

The above picture shows the a typical retrieval architecture designed for RAG, where there is a vector DB, LLM and an optional Framework.

This section will go through a script that iterates through all possible parameterizations of setting up a retrieval system and use Evals to understand the trade offs.

This overview will run through the scripts in Phoenix for performance analysis of RAG setup:

The scripts above power the included notebook.

The typical flow of retrieval is a user query is embedded and used to search a vector store for chunks of relevant data.

The core issue of retrieval performance: The chunks returned might or might not be able to answer your main question. They might be semantically similar but not usable to create an answer the question!

The eval template is used to evaluate the relevance of each chunk of data. The Eval asks the main question of "Does the chunk of data contain relevant information to answer the question"?

The Retrieval Eval is used to analyze the performance of each chunk within the ordered list retrieved.

The Evals generated on each chunk can then be used to generate more traditional search and retreival metrics for the retrieval system. We highly recommend that teams at least look at traditional search and retrieval metrics such as:

MRR

Precision @ K

NDCG

These metrics have been used for years to help judge how well your search and retrieval system is returning the right documents to your context window.

These metrics can be used overall, by cluster (UMAP), or on individual decisions, making them very powerful to track down problems from the simplest to the most complex.

Retrieval Evals just gives an idea of what and how much of the "right" data is fed into the context window of your RAG, it does not give an indication if the final answer was correct.

The Q&A Evals work to give a user an idea of whether the overall system answer was correct. This is typically what the system designer cares the most about and is one of the most important metrics.

The above Eval shows how the query, chunks and answer are used to create an overall assessment of the entire system.

The above Q&A Eval shows how the Query, Chunk and Answer are used to generate a % incorrect for production evaluations.

The results from the runs will be available in the directory.

Underneath experiment_data there are two sets of metrics:

The first set of results removes the cases where there are 0 retrieved relevant documents. There are cases where some clients test sets have a large number of questions where the documents can not answer. This can skew the metrics a lot.

The second set of results is unfiltered and shows the raw metrics for every retrieval.

The above picture shows the results of benchmark sweeps across your retrieval system setup. The lower the percent the better the results. This is the Q&A Eval.

The LLM Evals library is designed to support the building of any custom Eval templates.

Follow the following steps to easily build your own Eval with Phoenix

To do that, you must identify what is the metric best suited for your use case. Can you use a pre-existing template or do you need to evaluate something unique to your use case?

Then, you need the golden dataset. This should be representative of the type of data you expect the LLM eval to see. The golden dataset should have the “ground truth” label so that we can measure performance of the LLM eval template. Often such labels come from human feedback.

Building such a dataset is laborious, but you can often find a standardized one for the most common use cases (as we did in the code above)

The Eval inferences are designed or easy benchmarking and pre-set downloadable test inferences. The inferences are pre-tested, many are hand crafted and designed for testing specific Eval tasks.

Then you need to decide which LLM you want to use for evaluation. This could be a different LLM from the one you are using for your application. For example, you may be using Llama for your application and GPT-4 for your eval. Often this choice is influenced by questions of cost and accuracy.

Now comes the core component that we are trying to benchmark and improve: the eval template.

You can adjust an existing template or build your own from scratch.

Be explicit about the following:

What is the input? In our example, it is the documents/context that was retrieved and the query from the user.

What are we asking? In our example, we’re asking the LLM to tell us if the document was relevant to the query

What are the possible output formats? In our example, it is binary relevant/irrelevant, but it can also be multi-class (e.g., fully relevant, partially relevant, not relevant).

In order to create a new template all that is needed is the setting of the input string to the Eval function.

The above template shows an example creation of an easy to use string template. The Phoenix Eval templates support both strings and objects.

The above example shows a use of the custom created template on the df dataframe.

You now need to run the eval across your golden dataset. Then you can generate metrics (overall accuracy, precision, recall, F1, etc.) to determine the benchmark. It is important to look at more than just overall accuracy. We’ll discuss that below in more detail.

Yes, you can use either of the two methods below.

Install pyngrok on the remote machine using the command pip install pyngrok.

on ngrok and verify your email. Find 'Your Authtoken' on the .

In jupyter notebook, after launching phoenix set its port number as the port parameter in the code below. Preferably use a default port for phoenix so that you won't have to set up ngrok tunnel every time for a new port, simply restarting phoenix will work on the same ngrok URL.

"Visit Site" using the newly printed public_url and ignore warnings, if any.

Ngrok free account does not allow more than 3 tunnels over a single ngrok agent session. Tackle this error by checking active URL tunnels using ngrok.get_tunnels() and close the required URL tunnel using ngrok.disconnect(public_url).

This assumes you have already set up ssh on both the local machine and the remote server.

If you are accessing a remote jupyter notebook from a local machine, you can also access the phoenix app by forwarding a local port to the remote server via ssh. In this particular case of using phoenix on a remote server, it is recommended that you use a default port for launching phoenix, say DEFAULT_PHOENIX_PORT.

Launch the phoenix app from jupyter notebook.

In a new terminal or command prompt, forward a local port of your choice from 49152 to 65535 (say 52362) using the command below. Remote user of the remote host must have sufficient port-forwarding/admin privileges.

If successful, visit to access phoenix locally.

If you are abruptly unable to access phoenix, check whether the ssh connection is still alive by inspecting the terminal. You can also try increasing the ssh timeout settings.

Simply run exit in the terminal/command prompt where you ran the port forwarding command.

There are two endpoints that matter in Phoenix:

Application Endpoint: The endpoint your Phoenix instance is running on

OTEL Tracing Endpoint: The endpoint through which your Phoenix instance receives OpenTelemetry traces

If you're accessing a Phoenix Cloud instance through our website, then your endpoint is available under the Hostname field of your Settings page.

If you're self-hosting Phoenix, then you choose the endpoint when you set up the app. The default value is http://localhost:6006

To set this endpoint, use the PHOENIX_COLLECTOR_ENDPOINT environment variable. This is used by the Phoenix client package to query traces, log annotations, and retrieve prompts.

If you're accessing a Phoenix Cloud instance through our website, then your endpoint is available under the Hostname field of your Settings page.

If you're self-hosting Phoenix, then you choose the endpoint when you set up the app. The default values are:

Using the GRPC protocol: http://localhost:6006/v1/traces

Using the HTTP protocol: http://localhost:4317

To set this endpoint, use the register(endpoint=YOUR ENDPOINT) function. This endpoint can also be set using environment variables. For more on the register function and other configuration options, .

import getpass

from pyngrok import ngrok, conf

print("Enter your authtoken, which can be copied from https://dashboard.ngrok.com/auth")

conf.get_default().auth_token = getpass.getpass()

port = 37689

# Open a ngrok tunnel to the HTTP server

public_url = ngrok.connect(port).public_url

print(" * ngrok tunnel \"{}\" -> \"http://127.0.0.1:{}\"".format(public_url, port))ssh -L 52362:localhost:<DEFAULT_PHOENIX_PORT> <REMOTE_USER>@<REMOTE_HOST>Evaluating multi-agent systems involves unique challenges compared to single-agent evaluations. This guide provides clear explanations of various architectures, strategies for effective evaluation, and additional considerations.

A multi-agent system consists of multiple agents, each using an LLM (Large Language Model) to control application flows. As systems grow, you may encounter challenges such as agents struggling with too many tools, overly complex contexts, or the need for specialized domain knowledge (e.g., planning, research, mathematics). Breaking down applications into multiple smaller, specialized agents often resolves these issues.

Modularity: Easier to develop, test, and maintain.

Specialization: Expert agents handle specific domains.

Control: Explicit control over agent communication.

Multi-agent systems can connect agents in several ways:

Network

Agents can communicate freely with each other, each deciding independently whom to contact next.

Assess communication efficiency, decision quality on agent selection, and coordination complexity.

Supervisor

Agents communicate exclusively with a single supervisor that makes all routing decisions.

Evaluate supervisor decision accuracy, efficiency of routing, and effectiveness in task management.

Supervisor (Tool-calling)

Supervisor uses an LLM to invoke agents represented as tools, making explicit tool calls with arguments.

Evaluate tool-calling accuracy, appropriateness of arguments passed, and supervisor decision quality.

Hierarchical

Systems with supervisors of supervisors, allowing complex, structured flows.

Evaluate communication efficiency, decision-making at each hierarchical level, and overall system coherence.

Custom Workflow

Agents communicate within predetermined subsets, combining deterministic and agent-driven decisions.

Evaluate workflow efficiency, clarity of communication paths, and effectiveness of the predetermined control flow.

There are a few different strategies for evaluating multi agent applications.

1. Agent Handoff Evaluation

When tasks transfer between agents, evaluate:

Appropriateness: Is the timing logical?

Information Transfer: Was context transferred effectively?

Timing: Optimal handoff moment.

2. System-Level Evaluation

Measure holistic performance:

End-to-End Task Completion

Efficiency: Number of interactions, processing speed

User Experience

3. Coordination Evaluation

Evaluate cooperative effectiveness:

Communication Quality

Conflict Resolution

Resource Management

Multi-agent systems introduce added complexity:

Complexity Management: Evaluate agents individually, in pairs, and system-wide.

Emergent Behaviors: Monitor for collective intelligence and unexpected interactions.

Evaluation Granularity:

Agent-level: Individual performance

Interaction-level: Agent interactions

System-level: Overall performance

User-level: End-user experience

Performance Metrics: Latency, throughput, scalability, reliability, operational cost

Adapt single-agent evaluation methods like tool-calling evaluations and planning assessments.

See our guide on agent evals and use our pre-built evals that you can leverage in Phoenix.

Focus evaluations on coordination efficiency, overall system efficiency, and emergent behaviors.

See our docs for creating your own custom evals in Phoenix.

Structure evaluations to match architecture:

Bottom-Up: From individual agents upward.

Top-Down: From system goals downward.

Hybrid: Combination for comprehensive coverage.

from phoenix.evals import download_benchmark_dataset

df = download_benchmark_dataset(

task="binary-hallucination-classification", dataset_name="halueval_qa_data"

)

df.head()MY_CUSTOM_TEMPLATE = '''

You are evaluating the positivity or negativity of the responses to questions.

[BEGIN DATA]

************

[Question]: {question}

************

[Response]: {response}

[END DATA]

Please focus on the tone of the response.

Your answer must be single word, either "positive" or "negative"

'''model = OpenAIModel(model_name="gpt-4",temperature=0.6)

positive_eval = llm_classify(

dataframe=df,

template= MY_CUSTOM_TEMPLATE,

model=model

)#Phoenix Evals support using either strings or objects as templates

MY_CUSTOM_TEMPLATE = " ..."

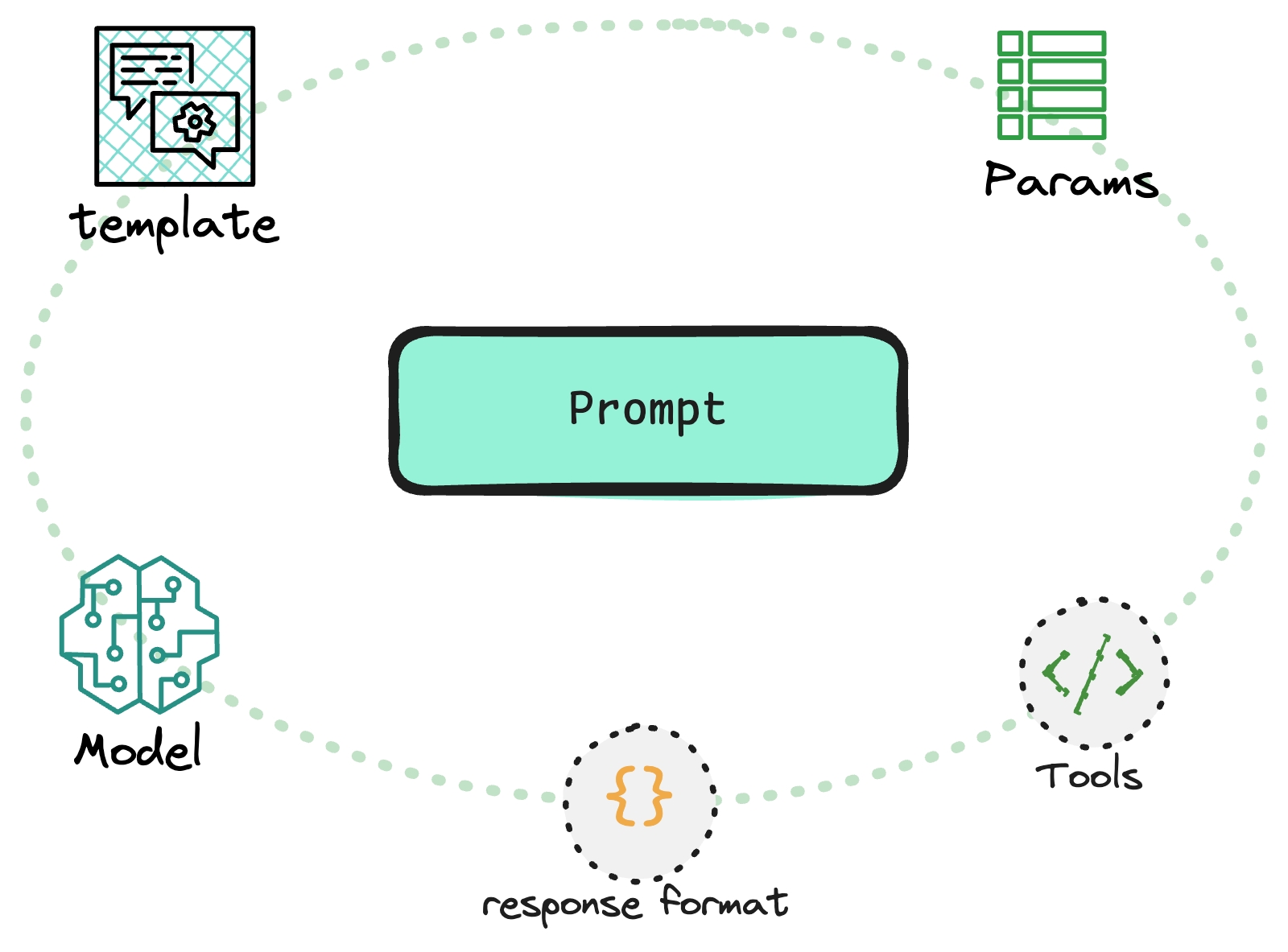

MY_CUSTOM_TEMPLATE = PromptTemplate("This is a test {prompt}")Prompts often times refer to the content of how you "prompt" a LLM, e.g. the "text" that you send to a model like OpenAI's gpt-4. Within Phoenix we expand this definition to be everything that's needed to prompt:

The prompt template of the messages to send to a completion endpoint

The invocation parameters (temperature, frequency penalty, etc.)

The tools made accessible to the LLM (e.g. weather API)

The response format (sometimes called the output schema) used for when you have JSON mode enabled.

This expanded definition of a prompt lets you more deterministically invoke LLMs with confidence as everything is snapshotted for you to use within your application.

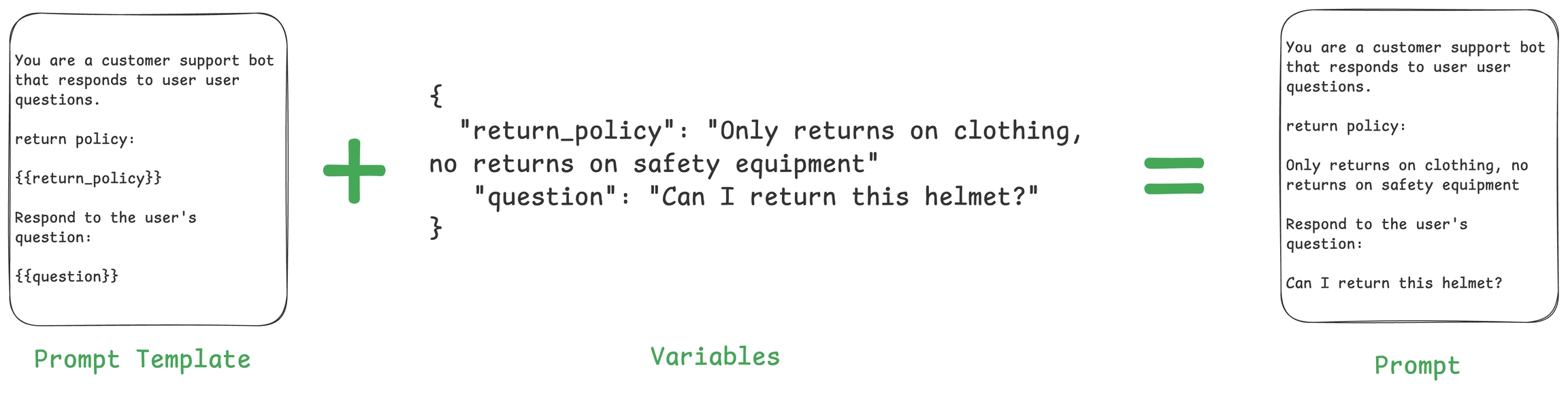

Although the terms prompt and prompt template get used interchangeably, it's important to know the difference.

Prompts refer to the message(s) that are passed into the language model.

Prompt Templates refer to a way of formatting information to get the prompt to hold the information you want (such as context and examples). Prompt templates can include placeholders (variables) for things such as examples (e.g. few-shot), outside context (RAG), or any other external data that is needed.

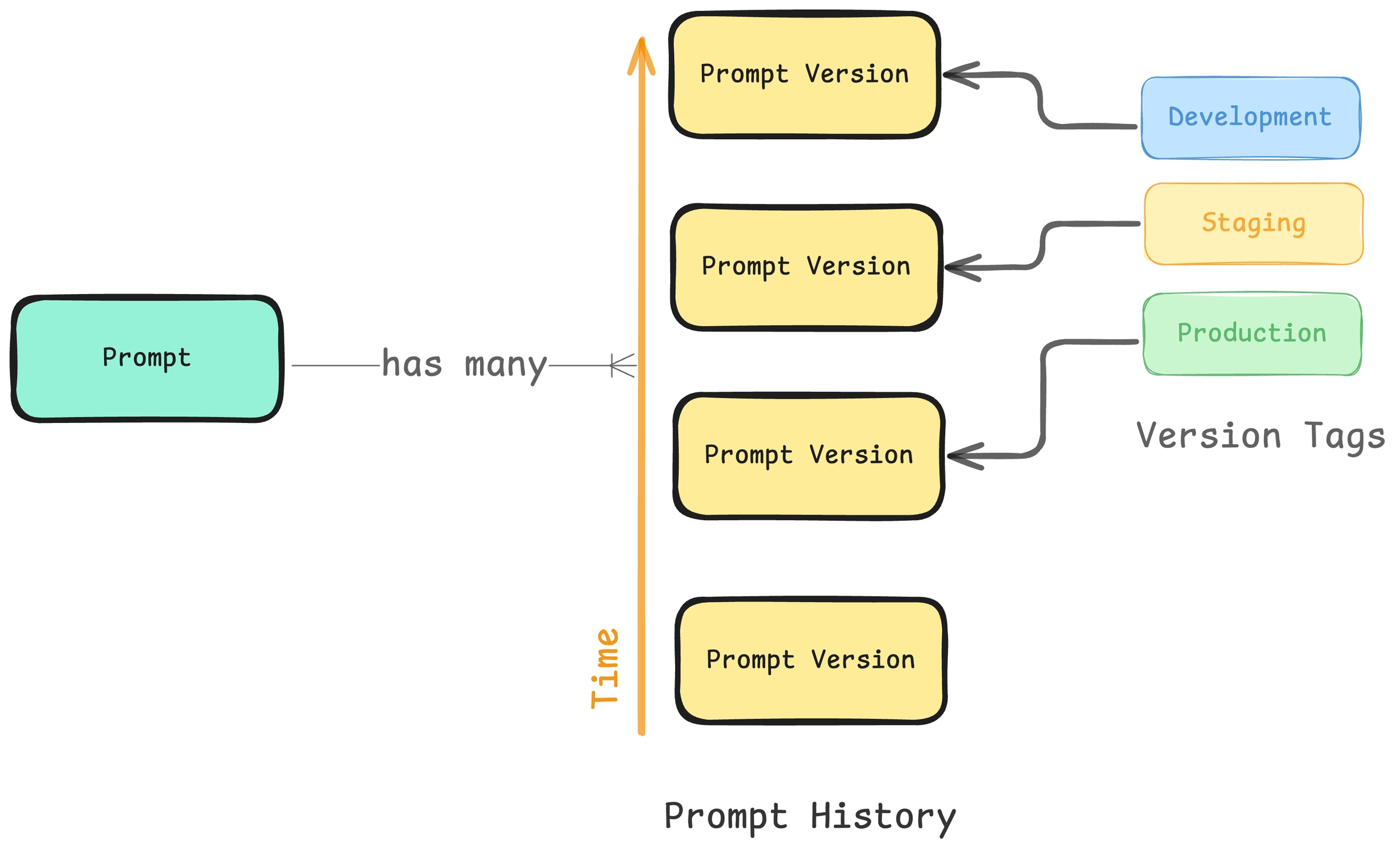

Every time you save a prompt within Phoenix, a snapshot of the prompt is saved as a prompt version. Phoenix does this so that you not only can view the changes to a prompt over time but also so that you can build confidence about a specific prompt version before using it within your application. With every prompt version phoenix tracks the author of the prompt and the date at which the version was saved.

Similar to the way in which you can track changes to your code via git shas, Phoenix tracks each change to your prompt with a prompt_id.

Imagine you’re working on a AI project, and you want to label specific versions of your prompts so you can control when and where they get deployed. This is where prompt version tags come in.

A prompt version tag is like a sticky note you put on a specific version of your prompt to mark it as important. Once tagged, that version won’t change, making it easy to reference later.

When building applications, different environments are often used for different stages of readiness before going live, for example:

Development – Where new features are built.

Staging – Where testing happens.

Production – The live system that users interact with.

Tagging prompt versions with environment tags can enable building, testing, and deploying prompts in the same way as an application—ensuring that prompt changes can be systematically tested and deployed.

In addition to environment tags, custom Git tags allow teams to label code versions in a way that fits their specific workflow (`v0.0.1`). These tags can be used to signal different stages of deployment, feature readiness, or any other meaningful status.

Prompt version tags work exactly the same way as git tags.

Prompts can be formatted to include any attributes from spans or datasets. These attributes can be added as F-Strings or using Mustache formatting.

F-strings should be formatted with single {s:

{question}{% hint style="info" %} To escape a { when using F-string, add a second { in front of it, e.g., {{escaped}} {not-escaped}. Escaping variables will remove them from inputs in the Playground. {% endhint %}

Mustache should be formatted with double {{s:

{{question}}{% hint style="info" %} We recommend using Mustache where possible, since it supports nested attributes, e.g. attributes.input.value, more seamlessly {% endhint %}

Tools allow LLMs to interact with the external environment. This can allow LLMs to interface with your application in more controlled ways. Given a prompt and some tools to choose from an LLM may choose to use some (or one) tools or not. Many LLM API's also expose a tool choice parameter which allow you to constrain how and which tools are selected.

Here is an example of what a tool would look like for the weather API using OpenAI.

{

"type": "function",

"function": {

"name": "get_current_weather",

"description": "Get the current weather in a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "The city and state, e.g. San Francisco, CA",

},

},

"required": ["location"],

}

}

}Some LLMs support structured responses, known as response format or output schema, allowing you to specify an exact schema for the model’s output.

Structured Outputs ensure the model consistently generates responses that adhere to a defined JSON Schema, preventing issues like missing keys or invalid values.

Reliable type-safety: Eliminates the need to validate or retry incorrectly formatted responses.

Explicit refusals: Enables programmatic detection of safety-based refusals.

Simpler prompting: Reduces reliance on strongly worded prompts for consistent formatting.

For more details, check out this OpenAI guide.

Use Phoenix to trace and evaluate agent frameworks built using Langgraph

This guide explains key LangGraph concepts, discusses design considerations, and walks through common architectural patterns like orchestrator-worker, evaluators, and routing. Each pattern includes a brief explanation and links to runnable Python notebooks.

LangGraph allows you to build LLM-powered applications using a graph of steps (called "nodes") and data (called "state"). Here's what you need to know to understand and customize LangGraph workflows:

A TypedDict that stores all information passed between nodes. Think of it as the memory of your workflow. Each node can read from and write to the state.

Nodes are units of computation. Most often these are functions that accept a State input and return a partial update to it. Nodes can do anything: call LLMs, trigger tools, perform calculations, or prompt users.

Directed connections that define the order in which nodes are called. LangGraph supports linear, conditional, and cyclical edges, which allows for building loops, branches, and recovery flows.

A Python function that examines the current state and returns the name of the next node to call. This allows your application to respond dynamically to LLM outputs, tool results, or even human input.

A way to dynamically launch multiple workers (nodes or subgraphs) in parallel, each with their own state. Often used in orchestrator-worker patterns where the orchestrator doesn't know how many tasks there will be ahead of time.

LangGraph enables complex multi-agent orchestration using a Supervisor node that decides how to delegate tasks among a team of agents. Each agent can have its own tools, prompt structure, and output format. The Supervisor coordinates routing, manages retries, and ensures loop control.

LangGraph supports built-in persistence using checkpointing. Each execution step saves state to a database (in-memory, SQLite, or Postgres). This allows for:

Multi-turn conversations (memory)

Rewinding to past checkpoints (time travel)

Human-in-the-loop workflows (pause + resume)

LangGraph improves on LangChain by supporting more flexible and complex workflows. Here’s what to keep in mind when designing:

A linear sequence of prompt steps, where the output of one becomes the input to the next. This workflow is optimal when the task can be simply broken down into concrete subtasks.

Use case: Multistep reasoning, query rewriting, or building up answers gradually.

📓

Runs multiple LLMs in parallel — either by splitting tasks (sectioning) or getting multiple opinions (voting).

Use case: Combining diverse outputs, evaluating models from different angles, or running safety checks.

With the Send API, LangGraph lets you:

Launch multiple safety evaluators in parallel

Compare multiple generated hypotheses side-by-side

Run multi-agent voting workflows

This improves reliability and reduces bottlenecks in linear pipelines.

📓

Routes an input to the most appropriate follow-up node based on its type or intent.

Use case: Customer support bots, intent classification, or model selection.

LangGraph routers enable domain-specific delegation — e.g., classify an incoming query as "billing", "technical support", or "FAQ", and send it to a specialized sub-agent. Each route can have its own tools, memory, and context. Use structured output with a routing schema to make classification more reliable.

📓

One LLM generates content, another LLM evaluates it, and the loop repeats until the evaluation passes. LangGraph allows feedback to modify the state, making each round better than the last.

Use case: Improving code, jokes, summaries, or any generative output with measurable quality.

📓

An orchestrator node dynamically plans subtasks and delegates each to a worker LLM. Results are then combined into a final output.

Use case: Writing research papers, refactoring code, or composing modular documents.

LangGraph’s Send API lets the orchestrator fork off tasks (e.g., subsections of a paper) and gather them into completed_sections. This is especially useful when the number of subtasks isn’t known in advance.

You can also incorporate agents like PDF_Reader or a WebSearcher, and the orchestrator can choose when to route to these workers.

⚠️ Caution: Feedback loops or improper edge handling can cause workers to echo each other or create infinite loops. Use strict conditional routing to avoid this.

📓

SmolAgents is a lightweight Python library for composing tool-using, task-oriented agents. This guide outlines common agent workflows we've implemented—covering routing, evaluation loops, task orchestration, and parallel execution. For each pattern, we include an overview, a reference notebook, and guidance on how to evaluate agent quality.

While the API is minimal—centered on Agent, Task, and Tool—there are important tradeoffs and design constraints to be aware of.

This workflow breaks a task into smaller steps, where the output of one agent becomes the input to another. It’s useful when a single prompt can’t reliably handle the full complexity or when you want clarity in intermediate reasoning.

Notebook: The agent first extracts keywords from a resume, then summarizes what those keywords suggest.

How to evaluate: Check whether each step performs its function correctly and whether the final result meaningfully depends on the intermediate output (e.g., do summaries reflect the extracted keywords?)

Check if the intermediate step (e.g. keyword extraction) is meaningful and accurate

Ensure the final output reflects or builds on the intermediate output

Compare chained vs. single-step prompting to see if chaining improves quality or structure

Routing is used to send inputs to the appropriate downstream agent or workflow based on their content. The routing logic is handled by a dedicated agent, often using lightweight classification.

Notebook: The agent classifies candidate profiles into Software, Product, or Design categories, then hands them off to the appropriate evaluation pipeline.

How to evaluate: Compare the routing decision to human judgment or labeled examples (e.g., did the router choose the right department for a given candidate?)

Compare routing decisions to human-labeled ground truth or expectations

Track precision/recall if framed as a classification task

Monitor for edge cases and routing errors (e.g., ambiguous or mixed-signal profiles)

This pattern uses two agents in a loop: one generates a solution, the other critiques it. The generator revises until the evaluator accepts the result or a retry limit is reached. It’s useful when quality varies across generations.

Notebook: An agent writes a candidate rejection email. If the evaluator agent finds the tone or feedback lacking, it asks for a revision.

How to evaluate: Track how many iterations are needed to converge and whether final outputs meet predefined criteria (e.g., is the message respectful, clear, and specific?)

Measure how many iterations are needed to reach an acceptable result

Evaluate final output quality against criteria like tone, clarity, and specificity

Compare the evaluator’s judgment to human reviewers to calibrate reliability

In this approach, a central agent coordinates multiple agents, each with a specialized role. It’s helpful when tasks can be broken down and assigned to domain-specific workers.

Notebook: The orchestrator delegates resume review, culture fit assessment, and decision-making to different agents, then composes a final recommendation.

How to evaluate: Assess consistency between subtasks and whether the final output reflects the combined evaluations (e.g., does the final recommendation align with the inputs from each worker agent?)

Ensure each worker agent completes its role accurately and in isolation

Check if the orchestrator integrates worker outputs into a consistent final result

Look for agreement or contradictions between components (e.g., technical fit vs. recommendation)

When you need to process many inputs using the same logic, parallel execution improves speed and resource efficiency. Agents can be launched concurrently without changing their individual behavior.

Notebook:

Candidate reviews are distributed using asyncio, enabling faster batch processing without compromising output quality.

How to evaluate: Ensure results remain consistent with sequential runs and monitor for improvements in latency and throughput (e.g., are profiles processed correctly and faster when run in parallel?)

Confirm that outputs are consistent with those from a sequential execution

Track total latency and per-task runtime to assess parallel speedup

Watch for race conditions, dropped inputs, or silent failures in concurrency

To log traces, you must instrument your application either manually or automatically. To log to a remote instance of Phoenix, you must also configure the host and port where your traces will be sent.

When running Phoenix locally on the default port of 6006, no additional configuration is necessary.

If you are running a remote instance of Phoenix, you can configure your instrumentation to log to that instance using the PHOENIX_HOST and PHOENIX_PORT environment variables.

Alternatively, you can use the PHOENIX_COLLECTOR_ENDPOINT environment variable.

Tracing can be paused temporarily or disabled permanently.

Pause tracing using context manager

If there is a section of your code for which tracing is not desired, e.g. the document chunking process, it can be put inside the suppress_tracing context manager as shown below.

Uninstrument the auto-instrumentors permanently

Calling .uninstrument() on the auto-instrumentors will remove tracing permanently. Below is the examples for LangChain, LlamaIndex and OpenAI, respectively.

To get token counts when streaming, install openai>=1.26 and set stream_options={"include_usage": True} when calling create. Below is an example Python code snippet. For more info, see .

If you have customized a LangChain component (say a retriever), you might not get tracing for that component without some additional steps. Internally, instrumentation relies on components to inherit from LangChain base classes for the traces to show up. Below is an example of how to inherit from LanChain base classes to make a and to make traces show up.

Phoenix offers key modules to measure the quality of generated results as well as modules to measure retrieval quality.

Response Evaluation: Does the response match the retrieved context? Does it also match the query?

Retrieval Evaluation: Are the retrieved sources relevant to the query?

Evaluation of generated results can be challenging. Unlike traditional ML, the predicted results are not numeric or categorical, making it hard to define quantitative metrics for this problem.

Phoenix offers , a module designed to measure the quality of results. This module uses a "gold" LLM (e.g. GPT-4) to decide whether the generated answer is correct in a variety of ways. Note that many of these evaluation criteria DO NOT require ground-truth labels. Evaluation can be done simply with a combination of the input (query), output (response), and context.

LLM Evals supports the following response evaluation criteria:

QA Correctness - Whether a question was correctly answered by the system based on the retrieved data. In contrast to retrieval Evals that are checks on chunks of data returned, this check is a system level check of a correct Q&A.

Hallucinations - Designed to detect LLM hallucinations relative to retrieved context

Toxicity - Identify if the AI response is racist, biased, or toxic

Response evaluations are a critical first step to figuring out whether your LLM App is running correctly. Response evaluations can pinpoint specific executions (a.k.a. traces) that are performing badly and can be aggregated up so that you can track how your application is running as a whole.

Phoenix also provides evaluation of retrieval independently.

The concept of retrieval evaluation is not new; given a set of relevance scores for a set of retrieved documents, we can evaluate retrievers using retrieval metrics like precision, NDCG, hit rate and more.

LLM Evals supports the following retrieval evaluation criteria:

Relevance - Evaluates whether a retrieved document chunk contains an answer to the query.

Retrieval is possibly the most important step in any LLM application as poor and/or incorrect retrieval can be the cause of bad response generation. If your application uses RAG to power an LLM, retrieval evals can help you identify the cause of hallucinations and incorrect answers.

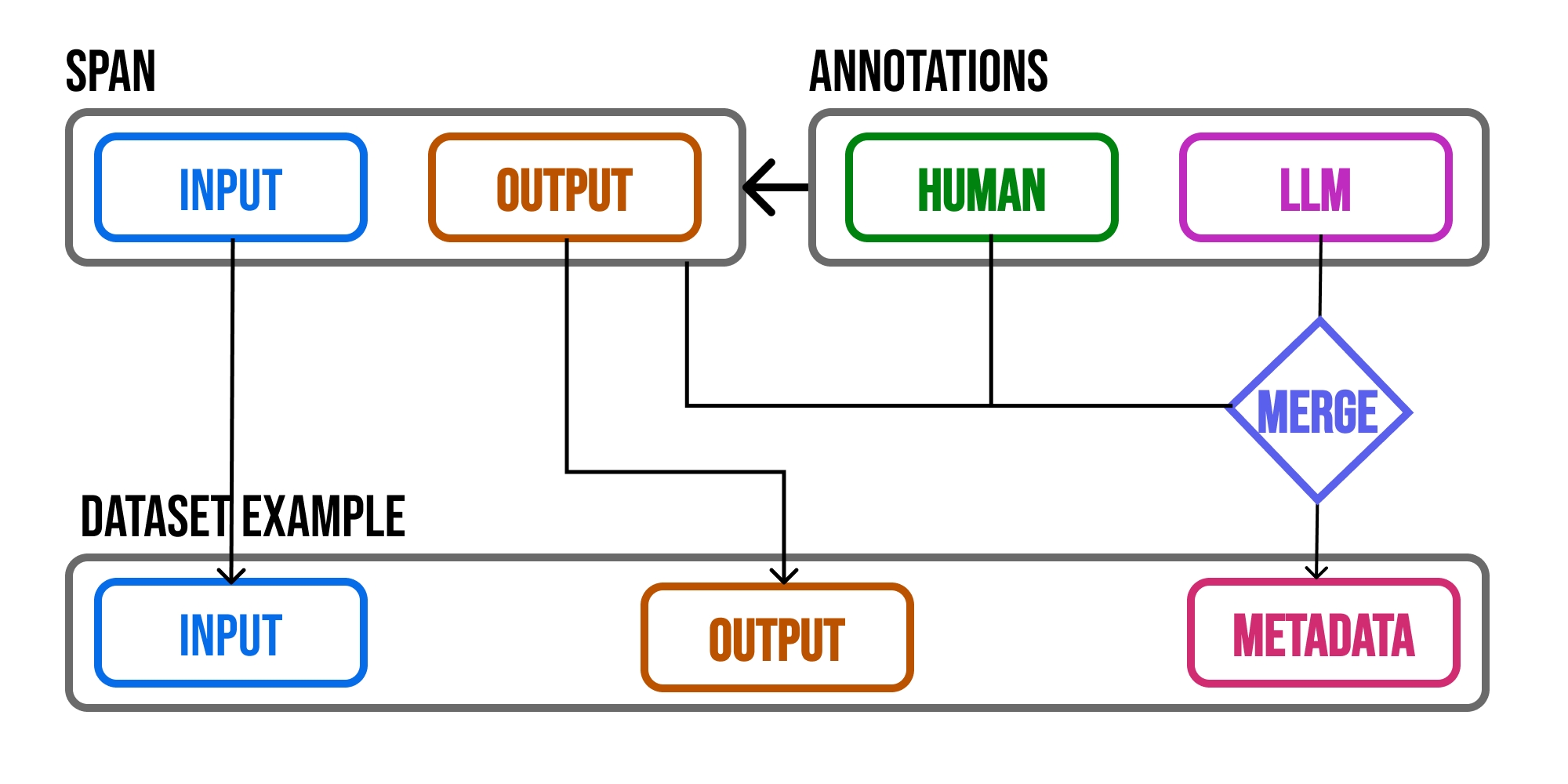

With Phoenix's LLM Evals, evaluation results (or just Evaluations for short) is data consisting of 3 main columns:

label: str [optional] - a classification label for the evaluation (e.g. "hallucinated" vs "factual"). Can be used to calculate percentages (e.g. percent hallucinated) and can be used to filter down your data (e.g. Evals["Hallucinations"].label == "hallucinated")

score: number [optional] - a numeric score for the evaluation (e.g. 1 for good, 0 for bad). Scores are great way to sort your data to surface poorly performing examples and can be used to filter your data by a threshold.

explanation: str [optional] - the reasoning for why the evaluation label or score was given. In the case of LLM evals, this is the evaluation model's reasoning. While explanations are optional, they can be extremely useful when trying to understand problematic areas of your application.

Let's take a look at an example list of Q&A relevance evaluations:

These three columns combined can drive any type of evaluation you can imagine. label provides a way to classify responses, score provides a way to assign a numeric assessment, and explanation gives you a way to get qualitative feedback.

With Phoenix, evaluations can be "attached" to the spans and documents collected. In order to facilitate this, Phoenix supports the following steps.

Querying and downloading data - query the spans collected by phoenix and materialize them into DataFrames to be used for evaluation (e.g. question and answer data, documents data).

Running Evaluations - the data queried in step 1 can be fed into LLM Evals to produce evaluation results.

Logging Evaluations - the evaluations performed in the above step can be logged back to Phoenix to be attached to spans and documents for evaluating responses and retrieval. See here on how to log evaluations to Phoenix.

Sorting and Filtering by Evaluation - once the evaluations have been logged back to Phoenix, the spans become instantly sortable and filterable by the evaluation values that you attached to the spans. (An example of an evaluation filter would be Eval["hallucination"].label == "hallucinated")

By following the above steps, you will have a full end-to-end flow for troubleshooting, evaluating, and root-causing an LLM application. By using LLM Evals in conjunction with Traces, you will be able to surface up problematic queries, get an explanation as to why the generation is problematic (e.g. hallucinated because ...), and be able to identify which step of your generative app requires improvement (e.g. did the LLM hallucinate or was the LLM fed bad context?).

For a full tutorial on LLM Ops, check out our tutorial below.

Possibly the most common use-case for creating a LLM application is to connect an LLM to proprietary data such as enterprise documents or video transcriptions. Applications such as these often times are built on top of LLM frameworks such as or , which have first-class support for vector store retrievers. Vector Stores enable teams to connect their own data to LLMs. A common application is chatbots looking across a company's knowledge base/context to answer specific questions.

There are varying degrees of how we can evaluate retrieval systems.

Step 1: First we care if the chatbot is correctly answering the user's questions. Are there certain types of questions the chatbot gets wrong more often?

Step 2: Once we know there's an issue, then we need metrics to trace where specifically did it go wrong. Is the issue with retrieval? Are the documents that the system retrieves irrelevant?

Step 3: If retrieval is not the issue, we should check if we even have the right documents to answer the question.

Visualize the chain of the traces and spans for a Q&A chatbot use case. You can click into specific spans.

When clicking into the retrieval span, you can see the relevance score for each document. This can surface irrelevant context.

Phoenix surfaces up clusters of similar queries that have poor feedback.

Phoenix can help uncover when irrelevant context is being retrieved using the LLM Evals for Relevance. You can look at a cluster's aggregate relevance metric with precision @k, NDCG, MRR, etc to identify where to improve. You can also look at a single prompt/response pair and see the relevance of documents.

Phoenix can help you identify if there is context that is missing from your knowledge base. By visualizing query density, you can understand what topics you need to add additional documentation for in order to improve your chatbots responses.

By setting the "primary" dataset as the user queries, and the "corpus" dataset as the context I have in my vector store, I can see if there are clusters of user query embeddings that have no nearby context embeddings, as seen in the example below.

Found a problematic cluster you want to dig into, but don't want to manually sift through all of the prompts and responses? Ask chatGPT to help you understand the make up of the cluster. .

Looking for code to get started? Go to our Quickstart guide for Search and Retrieval.

Cyclic workflows: LangGraph supports loops, retries, and iterative workflows that would be cumbersome in LangChain.

Debugging complexity: Deep graphs and multi-agent networks can be difficult to trace. Use Arize AX or Phoenix!

Fine-grained control: Customize prompts, tools, state updates, and edge logic for each node.

Token bloat: Cycles and retries can accumulate state and inflate token usage.

Visualize: Graph visualization makes it easier to follow logic flows and complex routing.

Requires upfront design: Graphs must be statically defined before execution. No dynamic graph construction mid-run.

Supports multi-agent coordination: Easily create agent networks with Supervisor and worker roles.

Supervisor misrouting: If not carefully designed, supervisors may loop unnecessarily or reroute outputs to the wrong agent.

from phoenix.trace import suppress_tracing

with suppress_tracing():

# Code running inside this block doesn't generate traces.

# For example, running LLM evals here won't generate additional traces.

...

# Tracing will resume outside the block.

...LangChainInstrumentor().uninstrument()

LlamaIndexInstrumentor().uninstrument()

OpenAIInstrumentor().uninstrument()

# etc.response = openai.OpenAI().chat.completions.create(

model="gpt-3.5-turbo",

messages=[{"role": "user", "content": "Write a haiku."}],

max_tokens=20,

stream=True,

stream_options={"include_usage": True},

)from typing import List

from langchain_core.callbacks import CallbackManagerForRetrieverRun

from langchain_core.retrievers import BaseRetriever, Document

from openinference.instrumentation.langchain import LangChainInstrumentor

from opentelemetry import trace as trace_api

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

from opentelemetry.sdk import trace as trace_sdk

from opentelemetry.sdk.trace.export import SimpleSpanProcessor

PHOENIX_COLLECTOR_ENDPOINT = "http://127.0.0.1:6006/v1/traces"

tracer_provider = trace_sdk.TracerProvider()

trace_api.set_tracer_provider(tracer_provider)

tracer_provider.add_span_processor(SimpleSpanProcessor(OTLPSpanExporter(endpoint)))

LangChainInstrumentor().instrument()

class CustomRetriever(BaseRetriever):

"""

This example is taken from langchain docs.

https://python.langchain.com/v0.1/docs/modules/data_connection/retrievers/custom_retriever/

A custom retriever that contains the top k documents that contain the user query.

This retriever only implements the sync method _get_relevant_documents.

If the retriever were to involve file access or network access, it could benefit

from a native async implementation of `_aget_relevant_documents`.

As usual, with Runnables, there's a default async implementation that's provided

that delegates to the sync implementation running on another thread.

"""

k: int

"""Number of top results to return"""

def _get_relevant_documents(

self, query: str, *, run_manager: CallbackManagerForRetrieverRun

) -> List[Document]:

"""Sync implementations for retriever."""

matching_documents: List[Document] = []

# Custom logic to find the top k documents that contain the query

for index in range(self.k):

matching_documents.append(Document(page_content=f"dummy content at {index}", score=1.0))

return matching_documents

retriever = CustomRetriever(k=3)

if __name__ == "__main__":

documents = retriever.invoke("what is the meaning of life?")import phoenix as px

from phoenix.trace import LangChainInstrumentor

px.launch_app()

LangChainInstrumentor().instrument()

# run your LangChain applicationimport os

from phoenix.trace import LangChainInstrumentor

# assume phoenix is running at 162.159.135.42:6007

os.environ["PHOENIX_HOST"] = "162.159.135.42"

os.environ["PHOENIX_PORT"] = "6007"

LangChainInstrumentor().instrument() # logs to http://162.159.135.42:6007

# run your LangChain applicationimport os

from phoenix.trace import LangChainInstrumentor

# assume phoenix is running at 162.159.135.42:6007

os.environ["PHOENIX_COLLECTOR_ENDPOINT"] = "162.159.135.42:6007"

LangChainInstrumentor().instrument() # logs to http://162.159.135.42:6007

# run your LangChain applicationDatasets are integral to evaluation and experimentation. They are collections of examples that provide the inputs and, optionally, expected reference outputs for assessing your application. Each example within a dataset represents a single data point, consisting of an inputs dictionary, an optional output dictionary, and an optional metadata dictionary. The optional output dictionary often contains the the expected LLM application output for the given input.

Datasets allow you to collect data from production, staging, evaluations, and even manually. The examples collected are then used to run experiments and evaluations to track improvements.

Use datasets to:

Store evaluation test cases for your eval script instead of managing large JSONL or CSV files

Capture generations to assess quality manually or using LLM-graded evals

Store user reviewed generations to find new test cases

With Phoenix, datasets are:

Integrated. Datasets are integrated with the platform, so you can add production spans to datasets, use datasets to run experiments, and use metadata to track different segments and use-cases.

Versioned. Every insert, update, and delete is versioned, so you can pin experiments and evaluations to a specific version of a dataset and track changes over time.

There are various ways to get started with datasets:

Manually Curated Examples

This is how we recommend you start. From building your application, you probably have an idea of what types of inputs you expect your application to be able to handle, and what "good" responses look like. You probably want to cover a few different common edge cases or situations you can imagine. Even 20 high quality, manually curated examples can go a long way.

Historical Logs

Once you ship an application, you start gleaning valuable information: how users are actually using it. This information can be valuable to capture and store in datasets. This allows you to test against specific use cases as you iterate on your application.

If your application is going well, you will likely get a lot of usage. How can you determine which datapoints are valuable to add? There are a few heuristics you can follow. If possible, try to collect end user feedback. You can then see which datapoints got negative feedback. That is super valuable! These are spots where your application did not perform well. You should add these to your dataset to test against in the future. You can also use other heuristics to identify interesting datapoints - for example, runs that took a long time to complete could be interesting to analyze and add to a dataset.

Synthetic Data

Once you have a few examples, you can try to artificially generate examples to get a lot of datapoints quickly. It's generally advised to have a few good handcrafted examples before this step, as the synthetic data will often resemble the source examples in some way.

While Phoenix doesn't have dataset types, conceptually you can contain:

Key-Value Pairs:

Inputs and outputs are arbitrary key-value pairs.

This dataset type is ideal for evaluating prompts, functions, and agents that require multiple inputs or generate multiple outputs.

If you have a RAG prompt template such as:

Given the context information and not prior knowledge, answer the query.

---------------------

{context}

---------------------

Query: {query}

Answer: Your dataset might look like:

{

"query": "What is Paul Graham known for?",

"context": "Paul Graham is an investor, entrepreneur, and computer scientist known for..."

}

{

"answer": "Paul Graham is known for co-founding Y Combinator, for his writing, and for his work on the Lisp programming language." }

LLM inputs and outputs:

Simply capture the input and output as a single string to test the completion of an LLM.

The "inputs" dictionary contains a single "input" key mapped to the prompt string.

The "outputs" dictionary contains a single "output" key mapped to the corresponding response string.

{

"input": "do you have to have two license plates in ontario" }

{

"output": "true"

}

{

"input": "are black beans the same as turtle beans" }

{ "output": "true" }

Messages or chat:

This type of dataset is designed for evaluating LLM structured messages as inputs and outputs.

The "inputs" dictionary contains a "messages" key mapped to a list of serialized chat messages.

The "outputs" dictionary contains a "messages" key mapped to a list of serialized chat messages.

This type of data is useful for evaluating conversational AI systems or chatbots.

{ "messages": [{ "role": "system", "content": "You are an expert SQL..."}] }

{ "messages": [{ "role": "assistant", "content": "select * from users"}] }

{ "messages": [{ "role": "system", "content": "You are a helpful..."}] }

{ "messages": [{ "role": "assistant", "content": "I don't know the answer to that"}] }

Depending on the type of contents of a given dataset, you might consider the dataset be a certain type.

A dataset that contains the inputs and the ideal "golden" output is often times is referred to as a Golden Dataset. These datasets are hand-labeled dataset and are used in evaluating the performance of LLMs or prompt templates. T.A golden dataset could look something like

Paris is the capital of France

True

Canada borders the United States

True

The native language of Japan is English

False

API centered on Agent, Task, and Tool

Tools are just Python functions decorated with @tool. There’s no centralized registry or schema enforcement, so developers must define conventions and structure on their own.

Provides flexibility for orchestration

No retry mechanism or built-in workflow engine