ML Model Monitoring that Scales

Monitor your machine learning model performance with Arize AI

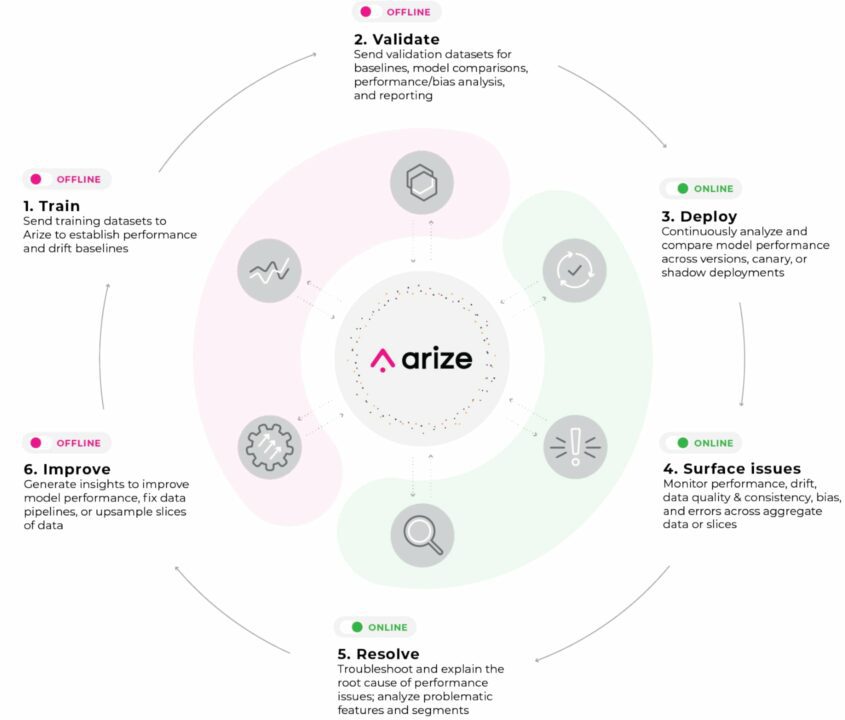

Keep your models in top shape by detecting model issues with Arize AI. Implement model monitoring as part of your overall ML observability solution to surface and resolve issues and improve your models in production. Model monitoring with Arize goes beyond a red or green status light: it lets ML practitioners drill down into model issues, explain them, and find their root cause, improving overall AI outcomes.

Arize AI monitors include:

Daily or hourly checks on model performance metrics, such as keeping accuracy above 80% or tracking RMSE and MAPE, with easy workflows to troubleshoot and visualize the root cause of performance problems.

Distribution comparisons of numeric or categorical features, predictions, and actuals, triggered automatically when drift exceeds a specified threshold to alert teams when drift is affecting the model’s overall performance.

Real-time data quality checks on features, predictions, and actuals that track key metrics such as the percentage of empty values, data type mismatches, and cardinality shifts.

Model monitoring as part of a comprehensive ML observability approach: automatically detect model issues, diagnose hard-to-find problems, and improve your model’s performance in production using explainability metrics, clear visualizations, and a holistic view of the model’s data across all environments.
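The three kinds of checks listed above reduce to fairly simple computations. The sketch below is a plain-Python illustration of those computations, not Arize's SDK or API, and every function name in it is hypothetical. The 80% accuracy floor comes from the text. The drift metric (Population Stability Index, PSI) and its 0.2 threshold are assumptions: PSI is one common drift measure, and the text does not say which metric or default threshold Arize uses.

```python
import math
from collections import Counter
from typing import Dict, List, Optional, Sequence

ACCURACY_MIN = 0.80  # from the "accuracy above 80%" example in the text
PSI_MAX = 0.2  # ASSUMPTION: common rule-of-thumb drift threshold, not an Arize default


def accuracy(preds: Sequence[str], actuals: Sequence[str]) -> float:
    """Fraction of predictions that match the actual label."""
    return sum(p == a for p, a in zip(preds, actuals)) / len(actuals)


def rmse(preds: Sequence[float], actuals: Sequence[float]) -> float:
    """Root mean squared error for a regression model."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(preds, actuals)) / len(actuals))


def mape(preds: Sequence[float], actuals: Sequence[float]) -> float:
    """Mean absolute percentage error in percent; rows with actual == 0 are skipped."""
    pairs = [(p, a) for p, a in zip(preds, actuals) if a != 0]
    return 100 * sum(abs((a - p) / a) for p, a in pairs) / len(pairs)


def psi(baseline: Sequence[str], production: Sequence[str], eps: float = 1e-6) -> float:
    """Population Stability Index between two categorical samples (0.0 = identical)."""
    base, prod = Counter(baseline), Counter(production)
    total = 0.0
    for cat in set(base) | set(prod):
        # Clamp empty bins to eps so the log term stays finite.
        b = max(base[cat] / len(baseline), eps)
        q = max(prod[cat] / len(production), eps)
        total += (q - b) * math.log(q / b)
    return total


def data_quality(values: Sequence[Optional[object]], expected_type: type) -> Dict[str, float]:
    """Percent empty, type-mismatch count, and cardinality for one feature column."""
    present = [v for v in values if v is not None and v != ""]
    return {
        "pct_empty": 100 * (len(values) - len(present)) / len(values),
        "type_mismatches": sum(not isinstance(v, expected_type) for v in present),
        "cardinality": len(set(present)),
    }


def evaluate(preds, actuals, baseline_preds, production_preds) -> List[str]:
    """Return alert messages when accuracy drops or prediction drift is too high."""
    alerts = []
    acc = accuracy(preds, actuals)
    if acc < ACCURACY_MIN:
        alerts.append(f"accuracy {acc:.2f} is below {ACCURACY_MIN:.2f}")
    drift = psi(baseline_preds, production_preds)
    if drift > PSI_MAX:
        alerts.append(f"prediction drift PSI {drift:.3f} exceeds {PSI_MAX}")
    return alerts


if __name__ == "__main__":
    # Baseline predictions are split 50/50; production has shifted to 90/10.
    baseline = ["a"] * 50 + ["b"] * 50
    production = ["a"] * 90 + ["b"] * 10
    print(evaluate(["a", "b", "a", "a"], ["a", "b", "b", "b"], baseline, production))
    print(data_quality([1, None, 2, "x", ""], int))
```

In a production setting, checks like these would run on a daily or hourly schedule against logged features, predictions, and actuals; the sketch takes in-memory lists only to keep the logic visible. Comparing production against a fixed baseline (for example, training data) is what lets a drift monitor flag a shift before delayed actuals reveal a drop in accuracy.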
