Arize AX for ML Observability

The unified platform to help machine learning engineering teams monitor, debug, and improve ML model performance in production.

Complete Visibility into ML Model Performance

Automatically surface data drift and model issues, then trace each problem back to its source

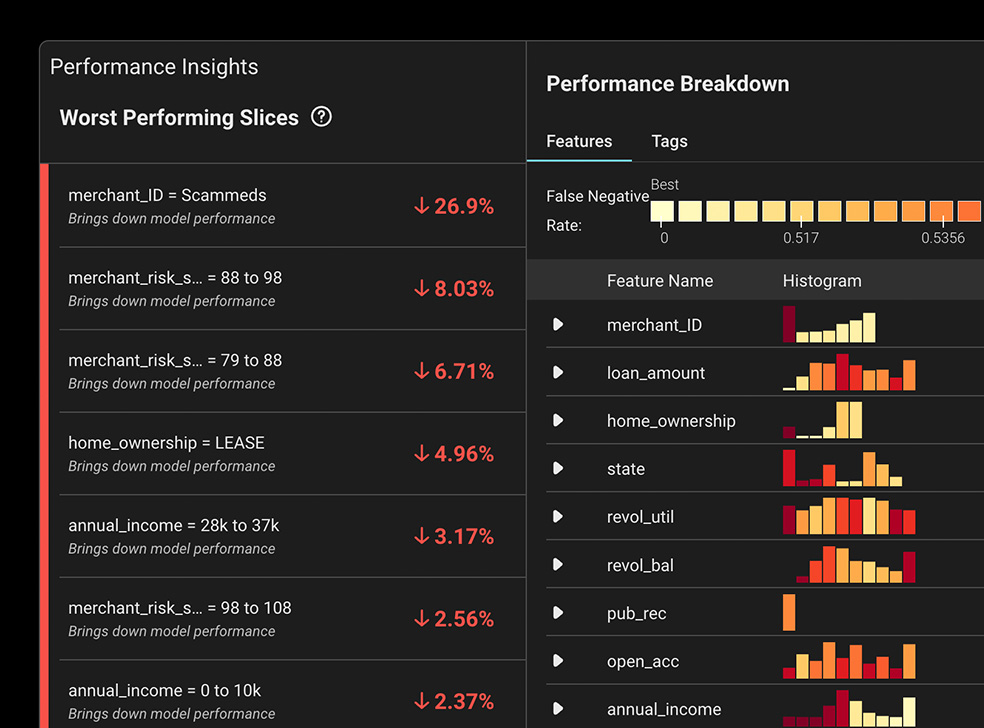

Performance Tracing

Instantly surface the worst-performing slices of predictions with heatmaps that pinpoint problematic model features and values.
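Here is a minimal, platform-agnostic Python sketch of what a "worst-performing slice" is. It is not Arize's implementation or API. The record schema (dicts with `prediction` and `actual` keys) and the `worst_slices` helper are hypothetical, and accuracy stands in for whatever metric you actually track. The sketch groups predictions by the values of one feature and ranks those groups from lowest to highest accuracy. A heatmap view would, roughly speaking, repeat this across many features and values.

```python
from collections import defaultdict


def worst_slices(records, feature, k=3):
    """Rank the values of `feature` by accuracy, lowest first (hypothetical helper).

    records: list of dicts, each holding the feature value plus
    'prediction' and 'actual' keys (an assumed schema).
    """
    totals = defaultdict(int)   # number of predictions per feature value
    correct = defaultdict(int)  # number of correct predictions per feature value
    for r in records:
        value = r[feature]
        totals[value] += 1
        if r["prediction"] == r["actual"]:
            correct[value] += 1
    # Accuracy per slice; every key in `totals` has a count of at least 1.
    scores = {v: correct[v] / totals[v] for v in totals}
    # The lowest-accuracy slices come first.
    return sorted(scores.items(), key=lambda kv: kv[1])[:k]
```

For example, `worst_slices(records, "region")` would return `(value, accuracy)` pairs, with the region where the model is least accurate listed first.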

Explainability

Understand why a model produced each prediction, so you can optimize performance over time and mitigate potential model bias.

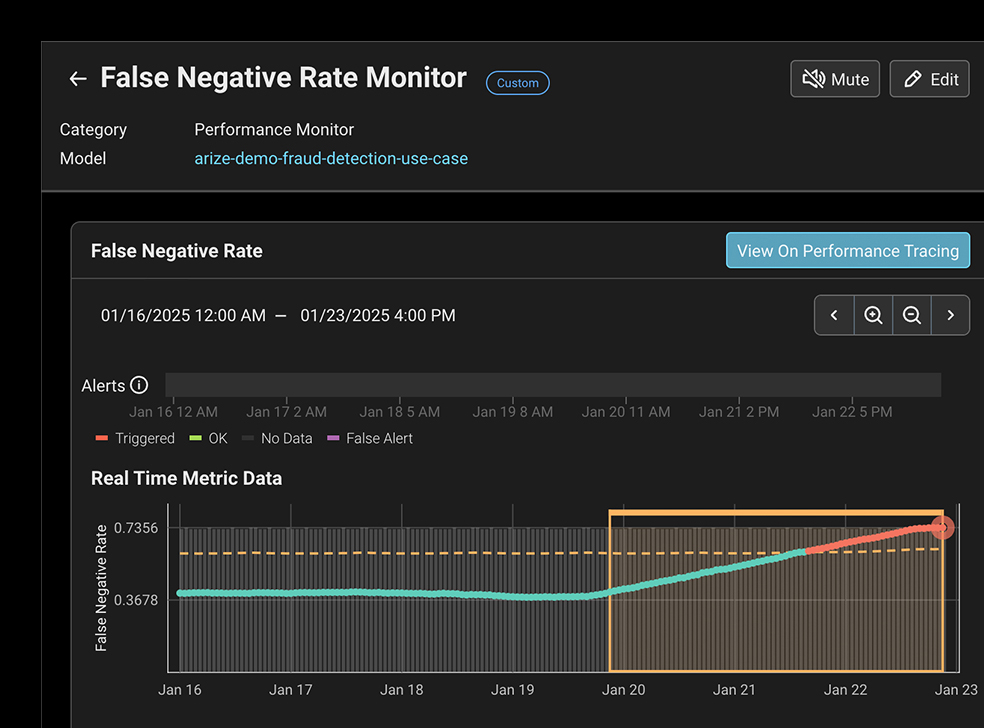

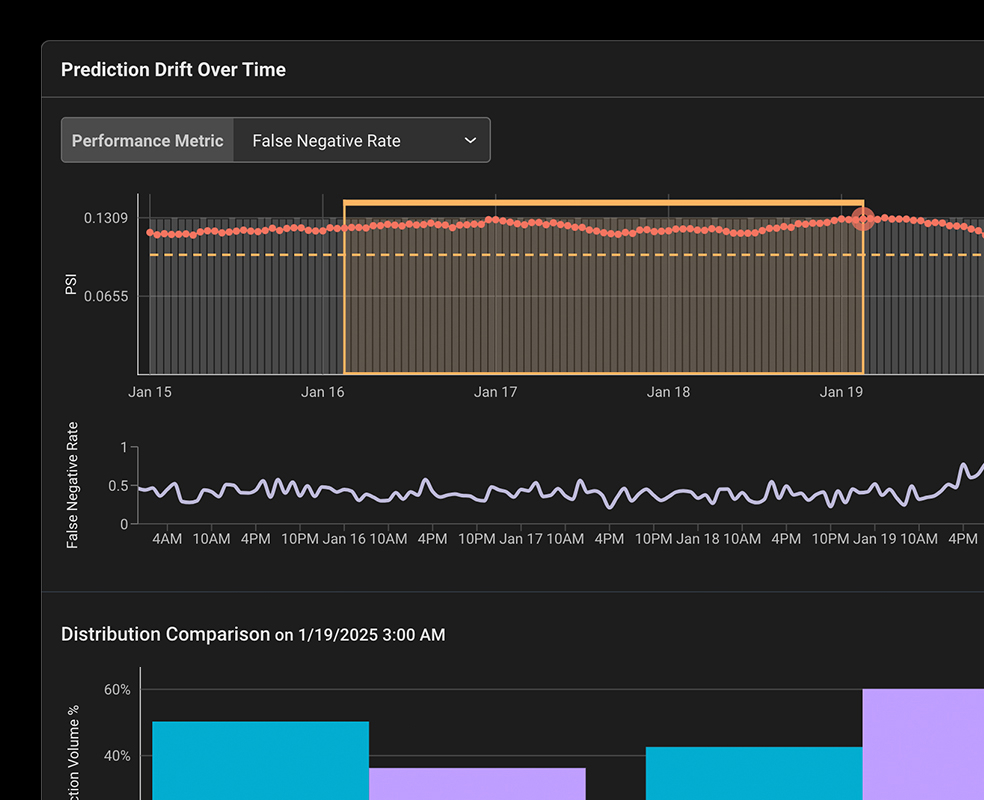

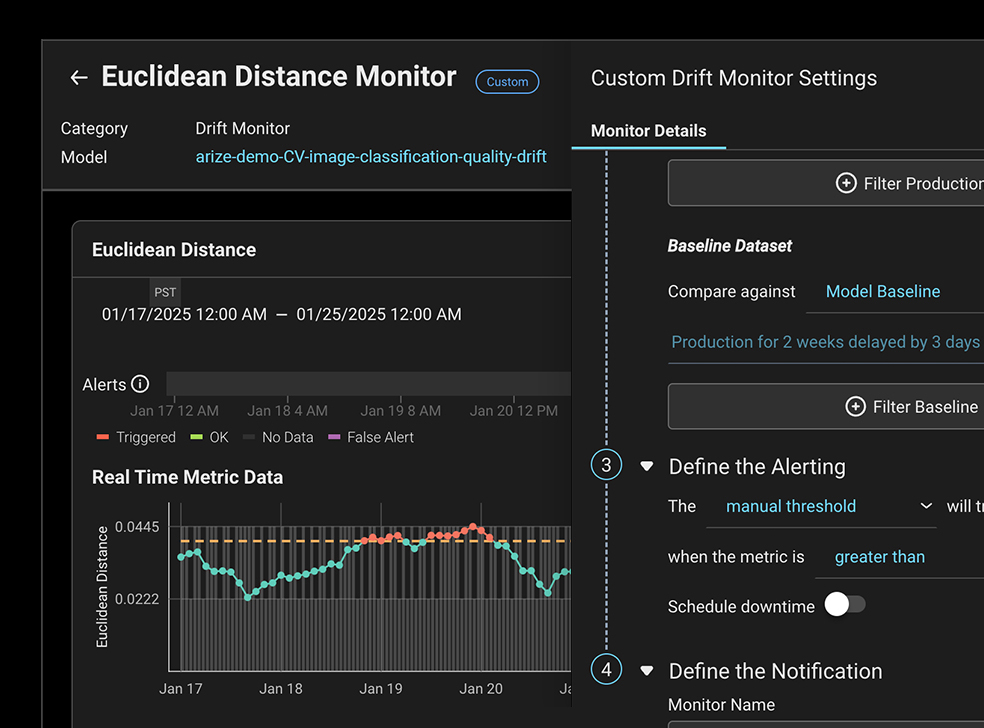

Dashboards & Monitors

Automated model monitoring and dynamic dashboards help you quickly kick off root cause analysis workflows.

Model & Feature Drift

Compare datasets across training, validation, and production environments to detect unexpected shifts in your model’s predictions or feature values.
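The Population Stability Index (PSI) is one common way to put a number on the shift between two environments. The sketch below is a generic illustration and does not describe a metric or API that Arize is confirmed to use. It assumes a categorical (or already-binned) feature. The `psi` function and its `eps` floor for empty bins are hypothetical choices. Each category contributes `(q - p) * ln(q / p)`, which is never negative, so identical distributions score 0 and larger values mean more drift.

```python
import math
from collections import Counter


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two samples of a categorical feature.

    expected: values from the baseline environment (e.g. training).
    actual:   values from the comparison environment (e.g. production).
    eps:      hypothetical floor so that empty categories do not cause log(0).
    """
    categories = set(expected) | set(actual)
    e_counts, a_counts = Counter(expected), Counter(actual)
    score = 0.0
    for c in categories:
        p = max(e_counts[c] / len(expected), eps)  # baseline share of category c
        q = max(a_counts[c] / len(actual), eps)    # comparison share of category c
        score += (q - p) * math.log(q / p)         # each term is >= 0
    return score
```

Calling `psi(training_values, production_values)` on the same feature from the two environments gives a single drift score for that feature. You could then alert when the score crosses a threshold you choose.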

Smart Data Workflows for Model Improvement

Find, analyze, and improve your ML data with AI-driven workflows and automation

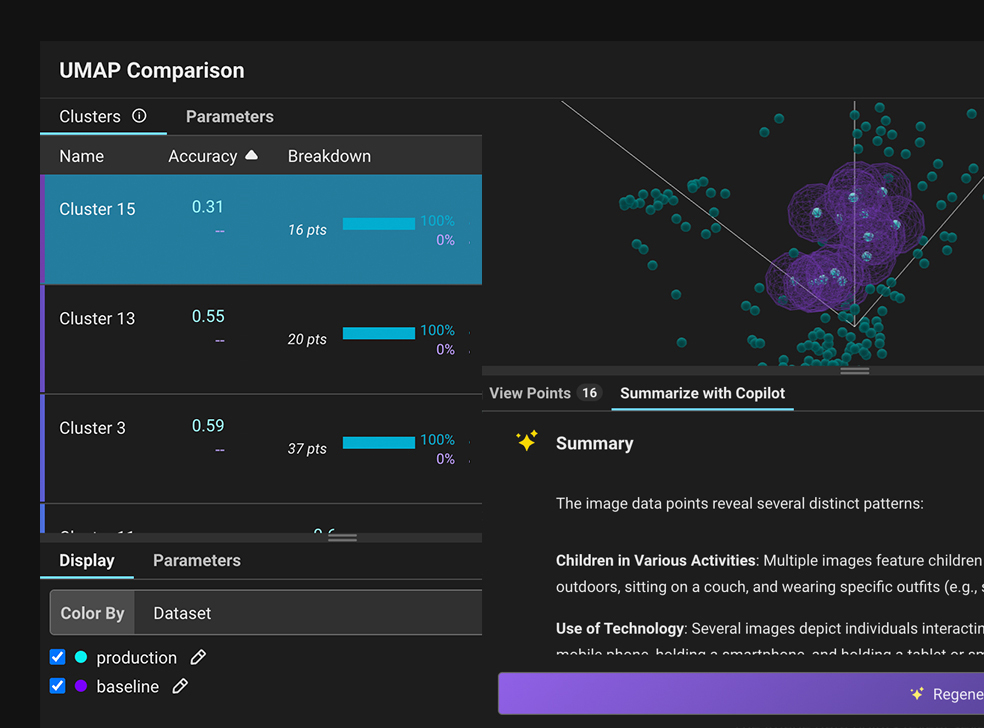

Cluster Search & Curate

AI-driven similarity search makes it easy to find and analyze clusters of data points that resemble a reference point of interest.
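At its core, similarity search over embeddings means ranking stored vectors by how close they are to a reference vector. The standard-library Python sketch below uses cosine similarity and a brute-force scan. Both are assumptions made for illustration, not a description of how Arize implements search. The `cosine` and `most_similar` helpers are hypothetical, and so is the `points` mapping from data-point IDs to embeddings.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def most_similar(reference, points, k=2):
    """Return the IDs of the k embeddings most similar to `reference`.

    points: dict mapping a data-point ID to its embedding (hypothetical layout).
    Brute force: scores every point, so it suits small examples, not large indexes.
    """
    ranked = sorted(points.items(), key=lambda item: cosine(reference, item[1]), reverse=True)
    return [pid for pid, _ in ranked[:k]]
```

A production system would more likely use an approximate nearest-neighbor index. The ranking idea stays the same.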

Embedding Monitoring

Monitor embedding drift for NLP, computer vision, and multivariate tabular model data.
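One simple signal for embedding drift is the Euclidean distance between the mean embedding (centroid) of a baseline dataset and the mean embedding of production data. The sketch below shows that idea using only the standard library. It is an illustrative assumption, not a confirmed account of Arize's metric. The `centroid` and `embedding_drift` helpers are hypothetical, and other measures (for example, cosine distance between centroids) are equally plausible.

```python
import math


def centroid(vectors):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def embedding_drift(baseline, production):
    """Euclidean distance between the centroids of two embedding sets (hypothetical metric)."""
    return math.dist(centroid(baseline), centroid(production))  # math.dist needs Python 3.8+
```

A value near 0 suggests that the two sets occupy the same region of embedding space. A growing value over time suggests that incoming data is moving away from the baseline.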

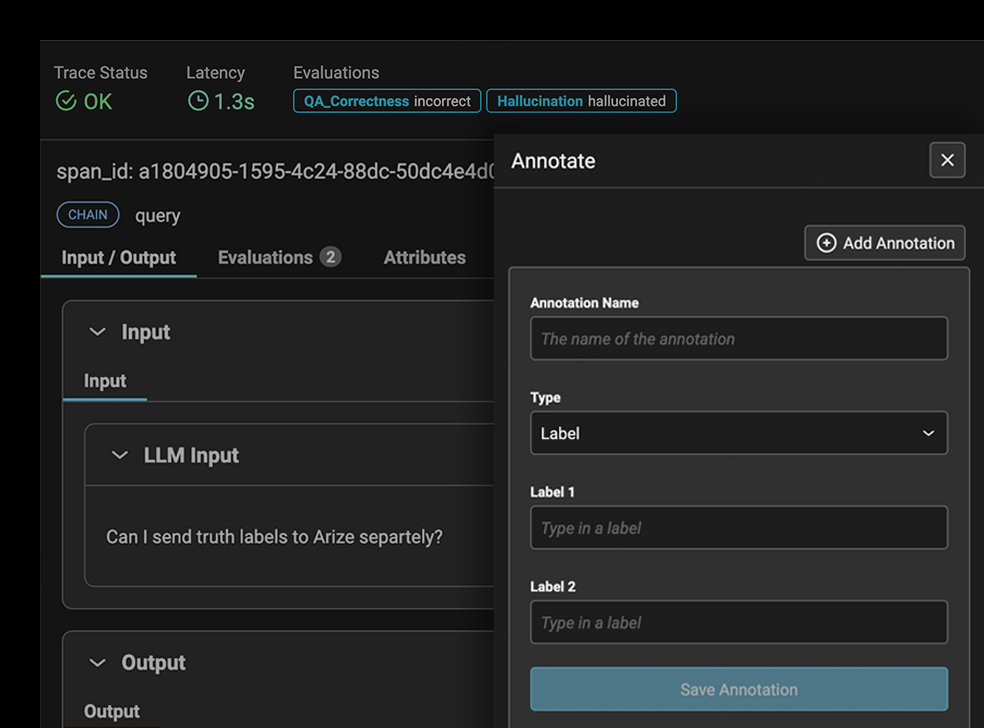

Annotate

Native support for augmenting your model data with human feedback, labels, metadata, and notes.

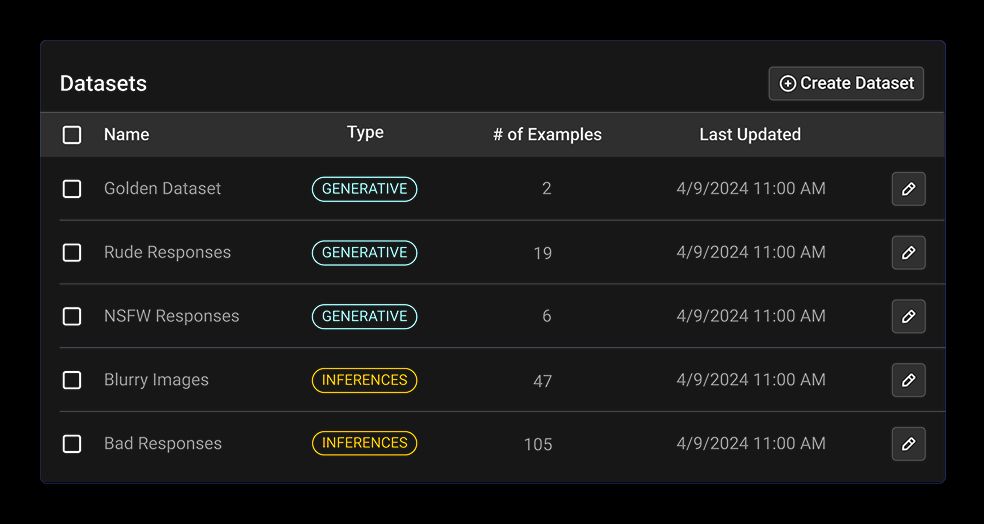

Build Datasets

Save data points of interest for experiment runs, A/B analysis, and relabeling or improvement workflows.