America First Credit Union (AFCU) is one of America’s largest independent credit unions, with 1.5 million members and more than $20 billion in deposits.

As America First Credit Union scaled AI across its business, a new challenge emerged: business stakeholders still needed to explain decisions, but the “why” increasingly lived inside complex statistical models.

To close that gap, the data science and engineering teams at America First Credit Union set out to build an internal GenAI app that translates model-driven outcomes into a plain-language, end-to-end narrative, so teams can move faster with fewer ad-hoc escalations.

Product Requirements

Shipping an internal “decision explainer” that people will actually use quickly runs into three constraints:

- End-to-end context, not a single model view – Stakeholders want to see the whole flow that produced the outcome, not just a single model’s output.

- Low-latency explanations – Responses in the interactive UX need to feel near-instant, even when a request fans out into multiple sub-explanations.

- Production-grade observability – The team needs visibility across microservices and parallel workers so it can debug, iterate, and eventually evaluate quality with confidence.

Behind the Build

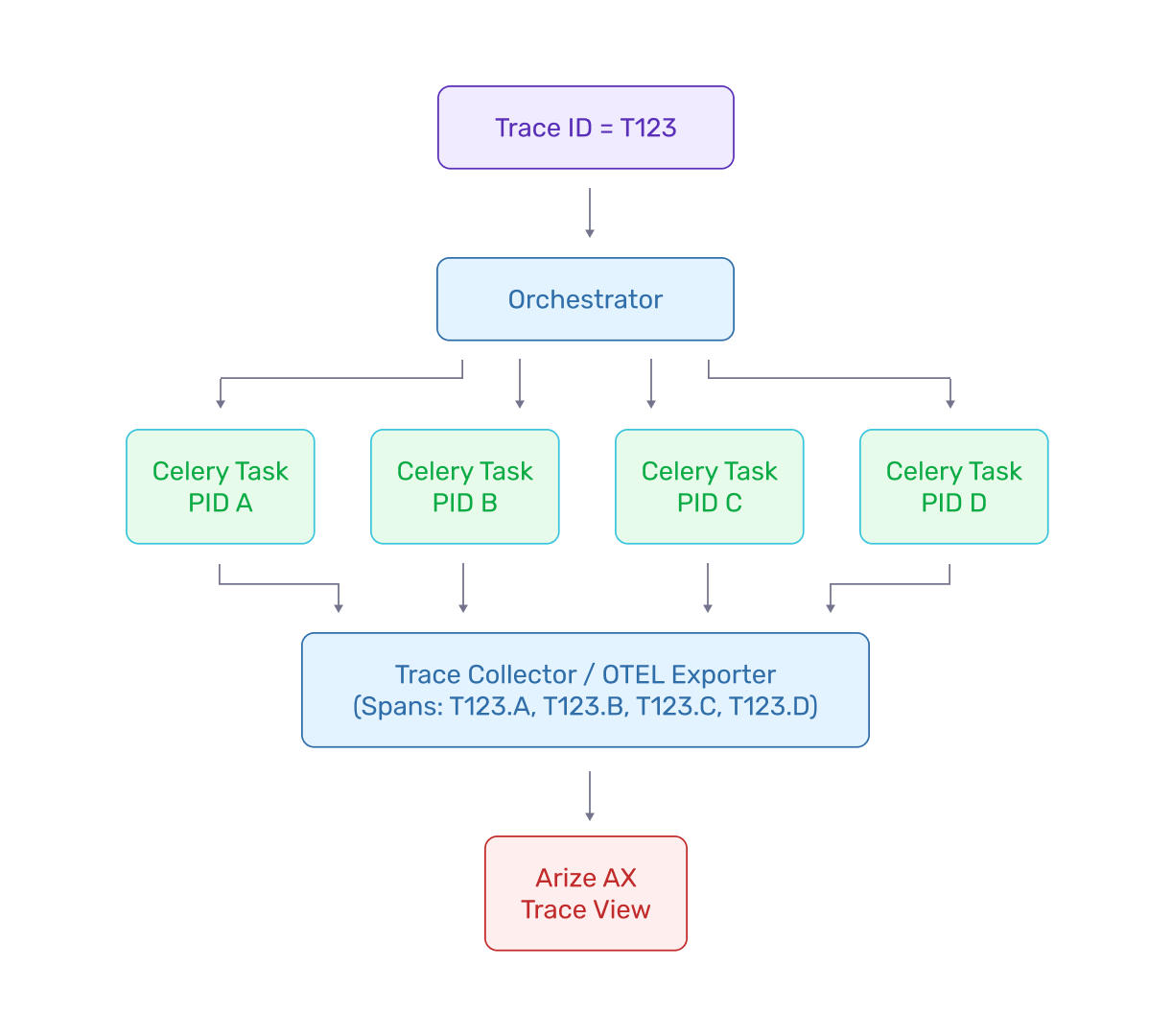

America First Credit Union parallelizes LLM work with Celery, spinning up short-lived worker processes to run tasks concurrently. Because an explanation often needs several LLM “sub-answers” (for example, different sections or different contributing signals), running them in parallel lets the team return answers fast enough to meet internal expectations. “Five or six seconds is very, very reasonable for an LLM but that’s far longer than what we want for a UX,” explains Samuel Romine, Machine Learning Engineer at America First Credit Union.
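The latency win comes from a fan-out/fan-in pattern: issue every sub-answer at once, then gather them, so total wall time tracks the slowest sub-answer instead of the sum of all of them. Celery itself needs a running broker and workers (its comparable primitive is a `group` of tasks), so the minimal sketch below swaps in Python’s standard-library `concurrent.futures` to show the same idea. `explain_section` and `explain_decision` are hypothetical names, and the `time.sleep` call is an assumed stand-in for model latency, not AFCU’s actual code.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def explain_section(section: str, delay_s: float = 0.2) -> str:
    """Hypothetical stand-in for one LLM call that produces a sub-answer."""
    time.sleep(delay_s)  # simulate model latency; a real app would call a model API
    return f"{section}: explanation"


def explain_decision(sections: list, delay_s: float = 0.2) -> dict:
    """Fan out one sub-answer per section, then gather them in input order."""
    # Threads suit this workload because each call spends its time waiting on I/O.
    with ThreadPoolExecutor(max_workers=max(1, len(sections))) as pool:
        answers = pool.map(lambda s: explain_section(s, delay_s), sections)
        return dict(zip(sections, answers))


if __name__ == "__main__":
    sections = ["credit history", "income", "debt-to-income", "collateral"]
    start = time.perf_counter()
    result = explain_decision(sections)
    elapsed = time.perf_counter() - start
    print(result)
    # Sequential calls would take roughly len(sections) * 0.2 seconds.
    print(f"{len(sections)} sub-answers in {elapsed:.2f}s")
```

With four simulated 0.2-second calls, the parallel version finishes in roughly 0.2 seconds rather than 0.8. A distributed queue such as Celery adds what a thread pool cannot, such as spreading work across processes and machines, but the latency arithmetic is the same.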

“This provides a way for business users to come in and interrogate a decision, just like they would pop into somebody’s office.” — Austin Facer, Senior Data Scientist, America First Credit Union

Tracing & Observability

America First Credit Union’s explainer is designed to scale across internal use cases, and from the start it has been instrumented so the team can track performance and behavior through tracing in Arize AX.

“Everything’s integrated through tracing using Arize AX, we can keep track of performance. We can just plug and play with other projects – since Arize integrates so well with OpenTelemetry, even when I need something super custom I’m able to plug it in.” — Samuel Romine, America First Credit Union

In this workflow, Arize’s value lies in turning LLM workloads spread across a distributed, parallelized application into one debuggable narrative, so when something breaks or quality regresses, AFCU can trace the problem to any step of the application.
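What lets parallel workers read as one narrative is that every worker’s span carries the same trace ID. OpenTelemetry carries that ID across process boundaries by default using the W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`). The stdlib-only sketch below illustrates the idea; `Span`, `new_root_span`, `to_traceparent`, and `child_from_traceparent` are hypothetical helpers, not the OpenTelemetry SDK, and the sketch assumes the header travels with each task (for example, as a task argument or message header).

```python
import secrets
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    name: str
    trace_id: str             # 32 hex chars, shared by every span in one request
    span_id: str              # 16 hex chars, unique per span
    parent_id: Optional[str]  # span_id of the parent, None for the root


def new_root_span(name: str) -> Span:
    """Start a new trace, e.g. when a user asks 'why' about one decision."""
    return Span(name, secrets.token_hex(16), secrets.token_hex(8), None)


def to_traceparent(span: Span) -> str:
    """Serialize context in W3C traceparent form; flags '01' mean 'sampled'."""
    return f"00-{span.trace_id}-{span.span_id}-01"


def child_from_traceparent(name: str, header: str) -> Span:
    """Inside a worker: continue the caller's trace with a fresh child span."""
    _version, trace_id, parent_id, _flags = header.split("-")
    if len(trace_id) != 32 or len(parent_id) != 16:
        raise ValueError(f"malformed traceparent: {header!r}")
    return Span(name, trace_id, secrets.token_hex(8), parent_id)


if __name__ == "__main__":
    root = new_root_span("explain_decision")
    header = to_traceparent(root)  # would be sent along with each parallel task
    children = [child_from_traceparent(f"llm:{s}", header) for s in ["income", "collateral"]]
    for child in children:
        print(child)
```

In a real deployment, OpenTelemetry’s propagation API injects and extracts this header automatically. The point of the sketch is only that each sub-answer’s span shares the root’s `trace_id` and points back at its `span_id`, which is what allows a tracing backend to assemble concurrent tasks into a single tree.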

Results

Early signals from the internal rollout show the explainer is improving both business usability and engineering velocity:

- Faster “why” answers for business users: underwriters get a plain-English narrative without hunting through scattered decision details.

- Higher usefulness after a product shift: users wanted the full end-to-end decision process explained, not just the model, and the explainer now covers that whole flow.

- Debuggable at scale: parallel LLM work stays observable through end-to-end tracing across concurrent tasks and containers.

- Clear economic rationale: it follows the same “reduce ad-hoc effort and accelerate iteration” logic behind an earlier observability investment that returned more than 500% ROI in its first year.
