Agentic AI

What is Agentic AI?

Agentic AI is an AI system built with the capacity to autonomously make decisions, take actions, and maintain memory across multiple steps in a task. These agents are more than just text-based chatbots responding to prompts — they are constructed with components like routers, skills, and memory that simulate a layered cognitive architecture. A router acts like a “boss,” making decisions about which skills to call based on user input. Skills are the logical units of action, akin to looking up a product or executing a database query. Memory ensures continuity across multi-turn interactions. This structured approach distinguishes agentic AI from simpler LLM applications that operate in single-turn, stateless interactions.

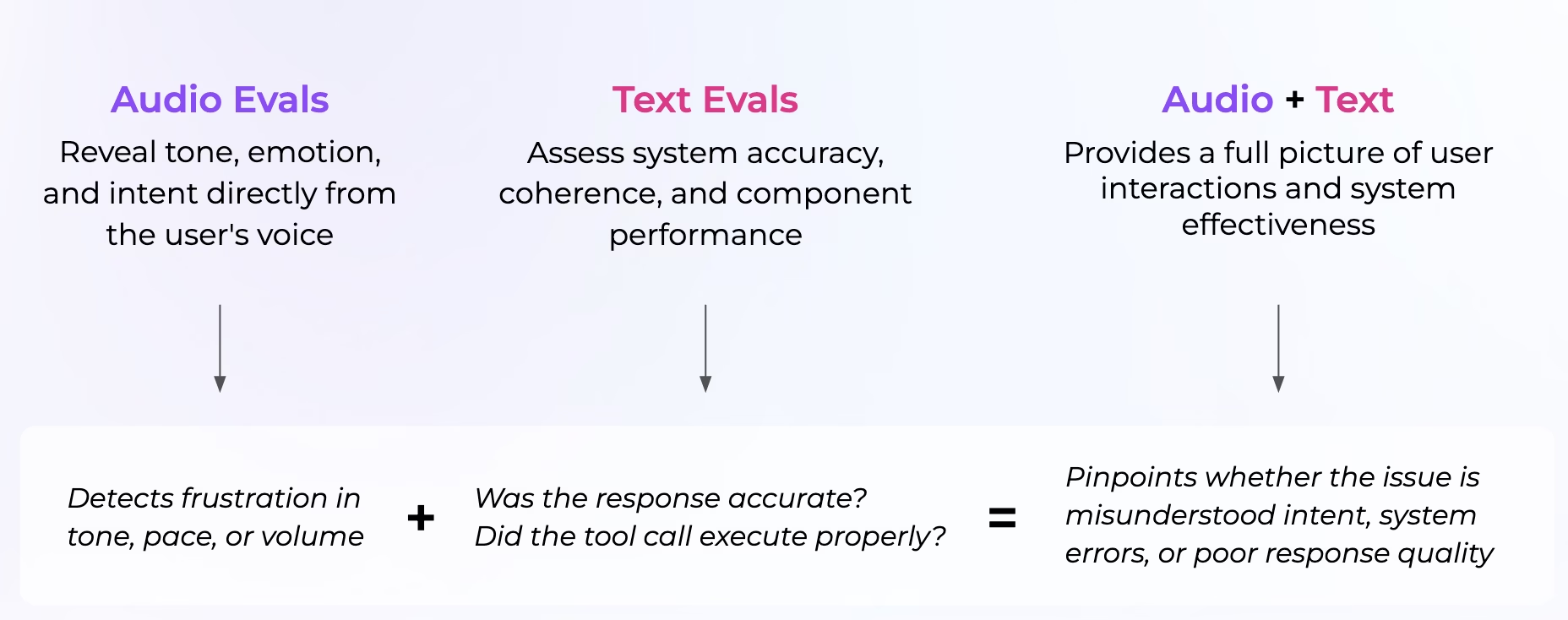

Moving Beyond Chatbots: Multimodal and Voice-Based Agents

Agentic AI is quickly expanding into voice and multimodal interfaces. Voice AI is already transforming industries like customer service—over a billion call center conversations today are powered by real-time voice APIs. A compelling example is the Priceline Pennybot, which allows users to book an entire vacation hands-free. Evaluating these new agents requires going beyond textual responses to consider speech transcription, audio quality, user sentiment, and conversational flow. This expansion into new modalities increases both the complexity and the need for tailored evaluation frameworks.

Agentic AI Evaluation: Why It’s Not One-Size-Fits-All

Each part of an agent — router, skill, tool, memory, and more —presents unique failure points and evaluation challenges. Routers must be evaluated not only for choosing the correct skill but for passing the correct parameters (e.g., selecting leggings in the right size and price range). Skills can be assessed using LLM-as-a-judge or code-based evaluations for tasks like RAG (retrieval-augmented generation), focusing on the relevance and correctness of answers. The path the agent takes through these steps also matters; “convergence” refers to whether it consistently completes tasks in a reasonable number of steps. Different model backends (like OpenAI vs. Anthropic) can take wildly different paths for the same task, which introduces yet another layer of complexity.

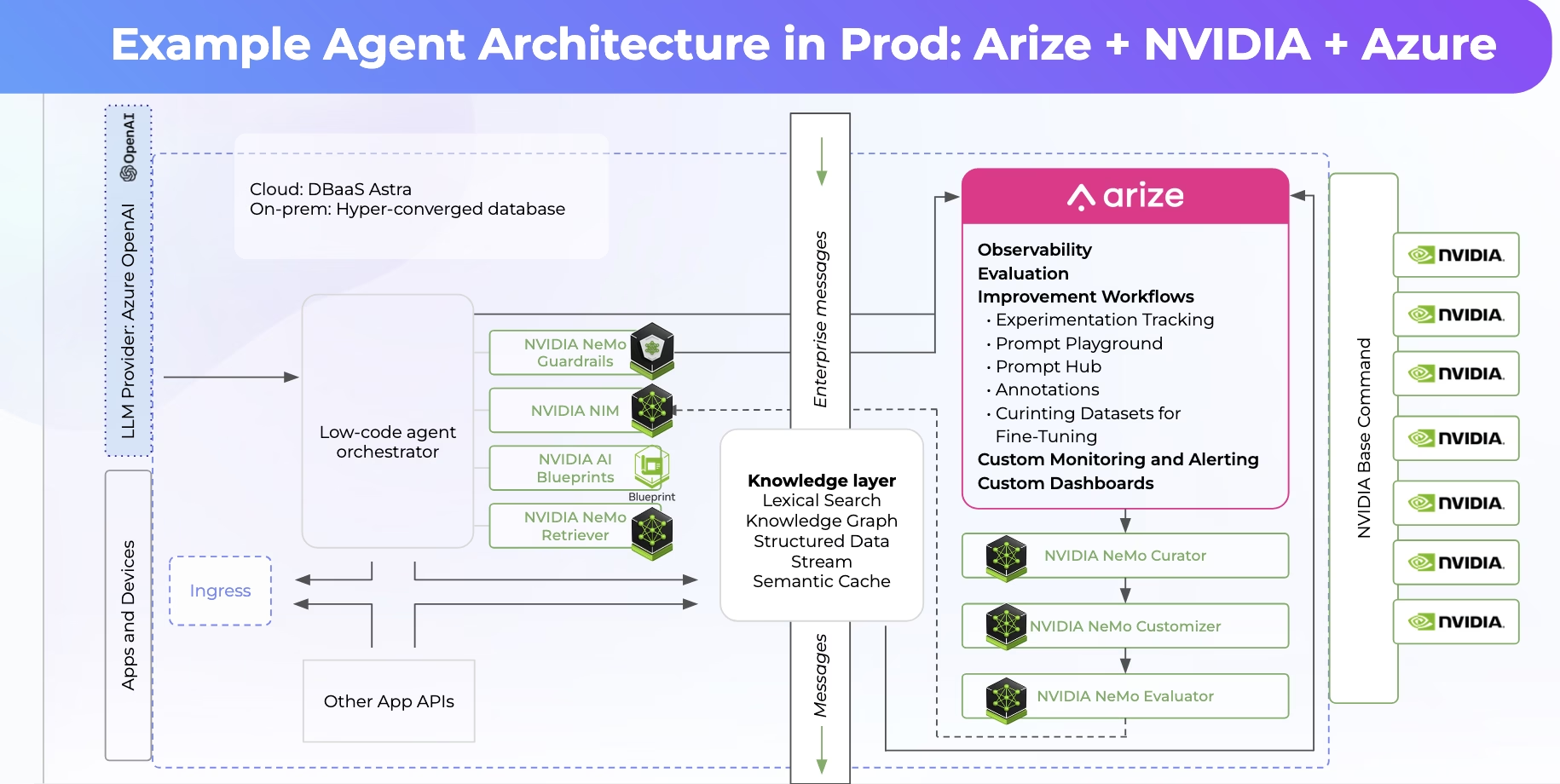

Agentic AI Observability: Tracing Under the Hood

Understanding what an agent did and why it did it is core to observability. “Traces” — logs that show each router decision, skill invocation, and memory update — are invaluable for debugging and performance optimization. They let teams pinpoint where an agent failed: Did it pick the wrong skill? Did it send the wrong data? Did it lose track of conversation state? Observability in agentic AI requires tooling that offers deep visibility into the decision-making flow, not just the final output.

Agent Evals Everywhere: A New Mindset for Reliability

Evaluations shouldn’t be bolted on—they should be woven throughout the system. Internal agents with robust observability have evaluations at each stage: Was the search query interpreted correctly? Did the router make the right call? Did the skill execute correctly? This holistic approach allows for pinpointing failures and continuously improving the system. As agentic AI becomes more complex and embedded in real-world workflows, rigorous, layered agent evaluation and observability will be essential for maintaining trust, performance, and safety.