AI Memory

A new class of infrastructure is forming to power AI memory across the gen-AI value chain. If your app needs to recall, personalize, or adapt across time and not just react, memory layers are becoming essential.

What Is AI Memory?

AI memory is the emerging infrastructure that enables apps and agents to recall, personalize, and adapt across time rather than just react.

AI memory marks a shift from focusing solely on expanding context windows to building systems that remember and persist knowledge, preferences, and interactions beyond a single session.

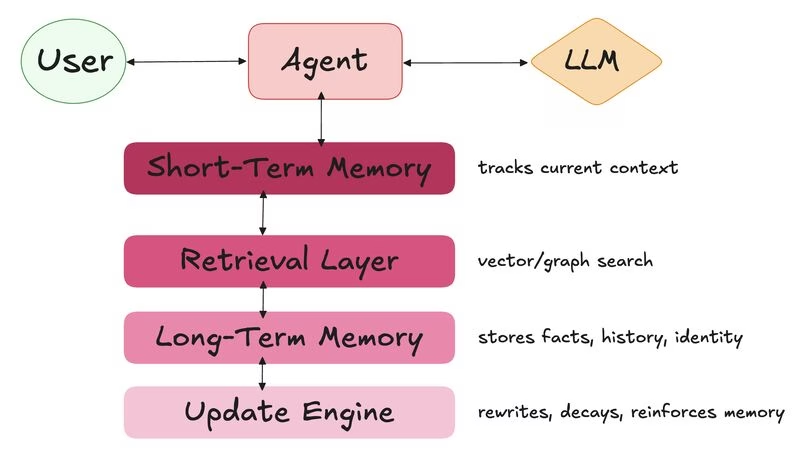

Memory systems power this by operating as loops—not pipelines—where agents dynamically retrieve, reflect, and revise their state in response to evolving context.
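
To make the loop-versus-pipeline distinction concrete, here is a minimal Python sketch. Every name in it (`AgentState`, `retrieve`, `reflect`, `revise`, `run_loop`) is hypothetical, and naive keyword overlap stands in for real retrieval. The only point it makes is that each turn's output is written back into the state the next turn reads.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentState:
    notes: list[str] = field(default_factory=list)  # hypothetical: remembered notes


def retrieve(state: AgentState, query: str) -> list[str]:
    # Keyword overlap stands in for vector or graph retrieval (assumption).
    words = set(query.lower().split())
    return [n for n in state.notes if words & set(n.lower().split())]


def reflect(observation: str, recalled: list[str]) -> Optional[str]:
    # Toy reflection: only keep observations that are not already remembered.
    return None if observation in recalled else observation


def revise(state: AgentState, new_note: Optional[str]) -> None:
    if new_note is not None:
        state.notes.append(new_note)


def run_loop(observations: list[str]) -> AgentState:
    state = AgentState()
    for obs in observations:
        recalled = retrieve(state, obs)    # 1. retrieve from the current state
        new_note = reflect(obs, recalled)  # 2. reflect on what was recalled
        revise(state, new_note)            # 3. revise; the next turn reads the updated state
    return state
```

Running `run_loop(["user prefers tea", "user prefers tea", "user lives in Oslo"])` leaves two notes: on the second turn the repeated observation is recalled, so it is not stored again.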

What Are the Core Components of AI Memory Systems?

AI memory systems have four core components:

- 🧠 Short-Term Memory (STM): Tracks recent interactions—critical for coherence and multi-step reasoning

- 📚 Long-Term Memory (LTM): Persists across sessions. Stores facts, preferences, identity—like a personal knowledge base

- 🔍 Retrieval: Finds relevant info via vector search or knowledge graphs

- ♻️ Updating: Rewrites or reinforces memory as new signals come in
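
The four components can be wired together in a few dozen lines. This is a toy sketch under stated assumptions: `MemorySystem`, `MemoryEntry`, `embed`, and `cosine` are hypothetical names, a word-count vector replaces a real embedding model, and a Python dict replaces a vector database or knowledge graph. The short-term buffer keeps only the last three turns, and the long-term store is keyed so that an update can either reinforce or rewrite an entry.

```python
import math
from collections import Counter, deque
from dataclasses import dataclass, field


def embed(text: str) -> Counter:
    # Bag-of-words counts stand in for a real embedding model (assumption).
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class MemoryEntry:
    text: str
    strength: int = 1  # incremented each time the same fact is seen again


@dataclass
class MemorySystem:
    stm: deque = field(default_factory=lambda: deque(maxlen=3))  # short-term: last 3 turns
    ltm: dict = field(default_factory=dict)                      # long-term: key -> MemoryEntry

    def observe(self, turn: str) -> None:
        self.stm.append(turn)  # the oldest turn drops off once maxlen is reached

    def update(self, key: str, text: str) -> None:
        entry = self.ltm.get(key)
        if entry is None:
            self.ltm[key] = MemoryEntry(text)   # new fact
        elif entry.text == text:
            entry.strength += 1                 # reinforce a repeated fact
        else:
            self.ltm[key] = MemoryEntry(text)   # rewrite a changed fact

    def retrieve(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.ltm.values(), key=lambda e: cosine(q, embed(e.text)), reverse=True)
        return [e.text for e in ranked[:k]]
```

Keying long-term entries by a slot such as `"city"` is the design choice that lets an update overwrite a stale fact instead of accumulating contradictory ones.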

AI Memory Layer Platforms

There are two breakout memory layer leaders today: Mem0 and Zep.

While they share the same goal (persistent, queryable memory for LLMs), they differ significantly in design and philosophy.

| Platform | Design | Reported Results | Tradeoffs |
|---|---|---|---|
| Mem0 | Composable platform: hybrid architecture (vector + graph + KV), adaptive memory updates, and multi-level recall | +26% accuracy over OpenAI memory and 91% faster response time | Leans toward agent builders who want control |
| Zep | Scalable backend: temporal knowledge graphs, structured session memory, and drop-in integration with LangChain, LangGraph, etc. | +18.5% accuracy gain (LongMemEval) and 90% latency reduction | Built for teams shipping LLM features at scale |
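
The idea behind a temporal knowledge graph can be illustrated with facts stored as edges that carry a validity interval. The sketch below is a hypothetical toy (`TemporalGraph`, `Edge`, `assert_fact`, and `query` are not Zep's API or data model): when a fact changes, the old edge is closed out rather than deleted, so the graph can answer both "what is true now" and "what was true then". It assumes each relation holds a single current value per subject.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Edge:
    subject: str
    relation: str
    obj: str
    valid_from: date
    valid_to: Optional[date] = None  # None means the fact is still current


class TemporalGraph:
    def __init__(self) -> None:
        self.edges: list[Edge] = []

    def assert_fact(self, subject: str, relation: str, obj: str, when: date) -> None:
        # Close out the current edge for this (subject, relation) instead of deleting it,
        # so historical queries still work. Assumes one current value per relation.
        for e in self.edges:
            if e.subject == subject and e.relation == relation and e.valid_to is None:
                e.valid_to = when
        self.edges.append(Edge(subject, relation, obj, when))

    def query(self, subject: str, relation: str, at: date) -> Optional[str]:
        # Return the value whose validity interval [valid_from, valid_to) contains `at`.
        for e in self.edges:
            if (e.subject == subject and e.relation == relation
                    and e.valid_from <= at and (e.valid_to is None or at < e.valid_to)):
                return e.obj
        return None
```

After asserting that `alice` works at `Acme` from 2022 and at `Globex` from mid-2024, a query dated 2023 returns `"Acme"` and one dated 2025 returns `"Globex"`.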

Full List of AI Memory Tools: An Emerging Ecosystem

Beyond Mem0 and Zep, a wave of new memory systems is pushing in different directions:

Memory Platforms at a Glance

| System | Architecture | Strengths | Use Case Fit |
|---|---|---|---|
| Mem0 | Vector + Graph + KV Store | Adaptive updates, control | Agent Builders |
| Zep | Temporal Knowledge Graph | Latency, plug-and-play | Production LLM pipelines |
| LangMem | Summarization | Minimizes context size via summarization and selective recall | Suited for constrained LLM calls (e.g., support, assistants) |
| Memoripy | Clustering + Decay | Lightweight, local-first memory | Great for fast, minimal agents |
| Memary | Knowledge Graph | Knowledge graph–centric, with a roadmap toward cross-agent memory | Aiming at reasoning-heavy, persistent systems |
| Cognee | Pipelines + Graphs | Explores memory pipelines and structured grounding | For RAG-heavy workflows |
| Letta | Local Server | Open-source memory server for vLLM/Ollama | Strong option for local LLM stacks |
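
Decay, listed above for Memoripy, down-weights older memories at recall time. One common way to do this is exponential decay with a half-life, so that a memory's score is its relevance multiplied by 0.5^(age / half_life). The sketch below assumes that formula and uses Jaccard word overlap as the relevance signal. `Memory`, `jaccard`, `decayed_score`, and `recall` are hypothetical names; this is not Memoripy's implementation, and clustering is omitted entirely.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    created_at: float  # timestamp in seconds on any consistent clock


def jaccard(a: str, b: str) -> float:
    # Word-overlap similarity; a stand-in for embedding similarity (assumption).
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def decayed_score(query: str, mem: Memory, now: float, half_life: float) -> float:
    # Relevance is halved for every `half_life` seconds of age.
    age = max(0.0, now - mem.created_at)
    return jaccard(query, mem.text) * 0.5 ** (age / half_life)


def recall(query: str, memories: list[Memory], now: float,
           half_life: float = 3600.0, k: int = 1) -> list[str]:
    ranked = sorted(memories, key=lambda m: decayed_score(query, m, now, half_life), reverse=True)
    return [m.text for m in ranked[:k]]
```

With a one-hour half-life, two equally relevant memories written two hours apart differ by a factor of four in score, so the fresher one wins recall.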

What Memory Is Embedded Inside Agent Frameworks?

Some memory also lives inside agent frameworks themselves. It is often overlooked, but it can be critical for coordination.

| Framework | Memory Design | Strengths and Limitations |
|---|---|---|
| LangChain | Buffer, summary, and vector memory modules | Easy to use, but session-bound and single-agent |
| AutoGen | Tracks multi-agent dialogue, tool calls, and feedback | Structured, but tightly scoped to task runs |
| CrewAI | Agents read/write shared memory | Enables coordination, but lacks depth and long-term persistence |
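
The shared-memory pattern in the CrewAI row (agents reading and writing a common store) can be sketched as an in-process blackboard. `SharedMemory` and `Agent` are hypothetical classes, not CrewAI's API. The sketch also shows the limitation the table names: everything lives in a Python list and disappears when the process exits.

```python
from typing import Optional


class SharedMemory:
    """Hypothetical in-process blackboard that several agents read and write."""

    def __init__(self) -> None:
        self._entries: list[tuple[str, str]] = []  # (author agent, note); lost on exit

    def write(self, agent: str, note: str) -> None:
        self._entries.append((agent, note))

    def read(self, exclude: Optional[str] = None) -> list[str]:
        # Return all notes, optionally skipping those written by `exclude`.
        return [note for author, note in self._entries if author != exclude]


class Agent:
    def __init__(self, name: str, memory: SharedMemory) -> None:
        self.name = name
        self.memory = memory

    def act(self, finding: str) -> list[str]:
        # Read what other agents posted, then publish this agent's own finding.
        context = self.memory.read(exclude=self.name)
        self.memory.write(self.name, finding)
        return context
```

A `writer` agent acting after a `researcher` sees the researcher's note as context, which is the coordination benefit; nothing persists beyond the run, which is the limitation.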

Why Do You Need a Standalone Memory Layer?

Framework-embedded memory works well for tactical needs such as reasoning chains, short-term context, and inter-agent sync. These frameworks are not built for strategic memory: persistence, semantic recall, and knowledge accumulation over time. That is where a standalone memory layer is needed.
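
The core difference is that a standalone layer's memory outlives any single run. As a minimal sketch under that assumption, the hypothetical `PersistentMemory` class below stores per-user facts in a SQLite file using Python's standard `sqlite3` module, so a second "session" (a new connection) sees what the first one wrote. Real memory layers add semantic retrieval and update policies on top; this covers only the persistence part.

```python
import sqlite3


class PersistentMemory:
    """Hypothetical standalone memory layer backed by a SQLite file."""

    def __init__(self, path: str) -> None:
        self.conn = sqlite3.connect(path)
        with self.conn:  # commit the schema creation
            self.conn.execute(
                "CREATE TABLE IF NOT EXISTS memory ("
                " user_id TEXT, key TEXT, value TEXT,"
                " PRIMARY KEY (user_id, key))"
            )

    def remember(self, user_id: str, key: str, value: str) -> None:
        # INSERT OR REPLACE: a new value for the same (user_id, key) overwrites the old one.
        with self.conn:
            self.conn.execute(
                "INSERT OR REPLACE INTO memory (user_id, key, value) VALUES (?, ?, ?)",
                (user_id, key, value),
            )

    def recall(self, user_id: str) -> dict[str, str]:
        rows = self.conn.execute(
            "SELECT key, value FROM memory WHERE user_id = ? ORDER BY key", (user_id,)
        )
        return dict(rows.fetchall())

    def close(self) -> None:
        self.conn.close()
```

Because state lives in a file rather than in process memory, closing one instance and opening another on the same path picks up where the previous session left off.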