LLM as a Judge Evaluation

Traditionally, evaluating LLM and generative AI systems has been slow, expensive, and subjective. Human annotation is costly and hard to scale, and metrics like BLEU or ROUGE don’t capture nuance. LLM-as-a-Judge uses large language models themselves to evaluate outputs from other models. Instead of relying solely on humans, LLM judges can assess quality, relevance, correctness, and more, often with surprising accuracy.

What Is LLM-as-a-Judge?

LLM-as-a-Judge refers to using large language models to evaluate the outputs of other models, and it is often essential in testing multi-agent systems and AI applications. Instead of relying solely on human annotations or traditional metrics, developers use powerful LLMs to assess responses for quality, accuracy, relevance, coherence, and more. This approach enables automated, scalable, and cost-effective evaluation, and in many cases LLM judges perform comparably to human reviewers.

Why Use LLMs As Judges?

LLMs can evaluate thousands of generations quickly and consistently, at a fraction of the cost of human evaluation.

They also show high agreement with human preferences when used correctly — in some studies, matching human-human agreement rates.

Online vs. Offline Evaluation

LLM-as-a-Judge can be used in two distinct evaluation settings:

- Offline Evaluation is ideal for experimentation, benchmarking, and model comparisons. It happens after inference and supports complex, detailed analyses with minimal latency constraints.

- Online Evaluation is used in real-time or production environments — like dashboards or live customer interactions. These setups require LLMs to judge quickly and consistently, often with stricter limits on latency, compute, and reliability.

Understanding this distinction helps teams choose the right prompting strategy, model, and evaluation architecture for their use case.
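One way to respect an online latency limit is to give each judge call a time budget and record a fallback when the budget is exceeded. The sketch below is illustrative only: `judge_with_budget`, the `fallback` value, and the two stub judges are hypothetical names, and a real judge would call an LLM API instead of sleeping.

```python
import concurrent.futures
import time


def judge_with_budget(judge, prompt, timeout_s, fallback="unscored"):
    """Run judge(prompt) in a worker thread; return fallback if it exceeds timeout_s."""
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(judge, prompt)
    try:
        return future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        # The worker keeps running in the background; we only stop waiting for it.
        return fallback
    finally:
        pool.shutdown(wait=False)


def fast_judge(prompt):
    # Hypothetical stand-in for a quick model call.
    return "relevant"


def slow_judge(prompt):
    time.sleep(0.3)  # hypothetical stand-in for a slow model call
    return "relevant"


online_fast = judge_with_budget(fast_judge, "Is this answer relevant?", timeout_s=1.0)
online_slow = judge_with_budget(slow_judge, "Is this answer relevant?", timeout_s=0.05)
print(online_fast, online_slow)
```

An offline run can usually skip the budget and score every record, since nothing is waiting on the result.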

Real-World Applications

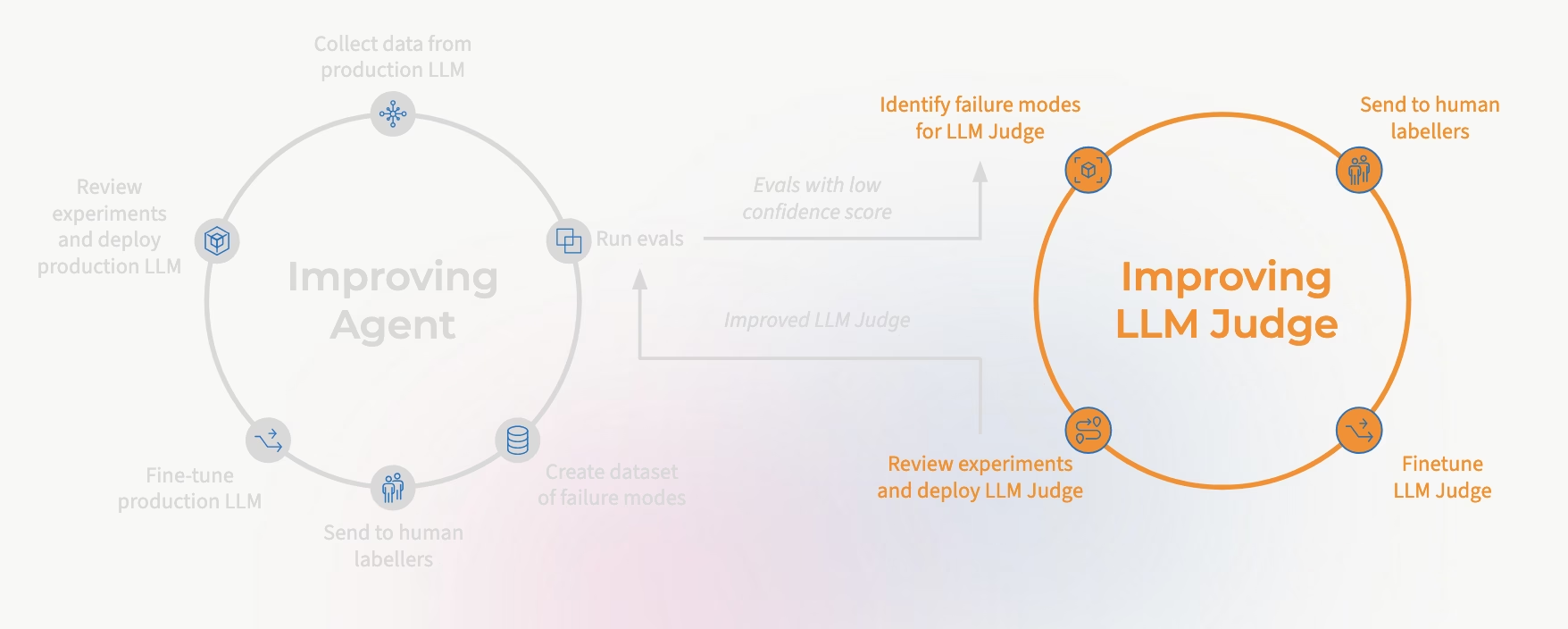

Today, teams use LLM-as-a-Judge techniques to evaluate everything from summarization and chatbots to retrieval, RAG pipelines, and agentic systems. These evaluations power dashboards, track performance regressions, guide fine-tuning, and inform go-to-market decisions.

Some examples of what this looks like in practice: evaluating chatbot answers against ground truth; tracking hallucination rates across model versions; scoring retrieved document relevance in RAG pipelines; assessing output toxicity or bias; and diagnosing failures in agentic tool use and planning.
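As a small illustration of tracking hallucination rates across model versions, the sketch below aggregates judge labels per version. The record layout, the label strings, and the function name are assumptions made for this example, not part of any particular tool.

```python
from collections import defaultdict


def hallucination_rate_by_version(records):
    """records: iterable of (model_version, judge_label) pairs (hypothetical layout)."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for version, label in records:
        totals[version] += 1
        if label == "hallucinated":
            flagged[version] += 1
    # Share of outputs the judge flagged, per model version.
    return {version: flagged[version] / totals[version] for version in totals}


# Made-up judge labels for two model versions.
records = [
    ("v1", "hallucinated"), ("v1", "factual"), ("v1", "factual"), ("v1", "hallucinated"),
    ("v2", "factual"), ("v2", "factual"), ("v2", "factual"), ("v2", "hallucinated"),
]
rates = hallucination_rate_by_version(records)
print(rates)  # {'v1': 0.5, 'v2': 0.25}
```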

LLM as a Judge Evaluators

LLM-as-a-Judge is useful across many use cases, including evaluating hallucination, accuracy, relevancy, toxicity, and more. Here is a list of simple LLM-as-a-Judge eval templates built by Arize AI; they are tested against benchmark datasets and target precision and F-score above 70%.

| Eval & Link to Docs (Prompt + Code) | Description |
| --- | --- |
| Hallucinations | Checks if outputs contain unsupported or incorrect information. |
| Question Answering | Assesses if responses fully and accurately answer user queries given reference data. |
| Retrieved Document Relevancy | Determines if provided documents are relevant to a given query or task. |
| Toxicity | Identifies racist, sexist, biased, or otherwise inappropriate content. |
| Summarization | Judges the accuracy and clarity of summaries relative to original texts. |
| Code Generation | Evaluates correctness of generated code relative to task instructions. |
| Human vs AI | Compares AI-generated text directly against human-written benchmarks. |
| Citation | Checks correctness and relevance of citations to original sources. |
| User Frustration | Detects user frustration signals within conversational AI contexts. |
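To make the precision and F-score target concrete, here is a minimal sketch of scoring a judge against a labeled benchmark. The labels and data are invented for illustration, and `precision_recall_f1` is a hypothetical helper rather than a function from any specific library.

```python
def precision_recall_f1(judge_labels, gold_labels, positive):
    """Score judge labels against gold labels for one positive class."""
    if len(judge_labels) != len(gold_labels):
        raise ValueError("label lists must have the same length")
    pairs = list(zip(judge_labels, gold_labels))
    tp = sum(j == positive and g == positive for j, g in pairs)
    fp = sum(j == positive and g != positive for j, g in pairs)
    fn = sum(j != positive and g == positive for j, g in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Invented benchmark: "H" = hallucinated, "F" = factual.
gold = ["H", "H", "H", "F", "F", "F", "F", "H"]
judge = ["H", "H", "F", "F", "F", "H", "F", "H"]

precision, recall, f1 = precision_recall_f1(judge, gold, positive="H")
print(precision, recall, f1)  # 0.75 0.75 0.75
```

Here the judge makes one false positive and one false negative out of eight examples, so it would clear a 70% bar on both precision and F-score.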

Agent Evaluation With LLM as a Judge

LLM judges aren’t just for evaluating static generations. They’re also being used to evaluate multi-step agent behavior — including planning, tool use, and reflection. In addition to offering custom evals, Arize supports templates for structured agent evaluation across dimensions like:

| Evaluation Type | Description | Evaluation Criteria |
| --- | --- | --- |
| Agent Planning | Assesses the quality of an agent’s plan to accomplish a given task using available tools. | Does the plan include only valid and applicable tools for the task? Are the tools used sufficient to accomplish the task? Will the plan, as outlined, successfully achieve the desired outcome? Is this the shortest and most efficient plan to accomplish the task? |
| Agent Tool Selection | Evaluates whether the agent selects the appropriate tool(s) for a given input or question. | Does the selected tool align with the user’s intent? Is the tool capable of addressing the specific requirements of the task? Are there more suitable tools available that the agent overlooked? |
| Agent Parameter Extraction | Checks if the agent correctly extracts and utilizes parameters required for tool execution. | Are all necessary parameters accurately extracted from the input? Are the parameters formatted correctly for the tool’s requirements? Is there any missing or extraneous information in the parameters? |
| Agent Tool Calling | Determines if the agent’s tool invocation is appropriate and correctly structured. | Is the correct tool called for the task? Are the parameters passed to the tool accurate and complete? Does the tool call adhere to the expected syntax and structure? |
| Agent Path Evaluation | Analyzes the sequence of steps the agent takes to complete a task, focusing on efficiency and correctness. | Does the agent follow a logical and efficient sequence of actions? Are there unnecessary or redundant steps? Does the agent avoid loops or dead-ends in its reasoning? |
| Agent Reflection | Encourages the agent to self-assess its performance and make improvements. | Can the agent identify errors or suboptimal decisions in its process? Does the agent propose viable alternatives or corrections? Is the reflection process constructive, and does it lead to better outcomes? |
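As one possible way to turn a row of this table into a judge prompt, the sketch below builds a tool-selection prompt from the criteria listed above. It is not the Arize template: the function name, prompt wording, tool schema, and the correct/incorrect labels are all assumptions for illustration.

```python
import json

# Criteria copied from the Agent Tool Selection row above.
TOOL_SELECTION_CRITERIA = [
    "Does the selected tool align with the user's intent?",
    "Is the tool capable of addressing the specific requirements of the task?",
    "Are there more suitable tools available that the agent overlooked?",
]


def build_tool_selection_prompt(question, available_tools, selected_tool):
    """Assemble a judge prompt for one tool-selection decision (hypothetical format)."""
    criteria = "\n".join(f"- {c}" for c in TOOL_SELECTION_CRITERIA)
    return (
        "You are evaluating whether an AI agent chose the right tool.\n\n"
        f"User question: {question}\n\n"
        f"Available tools (JSON):\n{json.dumps(available_tools, indent=2)}\n\n"
        f"Selected tool: {selected_tool}\n\n"
        f"Consider these criteria:\n{criteria}\n\n"
        "Explain your reasoning, then answer with a single word on the last line: "
        "correct or incorrect."
    )


# Made-up tool definitions for the example.
tools = [
    {"name": "weather_lookup", "description": "Get the forecast for a city."},
    {"name": "calculator", "description": "Evaluate arithmetic expressions."},
]
tool_prompt = build_tool_selection_prompt(
    "Will it rain in Lisbon tomorrow?", tools, "weather_lookup"
)
print(tool_prompt)
```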

LLM-as-a-Judge: Basis In Research

Several academic papers establish the legitimacy, efficacy and limitations of LLM as a judge.

| Paper | Authors & Affiliations | Year | Why It Matters |
| --- | --- | --- | --- |
| Judging LLM-as-a-Judge with MT-Bench | Lianmin Zheng et al. (UC Berkeley) | 2023 | Demonstrated GPT-4’s evaluations closely align with humans. |
| From Generation to Judgment: Survey | Dawei Li et al. (Arizona State University) | 2024 | Comprehensive overview identifying best practices and pitfalls. |
| G-Eval: GPT-4 for Better Human Alignment | Yang Liu et al. (Microsoft Research) | 2023 | Provided methods for structured evaluations that outperform traditional metrics. |
| GPTScore: Evaluate as You Desire | Jinlan Fu et al. (NUS & CMU) | 2024 | Allowed flexible, tailored evaluations through natural language prompts. |
| Training an LLM-as-a-Judge Model: Pipeline & Lessons | Renjun Hu et al. (Alibaba Cloud) | 2025 | Offered practical insights into effectively training custom LLM judges. |
| Critical Evaluation of AI Feedback | Archit Sharma et al. (Stanford & Toyota Research Institute) | 2024 | Explored replacing humans with LLMs in RLHF pipelines. |
| LLM-as-a-Judge in Extractive QA | Xanh Ho et al. (University of Nantes & NII Japan) | 2025 | Showed LLM judgments align better than EM/F1 with human evaluation. |
| A Survey on LLM-as-a-Judge | Jiawei Zhang et al. (University of Illinois Urbana-Champaign) | 2025 | Offers a comprehensive taxonomy of LLM-as-a-Judge frameworks, benchmarks, and use cases across NLP and multi-modal domains. Emphasizes challenges like bias, calibration, and evaluation reproducibility. |

Prompting LLMs to Be Effective Judges

Getting reliable results from LLM-as-a-Judge starts with clear and well-structured prompts. The way you pose the evaluation task matters. Here are a few commonly used formats.

Common LLM-as-a-Judge Prompt Formats

- Single-answer evaluation: Present a user input and a single model response. Ask the LLM to rate it on specific criteria like correctness, helpfulness, or fluency.

- Pairwise evaluation: Provide two outputs for the same input and ask the LLM to select the better one, explaining its choice.
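The two formats above can be sketched as simple prompt builders. The wording, the 1 to 5 scale, and the function names are assumptions chosen for this example; any real template would need tuning against your own data.

```python
def build_single_answer_prompt(user_input, response, criteria):
    """Single-answer format: one response, rated on the given criteria."""
    return (
        "You are an expert AI evaluator.\n"
        f"User input: {user_input}\n"
        f"Model response: {response}\n"
        f"Rate the response from 1 to 5 on: {', '.join(criteria)}.\n"
        "Respond with the rating and a short justification."
    )


def build_pairwise_prompt(user_input, response_a, response_b, criteria):
    """Pairwise format: two responses to the same input, pick the better one."""
    return (
        "You are an expert AI evaluator.\n"
        f"User input: {user_input}\n"
        f"Response A: {response_a}\n"
        f"Response B: {response_b}\n"
        f"Considering {', '.join(criteria)}, which response is better?\n"
        "Answer with A or B and explain your choice."
    )


single_prompt = build_single_answer_prompt(
    "What is the boiling point of water at sea level?",
    "100 degrees Celsius.",
    ["correctness", "helpfulness"],
)
pairwise_prompt = build_pairwise_prompt(
    "What is the boiling point of water at sea level?",
    "100 degrees Celsius.",
    "About 90 degrees Celsius.",
    ["correctness", "helpfulness"],
)
print(single_prompt)
print(pairwise_prompt)
```

Single-answer prompts yield an absolute score per response, while pairwise prompts yield a relative preference, so the two are aggregated differently downstream.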

Prompting Best Practices

- Include explicit criteria (e.g., “Rate based on helpfulness, factual accuracy, and completeness.”)

- Ask for chain-of-thought reasoning before the final score or decision

- Request structured outputs (e.g., a JSON format or bullet points)
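A structured output is only useful if it is validated before use. The sketch below assumes the judge was asked to reply with a JSON object whose `reasoning` field comes before an integer `score` from 1 to 5; the field names, the scale, and the sample reply are assumptions for illustration.

```python
import json

ALLOWED_SCORES = {1, 2, 3, 4, 5}


def parse_judge_output(raw):
    """Extract and validate the JSON object in a judge reply."""
    # Tolerate stray text around the JSON by slicing from the first "{" to the last "}".
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in judge output")
    try:
        data = json.loads(raw[start:end + 1])
    except json.JSONDecodeError as exc:
        raise ValueError(f"judge output is not valid JSON: {exc}") from exc
    reasoning = data.get("reasoning")
    score = data.get("score")
    if not isinstance(reasoning, str) or not reasoning.strip():
        raise ValueError("missing or empty 'reasoning' field")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(score, bool) or not isinstance(score, int) or score not in ALLOWED_SCORES:
        raise ValueError("'score' must be an integer from 1 to 5")
    return {"reasoning": reasoning, "score": score}


# Made-up judge reply with some text before the JSON.
sample_reply = (
    "Here is my evaluation:\n"
    '{"reasoning": "The answer is accurate but omits one step.", "score": 4}'
)
parsed = parse_judge_output(sample_reply)
print(parsed["score"])  # 4
```

Rejecting malformed replies with an explicit error makes it easy to count and retry them instead of silently recording a bad score.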

Example prompt:

You are an expert AI evaluator. Given a user question and two model answers, select which response is better and explain why.

Question: ...

Answer A: ...

Answer B: ...

Evaluation Criteria: helpfulness, factuality, coherence.

Respond in this format:

Better Answer: A or B

Explanation: ...

Limitations & Pitfalls

Some LLMs may introduce subtle biases when used as evaluators. For example, certain models tend to prefer the response in position A in pairwise comparisons, and they often favor longer responses. These behaviors can skew evaluation outcomes. In general, evaluation quality varies by model and prompt, so consistency in prompt formatting and evaluation criteria is key to getting trustworthy results.
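A common mitigation for position bias is to run each pairwise comparison twice with the answers swapped and keep only verdicts that agree. The sketch below reuses the example prompt format from this article; the `judge` argument is any callable that maps a prompt to text, and the two stub judges are hypothetical stand-ins for a real model call.

```python
import re

PAIRWISE_TEMPLATE = (
    "You are an expert AI evaluator. Given a user question and two model answers, "
    "select which response is better and explain why.\n"
    "Question: {question}\n"
    "Answer A: {answer_a}\n"
    "Answer B: {answer_b}\n"
    "Evaluation Criteria: helpfulness, factuality, coherence.\n"
    "Respond in this format:\n"
    "Better Answer: A or B\n"
    "Explanation: ..."
)


def parse_verdict(text):
    """Pull 'A' or 'B' out of a reply that follows the 'Better Answer:' format."""
    match = re.search(r"Better Answer:\s*([AB])\b", text)
    if match is None:
        raise ValueError("judge reply did not contain a verdict")
    return match.group(1)


def debiased_pairwise(judge, question, first, second):
    """Return 'first', 'second', or 'inconsistent' after judging both orderings."""
    reply_1 = judge(PAIRWISE_TEMPLATE.format(question=question, answer_a=first, answer_b=second))
    reply_2 = judge(PAIRWISE_TEMPLATE.format(question=question, answer_a=second, answer_b=first))
    winner_1 = {"A": "first", "B": "second"}[parse_verdict(reply_1)]
    winner_2 = {"A": "second", "B": "first"}[parse_verdict(reply_2)]  # positions swapped
    return winner_1 if winner_1 == winner_2 else "inconsistent"


def always_a_judge(prompt):
    # Stub with pure position bias: always prefers whatever is in slot A.
    return "Better Answer: A\nExplanation: stub"


def content_judge(prompt):
    # Stub that looks at content: prefers the answer mentioning Paris.
    answer_a = prompt.split("Answer A: ", 1)[1].split("\n", 1)[0]
    pick = "A" if "Paris" in answer_a else "B"
    return f"Better Answer: {pick}\nExplanation: stub"


biased_result = debiased_pairwise(always_a_judge, "Capital of France?", "Paris", "Lyon")
fair_result = debiased_pairwise(content_judge, "Capital of France?", "Paris", "Lyon")
print(biased_result, fair_result)  # inconsistent first
```

The swap doubles the number of judge calls, and it addresses position bias only; length bias needs separate handling, such as an explicit instruction in the criteria.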

Keep in mind:

- Evaluation quality depends heavily on prompt structure

- Use chain-of-thought (CoT) or reasoning steps to boost reliability

- Compare against a human gold standard (especially early on)
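Comparing against a human gold standard can be as simple as measuring raw agreement and Cohen's kappa, which corrects agreement for chance. The sketch below uses invented labels, and the function name is hypothetical.

```python
from collections import Counter


def agreement_and_kappa(judge_labels, human_labels):
    """Return (raw agreement, Cohen's kappa) for two equal-length label lists."""
    if len(judge_labels) != len(human_labels) or not judge_labels:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(judge_labels)
    observed = sum(j == h for j, h in zip(judge_labels, human_labels)) / n
    judge_counts, human_counts = Counter(judge_labels), Counter(human_labels)
    labels = set(judge_counts) | set(human_counts)
    # Agreement expected by chance, from each rater's label frequencies.
    expected = sum(judge_counts[l] * human_counts[l] for l in labels) / (n * n)
    if expected == 1.0:
        return observed, 1.0  # both raters used a single identical label
    return observed, (observed - expected) / (1 - expected)


# Invented labels for ten responses.
human = ["good", "good", "bad", "bad", "good", "bad", "good", "good", "bad", "good"]
judge = ["good", "good", "bad", "good", "good", "bad", "good", "bad", "bad", "good"]

agreement, kappa = agreement_and_kappa(judge, human)
print(round(agreement, 2), round(kappa, 2))  # 0.8 0.58
```

Here the judge and the human agree on 8 of 10 items, but chance agreement is 0.52, so kappa is noticeably lower than raw agreement.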

Big Picture

LLM-as-a-Judge isn’t just a trend — it’s quickly becoming the default evaluation approach in many production AI stacks. When used carefully, it enables faster iteration, better tracking, and more rigorous quality control. And best of all: it’s fully automated.