What is LLM Evaluation?

Chapter Summary

Overview: The introduction provides an overview of LLM evaluation, and emphasizes the importance of evaluating LLM applications to ensure they meet user expectations and perform reliably. It highlights the shift from traditional software testing to dynamic, context-sensitive evaluations that account for LLMs’ non-deterministic nature.

Get started with LLM evaluation by following our product documentation, which provides step-by-step guidance to begin implementing effective evaluation strategies.

Large Language Model (LLM) evaluation is the process of systematically assessing how well your application performs when powered by an LLM. It helps you understand and measure key factors like relevance, hallucination rate, latency, and task accuracy.

You’re constantly iterating—modifying prompts, adjusting temperature settings, or refining your retrieval strategy. But without evaluation, you’re flying blind. You won’t know whether a change improves performance, breaks a use case, or has no impact at all.

Why Is LLM Evaluation Important?

LLMs are powerful tools that let developers build intelligent systems without collecting labeled data or training custom models. With just a prompt, you can generate content, extract structured data, summarize documents, and more.

That ease of development is a double-edged sword. While LLMs demo well, production environments are far less forgiving. A prompt that works in a sandbox may fail under real-world complexity, user diversity, or edge cases.

That’s where evaluation comes in. Evaluation allows you to:

- Track improvements as you iterate on prompts, parameters, or retrieval.

- Detect regressions before they impact users.

- Quantify quality across axes like relevance, hallucination rate, coherence, latency, and more.

- <strong”>Benchmark alternatives (e.g., different models, strategies, or tools).

But not all evals are created equal. The dataset you choose to evaluate against plays a huge role. A narrow test set might produce strong metrics, but those results won’t generalize to production. To trust your evals, your dataset needs to reflect real use cases and user behavior.

What’s in this LLM Evaluation Guide?

Our Definitive Guide to LLM Evaluation provides a structured approach to building, implementing, and optimizing evaluation strategies for applications powered by large language models (LLMs). As the use of LLMs expands across industries, this guide outlines the tools, frameworks, and best practices necessary to evaluate and improve these systems effectively.

The guide begins by introducing LLM as a Judge, an approach where LLMs assess their own or other models’ outputs. This method automates evaluations, reducing the reliance on costly human annotations while providing scalable and consistent assessments. The foundational discussion then moves to different evaluation types, including token classification and synthetic data evaluation, emphasizing the importance of selecting the right approach for specific use cases.

In pre-production stages, curating high-quality datasets is essential for reliable evaluations. The guide details methods like synthetic data generation, human annotation, and benchmarking LLM evaluation metrics to create robust test datasets. These datasets help establish LLM evaluation benchmarks, ensuring that the evaluation process aligns with real-world scenarios.

For teams integrating LLMs into production workflows, we explore CI/CD testing frameworks, which enable continuous iteration and validation of updates. By incorporating experiments and automated tests into pipelines, teams can maintain stability and performance while adapting to evolving requirements.

As applications move into production, LLM guardrails play a critical role in mitigating risks, such as hallucinations, toxic responses, or security vulnerabilities. This section covers input and output validation strategies, dynamic guards, and few-shot prompting techniques for addressing edge cases and attacks.

Finally, we highlight practical use cases, including RAG evaluation, which focuses on assessing retrieval-augmented generation systems for document relevance and response accuracy, to ensure seamless performance across all components. By combining insights from metrics, AI guardrails, and benchmarks, teams can holistically assess their applications’ performance and ensure alignment with business goals.

This guide provides everything needed to evaluate LLMs effectively, from pre-production dataset preparation to production-grade safeguards and ongoing improvement strategies. It is an essential resource for AI teams aiming to deliver reliable, safe, and impactful LLM-powered solutions.

LLM Evaluation: Getting Started

Paradigm Shift: Integration Testing and Unit Testing to LLM Evaluations

While at first glance the shift from traditional software testing methods like integration and unit testing to LLM application evaluations may seem drastic, both approaches share a common goal: ensuring that a system behaves as expected and delivers consistent, reliable outcomes. Fundamentally, both testing paradigms aim to validate the functionality, reliability, and overall performance of an application.

In traditional software engineering:

- Unit Testing isolates individual components of the code, ensuring that each function works correctly on its own.

- Integration Testing focuses on how different modules or services work together, validating the correctness of their interactions.

In the world of LLM applications, these goals remain, but the complexity of behavior increases due to the non-deterministic nature of LLMs.

- Dynamic Behavior Evaluation: Rather than testing isolated code components, LLM evaluations focus on how the application responds to various inputs in real-time, examining not just accuracy but also context relevance, coherence, and user experience.

- Task-Oriented Assessments: Evaluations are now centered on the application’s ability to complete user-specific tasks, such as resolving queries, generating coherent responses, or interacting seamlessly with external systems (e.g., function calling).

Both paradigms emphasize predictability and consistency, with the key difference being that LLM applications require dynamic, context-sensitive evaluations, as their outputs can vary with different inputs. However, the underlying principle remains: ensuring that the system (whether it’s traditional code or an LLM-driven application) performs as designed, handles edge cases, and delivers value reliably.

LLM Eval Types

In this section, we’ll review a number of different ways to approach LLM evaluations: LLM as a Judge, Code based evaluations, and online & offline LLM evaluations.

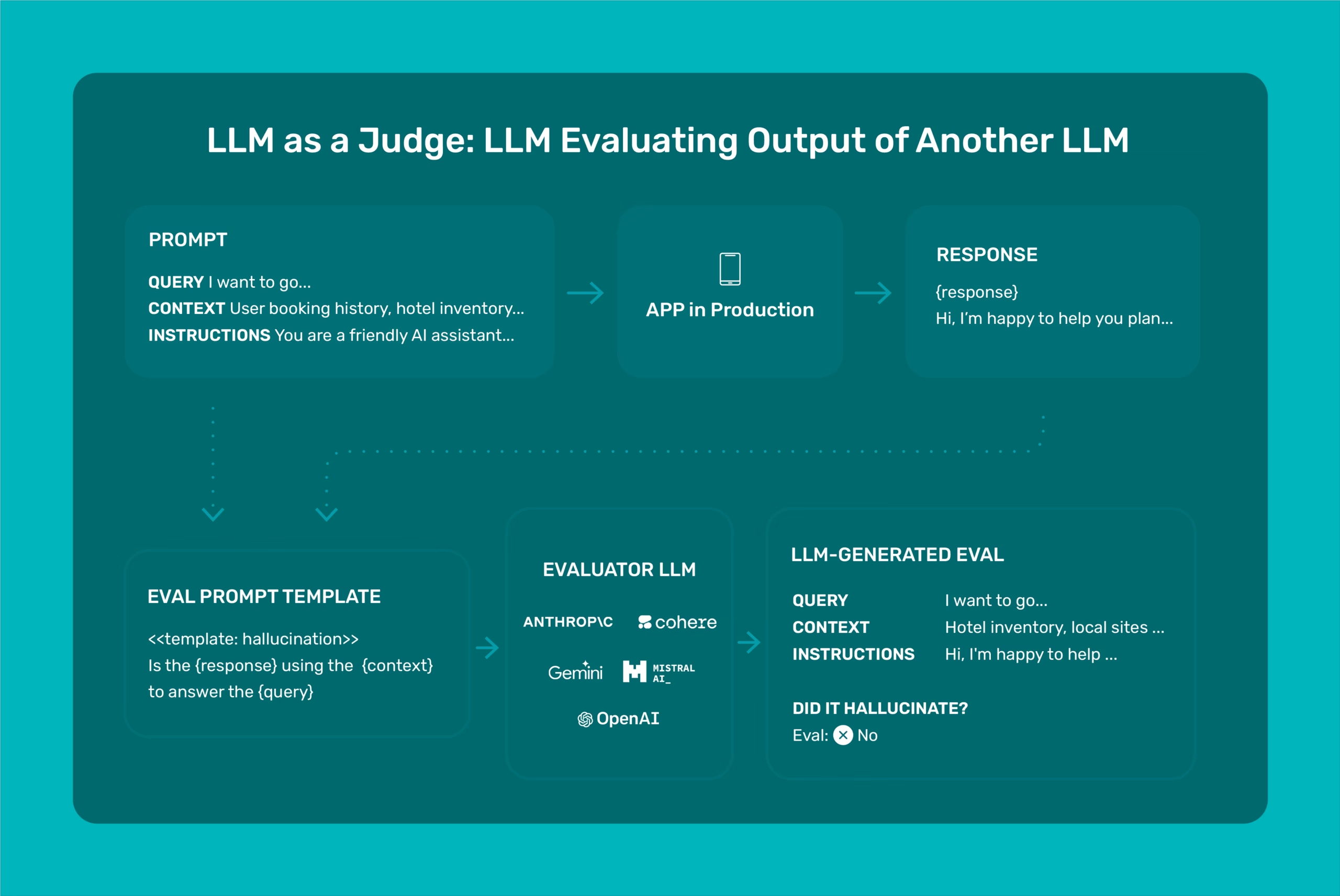

LLM as a Judge

Often called LLM as a judge, LLM-assisted evaluation uses AI to evaluate AI — with one LLM evaluating the outputs of another and providing explanations.

LLM-assisted evaluation is often needed because user feedback or any other “source of truth” is extremely limited and often nonexistent (even when possible, human labeling is still expensive) and it is easy to make LLM applications complex.

Fortunately, we can use the power of LLMs to automate the evaluation. In this eBook, we will delve into how to set this up and make sure it is reliable.

While using AI to evaluate AI may sound circular, we have always had human intelligence evaluate human intelligence (for example, at a job interview or your college finals). Now AI systems can finally do the same for other AI systems.

The process here is for LLMs to generate synthetic ground truth that can be used to evaluate another system. Which begs a question: why not use human feedback directly? Put simply, because you often do not have enough of it.

Getting human feedback on even one percent of your input/output pairs is a gigantic feat. Most teams don’t even get that. In such cases, LLM-assisted evals help you benchmark and test in development prior to production. But in order for this process to be truly useful, it is important to have evals on every LLM sub-call, of which we have already seen there can be many.

You are given a question, an answer and reference text. You must determine whether the given answer correctly answers the question based on the reference text. Here is the data:

[BEGIN DATA]

************

[Question]: {question}

************

[Reference]: {context}

************

[Answer]: {sampled_answer}

[END DATA]

Your response must be a single word, either “correct” or “incorrect”, and should not contain any text or characters aside from that word. “correct” means that the question is correctly and fully answered by the answer. “incorrect” means that the question is not correctly or only partially answered by the answer.

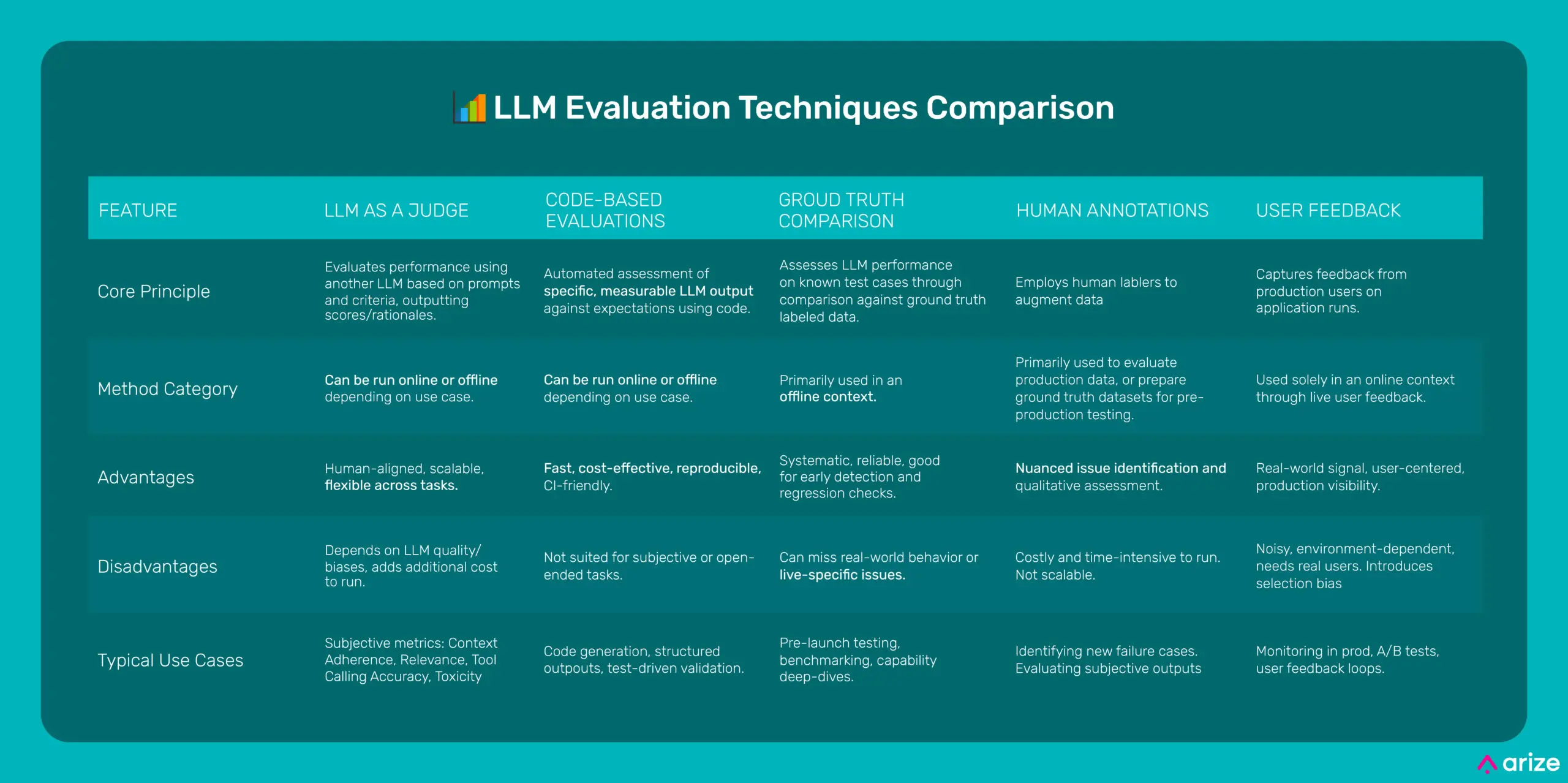

LLM Evaluation Techniques Comparison Chart

We’ve reviewed various LLM eval methodologies here to assess different aspects of their capabilities and real-world effectiveness. This table provides a comparison of several key evaluation methods, including those leveraging the power of other LLMs for judgment, automated code-based assessments, and the distinct perspectives offered by online and offline evaluation strategies.

(Click to expand)

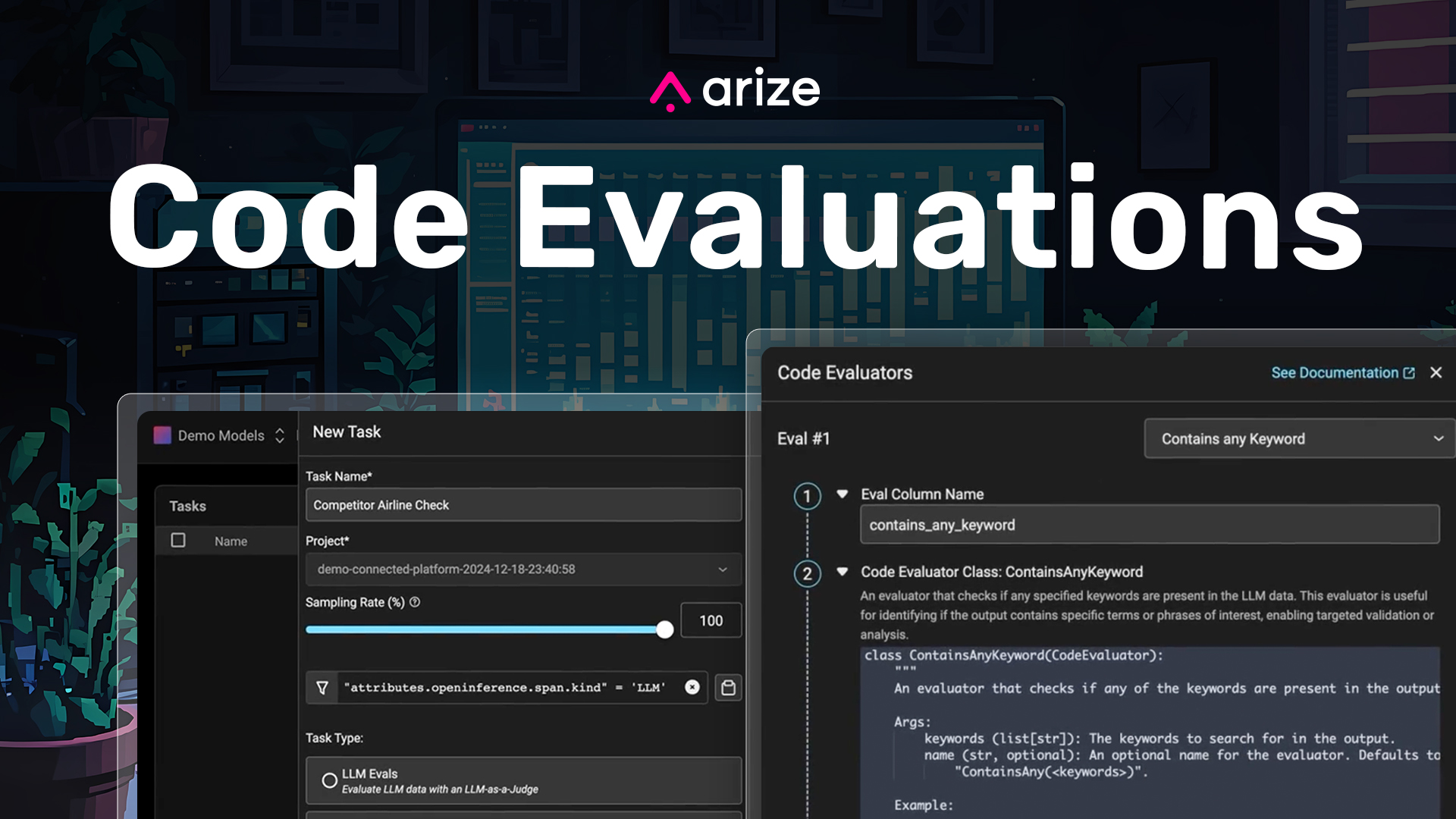

Code-Based LLM Evaluations

Code-based LLM evaluations are methods that use programming code to assess the performance, accuracy, or behavior of large language models (LLMs). These evaluations typically involve creating automated scripts or CI/CD test cases to measure how well an LLM performs on specific tasks or datasets. A code-based eval is essentially a python or JS/TS unit test.

Code-based evaluation is sometimes preferred as a way to reduce costs as it does not introduce token usage or latency. When evaluating a task such as code generation, a code-based eval will often be the preferred method since it can be hard coded, and follows a set of rules. However, for evaluation that can be subjective, like hallucination, there’s no code evaluator that could provide that label reliably, in which case LLM as a Judge needs to be used.

Common use cases for code-based evaluators include:

LLM Application Testing

Code-based evaluations can test the LLM’s performance at various levels—focusing on ensuring that the output adheres to the expected format, includes necessary data, and passes structured, automated tests.

- Test Correct Structure of Output: In many applications, the structure of the LLM’s output is as important as the content. For instance, generating JSON-like responses, specific templates, or structured answers can be critical for integrating with other systems.

-

Test Specific Data in Output: Verifying that the LLM output contains or matches specific data points is crucial in domains such as legal, medical, or financial fields where factual accuracy matters.

-

Structured Tests: Automated structured tests can be employed to validate whether the LLM behaves as expected across various scenarios. This might involve comparing the outputs to expected responses or validating edge cases.

Evaluating Your Evaluator

Evaluating the effectiveness of your evaluation strategy ensures that you’re accurately measuring the model’s performance and not introducing bias or missing crucial failure cases. Code-based evaluation for evaluators typically involves setting up meta-evaluations, where you evaluate the performance or validity of the evaluators themselves.

In order to evaluate your evaluator, teams need to create a set of hand annotated test datasets. These test datasets do not need to be large in size, 100+ examples are typically enough to evaluate your evals. In Arize Phoenix, we include test datasets with each evaluator to help validate the performance for each model type.

We recommend this guide for for a more in depth review of how to improve and check your evaluators.

“Evaluating the effectiveness of your evaluation strategy ensures that you’re accurately measuring the model’s performance and not introducing bias or missing crucial failure cases.”

Eval Output Type

Depending on the situation, the evaluation can return different types of results:

- Categorical (Binary): The evaluation results in a binary output, such as true/false or yes/no, which can be easily represented as 1/0. This simplicity makes it straightforward for decision-making processes but lacks the ability to capture nuanced judgements.

-

Categorical (Multi-class): The evaluation results in one of several predefined categories or classes, which could be text labels or distinct numbers representing different states or types.

-

Continuous Score: The evaluation results in a numeric value within a set range (e.g. 1-10), offering a scale of measurement. We don’t recommend using this approach.

-

Categorical Score: A value of either 1 or 0. The categorical score can be pretty useful as you can average your scores but don’t have the disadvantages of continuous range.

Although score evals are an option, we recommend using categorical evaluations in production environments. LLMs often struggle with the subtleties of continuous scales, leading to inconsistent results even with slight prompt modifications or across different models. Repeated tests have shown that scores can fluctuate significantly, which is problematic when evaluating at scale.

Categorical evals, especially multi-class, strike a balance between simplicity and the ability to convey distinct evaluative outcomes, making them more suitable for applications where precise and consistent decision-making is important.

class ExampleResult(Evaluator):

def evaluate(self, input, output, dataset_row, metadata, **kwargs) -> EvaluationResult:

print("Evaluator Using All Inputs")

return(EvaluationResult(score=score, label=label, explanation=explanation)

class ExampleScore(Evaluator):

def evaluate(self, input, output, dataset_row, metadata, **kwargs) -> EvaluationResult:

print("Evaluator Using A float")

return 1.0

class ExampleLabel(Evaluator):

def evaluate(self, input, output, dataset_row, metadata, **kwargs) -> EvaluationResult:

print("Evaluator label")

return "good"

Online vs Offline Evaluation

Evaluating LLM applications across their lifecycle requires a two-pronged approach: offline and online. Offline LLM evaluation generally happens during pre-production, and involves using curated or outside datasets to test the performance of your application. Online LLM evaluation runs once your app is in production, and is run on production data. The same evaluator can be used to run online and offline evaluations.

Offline LLM Evaluation

Offline LLM evaluation generally occurs during the development and testing phases of the application lifecycle. It involves evaluating the model or system in controlled environments, isolated from live, real-time data. The primary focus of offline evaluation is pre-deployment validation CI/CD, enabling AI engineers to test the model against a predefined set of inputs (like golden datasets) and gather insights on performance consistency before the model is exposed to real-world scenarios. This process is crucial for:

-

Prompt and Output Validation: Offline tests allow teams to evaluate prompt engineering changes and different model versions before committing them to production. AI engineers can experiment with prompt modifications and evaluate which variants produce the best outcomes across a range of edge cases.

-

Golden Datasets: Evaluating LLMs using golden datasets (high-quality, annotated data) ensures that the LLM application performs optimally in known scenarios. These datasets represent a controlled benchmark, providing a clear picture of how well the LLM application processes specific inputs, and enabling engineers to debug issues before deployment.

-

Pre-production Check: Offline evaluation is well-suited for running CI/CD tests on datasets that reflect complex user scenarios. Engineers can check the results of offline tests and changes prior to pushing those changes to production.

“Having one unified system for both offline and online evaluation allows you to easily use consistent evaluators for both techniques.”

Note: The “offline” part of “offline evaluations” refers to the data that is being used to evaluate the application. In offline evaluations, the data is pre-production data that has been curated and/or generated, instead of production data captured from runs of your application. Because of this, the same evaluator can be used for offline and online evaluations. Having one unified system for both offline and online evaluation allows you to easily use consistent evaluators for both techniques.

Online LLM Evaluation

Online LLM evaluation, by contrast, takes place in real-time, during production. Once the application is deployed, it starts interacting with live data and real users, where performance needs to be continuously monitored. Online evaluation provides real-world feedback that is essential for understanding how the application behaves under dynamic, unpredictable conditions. It focuses on:

-

Continuous Monitoring: Applications deployed in production environments need constant monitoring to detect issues such as degradation in performance, increased latency, or undesirable outputs (e.g., hallucinations, toxicity). Automated online evaluation systems can track application outputs in real time, alerting engineers when specific thresholds or metrics fall outside acceptable ranges.

-

Real-Time Guardrails: LLMs deployed in sensitive environments may require real-time guardrails to monitor for and mitigate risky behaviors like generating inappropriate, hallucinated, or biased content. Online evaluation systems can incorporate these guardrails to ensure the LLM application proactively being protected rather than reactively.

Choosing Between Online and Offline LLM Evaluation

While it may seem advantageous to apply online evaluations universally, they introduce additional costs in production environments. The decision to use online evaluations should be driven by the specific needs of the application and the real-time requirements of the business. AI engineers can typically group their evaluation needs into three categories: offline evaluation, guardrails, and online evaluation.

-

Offline evaluation: Offline evaluations are used for checking LLM application results prior to releasing to production. Use offline evaluations for CI/CD checks of your LLM application.

Example: Customer service chatbot where you want to make certain changes to a prompt do not break previously correct responses.

-

Guardrail: AI engineers want to know immediately if something isn’t right and block or revise the output. These evaluations run in real-time and block or flag outputs when they detect that the system is veering off-course.

Example: An LLM application generates automated responses for a healthcare system. Guardrails check for critical errors in medical advice, preventing harmful or misleading outputs from reaching users in real time.

-

Online evaluation: AI engineers don’t want to block or revise the output, but want to know immediately if something isn’t right. This approach is useful when it’s important to track performance continuously but where it’s not critical to stop the model’s output in real time.

Example: An LLM application generates personalized marketing emails. While it’s important to monitor and ensure the tone and accuracy are correct, minor deviations in phrasing don’t require blocking the message. Online evaluations flag issues for review without stopping the email from being sent.

Download this article

Join the Arize community and continue your journey into LLM evaluation.