The Key To Building Transparent AI Software Systems

Best practices for ensuring robots do not touch ethical third rails

An AI algorithm is a software system that can automate or perform tasks that typically require human intelligence. These systems often include more than a trained model: they can also contain explicit algorithmic logic, such as business rules, that integrates the model's output into the larger AI system to complete an end-to-end task.

To implement ethical AI systems properly, the software used to deploy the models must be able to measure the live algorithm's behavior end to end and to mitigate problems in it. By intentionally building in methods to audit AI, we can help keep a good robot from going off the rails, and if it does, we'll have the tools to course-correct it.

So what does it take to build ethics into an AI's software infrastructure? How can we design these systems up front so that we will be able to audit AI models for bias?

What Does It Take To Build Ethics Into AI Software Infrastructure?

A truly auditable AI system should have enough transparency that users and creators can answer the questions "what data went into the model, what predictions came out, and what adjustments were made to those predictions before the output was used?" If bias or quality issues are detected, the levers built into an ethical AI system can be used to mitigate the bias or correct the quality issues that arise.

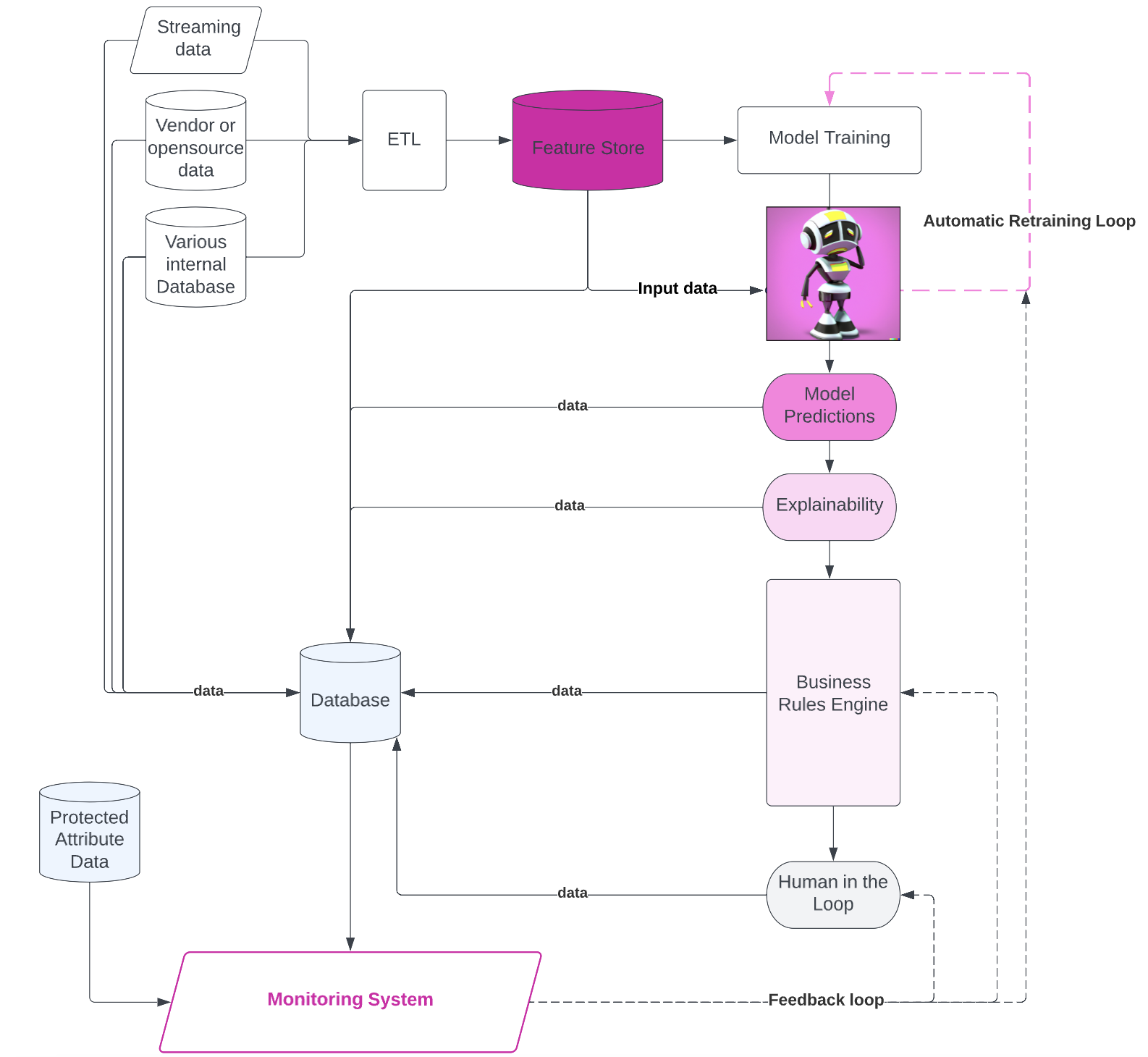

This proposed system design is a generalized infrastructure that could be adapted for many business use-cases relying on live AI.

While the system integrates the common core components for live AI models, such as a feature store, retraining pipeline, and model serving layer, this design also includes a rules engine to capture all business decisions applied to adjust the model output and determine how the model is used. The monitoring system scoops up all this data and augments it with protected demographic data so that the system's output and model usage can be measured for bias or misuse.
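A minimal sketch of that monitoring-side join, assuming the prediction log and the demographic store are separate collections keyed by a shared `prediction_id` (the key and both in-memory stores are hypothetical stand-ins for real tables or services). The point it illustrates is that protected attributes are attached only when measuring, never on the model-serving path.

```python
# Hypothetical stand-ins for two separately stored, separately access-controlled
# datasets. The model-serving path writes PREDICTION_LOG but never reads DEMOGRAPHICS.
PREDICTION_LOG = [
    {"prediction_id": "p-001", "prediction": 1, "label": 1},
    {"prediction_id": "p-002", "prediction": 0, "label": 1},
    {"prediction_id": "p-003", "prediction": 1, "label": 0},
    {"prediction_id": "p-004", "prediction": 0, "label": 0},
]

DEMOGRAPHICS = {  # keyed by prediction_id; kept isolated from model inputs
    "p-001": {"group": "A"},
    "p-002": {"group": "B"},
    "p-003": {"group": "B"},
}


def join_for_monitoring(prediction_log, demographics):
    """Attach protected attributes to logged predictions, for bias measurement only."""
    joined = []
    for row in prediction_log:
        demo = demographics.get(row["prediction_id"])
        if demo is None:
            continue  # no demographic record, so the row cannot count toward group metrics
        joined.append({**row, **demo})  # builds a new dict; the log itself is not modified
    return joined


monitoring_rows = join_for_monitoring(PREDICTION_LOG, DEMOGRAPHICS)
for row in monitoring_rows:
    print(row)
```

Dropping rows that have no demographic record is one design choice; counting them in an "unknown" bucket is another, and it makes gaps in demographic coverage visible.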

This design provides core functionality that helps ensure the model operates in a responsible, ethical, and unbiased manner. It includes:

- A data collection system with coverage for all system-generated data

- A metrics and monitoring system that tracks model performance and bias in live models, providing ML observability for both data and models

- Multiple levers to pull to mitigate potential bias, such as:

  - A targeted model retraining loop for updating the model with better data

  - A rules engine for programming in explicit logic, such as model overrides or bias mitigation

  - A human-in-the-loop quality check system

What Data Is Needed To Audit An AI System?

The data required to completely audit an AI system is extensive. It includes:

- Features (the model input)

- Predictions (the model output)

- SHAP values or another measure of feature importance that provides pointwise (per-prediction) explainability, so we can trace why a specific prediction was made

- Any business logic or rules applied to augment the prediction

- Any human decisions applied to augment the prediction

- Isolated demographic data that can be linked to predictions for monitoring bias
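One way to capture every item in this list is to log a single record per prediction. The sketch below assumes a hypothetical `AuditRecord` schema; the field names and example values are illustrative, not a standard format. Demographic data is deliberately absent: only `prediction_id` is stored, so the isolated demographic data can be linked in later, inside the monitoring system.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from typing import Any, Optional


@dataclass
class AuditRecord:
    """Hypothetical end-to-end audit record for a single prediction."""
    prediction_id: str                    # join key for the isolated demographic data
    features: dict[str, Any]              # model input
    model_output: float                   # raw model prediction (e.g. a score)
    feature_importance: dict[str, float]  # e.g. per-feature SHAP values for this prediction
    rules_applied: list[str] = field(default_factory=list)  # business rules that fired
    final_output: Optional[float] = None  # output after rules were applied
    human_decision: Optional[str] = None  # reviewer decision, if any

    def to_json(self) -> str:
        """Serialize the record for an append-only audit log."""
        return json.dumps(asdict(self), sort_keys=True)


record = AuditRecord(
    prediction_id="p-001",
    features={"income": 52000, "tenure_months": 18},
    model_output=0.42,
    feature_importance={"income": 0.10, "tenure_months": -0.05},
    rules_applied=["missing_income_floor"],
    final_output=0.0,
)
print(record.to_json())
```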

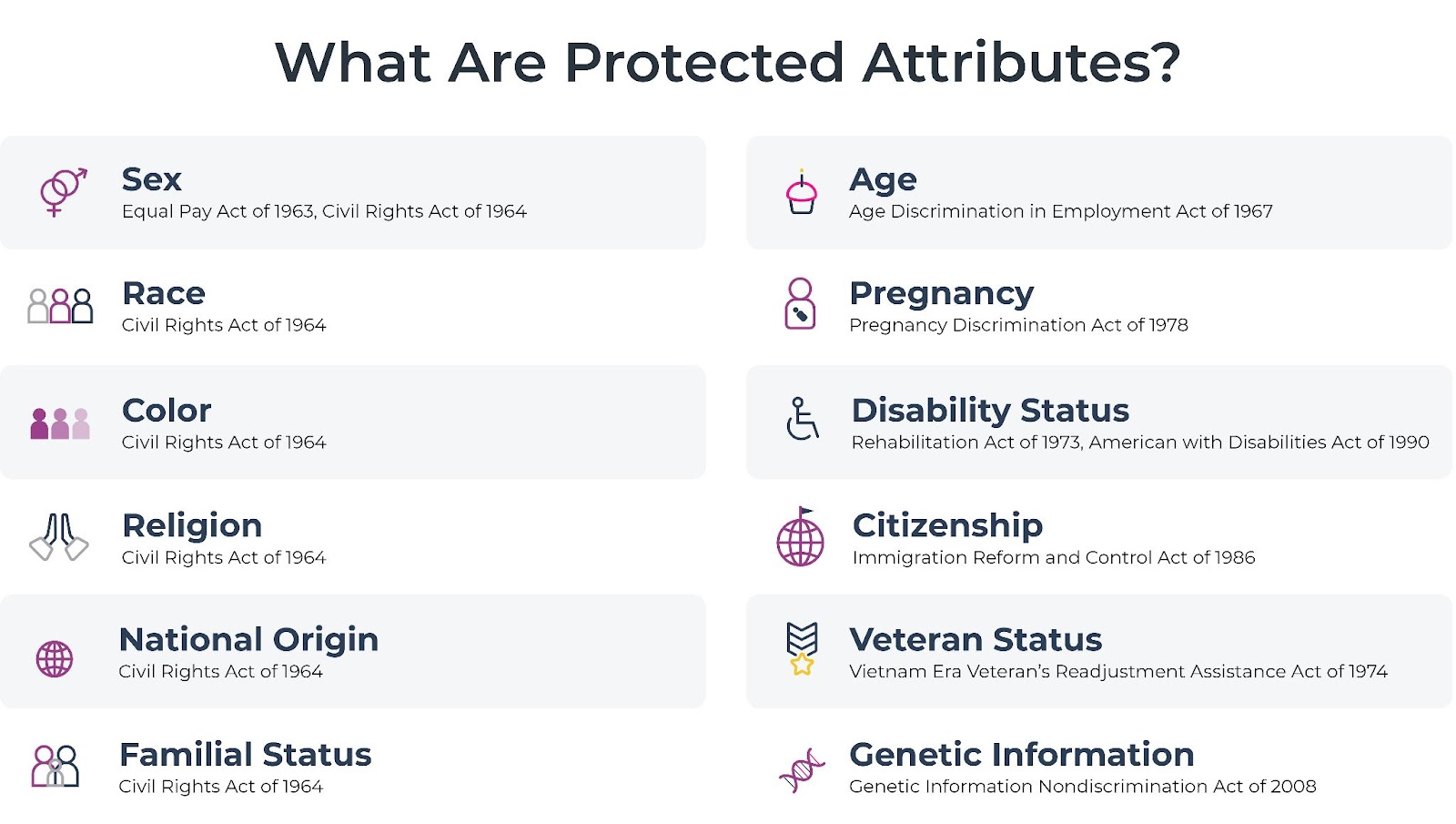

Notice that the protected demographic data is kept isolated from the system in this design. This data is only used to measure model performance to detect bias. It should never be intermingled with model inputs, as that could cause bias to be encoded into the model. Some core attributes to consider under U.S. law appear below.

Bias Measurement and Monitoring

Once that data is collected, metrics and visualizations can be used to quantify and monitor for bias trends in the live system. Standard fairness and bias metrics to consider are:

- Recall Parity: compares the model's recall (sensitivity), its ability to correctly identify true positives, for one group against another.

- False Positive Rate Parity: compares how often the model incorrectly predicts the positive class for the sensitive group against how often it does so for the base group.

- Disparate Impact: a quantitative measure of the adverse treatment of protected classes, commonly computed as the ratio of the positive-outcome rate for the sensitive group to that of the base group.
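The three metrics above can be sketched in plain Python for binary labels and predictions. This sketch assumes each metric is expressed as a ratio of the sensitive group's rate to the base group's rate, so 1.0 means parity; some tools report differences instead. The function names and the data are made up for illustration.

```python
import math


def _safe_div(numerator, denominator):
    """Divide, returning NaN instead of raising when the denominator is zero."""
    return numerator / denominator if denominator else math.nan


def group_rates(y_true, y_pred, groups, group):
    """Recall, false positive rate, and positive-prediction rate for one group."""
    tp = fp = fn = tn = 0
    for t, p, g in zip(y_true, y_pred, groups):
        if g != group:
            continue
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 1 and p == 0:
            fn += 1
        else:
            tn += 1
    return {
        "recall": _safe_div(tp, tp + fn),
        "fpr": _safe_div(fp, fp + tn),
        "positive_rate": _safe_div(tp + fp, tp + fp + fn + tn),
    }


def fairness_report(y_true, y_pred, groups, sensitive, base):
    """Ratios of sensitive-group rates to base-group rates (1.0 means parity)."""
    s = group_rates(y_true, y_pred, groups, sensitive)
    b = group_rates(y_true, y_pred, groups, base)
    return {
        "recall_parity": _safe_div(s["recall"], b["recall"]),
        "fpr_parity": _safe_div(s["fpr"], b["fpr"]),
        "disparate_impact": _safe_div(s["positive_rate"], b["positive_rate"]),
    }


# Made-up binary labels and predictions for illustration only.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["base"] * 4 + ["sensitive"] * 4

report = fairness_report(y_true, y_pred, groups, sensitive="sensitive", base="base")
print(report)
```

On this toy data, recall parity is 0.5 and disparate impact is about 0.33, well below the 0.8 threshold of the "four-fifths rule" often used as a rule of thumb in U.S. employment contexts.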

A model may appear to perform well on average, but we can dig deeper by isolating its performance for each demographic group. Tracking accuracy per group shows how fairly the model serves the population as a whole.
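A small sketch of how an average can hide a problem, using made-up data in which a large group dominates the overall number. The function names are illustrative.

```python
from collections import defaultdict


def overall_accuracy(y_true, y_pred):
    """Fraction of predictions that match the label, across everyone."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each demographic group."""
    correct, total = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


# Made-up data: 18 rows from group A (all predicted correctly) and
# 2 rows from group B (both predicted incorrectly).
y_true = [1, 0] * 10
groups = ["A"] * 18 + ["B"] * 2
y_pred = y_true[:18] + [1 - t for t in y_true[18:]]

print(overall_accuracy(y_true, y_pred))            # 0.9
print(accuracy_by_group(y_true, y_pred, groups))  # {'A': 1.0, 'B': 0.0}
```

A 90% overall accuracy looks healthy, yet the model is wrong for every member of group B.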

How Can Bias Be Mitigated In An AI Software System?

The rules engine, also known as a rule-based system or an expert system, is a type of algorithm that uses a predefined set of rules to make decisions or solve problems. These rules, often represented as IF-THEN statements, capture domain-specific knowledge and expertise in a structured, organized way. In real-world AI applications, the model's predictions are often fed into a rules engine, where business decisions are made about how to use or augment each prediction. If bias is detected, new rules can be encoded to override it.
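A minimal rules-engine sketch, assuming each rule is an IF (condition) / THEN (action) pair applied in order to the model's score. Both rules, their names, the features they read, and the 0.5 approval threshold are hypothetical examples, not rules from any real system. The engine returns the names of the rules that fired so they can be logged for auditing.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[dict, float], bool]  # IF: inspects the features and current score
    action: Callable[[dict, float], float]    # THEN: returns an adjusted score


def apply_rules(features: dict, score: float, rules: list[Rule]) -> tuple[float, list[str]]:
    """Apply rules in order; return the final score and the names of the rules that fired."""
    fired = []
    for rule in rules:
        if rule.condition(features, score):
            score = rule.action(features, score)
            fired.append(rule.name)  # recorded so every adjustment is auditable
    return score, fired


RULES = [
    # Hypothetical business rule: never auto-approve when income is missing.
    Rule(
        name="missing_income_floor",
        condition=lambda f, s: f.get("income") is None,
        action=lambda f, s: 0.0,
    ),
    # Hypothetical mitigation rule, added after monitoring showed the model
    # under-scoring applicants with short credit histories: lift borderline
    # scores in that segment up to an assumed approval threshold of 0.5.
    Rule(
        name="short_history_borderline_lift",
        condition=lambda f, s: f.get("credit_history_months", 0) < 12 and 0.4 <= s < 0.5,
        action=lambda f, s: 0.5,
    ),
]

print(apply_rules({"income": None}, 0.9, RULES))
print(apply_rules({"income": 40000, "credit_history_months": 6}, 0.45, RULES))
print(apply_rules({"income": 40000, "credit_history_months": 24}, 0.45, RULES))
```

Keeping rules as named data rather than scattered `if` statements makes it straightforward to log which overrides touched each prediction.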

Many AI systems include an automated pipeline for collecting new data and retraining the model on it, so the model reflects the freshest information. This keeps the model healthy and performant. The same retraining loop can be used to reduce bias in models: the retraining data can be collected in a targeted way, providing more data or better examples in the areas where the model is failing.
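One simple way to make data collection "targeted" is to spend more of the collection budget where the model errs most. The sketch below assumes a proportional-to-error heuristic over hypothetical segments (which could be demographic groups or feature slices); it is one possible heuristic, not a prescribed method, and the monitoring log is made up.

```python
from collections import defaultdict


def error_rate_by_segment(rows):
    """Share of logged predictions that disagreed with the eventual label, per segment."""
    errors, totals = defaultdict(int), defaultdict(int)
    for r in rows:
        totals[r["segment"]] += 1
        errors[r["segment"]] += int(r["prediction"] != r["label"])
    return {s: errors[s] / totals[s] for s in totals}


def allocate_collection_budget(error_rates, budget):
    """Split a data-collection budget across segments in proportion to error rate.

    Counts are rounded, so they may not sum exactly to `budget`.
    """
    total_error = sum(error_rates.values())
    if total_error == 0:
        share = budget // len(error_rates)  # no errors anywhere: split evenly
        return {s: share for s in error_rates}
    return {s: round(budget * e / total_error) for s, e in error_rates.items()}


# Made-up monitoring log: segment A has 1 error in 10, segment B has 3 in 10.
rows = (
    [{"segment": "A", "prediction": 1, "label": 1}] * 9
    + [{"segment": "A", "prediction": 1, "label": 0}]
    + [{"segment": "B", "prediction": 0, "label": 0}] * 7
    + [{"segment": "B", "prediction": 0, "label": 1}] * 3
)

rates = error_rate_by_segment(rows)
print(allocate_collection_budget(rates, budget=100))  # {'A': 25, 'B': 75}
```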

The human-in-the-loop component provides the ability to quality-check the AI's output before it is used. This functionality also needs to be part of the software design so that human decision data can be collected, especially in use cases that cannot or should not be fully automated. AI is often most effective as a human assistant, not a complete task automation tool. Building in infrastructure to support human interaction and decision making allows us humans to override bias or harmful patterns when they are detected.
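A sketch of a review queue that routes uncertain predictions to a person and records the decision. It assumes a hypothetical uncertainty band of [0.4, 0.6) for routing, and the class and field names are illustrative; real systems choose routing criteria to fit the use case.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ReviewItem:
    prediction_id: str
    model_score: float
    human_decision: Optional[str] = None  # filled in by the reviewer
    reviewer: Optional[str] = None


@dataclass
class ReviewQueue:
    # Hypothetical uncertainty band: scores in [low, high) are sent to a person.
    low: float = 0.4
    high: float = 0.6
    pending: list[ReviewItem] = field(default_factory=list)
    decided: list[ReviewItem] = field(default_factory=list)

    def route(self, prediction_id: str, score: float) -> str:
        """Auto-decide confident scores; queue uncertain ones for human review."""
        if self.low <= score < self.high:
            self.pending.append(ReviewItem(prediction_id, score))
            return "needs_review"
        return "approve" if score >= self.high else "deny"

    def record_decision(self, prediction_id: str, decision: str, reviewer: str) -> ReviewItem:
        """Store the human decision so it can be logged alongside the model output."""
        for i, item in enumerate(self.pending):
            if item.prediction_id == prediction_id:
                item.human_decision = decision
                item.reviewer = reviewer
                self.decided.append(self.pending.pop(i))
                return item
        raise KeyError(prediction_id)


queue = ReviewQueue()
print(queue.route("p-1", 0.91))  # approve
print(queue.route("p-2", 0.52))  # needs_review
print(queue.route("p-3", 0.12))  # deny
queue.record_decision("p-2", decision="deny", reviewer="reviewer-7")
print(queue.decided)
```

Because each decided item keeps both `model_score` and `human_decision`, disagreements between the model and reviewers can later be analyzed like any other monitored signal.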

How Can An AI Algorithm Be Biased, Unfair, or Unethical?

Most often, bias is not hard-coded, in the sense of being explicitly written by a software engineer or data scientist. Instead, the algorithms learn it automatically from large datasets. These AI models learn patterns from a large set of data and encode those patterns mathematically. The mathematical patterns are then saved as a "model" and used to make inferences on new data.

Under this paradigm, models can learn harmful and unfair patterns simply because those patterns exist in the data they are given. They then perpetuate these harmful patterns by relying on them when making predictions. With so much data widely available on the internet, even old patterns and historical biases can get encoded into these models if we're not careful.
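A deliberately tiny illustration with made-up data: no line of the code below says "deny applicants from the south neighborhood", yet the "model" learns exactly that from historical decisions. The neighborhood feature also stands in for how a seemingly neutral input can act as a proxy for a protected attribute. This is a toy frequency table, not a realistic learning algorithm.

```python
from collections import defaultdict


def train_frequency_model(history):
    """'Train' by memorizing the historical approval rate for each neighborhood."""
    approved, total = defaultdict(int), defaultdict(int)
    for row in history:
        total[row["neighborhood"]] += 1
        approved[row["neighborhood"]] += row["approved"]
    return {n: approved[n] / total[n] for n in total}


def predict(model, applicant, threshold=0.5):
    """Approve (1) if the learned approval rate for the neighborhood clears the threshold."""
    return int(model[applicant["neighborhood"]] >= threshold)


# Made-up historical decisions in which one neighborhood was mostly denied.
history = (
    [{"neighborhood": "north", "approved": 1}] * 8
    + [{"neighborhood": "north", "approved": 0}] * 2
    + [{"neighborhood": "south", "approved": 1}] * 2
    + [{"neighborhood": "south", "approved": 0}] * 8
)

model = train_frequency_model(history)
print(model)                                      # {'north': 0.8, 'south': 0.2}
print(predict(model, {"neighborhood": "south"}))  # 0: the historical pattern is reproduced
```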

When thinking about the patterns that get encoded into AI algorithms, there are two kinds to consider: explicit and implicit. Explicit patterns are hard-coded rules. They are a purposeful choice by an organization and are typically represented as IF-THEN statements in code. Implicit patterns are learned by the model from the data provided to it.

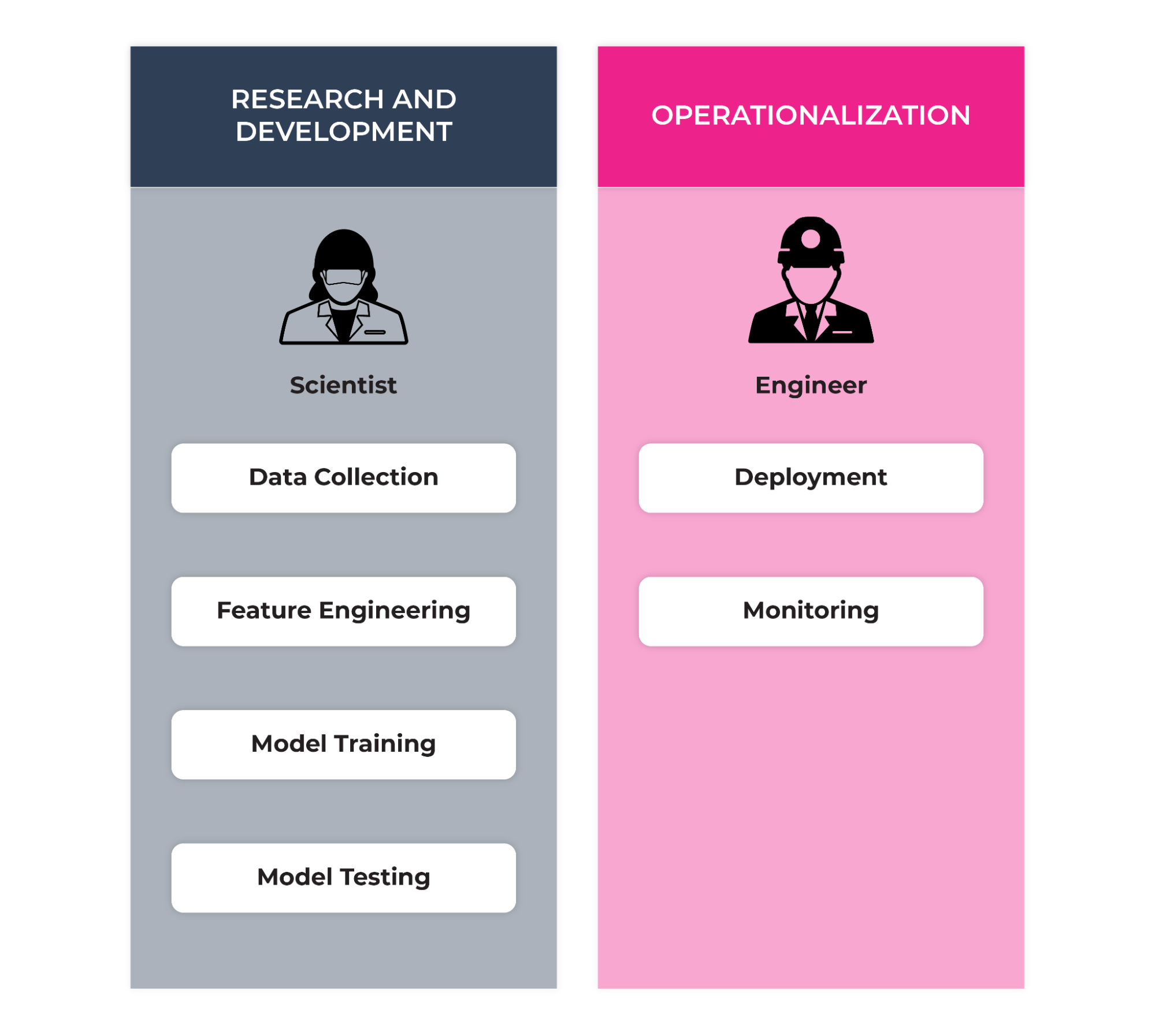

AI Development Lifecycle

To add further context to this discussion, it is helpful to break down the AI project lifecycle to understand where bias or quality issues could be introduced. There are two core phases of the AI project lifecycle to focus on: Research and Development, and Operationalization.

Research and Development

In the R&D phase, scientists or researchers work to create the model. They collect raw data, transform the data into meaningful model features, experiment with and test various modeling approaches and parameters, and evaluate the model's performance based on its ability to optimize a chosen outcome. During these steps, model creators can take many measures to prevent and test for bias in their model. They work to collect unbiased data and carefully measure model performance across protected demographics to ensure fairness. However, bias can still creep in, which is why we want to monitor and track the model's behavior once it is live.

Operationalization

Once the model is created and we have our good robot, the project moves into the next phase, where said good robot is operationalized. That's where the engineers come in. This phase focuses on transforming the chosen model into a practical, functional system that serves the model's predictions live to end users who can access and rely on them. This process involves constructing software systems that integrate the model's core functionality and adhere to best practices for production code, so that the system is scalable and maintainable. This is where ethical AI by design comes in: if designed with ethical AI in mind, the system can not only produce predictions from the model but also safeguard the algorithms that produce them.

An auditable AI system collects data from the model itself as well as from the explicit decisions about how the model's output is used. This allows for a holistic view of the algorithm's behavior and facilitates monitoring the system as a whole.

This algorithmic transparency should be at the forefront of any AI system's design from the start. The ability to measure and mitigate bias needs to be baked into the software, not added as an afterthought. If an algorithm with potential bias is deployed without these mechanisms in place, it will be difficult to detect and correct any harmful patterns.

Conclusion

Many AI systems will likely need to be audited at some point, whether for bias or simply for interpretability or quality. We can get ahead of this by thoughtfully designing infrastructure that provides enough visibility into the data and enough opportunity for algorithm improvements. Let's keep those robots from going off the rails.

For deeper reading on this subject, here are a few of my favorite books and resources on ethical AI:

- A Summary and Review of The Ethical Algorithm by Michael Kearns and Aaron Roth

- Invisible Women: Data Bias In a World Designed for Men by Caroline Criado Perez

- Weapons of Math Destruction by Cathy O'Neil

- Ethical Machines: Your Concise Guide To Totally Unbiased, Transparent and Respectful AI by Reid Blackman