How To Use Annotations To Collect Human Feedback On Your LLM Application


Cast your mind back to the early days of mainstream AI development – a whopping seven years ago. NVIDIA stock was just over $1 per share. “Transformers” meant Optimus Prime, not a world-changing technical leap forward. And the primary way of evaluating AI applications was collecting manual human feedback.

Since those days, programmatic and LLM-based evaluations have become commonplace, and we’ve drastically reduced our reliance on human labelers. At the same time, however, the rise of reinforcement learning from human feedback (RLHF) has popularized new systems for collecting human feedback en masse.

Whether you’re looking to hand-label your data to weed out especially subtle response variations, curate the perfect set of examples for few-shot prompting, or log real-time user feedback from your live app, a robust system for capturing and cataloging human annotations is critical.

Let’s take a look at how our latest update to Phoenix allows you to do just that.

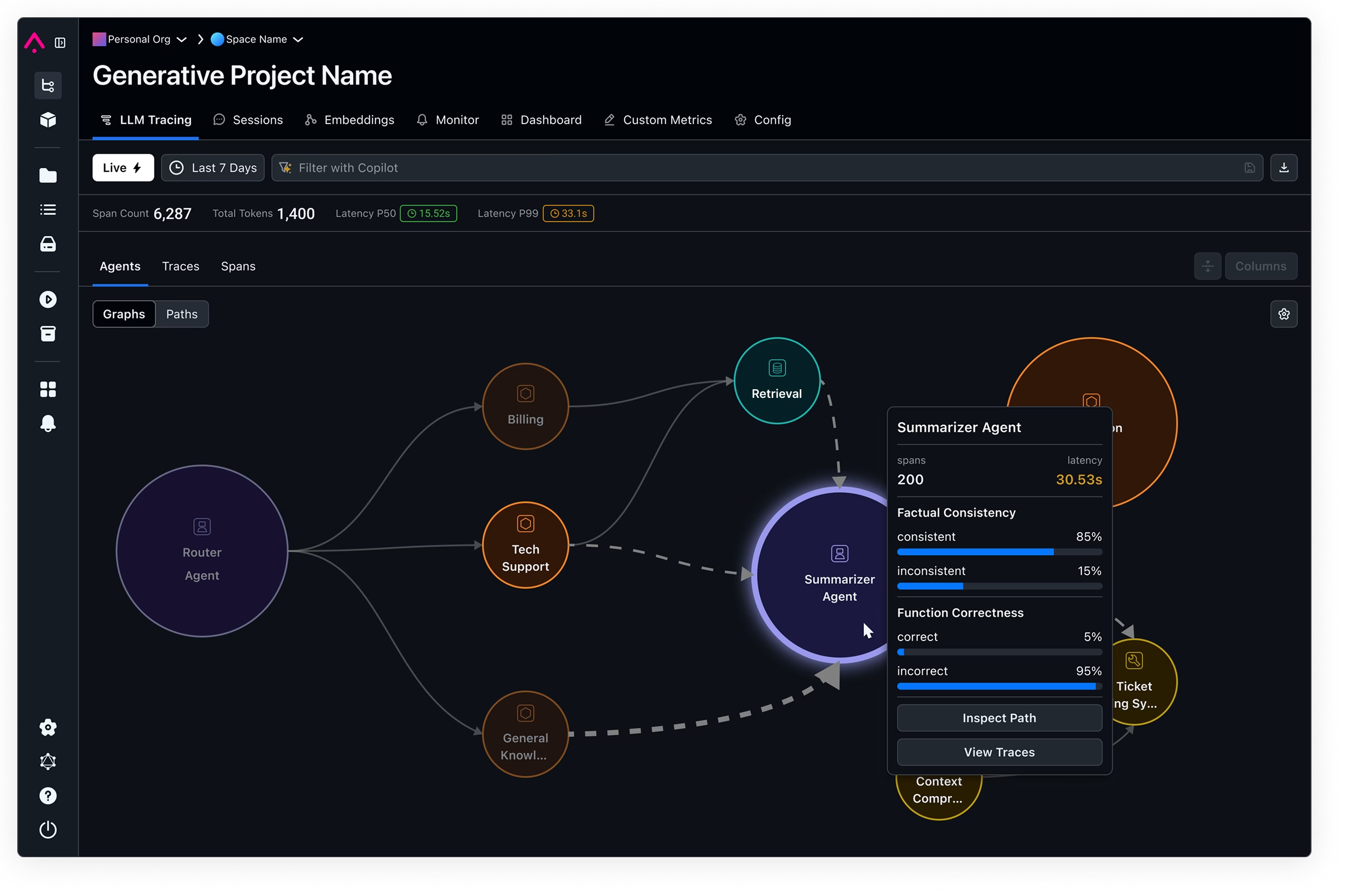

Annotations

Annotations make it simple to add human feedback to spans and traces in your LLM applications. You can add them through the Phoenix UI, our SDKs, or the API.

To keep our UI clean and easy to use, we’ve now grouped human annotations and our existing evaluations into a new column called “Feedback.” Annotations and evaluations in this section will be rolled up into top-level metrics within Phoenix, and can be used to filter spans and traces in the UI or programmatically.

Creating Datasets Using Annotations

Annotations also work extremely well with the Datasets feature we launched last month. Want to create a dataset of all the 👍 responses captured in your application to fine-tune your model?

Simply filter on that annotation, select all rows, then add them to a dataset.

Then export your new dataset to examine further or power your fine-tuning flywheel.
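If you would rather apply the same filter in code, the idea reduces to a few lines. The sketch below assumes you already have span records paired with their annotations as plain dictionaries; the record shape, the `user_feedback` annotation name, and the `thumbs_up` label are hypothetical, not Phoenix's export format. It keeps only the positively annotated records and writes them as JSON Lines, a common input format for fine-tuning jobs.

```python
import json
from typing import Any, Iterable


def select_by_annotation(
    records: Iterable[dict[str, Any]],
    annotation_name: str,
    label: str,
) -> list[dict[str, Any]]:
    """Keep records that carry an annotation with the given name and label.

    Hypothetical record shape:
    {"input": ..., "output": ..., "annotations": [{"name": ..., "label": ...}]}
    """
    selected = []
    for record in records:
        for annotation in record.get("annotations", []):
            if annotation.get("name") == annotation_name and annotation.get("label") == label:
                selected.append(record)
                break  # one matching annotation is enough
    return selected


def to_jsonl(records: Iterable[dict[str, Any]]) -> str:
    """Serialize input/output pairs as one JSON object per line."""
    return "\n".join(
        json.dumps({"input": r["input"], "output": r["output"]}) for r in records
    )


spans = [
    {"input": "Hi", "output": "Hello!",
     "annotations": [{"name": "user_feedback", "label": "thumbs_up"}]},
    {"input": "2+2?", "output": "5",
     "annotations": [{"name": "user_feedback", "label": "thumbs_down"}]},
]
positives = select_by_annotation(spans, "user_feedback", "thumbs_up")
print(to_jsonl(positives))
```

Filtering on both name and label matters once a span carries several annotations (for example a human 👍 alongside an LLM correctness score).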

How To: Log Annotations in the UI

Logging annotations in the Phoenix UI is as simple as selecting your trace, then selecting the “Annotate” button on the top right.

How To: Log Annotations Programmatically

You can log annotations programmatically by sending a POST request to https://app.phoenix.arize.com/v1/span_annotations (NOTE: If you are self-hosting Phoenix, replace app.phoenix.arize.com with your Phoenix base URL). If you’re not using our Python SDK, you also need to authenticate the request yourself: the SDK reads your key from the PHOENIX_CLIENT_HEADERS environment variable (“api_key=…”), so send the equivalent api_key header with your request.

This endpoint takes the following parameters in the request body:

```
{
  "span_id": "67f6740bbe1ddc3f",  # the id of the span you want to attach the annotation to
  "name": "correctness",          # the name of your annotation
  "annotator_kind": "HUMAN",      # either HUMAN or LLM
  "result": {
    "label": "correct",              # the text value of your annotation
    "score": 1,                      # a score associated with your annotation
    "explanation": "it is correct"   # the explanation behind your annotation
  }
}
```
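The request can be sent with nothing but the Python standard library. This is a minimal sketch, not the official client. It assumes the endpoint accepts annotations wrapped in a `data` list (as the Phoenix REST API does in recent versions; check the API reference for yours) and that the key travels in an `api_key` header. `build_span_annotation` and `make_annotation_request` are hypothetical helper names. Building the request separately from sending it lets you inspect the payload first.

```python
import json
import urllib.request
from typing import Any, Optional

# Replace with your own Phoenix base URL when self-hosting.
PHOENIX_BASE_URL = "https://app.phoenix.arize.com"


def build_span_annotation(
    span_id: str,
    name: str,
    label: Optional[str] = None,
    score: Optional[float] = None,
    explanation: Optional[str] = None,
    annotator_kind: str = "HUMAN",
) -> dict[str, Any]:
    """Build one annotation object with the fields listed above."""
    if annotator_kind not in ("HUMAN", "LLM"):
        raise ValueError("annotator_kind must be HUMAN or LLM")
    result = {"label": label, "score": score, "explanation": explanation}
    return {
        "span_id": span_id,
        "name": name,
        "annotator_kind": annotator_kind,
        # Drop result fields the caller did not set.
        "result": {k: v for k, v in result.items() if v is not None},
    }


def make_annotation_request(
    annotations: list[dict[str, Any]],
    api_key: Optional[str] = None,
    base_url: str = PHOENIX_BASE_URL,
) -> urllib.request.Request:
    """Wrap annotations in a "data" list (assumed body shape) and build a POST."""
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["api_key"] = api_key  # assumed header name for hosted Phoenix
    body = json.dumps({"data": annotations}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/v1/span_annotations",
        data=body,
        headers=headers,
        method="POST",
    )


annotation = build_span_annotation(
    "67f6740bbe1ddc3f", "correctness",
    label="correct", score=1, explanation="it is correct",
)
request = make_annotation_request([annotation], api_key="YOUR_API_KEY")
# To actually send it:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

Because the body is a list, you can batch several annotations (for example, a whole page of user ratings) into a single request.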

Getting the span_id value is the only potentially tricky part here. If you’re using our Python or TS SDKs, you can read it from the current OpenTelemetry span:

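```python
from opentelemetry import trace

# Call this while the span you want to annotate is active
span = trace.get_current_span()

# OpenTelemetry stores the span ID as a 64-bit integer; convert it to 16 hex characters
span_id = span.get_span_context().span_id.to_bytes(8, "big").hex()
```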
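The conversion in that snippet renders OpenTelemetry's 64-bit integer span ID as 16 lowercase hex characters. One pitfall worth guarding against: when no span is active, `trace.get_current_span()` returns an invalid placeholder span whose ID is 0, which yields an all-zero ID that matches nothing in Phoenix. A stdlib-only sketch of the conversion with that check (`span_id_to_hex` is a hypothetical helper, not part of any SDK):

```python
def span_id_to_hex(span_id: int) -> str:
    """Render a 64-bit OpenTelemetry span ID as 16 lowercase hex characters."""
    if span_id == 0:
        # OpenTelemetry uses 0 as the invalid span ID (no active span).
        raise ValueError("no active span: span ID is 0")
    if not 0 < span_id < 2**64:
        raise ValueError("span ID must fit in 64 bits")
    # Same result as span_id.to_bytes(8, "big").hex()
    return format(span_id, "016x")


print(span_id_to_hex(0x67F6740BBE1DDC3F))  # 67f6740bbe1ddc3f
```

Failing loudly here is usually better than posting an annotation that silently attaches to nothing.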

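```typescript
import { trace } from "@opentelemetry/api";

async function chat(req, res) {
  // ...
  // spanId is already a 16-character hex string in the JS API
  const spanId = trace.getActiveSpan()?.spanContext().spanId;
}
```

If you’re logging annotations from a backend application, where user feedback arrives in a later request than the one that generated the response, you need to persist the span_id on your own. Here’s a diagram of what this type of architecture often looks like: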

The span_id is retrieved during the initial generation phase and attached to the response sent to the frontend. If the user later provides feedback, the frontend sends the span_id along with that feedback to the backend, which logs it as an annotation.
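The flow just described can be sketched as two backend handlers. Everything here is hypothetical: `generate_reply` stands in for your traced LLM call, the handlers stand in for your web framework's routes, and the `user_feedback` name and thumbs-up/down mapping are illustrative choices. The point is that the span_id travels to the frontend with the reply and comes back with the feedback.

```python
from typing import Any


def generate_reply(message: str) -> tuple[str, str]:
    """Hypothetical stand-in for the traced LLM call.

    In a real app this runs inside an OpenTelemetry span, and the span ID
    is read from trace.get_current_span() as shown earlier.
    """
    return f"Echo: {message}", "67f6740bbe1ddc3f"


def handle_chat(message: str) -> dict[str, str]:
    """Return the reply together with the span_id so the frontend can keep it."""
    reply, span_id = generate_reply(message)
    return {"reply": reply, "span_id": span_id}


def handle_feedback(span_id: str, thumbs_up: bool) -> dict[str, Any]:
    """Turn a thumbs-up/down click into an annotation for that span."""
    return {
        "span_id": span_id,
        "name": "user_feedback",
        "annotator_kind": "HUMAN",
        "result": {
            "label": "thumbs_up" if thumbs_up else "thumbs_down",
            "score": 1 if thumbs_up else 0,
        },
    }


response = handle_chat("What is Phoenix?")
# Later, the frontend sends the stored span_id back with the user's rating:
annotation = handle_feedback(response["span_id"], thumbs_up=True)
# annotation can now be POSTed to /v1/span_annotations
```

Keeping the span_id on the client side means the backend needs no extra storage between the two requests; if you would rather not expose it, you could instead store it server-side keyed by your own message ID.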

And You’re Off!

With that, you can now log human feedback into Phoenix. If you run into issues, check out the resources below, or jump into our Slack channel to ask a question.

Human feedback in Phoenix transforms how you evaluate and improve LLM apps. By combining automated metrics with human insights, you can create models that not only perform well but also resonate with users.

We can’t wait to see what you build!

Resources

Additional helpful references:

- Technical Docs

- Example of a chatbot application using programmatic annotations (JS/TS)

- Sign up for Phoenix here