Quick Guide to the EU AI Act for AI Teams

The EU AI Act is the world’s first comprehensive AI regulation, meant to promote responsible AI development and deployment in the European Union (EU). If you’re working with AI and have any interaction with the EU market, the regulations likely apply to you. We took a look at the Act to help unpack the risk categories and emphasize the essential role of observability.

Starting February 2, 2025, the European Union will begin enforcing Chapters I and II of the Act. In short, these chapters cover AI literacy obligations, the definitions used throughout the regulation, and prohibited practices: AI systems that pose an “unacceptable risk” are banned. You can find the full timeline and requirements here.

While the regulations may seem daunting and complex at first glance, the main goal here is to make sure that AI is safe, and everyone’s rights are respected. There are also measures built in that are intended to support innovation, with a particular emphasis on startups.

Note: This blog is intended to provide a general overview of the EU AI Act’s risk categories and potential compliance considerations. The information here is intended for educational purposes only and should not be considered legal advice. Always consult with legal experts for specific compliance requirements.

Will the EU AI Act Affect Me?

Unless you’re working on something “high risk” (which we’ll cover below), you won’t need to worry much. That being said, the EU AI Act applies to a broad range of stakeholders both inside and outside the EU:

- Providers: Anyone who develops AI systems or general-purpose AI models and places them on the market or puts them into service in the EU, regardless of whether they are established or located within the EU. This includes both for-pay and free systems.

- Deployers: Anyone who uses AI systems within the EU. This excludes instances where a natural person is using the AI system for purely personal, non-professional reasons.

- Importers and Distributors: Anyone who brings AI systems into the EU market.

- Product Manufacturers: Anyone who integrates AI systems into their products.

- Authorized Representatives: Anyone who acts on behalf of providers not established in the EU.

Now let’s get into the risk categories…

Risk Categories

The EU AI Act defines “risk” as “the combination of the probability of an occurrence of harm and the severity of that harm.” This definition is important to how the regulation approaches risk assessment. AI systems are categorized by risk level—minimal, limited, high, and unacceptable.

We broke out each category here to give examples, along with suggested best practices, but again, this is based on our reading of the Act and is not legal advice.

Unacceptable Risk AI Systems

AI applications that pose an “unacceptable risk” are banned under the Act starting February 2, 2025, due to their potential for harm. Examples include social credit scoring, predictive policing, subliminal manipulation, and biometric categorization systems based on protected characteristics. To avoid drifting into this category, regularly review system functionality and maintain clear documentation of system limitations and safeguards. You should also consider ethical implications during system design and updates.

High-Risk AI Systems

Most of the text of the Act addresses high-risk systems, which carry the bulk of its obligations. These systems require the most comprehensive compliance measures due to their potential impact on safety or fundamental rights. Compliance involves extensive documentation, monitoring, and risk management. For the list of high-risk use cases, see Annex III.

Examples:

- Healthcare diagnostics

- Autonomous vehicles

- AI-assisted hiring tools

- Public safety systems

- Education or assessment

- Recruitment or performance evaluation

Essential Compliance Elements:

- Establish comprehensive risk management systems

- Implement strong data governance practices

- Maintain detailed technical documentation

- Enable automatic record-keeping

- Ensure human oversight capabilities

- Meet accuracy and cybersecurity standards

General approach toward implementation:

- Conduct regular risk assessments

- Deploy robust monitoring systems

- Create detailed compliance documentation

- Implement continuous logging systems

- Establish human oversight procedures

- Consider using an AI observability platform
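To make the record-keeping and continuous logging items above more concrete, here is a minimal sketch of a structured audit record for a single model decision. The field names, the `screening-v1` label, and the JSON Lines file layout are our own assumptions for illustration; the Act does not prescribe this format, and a production system would likely rely on an observability platform instead.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_audit_record(model_version, features, prediction, reviewer=None):
    """Build one JSON-serializable record describing a single model decision."""
    payload = json.dumps(features, sort_keys=True)
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        # Hash the inputs so the record can be matched to stored data
        # without copying raw (possibly personal) data into the log.
        "input_sha256": hashlib.sha256(payload.encode("utf-8")).hexdigest(),
        "prediction": prediction,
        # None means no human has reviewed this decision yet.
        "human_reviewer": reviewer,
    }


def append_audit_record(path, record):
    """Append the record as one line of JSON (JSON Lines format)."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


if __name__ == "__main__":
    record = build_audit_record(
        model_version="screening-v1",        # hypothetical version label
        features={"years_experience": 4},    # hypothetical input
        prediction="advance_to_interview",   # hypothetical output
    )
    print(json.dumps(record, indent=2))
```

Calling `append_audit_record("audit_log.jsonl", record)` after each prediction would produce an append-only trail that a human reviewer can later annotate or audit.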

Limited Risk AI Systems

These systems are subject to basic transparency measures, particularly when interacting directly with users. Compliance here mainly focuses on ensuring user awareness and proper content labeling.

Examples include:

- Chatbots

- Generative AI tools

- Virtual assistants

- AI content curation systems

- Deep fakes

Key Compliance Considerations:

- If you fall into this category, you’ll need to implement clear AI disclosure notifications. In other words, it should be clear to users that they’re interacting with AI.

- Plan to develop robust content labeling systems

- Create user-friendly transparency documentation

- Set up basic monitoring

- Consider automated labeling for AI-generated content
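As a rough illustration of the disclosure and labeling points above, the sketch below wraps a chatbot reply with an AI-generated flag and shows a disclosure notice on the first turn. The class, the function names, and the disclosure wording are hypothetical; the right wording and placement for your product is a question for your legal team.

```python
from dataclasses import dataclass
from typing import Optional

AI_DISCLOSURE = "You are chatting with an AI assistant."  # hypothetical wording


@dataclass
class LabeledMessage:
    text: str
    ai_generated: bool
    disclosure: Optional[str] = None


def label_reply(model_output: str, first_turn: bool) -> LabeledMessage:
    """Flag model output as AI-generated and attach a disclosure on the first turn."""
    return LabeledMessage(
        text=model_output,
        ai_generated=True,
        disclosure=AI_DISCLOSURE if first_turn else None,
    )


def render(message: LabeledMessage) -> str:
    """Render the message as plain text with its disclosure and label."""
    parts = []
    if message.disclosure:
        parts.append(f"[{message.disclosure}]")
    parts.append(message.text)
    if message.ai_generated:
        parts.append("(AI-generated)")
    return " ".join(parts)


if __name__ == "__main__":
    print(render(label_reply("Hello, how can I help?", first_turn=True)))
    print(render(label_reply("Your order shipped today.", first_turn=False)))
```

Keeping the label in a structured field, rather than only in the rendered text, makes it easier to carry the AI-generated flag through to downstream systems.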

Minimal Risk AI Systems

While formal compliance measures aren’t mandated for AI systems that pose minimal risk, the Act recommends following best practices for transparency and documentation.

Some examples of minimal risk AI systems:

- Video game AI

- Basic spam filters

- Simple recommendation systems

- Weather prediction apps

Suggested Best Practices:

- Maintain basic documentation of system functionality

- Implement standard testing procedures

- Consider voluntary transparency measures
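One way to keep the four tiers above actionable is a simple internal inventory that maps each system to a tier and its headline obligations. The sketch below is only an illustration: the system names are hypothetical, the obligations are paraphrased from this guide rather than from the legal text, and tier assignments should come from legal review, not from code.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Headline obligations per tier, paraphrased from this guide (not the legal text).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited: do not build or deploy"],
    RiskTier.HIGH: [
        "risk management system",
        "data governance",
        "technical documentation",
        "automatic record-keeping",
        "human oversight",
        "accuracy and cybersecurity standards",
    ],
    RiskTier.LIMITED: ["AI disclosure to users", "labeling of AI-generated content"],
    RiskTier.MINIMAL: ["voluntary best practices"],
}

# Hypothetical inventory: system name -> tier assigned after legal review.
INVENTORY = {
    "resume-screener": RiskTier.HIGH,
    "support-chatbot": RiskTier.LIMITED,
    "spam-filter": RiskTier.MINIMAL,
}


def compliance_checklist(system_name: str) -> list:
    """Return the headline obligations for a system in the inventory."""
    return OBLIGATIONS[INVENTORY[system_name]]


if __name__ == "__main__":
    for name, tier in INVENTORY.items():
        print(f"{name} ({tier.value}): {', '.join(compliance_checklist(name))}")
```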

What About General Purpose AI Models?

The EU AI Act also seeks to regulate foundation models, in order to ensure responsible development and deployment. These regulations will apply to U.S. companies that want to operate in the EU market.

The initial guidelines in the Act apply to all providers of general-purpose AI (GPAI) models, particularly those that pose a “systemic risk.” Systemic risk is determined by criteria including the number of parameters, the compute used for training, and other factors outlined in Chapter V. Systemic risk increases with greater model capability.

While there are preliminary guidelines for foundation models in the Act, the European Commission recognized a need for guidelines that are even more comprehensive. To address this, a first draft of the GPAI Code of Practice was released in November 2024. If you’re interested, you can find and download the draft here.

Among other things, the Code of Practice stipulates that GPAI model providers must perform model evaluations. The evaluations must be thorough, scientifically rigorous, ongoing, and should involve both internal and external experts.

The full requirements will be finalized in August 2025.

Why AI Observability is Key

To summarize some key regulatory and compliance points:

- The Act mandates continuous monitoring across multiple dimensions, including performance tracking, data quality assessment, and bias detection, making comprehensive AI observability essential for engineering teams.

- High-risk AI systems require specific measures like mandatory risk management protocols and human oversight, with detailed logging and documentation requirements.

- Organizations that fail to comply with the EU AI Act face severe penalties, including fines of up to €35 million or 7% of global annual revenue (whichever is higher) for the most serious violations, alongside potential system shutdowns and damage to reputation.
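To put the penalty figures in perspective, the short sketch below computes the upper bound on a fine for a given annual revenue. It assumes the cap is the higher of the two figures, which is our reading of the Act's penalty provisions for the most serious violations. The function name is hypothetical, and actual fines are set case by case by the authorities.

```python
FIXED_CAP_EUR = 35_000_000       # fixed ceiling cited above
TURNOVER_SHARE_PERCENT = 7       # percentage of global annual revenue cited above


def max_fine_eur(annual_revenue_eur: int) -> int:
    """Upper bound on a fine, assuming the higher of the two caps applies."""
    revenue_cap = annual_revenue_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, revenue_cap)


if __name__ == "__main__":
    print(max_fine_eur(1_000_000_000))  # 70000000: 7% of revenue exceeds the fixed cap
    print(max_fine_eur(100_000_000))    # 35000000: the fixed cap exceeds 7% of revenue
```

For any company with global annual revenue above €500 million, the percentage-based figure is the larger of the two.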

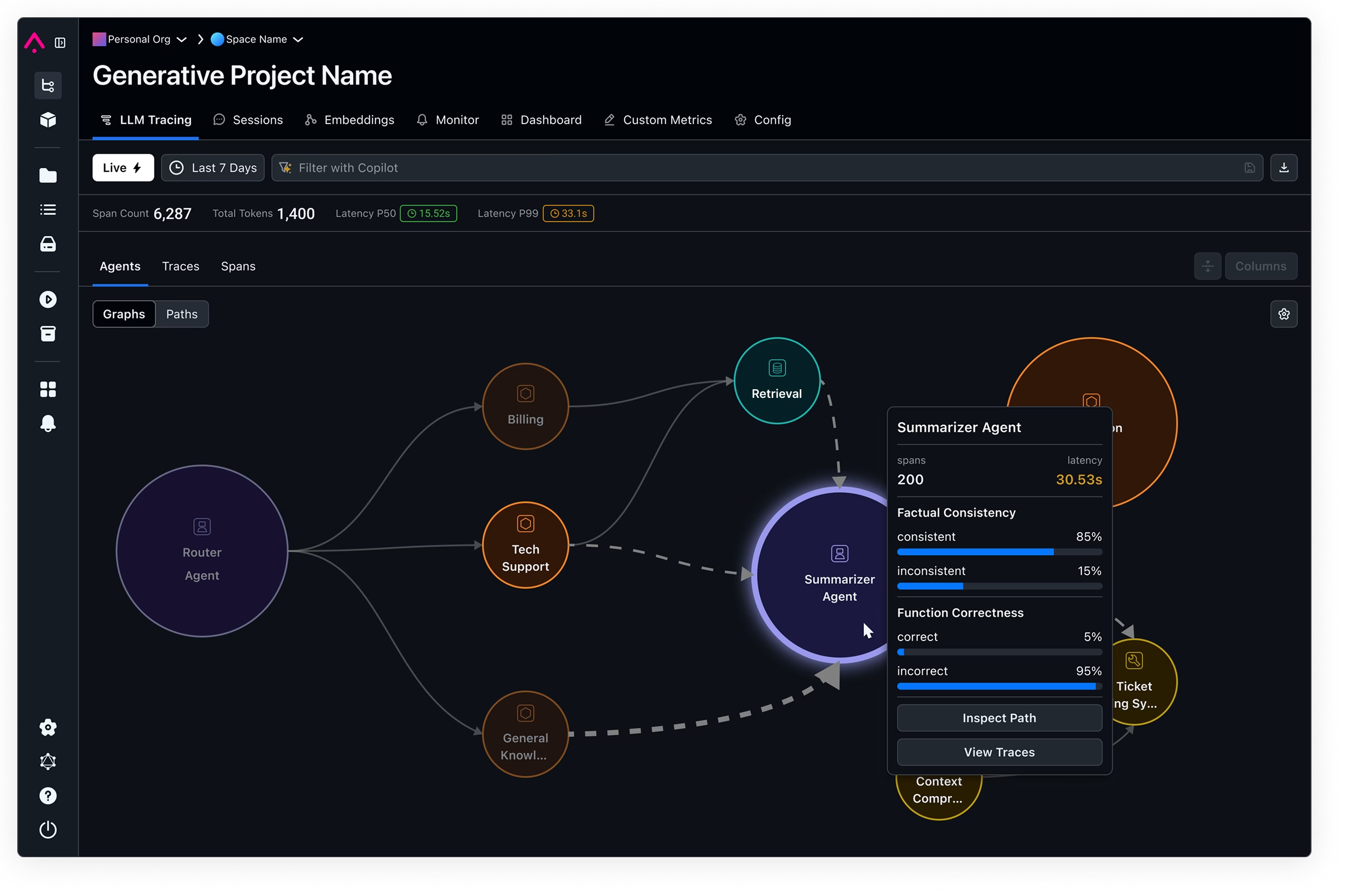

The requirements for continuous performance monitoring, data quality tracking, bias detection, and detailed audit trails make it essential to have an observability system in place for AI. Observability platforms like Arize can get you started on the right path to compliance. With an AI observability and monitoring solution, you can:

- Trace and document decision-making processes to support both transparency requirements and compliance frameworks.

- Automate documentation systems and early warning detection to reduce the engineering burden compared to building in-house solutions.

- Monitor multiple aspects of AI systems, from performance metrics to bias detection, throughout the entire model lifecycle.

- Apply guardrails to better control the output or responses of AI-powered applications and agents.

An observability solution directly supports transparency obligations and facilitates your adherence to compliance frameworks. For example, AI observability can help deployers meet requirements for high-risk applications like biometric ID, while also helping with risk assessment and mitigation for general-purpose AI systems classified as limited risk.
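As one illustration of the bias-detection monitoring mentioned above, the sketch below computes per-group selection rates and the gap between them (often called the demographic parity difference). This is just one common fairness metric that we chose for the example; the Act does not mandate it, and the group labels and the 0.2 alert threshold are hypothetical.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(groups, predictions):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    groups = ["a", "a", "b", "b"]   # hypothetical group labels
    predictions = [1, 1, 1, 0]      # hypothetical model decisions
    gap = demographic_parity_gap(groups, predictions)
    ALERT_THRESHOLD = 0.2           # hypothetical threshold, tune per use case
    if gap > ALERT_THRESHOLD:
        print(f"Selection-rate gap {gap:.2f} exceeds threshold; flag for review")
```

Tracking a metric like this over time, rather than once at launch, is what turns a fairness check into ongoing monitoring.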

Conclusion

Preparing for the EU AI Act requires a systematic approach to understanding your obligations and implementing appropriate measures. While the requirements may seem daunting, they ultimately promote responsible AI development and deployment. By investing in observability now, you can build trust with users while taking the first step toward meeting regulatory requirements.

Start exploring how AI observability can improve the transparency you need throughout your AI development lifecycle with our OSS tool, Arize Phoenix – or book a conversation with our AI Solutions experts.