Introduction

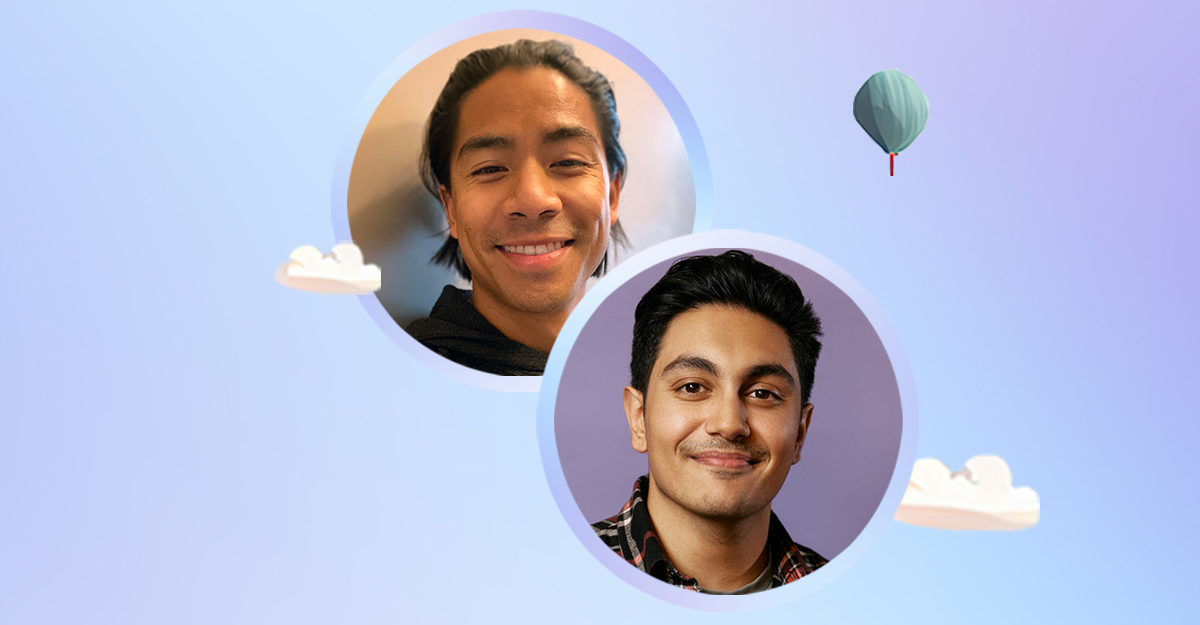

This week, we talk about the implications of text-to-video generation and speculate about the possibilities (and limitations) of this incredible technology with some hot takes. Dat Ngo, ML Solutions Engineer at Arize, is joined by AI Engineer Vibhu Sapra to break down OpenAI’s technical report on its text-to-video generation model, Sora. According to OpenAI, “Sora can generate videos up to a minute long while maintaining visual quality and adherence to the user’s prompt.” At the time of this recording, the model had not been widely released; it was available to red teamers assessing risk, and to artists giving feedback on how Sora could be helpful for creatives.

We also explore EvalCrafter: Benchmarking and Evaluating Large Video Generation Models. This recent paper proposed a new framework and pipeline to exhaustively evaluate the performance of the generated videos, which we look at in light of Sora.

Jason Lopatecki, CEO and Co-Founder at Arize, makes a special guest appearance.

Transcript

Sora Overview and Discussion

Dat Ngo: Welcome to this week’s paper reading. I’m gonna share my screen real quick. We’ll wait a few minutes for folks to hop in the room, and while we’re waiting, maybe we can kick things off with some introductions. My name is Dat Ngo; you might have seen me in previous paper readings.

I’m really excited to talk about today’s paper, which we’ll go into in depth. I’m a solutions architect here at Arize, and I build pretty heavily with our customers at the ground level. I get to have my hand in what people are building, why they’re building it, and the issues they’re coming across.

So I really love our paper readings, where we can go deep into some technical stuff. I have a special guest with me today, Vibhu, and I’ll let him introduce himself.

Vibhu Sapra: I’m Vibhu. I do a lot of LLM stuff and general AI engineering. Today we’re talking through video gen, though I don’t have the deepest background in it. But this is kind of how our conversations go: we find something interesting and we go deep on it. Some of you may have seen me around SF; I help out in the Latent Space Paper Club, and we run another paper club too. We figured Sora was just announced, so let’s talk about it. The technical report had quite a bit of info, and we’ll try to understand what’s going on.

Dat Ngo: I didn’t really know much about video evals or how videos are generated until I started diving into this research, so I’m really excited to share with the audience what we figured out. To get us started, Vibhu is gonna walk us through the Sora paper, we’ll discuss it, and then we’ll save the second half for video evals and how people are thinking about that space.

Vibhu Sapra: Cool, sounds good. Let me share my screen. Oh, looks like we have Jason.

Jason Lopatecki: Hi I’m Jason, Founder at Arize, great to see everyone!

Vibhu Sapra: Awesome. So we’re gonna start going through the Sora paper and the Sora technical report.

One thing I noticed that’s pretty interesting, and that people haven’t really gone over in depth, is that they came out with a technical report on Sora. It tells you a lot about what’s actually going on: how they got there, what the model is like, how people might replicate it, what we can expect, how this will trickle down into open source, and how video gen will affect the industry. So as I go through it, I’ll note some interesting things I found.

The first thing I thought was pretty interesting, and that people aren’t tweeting about enough, is this line: “Our largest model, Sora, is capable of generating a minute of high fidelity video.” Largest compared to what? DALL-E? GPT-3.5? GPT-4? The way text-to-video works means this is a pretty heavy model. We’ll try to break down some rough numbers on how they’re doing this, but it’s pretty big. So if this is their largest model, is it GPT-5 equivalent? Is this the future? I thought that was kind of interesting.

At a high level, it’s a transformer architecture that operates on space-time patches of video and image latent codes. It’s a very large model, and they show that video generation models can be scaled.

They also talk about simulating the physical world, and there are some interesting takes on that. Yann LeCun from Meta is kind of against it; he doesn’t think it’s really a great simulator. Then others, like Jim Fan from Nvidia, think it’s a great simulation.

Jason Lopatecki: That was a heated argument. I don’t know if you saw the thread on that. People have really strong stances here, when it feels like the jury is still out. There’s a world-model view and a not-a-world-model view of how these things work. I tend to be a little more balanced: either side could be right, but I need more information. Very interesting, heated physics-simulator debates, though.

Vibhu Sapra: Yeah, it’s so interesting, because for people who are still watching and haven’t gone too deep: Sora is not out yet. Not many people can play around with it; it’s still being red teamed. They make claims and show demos, and there’s only so much to go off of with just that. So it’s interesting how strong the stances have been. I’m pretty neutral on this as well. For one, I don’t have as much background as these two to make these claims. But also, we just haven’t seen the evidence.

Some interesting takes have come out on this. OpenAI claims it can generate a minute of temporally consistent video, but people have pointed out that across every sample on Twitter and on the website, the longest clips are something like 17 to 20 seconds. So people are asking: this is transformer-based generation, so does it hallucinate after a certain amount of time? Are they using these intervals for a specific reason? Why can’t we see a minute-long video? There are still a lot of gaps to fill before making these assumptions.

But either way, for the casual people, it’s fun to read these takes.

I thought this technical report was really interesting because it has a whole bunch of resources. They really gave an overview of how they arrived at their approach, and they link references for everything they talk about, something like 30 citations in total. If someone’s really interested in text-to-video and how they arrived at this architecture, there are a lot of great resources here. They lay out how previous work used RNNs, then GANs, autoregressive transformers, and diffusion models, and how all of that built into Sora. If you click any of these links, you have a great reference list of papers to go through to better understand the space.

So for someone who wants to shed more light on this, there’s a lot of reading to do here, plus a second round of follow-up reading to understand the complexities. Looking at the general Sora release, not just the technical paper, I did some digging into things like: Who’s the research lead? Who’s the systems lead? Who are the contributors? If you look into these people, you get some of their background: what they’ve focused on and where their research excels. Something interesting is that a lot of the DALL-E team is working on this, which is a key component of Sora; they go into this in the technical report. But there’s also a lot of inference optimization work. That gives you an idea of: okay, this is our largest model, so how do we run it? How are they gonna scale it? Why haven’t they deployed it yet? You get a rough idea of how this generation is going.

And something that surprised me when looking at these people is how much work they’ve done on efficiently deploying inference for transformers. It seems to me like that’s a really big thing, and that’s why they haven’t released it.

How long will this take? People are saying it could take an hour to generate a minute of video. How do you do that right? How do you get it to stick to the prompt? This goes into evals as well: there’s a score for how faithfully the video sticks to the prompt, since this is different from text. So we have to make sure there’s quality. It takes time, inference is really heavy, and what’s the cost of all this? But it’s only been a few days.

Jason Lopatecki: Yeah, one of the things that surprised me, which I hadn’t seen before (maybe I missed it), is a paper on scalable diffusion models with transformers. If you look at that paper, it’s basically a transformer-based diffusion model, which I didn’t even know existed. That paper came out this last year. It replaces the U-Net backbone of typical diffusion models with a transformer. I think the goal here is to be able to use the same scalable training architectures they’ve perfected for other things, now for diffusion models. That was one interesting take I hadn’t seen.

Vibhu Sapra: They go pretty deep into this, actually: how it is transformer-based and how they break the video down. Video is basically just images stitched together, so you take a video and break it down into visual patches. There’s roughly a three-step process, starting with a visual encoder that compresses the video into a lower-dimensional latent space, which is where generation occurs. And a lot of this comes down to: hey, what worked was scaling all of this up. They have an example of this as well. With base compute, it’s not so great. With 4x compute, it gets better. With 32x, better still. The more compute you throw at it, the better it gets.
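The patch idea can be sketched in a few lines. This is a minimal illustration, not OpenAI’s implementation (the report doesn’t publish one): it assumes a single-channel latent video stored as `video[t][y][x]`, non-overlapping patches, and hypothetical patch sizes `pt`, `ph`, `pw`. Each flattened space-time block becomes one token.

```python
from typing import List

# One latent channel for simplicity: video[t][y][x]
Video = List[List[List[float]]]


def spacetime_patches(video: Video, pt: int, ph: int, pw: int) -> List[List[float]]:
    """Split a (T, H, W) latent video into non-overlapping pt x ph x pw blocks.

    Each block is flattened into one vector, i.e. one token for the transformer.
    Assumes T, H and W are divisible by the patch sizes.
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    if T % pt or H % ph or W % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    patches = []
    for t0 in range(0, T, pt):          # walk along time
        for y0 in range(0, H, ph):      # then rows
            for x0 in range(0, W, pw):  # then columns
                patches.append([
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ])
    return patches


# Toy latent: 4 frames of 8x8 values.
video = [[[float(t * 100 + y * 8 + x) for x in range(8)] for y in range(8)] for t in range(4)]
tokens = spacetime_patches(video, pt=2, ph=4, pw=4)
print(len(tokens), len(tokens[0]))  # 8 32
```

The token count is simply (T/pt) x (H/ph) x (W/pw), which is why longer or higher-resolution videos translate directly into longer token sequences.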

They’re really leaning into that: they talk about emergence, the scaling of transformers, and how this works. They have a lot of that in this technical report and, once again, great papers that they reference. Essentially, what’s going on here is similar to Stable Diffusion, where you generate images from noise. What they’re doing is generating videos from noisy patches and filling in the gaps. And the way it learns some of this physics-based simulation is by generating and filling in time sequences of noise.
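For readers new to diffusion, here is the standard DDPM-style forward (noising) process applied to a whole latent video at once. The report doesn’t disclose Sora’s noise schedule, so the linear schedule, its defaults, and the helper names `linear_alpha_bars` and `noise_video` are assumptions for illustration; the trained transformer would learn to reverse this process, turning noise back into clean frames.

```python
import math
import random
from typing import List


def linear_alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Cumulative products of (1 - beta_t) for a linear noise schedule (assumed, not Sora's)."""
    alpha_bars, prod = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def noise_video(latent: List[List[float]], t: int, alpha_bars: List[float],
                rng: random.Random) -> List[List[float]]:
    """Forward process x_t = sqrt(a_t) * x_0 + sqrt(1 - a_t) * eps, applied to every frame at once."""
    a = alpha_bars[t]
    return [[math.sqrt(a) * v + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for v in frame]
            for frame in latent]


rng = random.Random(0)
schedule = linear_alpha_bars()
clean = [[1.0] * 16 for _ in range(8)]          # 8 frames, 16 latent values each
barely_noisy = noise_video(clean, 0, schedule, rng)
pure_noise = noise_video(clean, 999, schedule, rng)
```

Note that every frame is noised (and, at sampling time, denoised) together, rather than one frame after another.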

You’re kind of looking ahead, right? You’re filling in how this video would unfold, given where the future frames go and what the current frame is, and it learns the simulation from that in and of itself. They go deeper into how this all works, depending on how technical you want to get. We’ll skip over it for the sake of time, but it’s all stuff I found interesting that people might want to dive into. I think they gave some interesting insights here into how big this is, how it runs, and what’s actually going on.

So when you’re doing Stable Diffusion-style generation over time for videos, it’s no longer autoregressive. You subsample down into a latent space and generate a bunch of frames with temporal consistency, and these frames aren’t generated sequentially; it all happens at once. So you no longer have the benefit of KV caching, where you can reuse previous frames.

So inference is significantly more expensive as the video gets longer, and there’s more research that has to go into that. That’s why, when you look at the authors, they’re very focused on infra for deploying this, which I thought was kind of interesting.
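To make the cost argument concrete, here’s a back-of-the-envelope counter for attention work. It assumes plain full self-attention over every token at every denoising step; the token, step, and layer counts are hypothetical, and it ignores feed-forward layers and any attention tricks Sora may actually use.

```python
def attention_scores_per_layer(num_tokens: int) -> int:
    """Query-key dot products in one full self-attention layer: every token attends to every token."""
    return num_tokens * num_tokens


def sampling_attention_cost(num_tokens: int, num_denoising_steps: int, num_layers: int) -> int:
    """Rough count of query-key dot products for one full sampling run.

    Assumption: each denoising step re-runs the whole transformer over all tokens,
    because the noisy input changes every step, so keys/values cannot be cached
    across steps the way an autoregressive decoder caches past tokens.
    """
    return attention_scores_per_layer(num_tokens) * num_denoising_steps * num_layers


# Hypothetical sizes, chosen only to show the scaling.
short_clip = sampling_attention_cost(num_tokens=10_000, num_denoising_steps=50, num_layers=24)
long_clip = sampling_attention_cost(num_tokens=20_000, num_denoising_steps=50, num_layers=24)
print(long_clip / short_clip)  # 4.0
```

Because full attention compares every token with every other token, doubling the clip length doubles the tokens and quadruples this count; feed-forward layers, which this sketch leaves out, grow only linearly.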

You can do rough calculations. Let’s say the latent space the video is generated in is 16 by 16, times 30 fps, times 60 seconds. That’s roughly half a million tokens that have to be generated, similar to GPT-4. But now it’s not autoregressive with KV caching, so it’s just compute heavy. We don’t know the exact numbers, so it could be higher or lower, and there are infra tricks they’re doing here. But that’s a deeper overview of what’s actually going on inside Sora before we talk about the fun stuff. Do you guys have any thoughts? That was my deeper take on how this actually works. Is there anywhere else in the paper we should scroll through?
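The napkin math above can be written out directly. The 16 by 16 latent grid, 30 fps, and 60 seconds are the speaker’s guesses rather than published numbers, and `frames_per_patch` is a hypothetical knob for temporal compression (1 means one token per spatial patch per frame).

```python
def latent_tokens(grid_h: int, grid_w: int, fps: int, seconds: int, frames_per_patch: int = 1) -> int:
    """Napkin-math token count for a video generated in a patchified latent space.

    grid_h x grid_w: spatial patches per frame (the speaker's guess is 16 x 16).
    frames_per_patch: hypothetical temporal patch size; 1 means no temporal compression.
    """
    total_frames = fps * seconds
    return grid_h * grid_w * (total_frames // frames_per_patch)


print(latent_tokens(16, 16, fps=30, seconds=60))                      # 460800
print(latent_tokens(16, 16, fps=30, seconds=60, frames_per_patch=2))  # 230400
```

460,800 is the “roughly half a million” figure. Since fps and seconds only enter through their product, what drives the count is total frames: 60 fps for 2 minutes gives the same count as 30 fps for 4 minutes.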

Jason Lopatecki: Yeah, I think that was a good take. A lot of this is decently new to me, so I’m taking it in myself.

Dat Ngo: Yeah, that was a good take, and I like the napkin math, Vibhu. When you think about the inputs to compute, it sounds like pixel resolution is obviously one component, and the number of frames you’re generating is another. That’s probably a function of total frames rather than length of time, because generating 2 minutes at 60 fps is the same number of frames as 4 minutes at 30 fps. So beyond resolution and number of frames, are there any other inputs to compute that people should be thinking about?

Vibhu Sapra: Yeah. A lot of this is pretty abstract, because it’s not an open source model and they don’t share the architecture. What they do share is that there’s a downsampling step: an encoder that takes the video down to a lower-dimensional latent space. They do generation at that dimensionality, then upscale it back up.

So these are really rough numbers, probably a low estimate, or I could just be all off here. I also don’t have a great video gen background; it’s just an understanding of what’s going on. But if you understand it at that level and you’re actually building with it, you can better understand its limitations. That’s why there’s still benefit in understanding what attention is and how LLMs work: you understand the issues you might run into. But napkin math is napkin math, and a lot of this is abstracted away. Some other interesting stuff that’s a little higher level and not as low-level is how they got their training data. There’s some interesting stuff about Unreal Engine, and about labeling their clips.

One thing a lot of people didn’t realize last year is that OpenAI put out an actual research paper for DALL-E 3. They don’t share the architecture, but they do share how they got from DALL-E 2 to DALL-E 3 and what the advancements were. A lot of it is just synthetic data captioning: they have a really good captioning model that they use to caption training data. A lot of Sora seems to be based on that.

And you can see the DALL-E people worked on it. A lot of the text-video clips are captioned similarly to DALL-E, with a GPT-style synthetic data captioner, and they’re able to really leverage that for good video generation. At a higher level, that’s also what made this possible. Another aspect is, as they say with their scaling laws and everything: just throw more compute at it and it gets better. It is transformer-based, and they talk about previous approaches and build on Stable Diffusion work and everything. So that’s where they built from.

There are other capabilities too, for people who haven’t followed what Sora is. There’s some cool stuff like animating DALL-E images, so going from image to video; extending generated videos, forward or backward in time; and looping video generation. Some of this stuff gets pretty trippy. Some people have noticed that as you scroll down, the examples aren’t as good. Was this just to steal some of the hype after Google released a big model? “Here’s what we have ready”… and it’s not super ready.

This kind of thing is also pretty common with Stable Diffusion and video-to-video generation. But it’s pretty interesting that they have all of this in one model; it seems like a pretty big foundational move for them, and they’ve announced it. Some of it is blending videos: going from one video to another and blending them properly. So we have this clip and this clip, we combine them, and it works pretty well.

There’s a lot of talk about how this affects the industry. We’ve gone from 0 to 100, from what wasn’t possible to what is possible. How much does stock footage still matter?

It can also just generate high-res images; you don’t have to do video. So there’s a lot to Sora. I’m curious: outside of the paper, what did you guys find most interesting?

Jason Lopatecki: I’ll share two things. The first is the diffusion models with transformers paper, one of the background papers I was just mentioning. The other one I thought was useful is Patch n’ Pack, from DeepMind folks, by the way. Again, these are from the references, but it gave me an idea of how an image gets turned into a set of patches, and how those patches become latent space embeddings. Like you were mentioning, if you think of each of these little squares as a token, your basic scale is: how many patches do you have in an image, and how many images do you have in a sequence? That determines how many tokens, or inferences, you’re going to do. So that comes back to what you were saying about scale.

Some of the concepts of patches are not new; this is part of the transformer work being done. But I thought these were useful background for understanding how the patches map to the latent space. The other thing I thought was pretty good is this thread, if you haven’t seen it. It’s the hot topic, and it gets into this physics debate where, as you can tell, people who really know this stuff still have very different views. The question is: looking at this video here, the water looks pretty good in its motion. Has it learned the physics? Is it modeling any of the physics behind what’s there? That’s the debate. Jim Fan is taking one side of this: that it is learning some physics. I don’t think he thinks it’s got the physics perfect, but that’s his stance.

Dat Ngo: For people on the podcast who can’t see this, it’s a picture of a glass falling, and it doesn’t shatter. It’s actually in the research paper too, where they note it has limitations as a simulator. So do I think this is just like prompting Unreal Engine 5? I don’t think it is. I think it’s approximating motion from all the videos it’s been trained on. I think it kind of understands video, but I don’t think it understands physics. Anyway, that’s my hot take, but I don’t know if you’d agree. Jason, what are your thoughts, if you wanna share your hot takes?

Jason Lopatecki: I think it learns the model the data has, and insofar as some physics exists in that data, it might learn some of it. I think that’s where Jim Fan’s hot takes come from. On the other side, Yann LeCun has a couple of really big digs at these generative models because there’s no true ground truth: it’s not learning physics in the real world, and what it’s learning doesn’t necessarily match the real world. So there are a couple of sides, and both have some grounding in reality. They’re both amazing in the space, and I think there’s some truth to both. We’ve felt it even with GPT-4, Gemini, and all these other models: they learn some representation and model of the data. It’s not just the next word; there’s something more structural they’re learning. And the question is, are those structural things…

Dat Ngo: That’s a good segue. I do want to save the last 15 minutes for Q&A.

Vibhu Sapra: I have some hot takes. People have brought up other valid points, like that it doesn’t process audio. A lot of studies show that, for real-world understanding in simulation, audio is pretty key, right? I think TikTok has a stat that 88% of users wouldn’t experience it the same way without audio. So there’s a lot of that as well. On the vision transformer side, though, it’s interesting: there’s a tweet from someone at Google joking that the Sora announcement is a rebranding of a bunch of Google research, because it’s all Google stuff. But this also goes both ways. Look at the history of multimodal models.

Google put out the vision transformer, right? Then you have CLIP from OpenAI, which was using CNNs. Eventually, as we got to the latest versions of CLIP, they started using a vision transformer for the encoder. But if you look at Google research in 2022, I believe, they have Flamingo, a vision language model, and they actually switch from the vision transformer back to a CNN. So Google went the other way: they made the vision transformer and used it a few times, then in their latest research with Flamingo they went back to a CNN. Meanwhile, OpenAI is now using the vision transformer.

Dat Ngo: Okay, that’s a good hot take. I feel like they’re always building off of each other. As they should.

Vibhu Sapra: Another take as well. I’ve talked to people at OpenAI who explained how these labs work, and there’s little stuff like not being allowed to see what other teams are working on. People not working on Sora don’t know what the Sora team is doing or how it’s going. So there are probably reasons we can infer for why that is.

Dat Ngo: We have a question from Eric Ringer: Are you seeing examples of geometric/physical implausibility, like the malformed hands in SD images? If not, what are they doing to prevent this?

Jason Lopatecki: Yeah, it’s pretty clear in this picture that you get some of that physical implausibility, which I think is what they’re highlighting: obviously the glass can’t melt into the table. It’s hard to say, because again, we haven’t gotten to play with it much. But I think there are inklings of physics; like Jim Fan says, there are a bunch of examples where things just look possible, too. Obviously there’s the data they trained on, but I feel like there are also some fine-tuning steps. What’s interesting is that in this video, the glass melts on the table, but the ice kind of bounces off the table as it hits. So it’s learning something; I don’t know how much it represents true physics.

Vibhu Sapra: Some people have brought up edge cases, like in self-driving cars, where you get a lot of it right now. It has ray tracing down pretty well, which is really new and really hard to do, and it gets lighting pretty well. But then some basic stuff like this is just the long tail, a bunch of stuff it has to keep figuring out. To directly answer the question a bit: they did say they have a detection classifier. So a lot of this pipeline will probably be abstracted away on their end, in how they serve it. If a generation probably doesn’t match the prompt, they likely have their own checks that we can’t do. Shipping this to production is pretty different from shipping Stable Diffusion images.

Right now we have LCMs, latent consistency models, where you can redo image generation in real time. But this will take time; I think they’ve said it could take 20 to 30 minutes to generate a video. So it’s not the same UX as image gen. These are heavy models. On top of that, they have metadata to show what’s Sora-generated, and classifiers for toxicity. So I think the direct answer is that they’ll deal with some of this. But we do also just see that sometimes fingers are off and sometimes people phase through things. Once again, though, it’s not out. I still have the take that they were stealing some of Gemini’s thunder, and there are no minute-long videos. But it’s impressive.

EvalCrafter: Benchmarking and Evaluating Large Video Generation Models.

Dat Ngo: Okay, I know we’re running short on time, and I definitely want to make it through evals. The first section covered what may be a big step-function change in generating videos. I think we were all impressed with the physics and just how the videos looked. So let’s talk about how people think about evaluating this. As a human, you think it looks good, but are there quantitative measures for this?

So I pulled this paper, EvalCrafter, by researchers in Hong Kong and at the University of Macau. I really like this paper because of how they thought about their benchmark: they set up a bunch of tests, and they walk through exactly how they set them up, for example what kinds of prompts were used to generate the videos. Then they have a framework, and this is a really good way to describe it down here.

They broke the evals down into four categories: video quality, text-video alignment, motion quality, and temporal consistency. We’ll walk through all four, and given time, I’ll just do the TL;DR of what each means and the math behind it. What I really learned is that evaluating video involves a lot of criteria. Once you think about time as a medium, it matters a lot, which makes this different from text-to-text or text-to-image.

Let’s walk through the first set of evals. The first one is video quality, and these researchers looked at it in a few ways. The first is video quality assessment, which is based on another paper, DOVER. DOVER stands for Disentangled Objective Video Quality Evaluator, and it’s essentially a way to measure the quality of the generated video. They broke it down into two parts, VQA_A and VQA_T: the aesthetic score (the A subscript) and the technical score (the T subscript).

The aesthetic score asks, essentially: aesthetically, do I have what I wrote out? If I have a prompt to generate a video, does the video have those overall qualities? The technical score is really about technical distortions. The paper goes into depth on what this actually means, but the TL;DR is: do I have distortions, artifacts, and so on? So it’s using another model to assess the generated video.

The next category inside video quality is the inception score. The inception score, again, uses another model, and it reflects the diversity of the generated videos. There’s a whole paper on the inception score and what it means.

But it’s really trying to evaluate, using what’s called like a cat gan, how diverse what I’m generating is. So the TL;DR is that you can measure how diverse the videos you’re generating are.
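The inception score itself is a short formula: IS = exp(E_x[KL(p(y|x) || p(y))]), where p(y|x) is a classifier’s class distribution for one generated sample and p(y) is the average of those distributions over all samples. This sketch assumes the probabilities were already produced by some pretrained classifier; which model EvalCrafter uses for video isn’t covered here.

```python
import math
from typing import List


def inception_score(class_probs: List[List[float]]) -> float:
    """IS = exp(mean over samples of KL(p(y|x) || p(y))).

    class_probs[i][k] is a classifier's probability that generated sample i
    belongs to class k; the classifier itself is assumed to exist upstream.
    """
    n, k = len(class_probs), len(class_probs[0])
    marginal = [sum(p[j] for p in class_probs) / n for j in range(k)]  # p(y)
    mean_kl = sum(
        sum(pj * math.log(pj / mj) for pj, mj in zip(p, marginal) if pj > 0)
        for p in class_probs
    ) / n
    return math.exp(mean_kl)


confident_and_diverse = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
mode_collapsed = [[1.0, 0.0, 0.0]] * 3
print(inception_score(confident_and_diverse))  # ~3.0, the number of classes
print(inception_score(mode_collapsed))         # 1.0, the minimum
```

The score is high when each sample is classified confidently and the samples spread across many classes; a model that keeps producing the same thing scores 1.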

So that’s the first category: they used VQA_A, VQA_T, and the inception score. The next category is text-video alignment: text as in the prompt, and video as in what I generated. There are quite a few scores in here as well.

We’ll hop through those. The first score under text-video alignment is text-video consistency, which some people call a CLIP score. You obtain a frame-wise image embedding and a text embedding, then compute their cosine similarity. The idea is: how closely does the text align with this particular image embedding? It’s comparing an image embedding to a text embedding and checking whether they’re similar. You’ll start to notice a theme in how these are quantified: a lot of it is comparing embeddings for certain aspects of the video.
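Here’s a minimal sketch of the CLIP-style text-video consistency score described above. It assumes you already have one image embedding per frame and one text embedding for the prompt from a shared image-text encoder; the toy 2-D vectors and the function names are illustrative, not EvalCrafter’s code.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def clip_style_score(frame_embeddings: List[List[float]], text_embedding: List[float]) -> float:
    """Mean cosine similarity between each frame's image embedding and the prompt's text embedding.

    Embeddings are assumed to come from a shared image-text encoder (e.g. a CLIP model).
    """
    return sum(cosine(f, text_embedding) for f in frame_embeddings) / len(frame_embeddings)


prompt = [1.0, 0.0]
frames = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]  # on-topic, half on-topic, off-topic
print(round(clip_style_score(frames, prompt), 3))  # 0.569
```

Averaging per frame means a video that drifts away from the prompt partway through is penalized even if its first frames match well.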

The next one to talk about is image-video consistency, which some people call the SD score. This one calculates the embedding similarity between the generated video and images generated by SDXL. SDXL is a latent diffusion model for text-to-image synthesis, and there’s a whole other paper about it. So essentially, this uses another model. For evaluation, you can do a few things: use another model to check consistency, or compare sets of embeddings. That’s the theme I’m getting from this paper. What this score measures is video-to-image similarity, whereas text-video consistency above compares frame-wise image embeddings to the text. So it’s a little different: text to image, versus image to video.
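The image-video (SD) score can be sketched the same way, comparing frame embeddings to embeddings of reference images. This assumes the reference images were generated by SDXL and embedded upstream; averaging over every frame/reference pair is an assumption of this sketch, not a detail taken from the paper.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def sd_style_score(frame_embeddings: List[List[float]], reference_embeddings: List[List[float]]) -> float:
    """Mean cosine similarity over all (generated frame, reference image) pairs.

    reference_embeddings: embeddings of images a text-to-image model produced
    for the same prompt (assumed to be computed upstream).
    """
    pairs = [cosine(f, r) for f in frame_embeddings for r in reference_embeddings]
    return sum(pairs) / len(pairs)


frames = [[1.0, 0.0], [0.0, 1.0]]
sdxl_refs = [[1.0, 0.0], [1.0, 0.0]]
print(sd_style_score(frames, sdxl_refs))  # 0.5
```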

This next one is actually made up of three scores, and I won’t go into too much depth.

But there’s essentially image segmentation and object detection tracking. Is the thing I’m locating in the right place over time? If things hop around in a video, it makes for an inconsistent video. So you actually track where things are in each frame; it turns out there are models for that, like SAM-Track, and you can use object detection, COCO, and so on. There are ways to quantitatively measure whether the object of interest is jumping around across my frames.
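As a hypothetical illustration of how tracking can be turned into a number (a simple proxy, not one of EvalCrafter’s three scores), the sketch below takes one object’s bounding box in each frame, as produced by whatever detector or tracker you use, and averages the intersection-over-union between consecutive frames.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def track_consistency(boxes: List[Box]) -> float:
    """Mean IoU of one tracked object's box between consecutive frames (1.0 = perfectly steady)."""
    if len(boxes) < 2:
        return 1.0
    overlaps = [iou(prev, cur) for prev, cur in zip(boxes, boxes[1:])]
    return sum(overlaps) / len(overlaps)


smooth = [(0, 0, 10, 10), (2, 0, 12, 10), (4, 0, 14, 10)]      # drifts right a little each frame
teleport = [(0, 0, 10, 10), (50, 50, 60, 60), (0, 0, 10, 10)]  # jumps across the frame and back
print(round(track_consistency(smooth), 3), track_consistency(teleport))  # 0.667 0.0
```

The drifting box keeps a high overlap from frame to frame, while the teleporting one drops to zero, which is the kind of jump that makes a video look inconsistent.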

So that was text to video alignment.

Vibhu Sapra: If I could pop in for a second on that text-video alignment section: it’s a pretty interesting one that I’d recommend people dig into if they’re looking into any of these. A lot of this, I’d say, is pretty abstracted away, in the sense that we’re not building foundation models. There are only so many models that can do this kind of generation, so a lot of this we can’t do. But text-video alignment, comparing image and text embeddings, is how multimodal models work: you add another modality to a language model and do contrastive learning by comparing the embedding of an image with its paired text. So this eval has broader implications.
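The contrastive learning Vibhu mentions can be sketched as a CLIP-style symmetric InfoNCE loss over a batch of matched image-text pairs: each image should score highest against its own caption, and vice versa. The embeddings are assumed to come from image and text encoders upstream, and the default temperature of 0.07 is an assumption for this sketch.

```python
import math
from typing import List


def _normalize(v: List[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _cross_entropy(logits: List[float], target: int) -> float:
    """-log softmax(logits)[target], computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def contrastive_loss(image_embs: List[List[float]], text_embs: List[List[float]],
                     temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: image i should match text i, and text i should match image i."""
    imgs = [_normalize(v) for v in image_embs]
    txts = [_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine(image i, text j) / temperature
    logits = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in txts] for img in imgs]
    image_to_text = sum(_cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_image = sum(_cross_entropy([logits[r][c] for r in range(n)], c) for c in range(n)) / n
    return (image_to_text + text_to_image) / 2


images = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[1.0, 0.0], [0.0, 1.0]]
swapped_texts = [[0.0, 1.0], [1.0, 0.0]]
aligned = contrastive_loss(images, matched_texts, temperature=0.1)
misaligned = contrastive_loss(images, swapped_texts, temperature=0.1)
```

With temperature 0.1, the batch whose pairs line up gets a loss near 0, while the same batch with captions swapped gets a loss of about 10. Training pushes matched pairs together in the shared embedding space, which is exactly what the text-video alignment scores later read back out.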

A lot of the other stuff here is frame by frame: is there consistency? Does the motion seem right? What’s the overall quality? Other papers, like Emu Video from Meta, also have benchmarks around this. There’s also just the vibe check: human annotators measure these things with a qualitative eval. But this is a good one to go into, if anyone’s interested.

Dat Ngo: That’s a good point, and we will talk about that: the researchers also do a qualitative eval. It’s the same thumbs-up, thumbs-down idea, except across a set of categories, and that’s at the end of the paper. I think the next thing is motion quality. The really cool thing is that you can have specific scores for specific types of videos. For instance, if you have humans in your video, there’s the action score. Essentially, there’s another model that understands the kinetics and motions of humans, and it checks: is this human moving the way a normal human would move?

You can actually score and measure these things, so you’ll start to notice that some of these are more specialized. Under motion quality, the action score asks whether the human is actually moving like a human. Then there’s the average flow score. Instead of being human-oriented like the action score, this one asks whether objects behave like they should, so it’s maybe the non-human version: does this generic object flow from one frame to the next the way it should? Then there’s this AC score, too.

To be very honest, I didn’t really understand this one, so we can move on to temporal consistency, which is the last one.

Vibhu Sapra: If you want, I can explain it for a second. Something people find very interesting in Sora is how it handles animation. There are 12 key principles that animated videos do really well, and a lot of that is about things like the velocity of eye movement and how characters move and are animated. A lot of the other video models have not done this well; they get something like a zero on this score.

So this is kind of measuring that. People have noticed that Sora inherently does this very well, even though we don’t have benchmarks for any of it. It’s about the fluidity of motion in the images, which goes into animation: if you’re an animation studio doing a rough draft, how well does your video generation model actually handle motion? That’s roughly what this measures. I’ve never run this eval, but that’s the space, and it’s a key thing that’s been talked about with other video gen models. It’s just not my space.

Dat Ngo: Actually, I think I remember this one now. Say the prompt is “human walking quickly in the frame,” and they end up walking super slowly. Again, I’ve never run this eval before, but the way it’s described, it checks whether the motion amplitude is consistent with the amplitude specified in the text prompt. So if you say a human is walking quickly and they end up walking really slowly, I’m assuming there’s a way to measure that. Again, this paper is also a rabbit hole into probably 50 other papers.
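The average flow score and the amplitude check Dat describes can be sketched together, assuming per-pixel optical flow between consecutive frames has already been estimated by some flow model (not shown). The keyword table, the 2-pixel threshold, and the function names are made up for illustration; this is a crude stand-in for the idea, not the paper’s AC score.

```python
import math
from typing import List, Optional, Tuple

# One flow field per consecutive frame pair: rows of per-pixel (dx, dy) displacements.
FlowField = List[List[Tuple[float, float]]]

# Hypothetical prompt-word -> expected motion level mapping (not from the paper).
SPEED_WORDS = {"quickly": "large", "fast": "large", "rapidly": "large",
               "slowly": "small", "slow": "small", "gently": "small"}


def average_flow_magnitude(flows: List[FlowField]) -> float:
    """Mean per-pixel motion magnitude across all frame pairs (a flow-score-style number)."""
    magnitudes = [math.hypot(dx, dy) for field in flows for row in field for dx, dy in row]
    return sum(magnitudes) / len(magnitudes)


def amplitude_matches_prompt(prompt: str, flows: List[FlowField],
                             threshold: float = 2.0) -> Optional[bool]:
    """True/False if measured motion agrees with the prompt's speed word; None if there is none.

    The threshold (pixels per frame) separating "small" from "large" motion is an arbitrary assumption.
    """
    words = [w.strip(".,!?") for w in prompt.lower().split()]
    expected = next((SPEED_WORDS[w] for w in words if w in SPEED_WORDS), None)
    if expected is None:
        return None
    measured = "large" if average_flow_magnitude(flows) >= threshold else "small"
    return measured == expected


if __name__ == "__main__":
    fast_flow = [[[(3.0, 4.0), (3.0, 4.0)]]]  # every pixel moves 5 px
    slow_flow = [[[(0.3, 0.4), (0.3, 0.4)]]]  # every pixel moves about 0.5 px
    print(amplitude_matches_prompt("A person walking quickly.", fast_flow))  # True
    print(amplitude_matches_prompt("A person walking quickly.", slow_flow))  # False
```

A raw pixel threshold ignores resolution and camera motion; normalizing by frame size or subtracting global motion would be natural refinements.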

Vibhu Sapra: This goes deep, and there’s a lot of overlap between these evals. Text-to-prompt alignment would also cover that, right? The prompt says the human goes slowly; does the human go slowly or not? So the big one for me is text-to-video alignment, because the real thing in video gen is that this stuff takes time. It’s very compute-intensive, which is why a lot of people are optimizing how to ship and deploy it. With Emu Video, which I remember seeing a year ago, there were benchmarks where they got great video that just didn’t align to the prompt. So it doesn’t necessarily match what we’re expecting, right? If you have a great video that doesn’t meet your prompt, there’s not much value in that.

Dat Ngo: I’m going to go through the last category, and then I have a hot take. Temporal consistency is super important in videos, so we’ll cover really quickly what that means. First there’s warping error, which I’d never heard of.

I didn’t know this one super well, but the paper says they calculate pixel-wise differences between a warped image and a predicted image. Maybe it’s future-frame prediction versus something else; I don’t know what a warped image means.

Vibhu Sapra: This is just general temporal consistency. With stable diffusion, you’re generating base images from noise and stitching them together, and in previous work on video gen there was very deep inconsistency from frame to frame, where backgrounds would change. Warping is where, if there’s motion (say I’m moving my hand), in one frame I might have five fingers and in the next I might have none. In the next, my face might warp somewhere.

So this is a measure of temporal consistency. A lot of this is also just a vibe check, or there are hacky routes to do it very efficiently, which would be a tangent into another area of research. A lot of Alibaba and Tencent models (think of the dancing videos and AI influencer videos) are very efficient in that sense: you generate one image, train a model that maps motion, dancing for example, and apply that to your image to move it. That takes a lot less compute, and it solves the warping problem. So that’s another rabbit hole to go down, but it’s just one part of temporal consistency.
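Warping error, as the paper describes it, compares a warped frame against the actual frame. A minimal sketch, assuming integer per-pixel optical flow is already available from some flow estimator (not shown): backward-warp the previous frame with the flow and take the mean absolute pixel difference against the next frame. If the frames are temporally consistent, the motion explains the change and the error is low; content that appears or vanishes without motion (the five-fingers-then-none case) raises it. Real implementations use sub-pixel flow, interpolation, and occlusion masks; this toy version uses nearest-neighbour lookups, and the function names are hypothetical.

```python
from typing import List, Optional, Tuple

Frame = List[List[float]]           # grayscale intensities, frame[y][x]
Flow = List[List[Tuple[int, int]]]  # flow[y][x] = (dx, dy): content at (x, y) in the next frame came from (x - dx, y - dy)


def warp(prev: Frame, flow: Flow) -> List[List[Optional[float]]]:
    """Backward-warp `prev` into the next frame's coordinates (nearest-neighbour, whole-pixel flow)."""
    h, w = len(prev), len(prev[0])
    warped: List[List[Optional[float]]] = []
    for y in range(h):
        row: List[Optional[float]] = []
        for x in range(w):
            dx, dy = flow[y][x]
            sx, sy = x - dx, y - dy  # where this pixel came from in `prev`
            row.append(prev[sy][sx] if 0 <= sx < w and 0 <= sy < h else None)
        warped.append(row)
    return warped


def warping_error(prev: Frame, nxt: Frame, flow: Flow) -> float:
    """Mean absolute pixel difference between the warped previous frame and the actual next frame."""
    warped = warp(prev, flow)
    diffs = [abs(p - q)
             for w_row, n_row in zip(warped, nxt)
             for p, q in zip(w_row, n_row)
             if p is not None]  # skip pixels whose source fell outside the frame
    return sum(diffs) / len(diffs) if diffs else 0.0


if __name__ == "__main__":
    prev = [[0.0, 9.0, 0.0]]        # a bright pixel at x=1 ...
    moved = [[0.0, 0.0, 9.0]]       # ... moves one pixel right
    vanished = [[0.0, 0.0, 0.0]]    # ... or simply disappears
    right_shift = [[(1, 0), (1, 0), (1, 0)]]
    print(warping_error(prev, moved, right_shift))     # 0.0: the motion explains the change
    print(warping_error(prev, vanished, right_shift))  # 4.5: content vanished without motion
```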

Dat Ngo: Yeah, it’s consistency over time. That one is pixel to pixel. For the next one, it turns out you can look at semantic consistency: turn the pixels into some text and see if the text is consistent over time. There’s also face consistency. Like I said, there are very specific metrics for humans. I think this one is like pixel-to-pixel consistency over time, but specific to a human’s face.
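The “turn pixels into text” idea for semantic consistency could look like the sketch below: caption every frame (the captioning model is assumed and not shown), then average the word overlap (Jaccard similarity) between consecutive captions. Word overlap is a deliberately crude, hypothetical stand-in for semantic similarity, not the paper’s metric; a face-consistency check would follow the same consecutive-frame pattern with face embeddings instead of captions.

```python
from typing import List


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two captions (1.0 = same words)."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def caption_consistency(captions: List[str]) -> float:
    """Mean overlap between the captions of consecutive frames."""
    if len(captions) < 2:
        return 1.0
    overlaps = [jaccard(p, q) for p, q in zip(captions, captions[1:])]
    return sum(overlaps) / len(overlaps)


if __name__ == "__main__":
    per_frame = ["a dog runs on grass", "a dog runs on grass", "a cat sits on a sofa"]
    print(caption_consistency(per_frame))  # 0.625: the scene changes in the last frame
```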

Anyway, I just want to give people an idea of the categories people are thinking about. I think the quantitative measures are just harder to do, but maybe I’m wrong. They also did user opinion alignment, which is the feedback piece: qualitative scores from humans on things like video quality. When you, as a human, look at a video, you kind of know when something is off, and this gives them qualitative feedback on that.

But what I really wanted to cover in this paper was how we as humans decide whether a video is good, and then how you get that into math form.

Any other closing thoughts?

Conclusion

Vibhu Sapra: I want to hear the hot takes!

Jason Lopatecki: The only other thought I have is that for creative, generative models, you tend to care more about model evals than about what we call task evals. Task evals are for when people are using this in production: you get proxy metrics for whether it’s working correctly, and they’re normally simplified versions of something. But most of this fits into the model eval category: trying to understand the quality of the model you built in the first place, and comparing models.

When a business or a customer goes to use this and you’re looking at it use by use (did it do what the person wanted?), there’s probably a simpler version of this. But for creative stuff, it tends to matter less: you create a bunch of things, and you can just throw away the output. If you’re integrating GPT-4 into your business systems, you care a lot about not breaking things; with creative image generation, you can just generate another one.

So maybe those task evals matter less there. It’s more about getting the model right, or good enough.

Vibhu Sapra: Yeah, the other thing I’m pretty excited about in that space is that they’re doing pretty good work in video latent space, and that leads to embeddings. I like how Phoenix has the embedding visualization, and I want to see more of that for video. If you’re taking this to prod, I’d love to see video embeddings plotted in Phoenix: actually going through them, seeing what fits, and working out how we build pipelines around this. How do you do the actual pipeline work?

Jason Lopatecki: Well, thanks, everyone, for joining. Great conversation.

Dat Ngo: Big shout-out to our special guest, Vibhu. If you can’t tell, he’s a wealth of information. Thank you so much for joining.