
New In Arize AX: January 2026 Updates

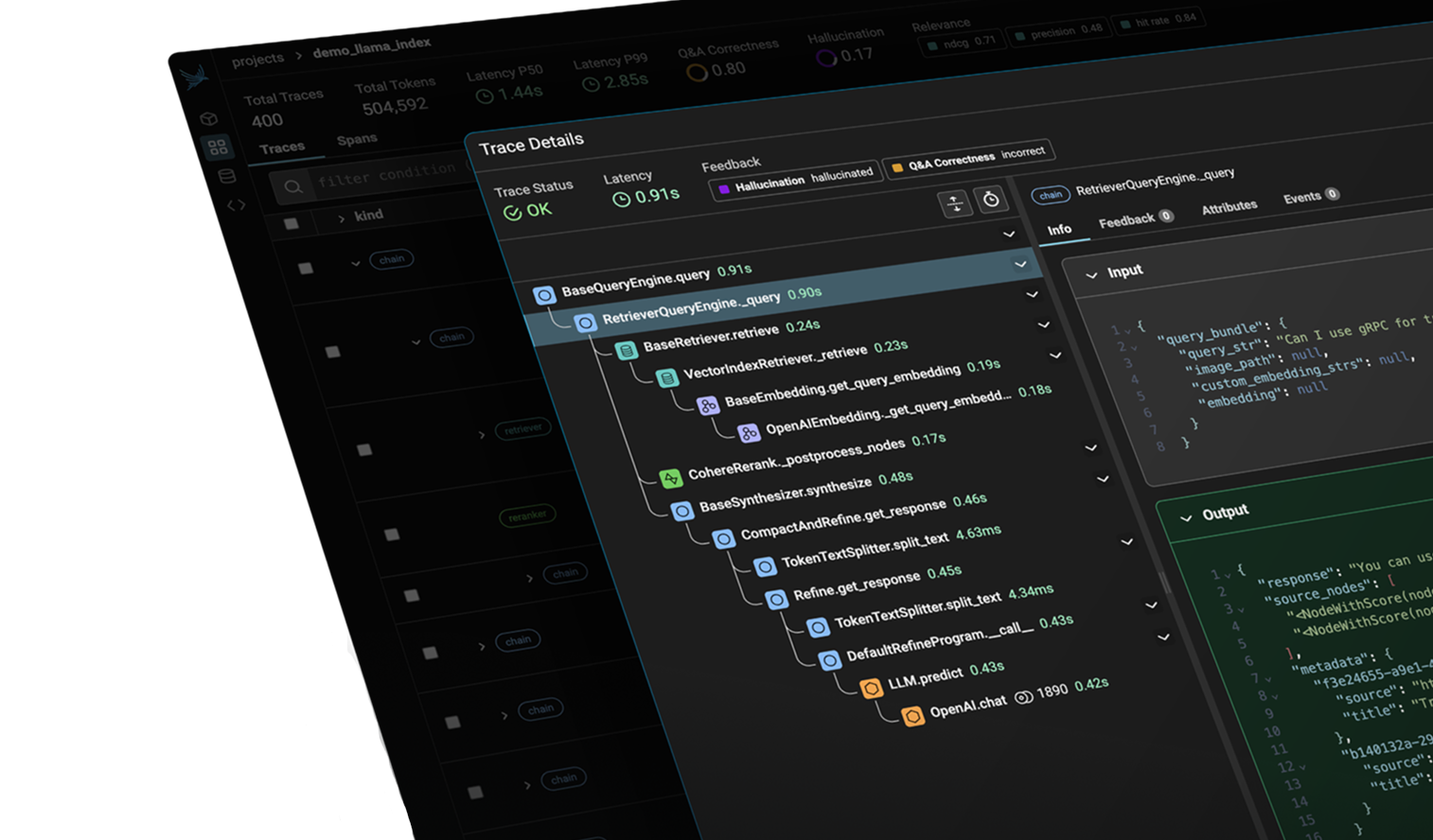

Arize AX shipped a number of updates in January 2026. From an improved evaluator hub to custom prompt release labels, here are some highlights.

Evaluator Hub: Reusable Evaluators

We’re…

- Product Releases