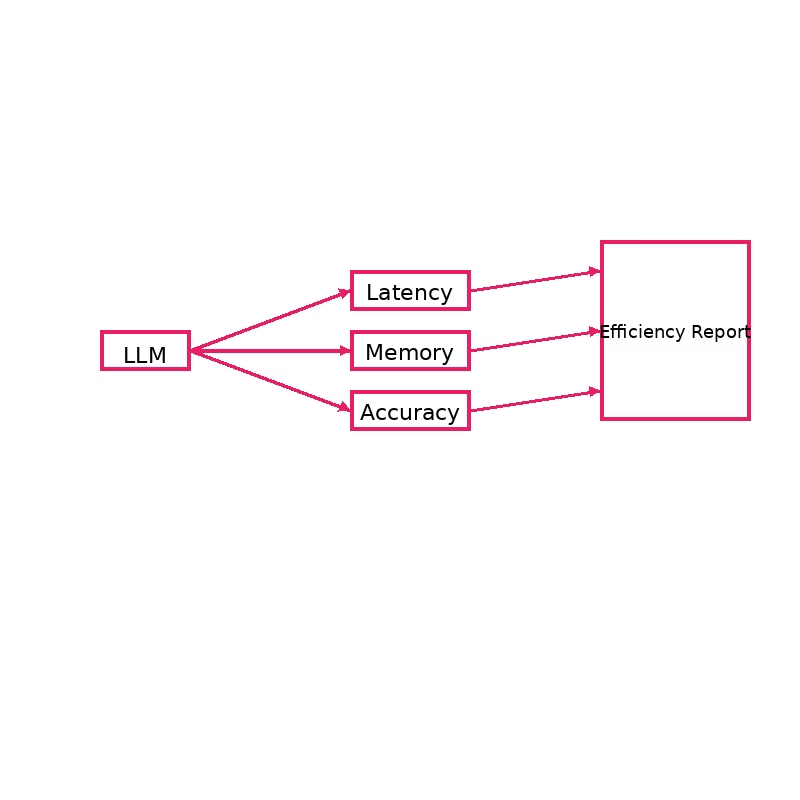

EfficientLLM is a benchmarking initiative that measures how resource-efficient different LLMs are, not just how accurate they are. It evaluates models on speed, memory usage, and cost per query under standardized conditions. For example, EfficientLLM might record inference latency and throughput, peak GPU memory consumption, and the computational cost needed to reach a given quality level. By collecting these metrics across models and tasks, the framework exposes the trade-offs between model size, speed, and task performance. This helps researchers identify which LLMs deliver the best performance per unit of compute, which in turn guides optimization and deployment decisions. In essence, EfficientLLM provides a holistic scorecard that accounts not only for what a model can do but also for how efficiently it does it (paper).
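To make these metrics concrete, here is a minimal sketch of how latency, throughput, peak memory, cost per query, and a quality-per-dollar ratio could be collected for a single model. It is not EfficientLLM's actual harness. `profile_model`, `EfficiencyReport`, `toy_generate`, `quality_score`, and `price_per_hour` are all hypothetical names introduced for illustration. The sketch also assumes a stand-in model that returns a list of tokens, and it uses Python's `tracemalloc` (which tracks Python heap allocations) as a proxy for GPU memory. On real hardware you would query the framework's allocator instead, for example PyTorch's `torch.cuda.max_memory_allocated()`.

```python
import time
import tracemalloc
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EfficiencyReport:
    mean_latency_s: float      # average wall-clock seconds per query
    throughput_tok_s: float    # generated tokens per second across all queries
    peak_mem_bytes: int        # peak Python heap allocation (proxy for GPU memory)
    cost_per_query: float      # hypothetical $ per query derived from an hourly price
    quality_per_dollar: float  # task score divided by cost per query


def profile_model(
    generate: Callable[[str], List[str]],
    prompts: List[str],
    quality_score: float,
    price_per_hour: float,
) -> EfficiencyReport:
    """Hypothetical helper: run each prompt through `generate` and summarize efficiency."""
    latencies: List[float] = []
    total_tokens = 0
    tracemalloc.start()
    for prompt in prompts:
        start = time.perf_counter()
        tokens = generate(prompt)
        latencies.append(time.perf_counter() - start)
        total_tokens += len(tokens)
    _, peak = tracemalloc.get_traced_memory()  # (current, peak) in bytes
    tracemalloc.stop()

    total_time = sum(latencies)
    mean_latency = total_time / len(prompts)
    # Assumption: cost scales linearly with busy time at a fixed hourly price.
    cost_per_query = price_per_hour * mean_latency / 3600.0
    return EfficiencyReport(
        mean_latency_s=mean_latency,
        throughput_tok_s=total_tokens / total_time,
        peak_mem_bytes=peak,
        cost_per_query=cost_per_query,
        quality_per_dollar=quality_score / cost_per_query,
    )


def toy_generate(prompt: str) -> List[str]:
    """Hypothetical stand-in for an LLM: echoes the prompt's words plus an end token."""
    time.sleep(0.01)              # simulate compute time
    _buffer = [0.0] * 10_000      # simulate activation memory
    return prompt.split() + ["<eos>"]


report = profile_model(
    toy_generate,
    ["hello efficient world", "measure me"],
    quality_score=0.8,
    price_per_hour=2.0,
)
print(report)
```

Comparing `quality_per_dollar` (or a similar ratio such as quality per GPU-second) across models is one simple way to express the "performance per unit of compute" trade-off described above. A real benchmark would also fix batch size, sequence lengths, hardware, and warm-up runs so that the numbers are comparable.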

