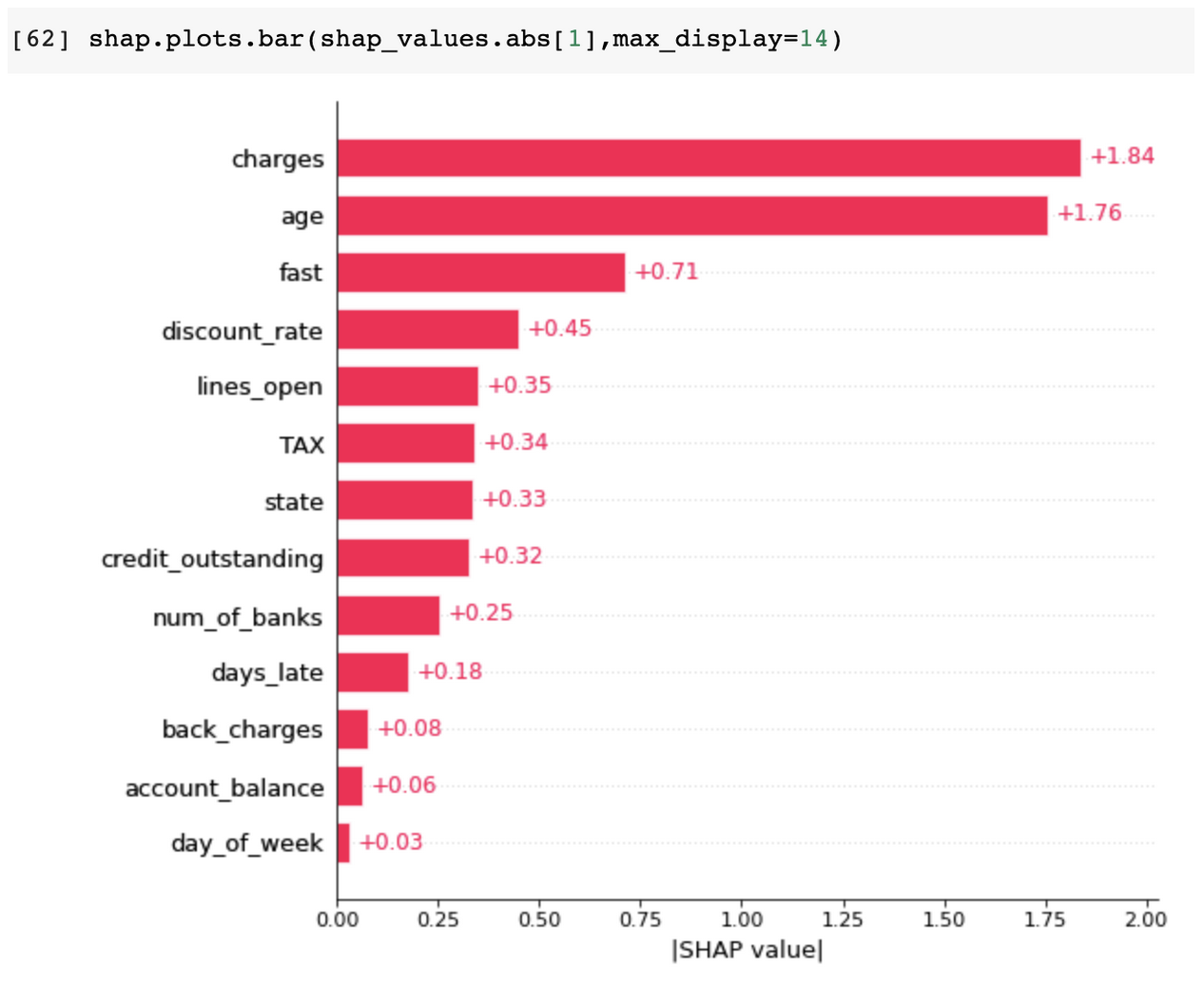

The extent to which the internal mechanics of a machine learning model can be explained in human-understandable terms. Put simply, it is the process of explaining why a model produced a particular output. See ‘SHAP’.
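The ‘SHAP’ entry referenced above builds on Shapley values from cooperative game theory. Each feature is credited with its average marginal contribution to the output across all orderings of the features. The sketch below computes exact Shapley values for a small model using only the Python standard library. It rests on several assumptions. `model` is a hypothetical toy linear scorer. "Removing" a feature is modelled by replacing it with a chosen baseline value. `shapley_values` is an illustrative helper and is not part of the SHAP library's API.

```python
from itertools import combinations
from math import factorial


def model(x):
    # Hypothetical toy model: a fixed linear scoring function over three features.
    return 2.0 * x[0] + 3.0 * x[1] - 1.0 * x[2] + 0.5


def shapley_values(f, x, baseline):
    """Exact Shapley values for f at x.

    Assumption: a feature that is 'absent' from a coalition takes its baseline value.
    """
    n = len(x)

    def value(subset):
        # Features in `subset` keep their real values; all others use the baseline.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return f(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(len(others) + 1):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for combo in combinations(others, size):
                s = set(combo)
                # Marginal contribution of feature i when added to coalition s.
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
# For a linear model each value is approximately w_i * (x_i - baseline_i).
print(phi)
# The values sum to f(x) - f(baseline), up to floating-point rounding.
print(sum(phi), model(x) - model(baseline))
```

Exact computation enumerates every coalition of the other features, so its cost grows exponentially with the number of features. It is practical only for a handful of inputs, which is why SHAP relies on model-specific and approximate explainers for realistic models.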

What is Explainability in Machine Learning?

Explainability
