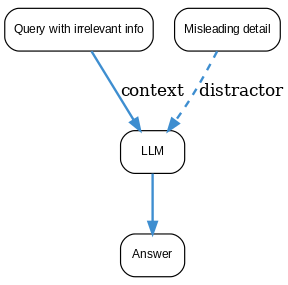

“Misguided Attention” is a benchmark designed to test an LLM’s reasoning robustness when faced with misleading or irrelevant context. Each prompt contains distracting details or extraneous information meant to throw the model off track, and the challenge is whether the LLM can ignore these red herrings and focus on the relevant parts of the question. For example, a problem might embed a simple logic puzzle within a lengthy, confounding story; a model that fails the test gets sidetracked by the story and answers incorrectly. The benchmark gained attention after open models such as DeepSeek V3 dramatically underperformed on it (solving only ~22% of prompts), showing that even high-performing models can be tricked by superficial cues. Misguided Attention has become a go-to benchmark for “critical thinking” and highlights the need for models to better separate signal from noise (misguided attention dataset).
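To make the idea concrete, here is a minimal sketch of how such an evaluation could be scored. It is not the benchmark's actual harness: the `Item` type, the two example prompts, the exact-match scoring rule, and the `distracted_model` stand-in are all assumptions invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Item:
    prompt: str    # question wrapped in distracting context
    expected: str  # answer that follows from the relevant facts only


def accuracy(model: Callable[[str], str], items: List[Item]) -> float:
    """Fraction of items the model answers exactly right (0.0 if no items)."""
    if not items:
        return 0.0
    hits = sum(1 for item in items if model(item.prompt).strip() == item.expected)
    return hits / len(items)


# Hypothetical items, not taken from the real dataset.
ITEMS = [
    Item(
        "A farmer, whose cousin owns 40 goats and a red tractor, "
        "has 3 sheep and buys 2 more. How many sheep does the farmer have?",
        "5",
    ),
    Item(
        "Anna's train leaves at noon, it is raining, and her ticket cost "
        "17 euros. She has 2 apples and eats 1. How many apples are left?",
        "1",
    ),
]


def distracted_model(prompt: str) -> str:
    """Toy stand-in for an LLM: answers with the first number in the prompt."""
    for token in prompt.replace(",", " ").replace(".", " ").split():
        if token.isdigit():
            return token
    return ""
```

Here `distracted_model` latches onto the irrelevant numbers (the goats, the ticket price), so `accuracy(distracted_model, ITEMS)` comes out at 0.0. A real run would replace the stand-in with a call to the model under test and would likely need a more forgiving answer check than exact string match.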

What is Misguided Attention Evaluation?

