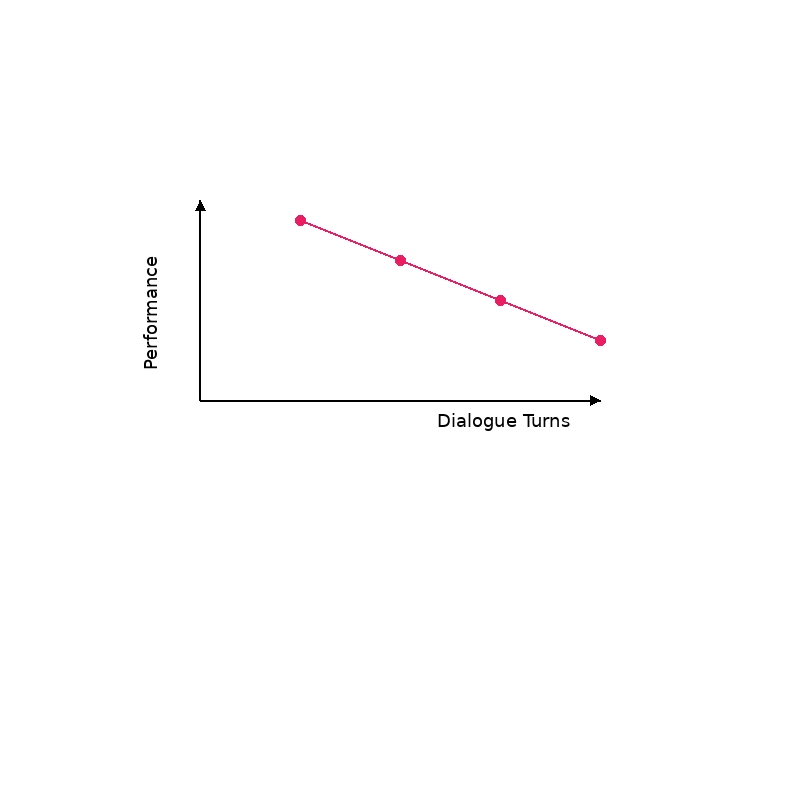

It has been observed that many LLMs “get lost” in extended conversations: their performance drops significantly as the number of dialogue turns increases. A model may answer correctly at first, but after several back-and-forth exchanges its responses become less accurate, more contradictory, or incoherent. A recent study found that 15 leading models performed much worse in multi-turn settings than with single-turn prompts, with drops of up to 35%. Possible causes include error accumulation, drift away from the original topic, and misremembering earlier context. The longer the conversation, the more likely the model is to introduce errors or forget instructions.

Researchers are now quantifying this multi-turn reliability issue and developing mitigations such as turn-by-turn grounding and periodic context resets. Recognizing multi-turn degradation matters when deploying LLMs as chatbots or assistants, which must maintain quality over long interactions (paper).
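One way to picture a periodic context reset is the minimal Python sketch below. It is an illustration under stated assumptions, not the method from the cited study. The class `ResettingConversation`, its `reset_every` parameter, and the "recap" message format are all hypothetical. Every `reset_every` user turns, the history is replaced by a single user message that restates every requirement given so far. Earlier assistant replies, which may contain accumulated errors, are discarded. The messages use the common `{"role": ..., "content": ...}` chat format, and no real model API is called.

```python
from dataclasses import dataclass, field


@dataclass
class ResettingConversation:
    """Hypothetical helper: a chat history that is periodically collapsed into a recap."""

    reset_every: int = 4  # assumption: collapse the history after this many user turns
    system_prompt: str = "You are a helpful assistant."
    messages: list = field(default_factory=list)      # current (post-reset) history
    requirements: list = field(default_factory=list)  # every user instruction seen so far
    turns_since_reset: int = 0

    def add_user_turn(self, text: str) -> None:
        # Record the instruction both in the live history and in the running requirement list.
        self.requirements.append(text)
        self.messages.append({"role": "user", "content": text})
        self.turns_since_reset += 1
        if self.turns_since_reset >= self.reset_every:
            self._reset()

    def add_assistant_turn(self, text: str) -> None:
        self.messages.append({"role": "assistant", "content": text})

    def _reset(self) -> None:
        # Replace the whole history (including possibly wrong assistant answers)
        # with one fresh message that restates all requirements at once.
        recap = "\n".join(f"- {r}" for r in self.requirements)
        self.messages = [{"role": "user", "content": "All requirements so far:\n" + recap}]
        self.turns_since_reset = 0

    def prompt(self) -> list:
        # The message list that would be sent to a chat model on the next call.
        return [{"role": "system", "content": self.system_prompt}] + self.messages


conv = ResettingConversation(reset_every=2)
conv.add_user_turn("Write a function.")
conv.add_assistant_turn("def f(): pass")
conv.add_user_turn("It must sort a list.")  # second user turn triggers the reset
print(conv.messages[0]["content"])
```

The design choice is to trade conversational memory of the model's own earlier replies for a clean, consolidated statement of the task. This targets the error-accumulation and forgotten-instruction failure modes described above. A real system might summarize with the model itself rather than concatenating raw user turns.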

What is LLM Multi-Turn Degradation?
