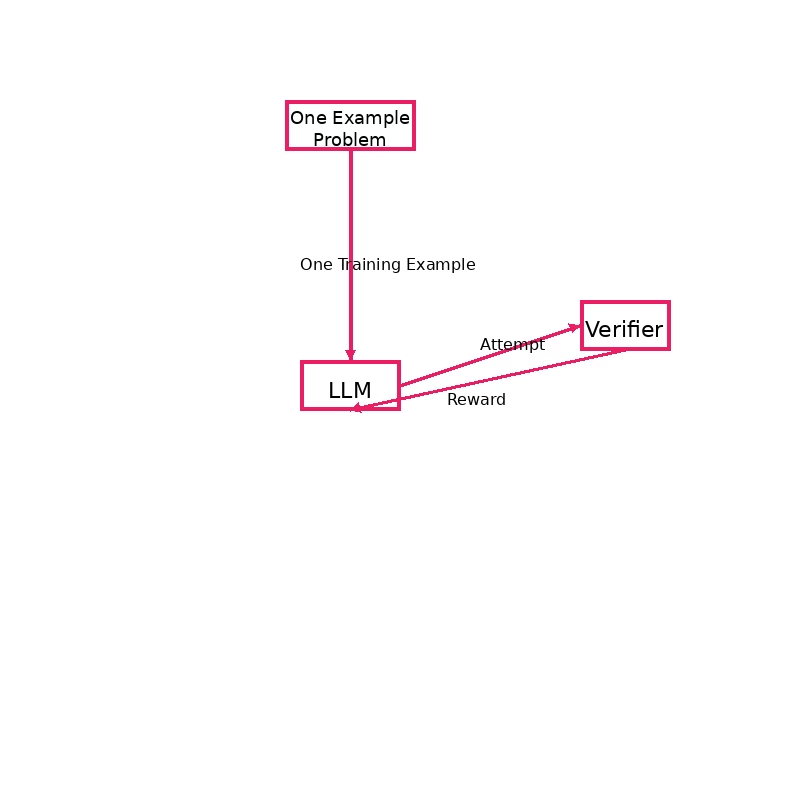

One-Shot RLVR (Reinforcement Learning with Verifiable Reward) is a method to fine-tune a language model using only a single training example, provided the task has an automatic correctness check. The LLM is given one complex problem (such as a math question) and tries to solve it; a verifier (like a unit test or known answer) then provides a binary reward signal (success or failure). Using this reward, the LLM’s parameters are updated via reinforcement learning. Remarkably, researchers found that even one example with verifiable feedback can significantly improve an LLM’s reasoning on similar tasks. One-Shot RLVR doesn’t teach the model new skills from scratch but activates and sharpens latent capabilities with minimal data. This approach achieved state-of-the-art math reasoning improvements by fine-tuning on just one carefully chosen example, demonstrating the power of extremely low-shot RL fine-tuning (paper).

What Is One-Shot Reinforcement Learning Using Verifiable Rewards (RLVR)?

One-Shot RLVR

Bi-weekly AI Research Paper Readings

Stay on top of emerging trends and frameworks.