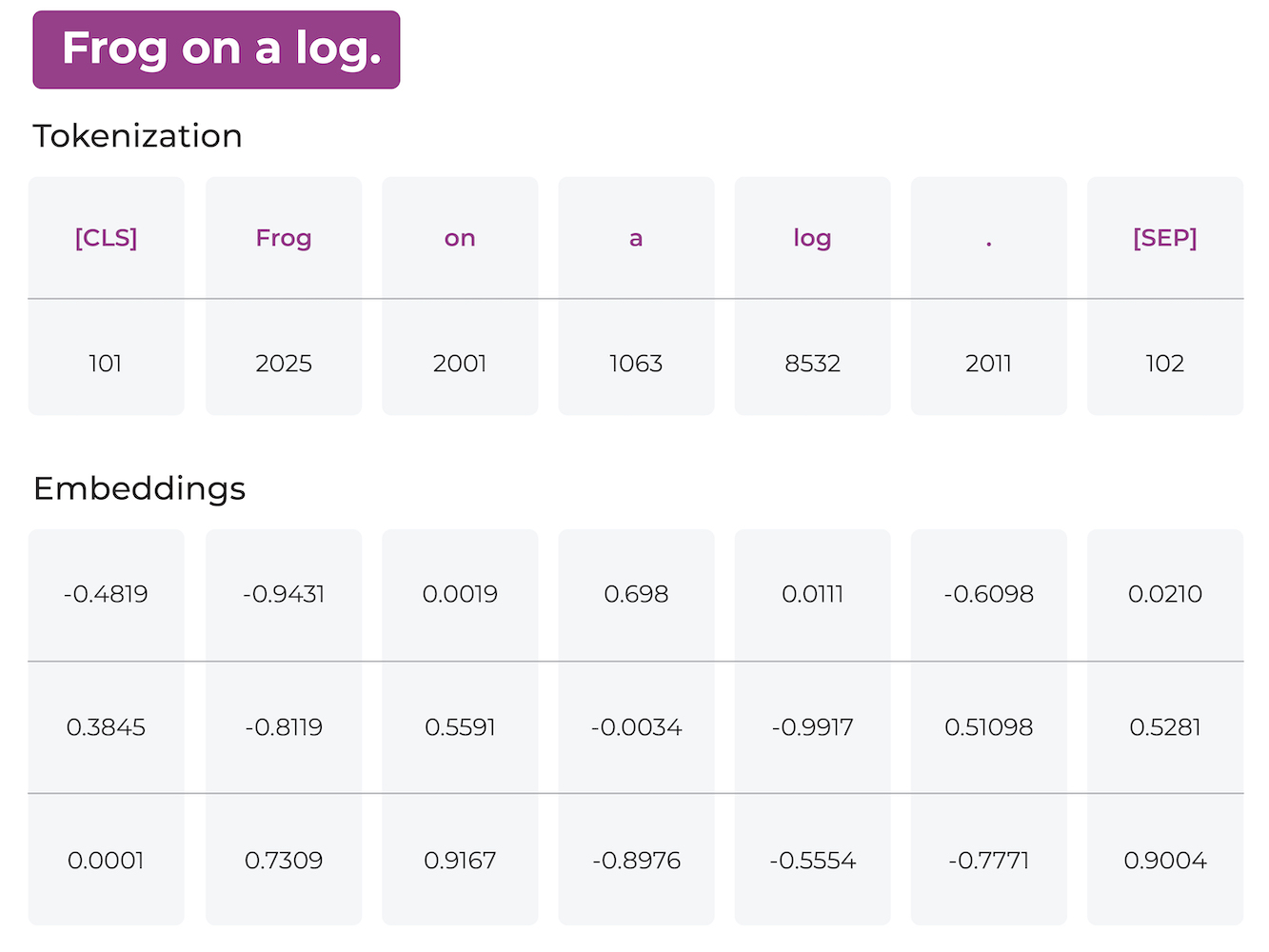

Tokenization is a crucial step in language models as it breaks down text data into smaller units called tokens, such as words or characters. These tokens serve as a representation of the text and enable various NLP tasks. Tokenization helps standardize and process text data, making it easier to analyze. It also addresses language-specific challenges like stemming and stop-word removal, improving the accuracy of language models.

What Is Tokenization In Machine Learning?

Tokenization

Bi-weekly AI Research Paper Readings

Stay on top of emerging trends and frameworks.