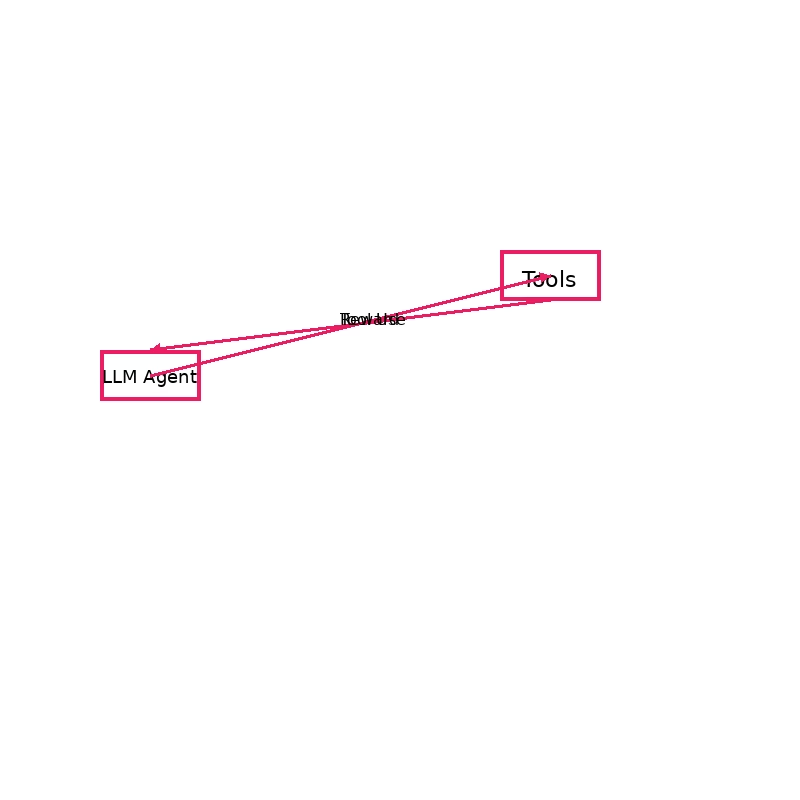

Tool-N1 refers to a class of approaches in which language models learn to use external tools through trial and error, without explicit step-by-step demonstrations. Instead of imitating labeled tool-use examples, the LLM is placed in an environment with access to tools (calculators, web search, code execution, etc.) and given a goal. The model's only feedback is a reward signal based on whether the goal was achieved. Through reinforcement learning, the LLM gradually learns which tool to call and what inputs to provide to get the desired result. OpenAI recently showed that this method can train agents to carry out complex multi-step tool workflows via RL alone. Tool-N1 agents learn when and how to invoke tools accurately, for example calling a calculator for math problems, all without supervised tool-use traces. This leads to more flexible and general tool use, because the model is not limited to mimicking examples and can discover novel tool interactions that maximize reward (paper).
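To make the reward-only training loop concrete, here is a minimal sketch in Python. It is a toy, not the method from the paper: the "model" is a small table of tool preferences rather than an LLM, the two tools (`calculator`, `lookup`), the task list, and the `kind` label on each task are all hypothetical, and the update is a plain REINFORCE step on a softmax policy. The point is only that a binary "goal achieved" reward is enough to learn which tool fits which task.

```python
import math
import random

# Hypothetical toy tools standing in for real APIs.
def calculator(query: str) -> str:
    a, op, b = query.split()          # raises ValueError on non-math input
    a, b = int(a), int(b)
    return str(a + b if op == "+" else a * b)

FACTS = {"capital of France": "Paris", "capital of Japan": "Tokyo"}

def lookup(query: str) -> str:
    return FACTS.get(query, "unknown")

TOOLS = {"calculator": calculator, "lookup": lookup}
TOOL_NAMES = list(TOOLS)

# (task kind, query, expected answer). The kind label is a simplification:
# a real model would have to infer it from the prompt.
TASKS = [
    ("math", "3 + 4", "7"),
    ("math", "6 * 5", "30"),
    ("fact", "capital of France", "Paris"),
    ("fact", "capital of Japan", "Tokyo"),
]

def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def run_tool(name, query):
    try:
        return TOOLS[name](query)
    except ValueError:
        return "error"                # a failed call simply earns no reward

def train(episodes=2000, lr=0.5, seed=0):
    rng = random.Random(seed)
    # One preference score per (task kind, tool); all tools start equal.
    prefs = {kind: [0.0] * len(TOOL_NAMES) for kind in ("math", "fact")}
    for _ in range(episodes):
        kind, query, answer = rng.choice(TASKS)
        probs = softmax(prefs[kind])
        action = rng.choices(range(len(TOOL_NAMES)), weights=probs)[0]
        # The only feedback: did the chosen tool produce the right answer?
        reward = 1.0 if run_tool(TOOL_NAMES[action], query) == answer else 0.0
        # REINFORCE for a softmax policy: d log pi(a) / d pref_i = 1[i == a] - p_i
        for i in range(len(TOOL_NAMES)):
            grad = (1.0 if i == action else 0.0) - probs[i]
            prefs[kind][i] += lr * reward * grad
    return prefs

def best_tool(prefs, kind):
    scores = prefs[kind]
    return TOOL_NAMES[scores.index(max(scores))]
```

Calling `prefs = train()` and then `best_tool(prefs, "math")` shows which tool the policy has come to prefer for each task kind. No example of a correct tool call is ever shown to the learner; the preference emerges from rewarded trials alone. A realistic setup would replace the table with an LLM policy, generate the tool arguments as well as the tool choice, and typically subtract a baseline from the reward to reduce variance.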

What is Tool-N1 in AI Engineering?
