Arize Phoenix:

Open-source LLM tracing & evaluation

Evaluate, experiment, and optimize AI applications in real time.

2.5M+

Downloads

6.4k+

GitHub Stars

1.5M+

OTEL Instrumentation Monthly Downloads

6k+

Community

AI Developers Use Phoenix

Flexible, Transparent, & Free from Lock-In

Phoenix uses OpenTelemetry (OTEL) for seamless setup, full transparency, and no vendor lock-in—start, scale, or move on without restriction.

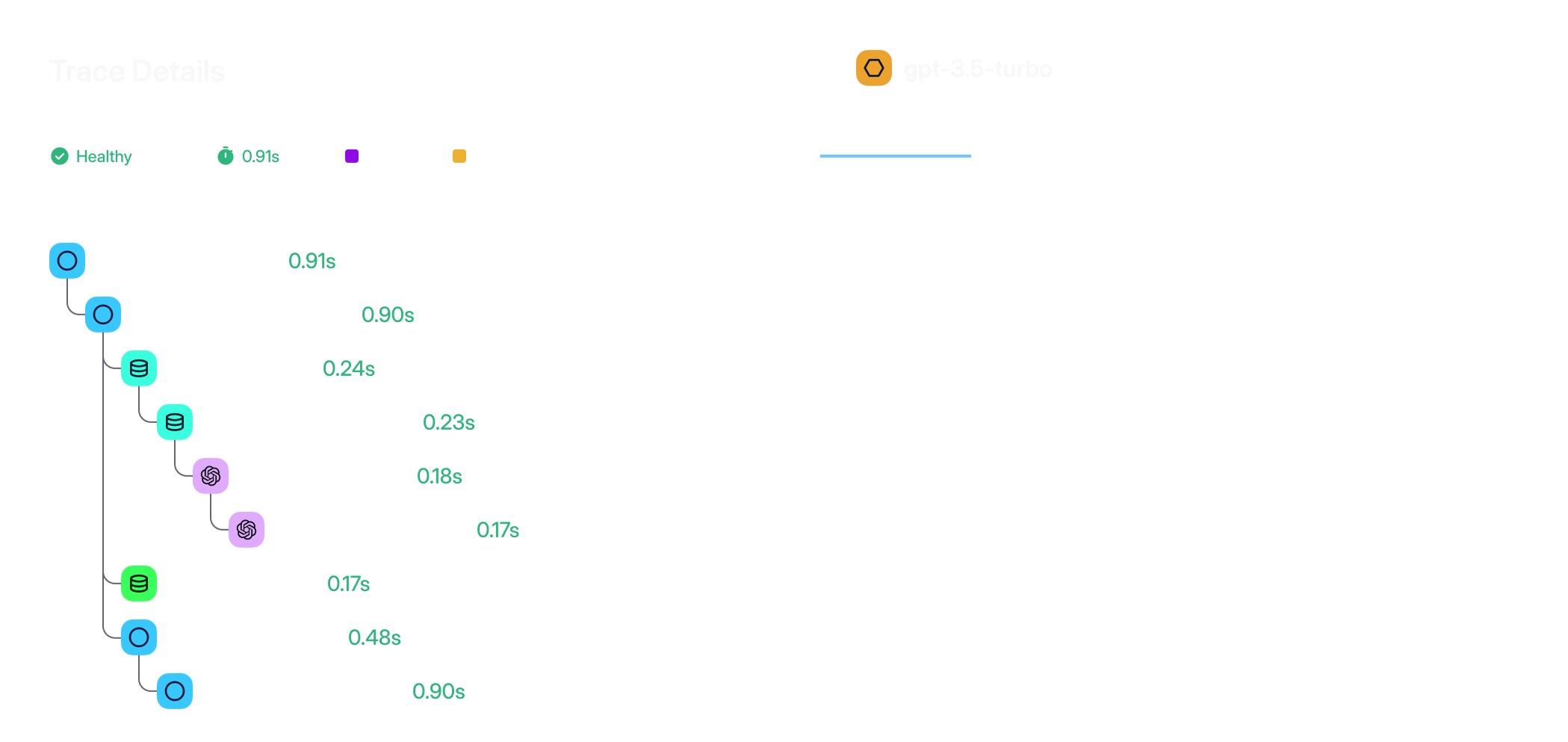

Application Tracing for Total Visibility

Easily collect LLM app data with automatic instrumentation — or go manual for utmost control.

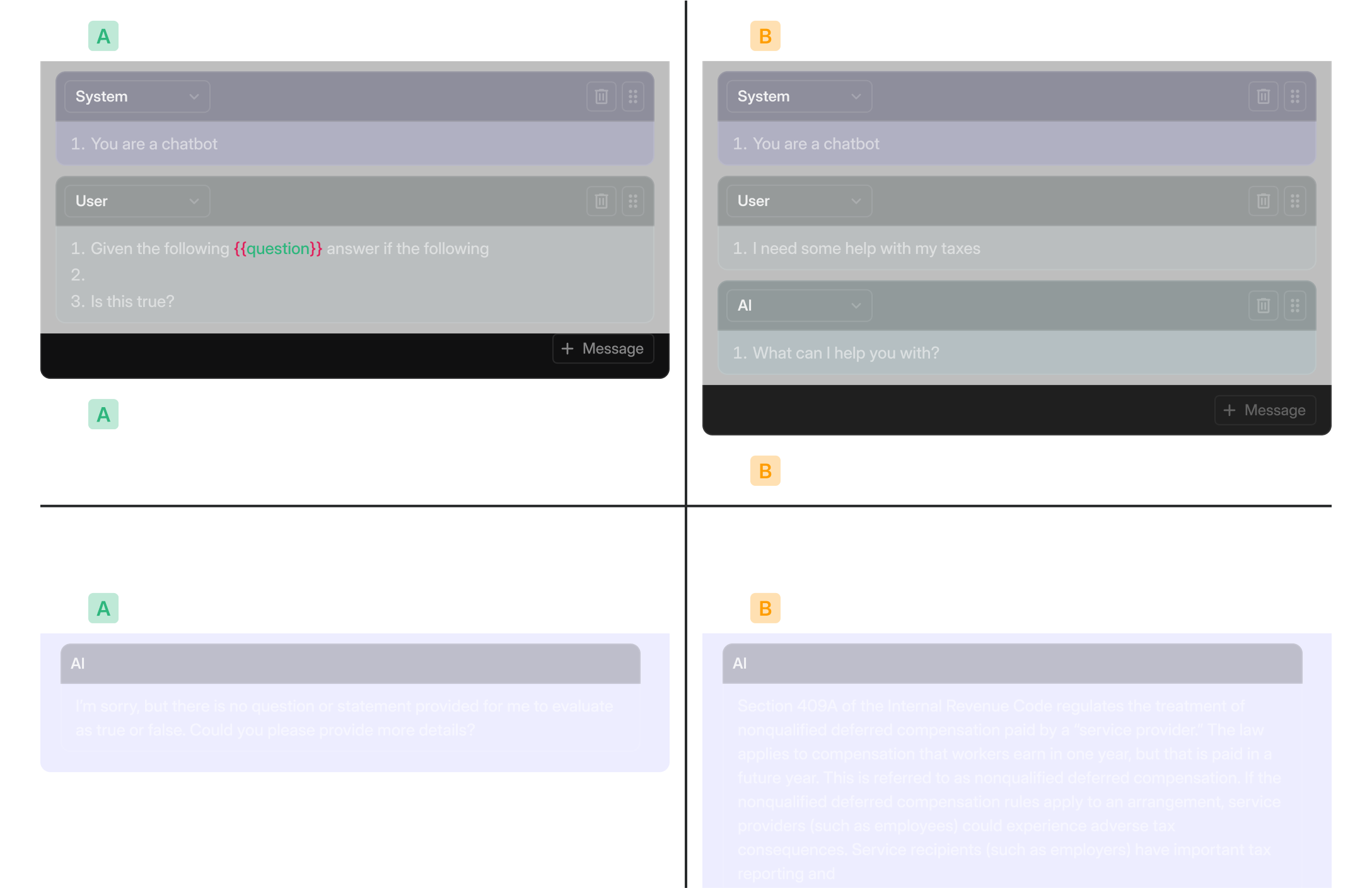
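
To make "going manual" concrete, here is a minimal, standard-library-only sketch of what manual instrumentation records: nested, timed spans with parent/child links. `ToyTracer`, `Span`, and `answer` are hypothetical names invented for illustration. This is not the Phoenix or OpenTelemetry API, and the retrieval and model steps are stubs.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional


@dataclass
class Span:
    """One timed step of a request (hypothetical structure, not Phoenix's schema)."""
    name: str
    span_id: str
    parent_id: Optional[str]
    start: float
    end: Optional[float] = None
    attributes: Dict[str, str] = field(default_factory=dict)


class ToyTracer:
    """Collects spans in memory; a real setup would export them to a collector."""

    def __init__(self) -> None:
        self.finished: List[Span] = []
        self._stack: List[Span] = []

    @contextmanager
    def span(self, name: str, **attributes: str) -> Iterator[Span]:
        # The span currently on top of the stack becomes the parent.
        parent_id = self._stack[-1].span_id if self._stack else None
        current = Span(name, uuid.uuid4().hex, parent_id,
                       time.perf_counter(), attributes=dict(attributes))
        self._stack.append(current)
        try:
            yield current
        finally:
            current.end = time.perf_counter()
            self._stack.pop()
            self.finished.append(current)


def answer(tracer: ToyTracer, question: str) -> str:
    """A stubbed retrieval-then-generate flow, wrapped in manual spans."""
    with tracer.span("rag_request", question=question):
        with tracer.span("retrieve") as retrieve_span:
            docs = ["doc-a", "doc-b"]  # stand-in for a real retriever
            retrieve_span.attributes["num_docs"] = str(len(docs))
        with tracer.span("llm_call", model="placeholder-model"):
            return f"stub answer using {len(docs)} documents"


tracer = ToyTracer()
result = answer(tracer, "What is tracing?")
for s in tracer.finished:
    print(s.name, "root" if s.parent_id is None else "child", s.attributes)
```

Child spans finish before their parent, so the root request appears last in `tracer.finished`. Automatic instrumentation aims to produce the same kind of tree without hand-written `with` blocks.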

Interactive Prompt Playground

A fast, flexible sandbox for prompt and model iteration. Compare prompts, visualize outputs, and debug failures without leaving your workflow.

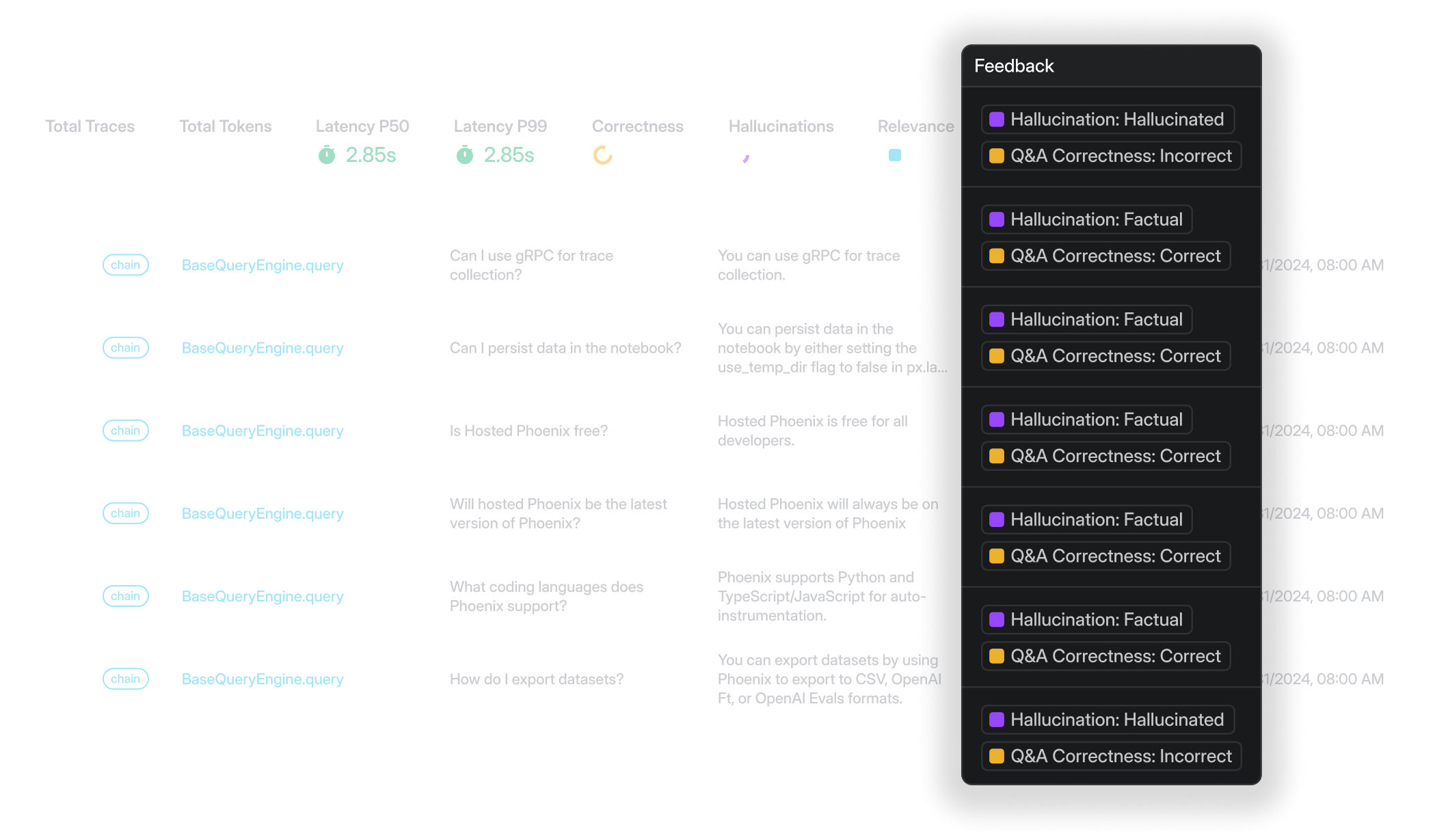

Streamlined Evaluations and Annotations

Leverage an eval library built for speed and ergonomics. Start with pre-built templates that are easily customized to any task, or incorporate human feedback.

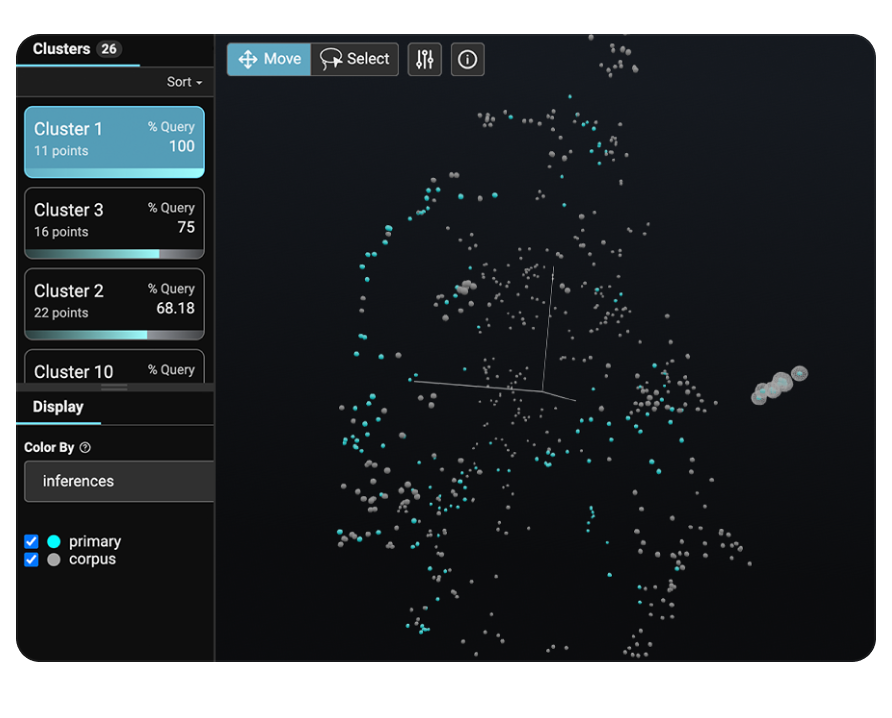
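
As a rough illustration of a template-driven evaluation, the sketch below fills a prompt template, sends it to a judge, and snaps the reply to a fixed set of labels. Everything here is an assumption made for illustration: `RELEVANCE_TEMPLATE`, `classify`, and `keyword_judge` are hypothetical names, not the Phoenix eval library, and the judge is a keyword stub standing in for a real model call.

```python
from typing import Callable, List

# Hypothetical template; customizing an eval means editing this text and the labels.
RELEVANCE_TEMPLATE = (
    "You are comparing a reference text to a question.\n"
    "Question: {question}\n"
    "Reference: {reference}\n"
    "Answer with a single word, either 'relevant' or 'unrelated'."
)
ALLOWED_LABELS = ["relevant", "unrelated"]


def classify(judge: Callable[[str], str], template: str,
             allowed_labels: List[str], **fields: str) -> str:
    """Render the template, ask the judge, and normalize the reply to a label."""
    prompt = template.format(**fields)
    raw = judge(prompt).strip().lower()
    # Anything outside the allowed labels is flagged rather than guessed at.
    return raw if raw in allowed_labels else "unparseable"


def keyword_judge(prompt: str) -> str:
    """Stand-in for a model call: 'relevant' if the reference mentions spans."""
    reference_line = next(
        line for line in prompt.splitlines() if line.startswith("Reference:")
    )
    return "Relevant" if "span" in reference_line.lower() else "unrelated\n"


on_topic = classify(keyword_judge, RELEVANCE_TEMPLATE, ALLOWED_LABELS,
                    question="How are traces recorded?",
                    reference="Each step is stored as a span.")
off_topic = classify(keyword_judge, RELEVANCE_TEMPLATE, ALLOWED_LABELS,
                     question="How are traces recorded?",
                     reference="The cafeteria opens at noon.")
print(on_topic, off_topic)
```

Restricting output to a known label set keeps results easy to aggregate, and the same loop could take a human-supplied label in place of the judge's reply.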

Dataset Clustering & Visualization

Uncover semantically similar questions, document chunks, and responses using embeddings to isolate poor performance.
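
The idea of grouping semantically similar items by their embeddings can be sketched in a few lines. This example uses cosine similarity with a greedy threshold grouping, which is a deliberately simple stand-in and not necessarily the method Phoenix uses. The vectors are made-up toy values, and `group_by_similarity` is a hypothetical helper.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def group_by_similarity(embeddings: Dict[str, Sequence[float]],
                        threshold: float = 0.9) -> List[List[str]]:
    """Greedy grouping: join the first group whose seed item is similar enough."""
    groups: List[List[str]] = []
    for key, vector in embeddings.items():
        for group in groups:
            seed_vector = embeddings[group[0]]
            if cosine_similarity(vector, seed_vector) >= threshold:
                group.append(key)
                break
        else:
            # No existing group was close enough, so start a new one.
            groups.append([key])
    return groups


# Toy 3-dimensional "embeddings"; real ones come from an embedding model.
toy_embeddings = {
    "reset password": [0.9, 0.1, 0.0],
    "forgot my password": [0.85, 0.15, 0.05],
    "pricing tiers": [0.0, 0.2, 0.95],
    "how much does it cost": [0.05, 0.25, 0.9],
}
groups = group_by_similarity(toy_embeddings)
print(groups)
```

Once items are grouped, a per-group quality metric (for example, the share of responses labeled incorrect) can point to the group where performance is worst.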

Proudly Open Source

Built on top of OpenTelemetry, Phoenix is vendor-, framework-, and language-agnostic, giving you the flexibility you need in today’s generative AI landscape.

Phoenix works with all of your LLM tools

Run model tests, leverage pre-built templates, and seamlessly incorporate human feedback. Customize to fit any project while fine-tuning faster than ever.

Don’t just take our word for it

"We are in an exciting time for AI technology including LLMs. We will need better tools to understand and monitor an LLM’s decision making. With Phoenix, Arize is offering an open source way to do exactly just that in a nifty library."

Erick Siavichay

Project Mentor, Inspirit AI

"Just came across Arize-phoenix, a new library for LLMs and RNs that provides visual clustering and model interpretability. Super useful."

Lior Sinclair

AI Researcher

"I always get asked what my favorite Open Source eval tool is. The most impressive one thus far to me is Phoenix."

Hamel Husain

Founder, Parlance Labs

"As LLM-powered applications increase in sophistication and new use cases emerge, deeper capabilities around LLM observability are needed to help debug and troubleshoot. We’re pleased to see this open-source solution from Arize, along with a one-click integration to LlamaIndex, and recommend any AI engineers or developers building with LlamaIndex check it out."

Jerry Liu

CEO and Co-Founder, LlamaIndex

"This is something that I was wanting to build at some point in the future, so I’m really happy to not have to build it. This is amazing."

Tom Matthews

Machine Learning Engineer at Unitary.ai

"Arize's Phoenix tool uses one LLM to evaluate another for relevance, toxicity, and quality of responses. The tool uses 'Traces' to record the paths taken by LLM requests (made by an application or end user) as they propagate through multiple steps. An accompanying OpenInference specification uses telemetry data to understand the execution of LLMs and the surrounding application context. In short, it's possible to figure out where an LLM workflow broke or troubleshoot problems related to retrieval and tool execution."

Lucas Mearian

Senior Reporter, ComputerWorld

"Large language models...remain susceptible to hallucination — in other words, producing false or misleading results. Phoenix, announced today at Arize AI’s Observe 2023 summit, targets this exact problem by visualizing complex LLM decision-making and flagging when and where models fail, go wrong, give poor responses or incorrectly generalize."

Shubham Sharma

VentureBeat

"Phoenix is a much-appreciated advancement in model observability and production. The integration of observability utilities directly into the development process not only saves time but encourages model development and production teams to actively think about model use and ongoing improvements before releasing to production. This is a big win for management of the model lifecycle."

Christopher Brown

CEO and Co-Founder of Decision Patterns and a former UC Berkeley Computer Science lecturer

"Phoenix integrated into our team’s existing data science workflows and enabled the exploration of unstructured text data to identify root causes of unexpected user inputs, problematic LLM responses, and gaps in our knowledge base."

Yuki Waka

Application Developer, Klick