Launch at Google Cloud Next ’24 gives AI engineers unique visibility and control over complex LLM applications

Las Vegas, NV, April 11, 2024 – Arize AI, a pioneer and market leader in AI observability and large language model (LLM) evaluation, debuted industry-first capabilities for prompt variable monitoring and analysis onstage at Google Cloud Next ’24 today.

The launch comes at a time of critical need. Enterprises are racing to deploy foundation models to stay competitive in an increasingly AI-driven world, yet hallucinations and inaccurate responses remain barriers to production deployment.

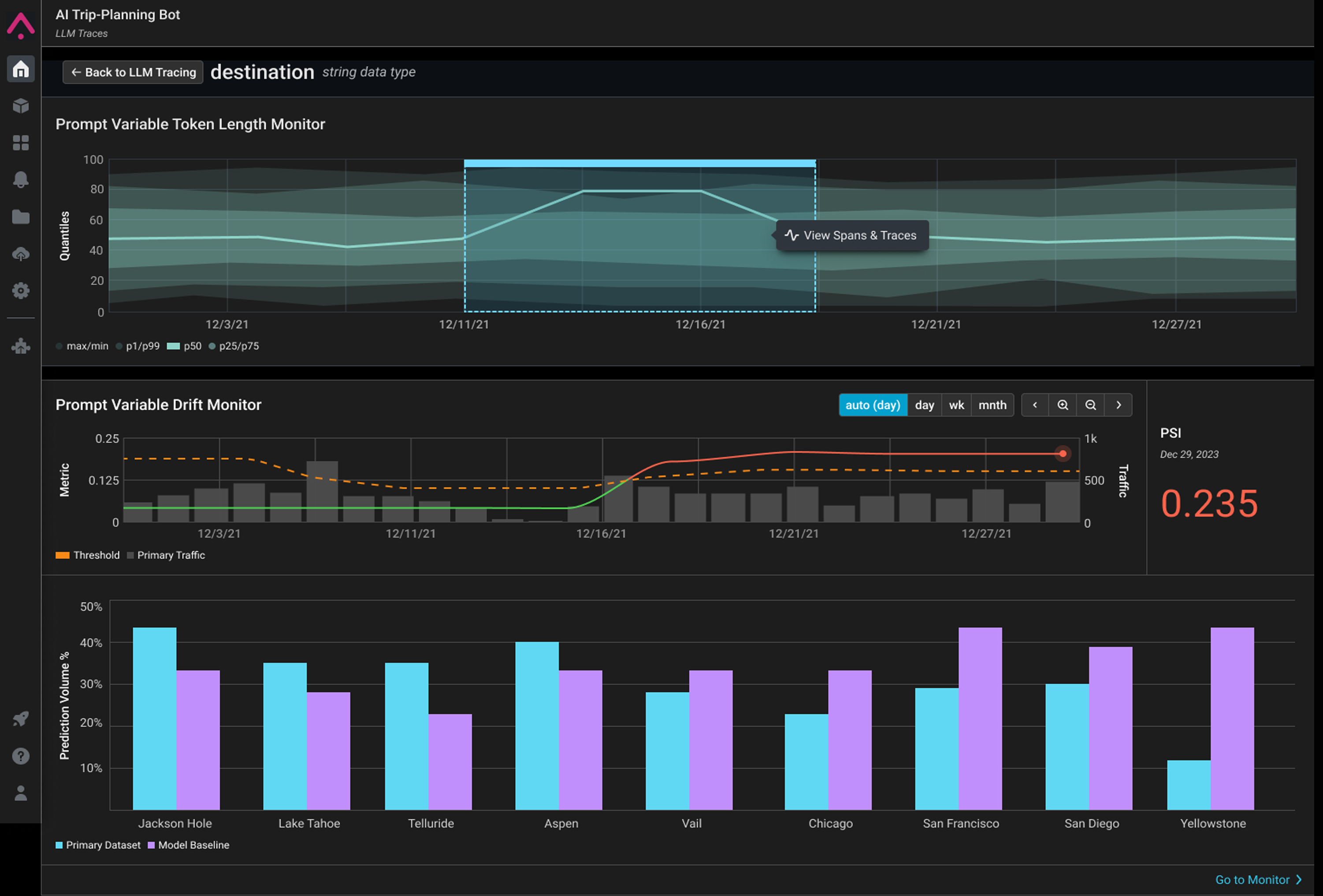

Arize’s new prompt variable monitoring helps AI engineers and ML teams solve that problem by automatically detecting bugs in prompt variables and pinpointing problematic datasets. By introspecting on and refining the prompts used in LLM-powered applications, teams can ensure that generated outputs meet expectations for metrics such as accuracy, relevance, and correctness. Context window management tools, also launching today, allow teams to examine further what is being passed to the model.
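To make the idea of a "bug in a prompt variable" more concrete, here is a minimal Python sketch. It is not Arize's API or implementation. It assumes a `str.format`-style prompt template and flags, per variable, values that are missing, blank, or longer than an arbitrary character budget, which stands in loosely for a context window limit. The template, the variable names `context` and `question`, the `max_chars` default, and the functions `template_variables` and `check_variables` are all hypothetical.

```python
import string
from collections import Counter

# Hypothetical prompt template; the variable names are illustrative assumptions.
TEMPLATE = "Answer using only this context:\n{context}\n\nQuestion: {question}"


def template_variables(template: str) -> set[str]:
    """Return the named placeholders that appear in a str.format-style template."""
    return {field for _, field, _, _ in string.Formatter().parse(template) if field}


def check_variables(template: str, records: list[dict], max_chars: int = 2000) -> Counter:
    """Count, per (variable, issue) pair, how often a value is missing, blank, or too long.

    max_chars is an arbitrary, hypothetical budget, not a real model limit.
    """
    issues: Counter = Counter()
    names = template_variables(template)
    for record in records:
        for name in names:
            value = record.get(name)
            if value is None:
                issues[(name, "missing")] += 1
            elif not str(value).strip():
                issues[(name, "empty")] += 1
            elif len(str(value)) > max_chars:
                issues[(name, "too_long")] += 1
    return issues


if __name__ == "__main__":
    # Toy records standing in for logged prompt inputs.
    records = [
        {"context": "Arize is headquartered in Berkeley, CA.", "question": "Where is Arize based?"},
        {"context": "", "question": "What launched today?"},
        {"question": "Who is the CEO?"},
    ]
    print(check_variables(TEMPLATE, records))
```

Aggregating counts per variable rather than per record is what lets a team see that a single variable (here `context`) is the recurring source of bad prompts across many requests.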

“Debugging LLM systems is far too painful today,” says Jason Lopatecki, CEO and Co-Founder of Arize AI. “By analyzing how AI systems respond to a myriad of prompts and offering deeper insights into model behavior, Arize’s new prompt variable analysis tools promise to help AI engineers have more successful outcomes in production — with training and feedback loops to inform ongoing refinement.”

About Arize AI

Arize AI is an AI observability and LLM evaluation platform that helps teams deliver and maintain more successful AI in production. Arize’s automated monitoring and observability platform allows teams to quickly detect issues when they emerge, troubleshoot why they happened, and improve overall performance across both traditional ML and generative use cases. Arize is headquartered in Berkeley, CA.