Co-Authored by Jason Lopatecki, Co-founder and CEO & Michael Schiff, Chief Technology Officer.

Earlier this month, we rolled out real‑time ingestion support to every Arize AX workspace—paid and free. With that launch, Arize now ingests terabytes of data every day across hundreds of customers and thousands of users, all at (near) real‑time speeds.

How do we keep those speeds consistent across so many workloads? The answer is our custom‑built analytical database: adb.

Arize adb was engineered from the ground up for the twin demands of high‑volume generative‑AI workloads and blazing‑fast ad‑hoc analytics. Until now, it was the unsung hero keeping the platform humming under tremendous load. With the arrival of universal real‑time ingestion, it felt like the right moment to pull back the curtain.

💡 adb backs every managed Arize AX instance today. Want to kick the tires? Sign up here.

Why We Built adb

Observability has a habit of birthing new databases—Datadog’s Husky, TimescaleDB, InfluxDB, ClickHouse, and more. Building a database from scratch is not for the faint‑hearted, and it’s rarely the right path for a start‑up. But when you are operating at petabyte scale and powering mission‑critical AI workflows, generic solutions fall short.

Datadog would not be Datadog if it ran on vanilla ClickHouse—and Arize would not be Arize without adb.

AI observability and evaluation isn’t just about collecting logs and traces; it’s about accelerating the entire AI development lifecycle. Builders need:

- Instant data availability at scale

- Fast, frequent updates for evaluations and feedback loops

- One‑click and programmatic exports of freshly updated datasets

Those needs drove the design goals that shaped adb:

| Goal | What it means |

| Core data asset | Generative outputs are organizational gold. Data should be available to any tool, not trapped in a monolith. |

| Stateless, elastic compute | All state lives in object storage so compute can scale up → down → zero without risk. |

| Sub‑second answers on billions of rows | The UI should feel as responsive as a spreadsheet, no matter the dataset size. |

| Continuous real‑time updates | Fresh events stream in without blocking; large historical backfills run asynchronously. |

| Internet‑scale durability | Designed to process billions of spans, traces, or generations every month—then keep going. |

Key Features of Arize adb

adb delivers speed and scale without tradeoffs:

- Compute / Storage Separation – Your AI data is an asset; accessing it should not require long export jobs.

- Parquet / Iceberg‑backed storage – Standards‑based files with an Arrow‑native query layer.

- Real‑time streaming inserts – Dev and prod data flow through a unified path, arriving in seconds.

- Mass updates with immediate download – Evaluations, annotations, and feedback loops rewrite datasets that can be fetched instantly.

- Smart cache control – Tune the hot/cold boundary; move a 10× cost lever with a single knob.

- Apache Flight for direct file access – Zero‑copy downloads of just‑updated Arrow files at scale.

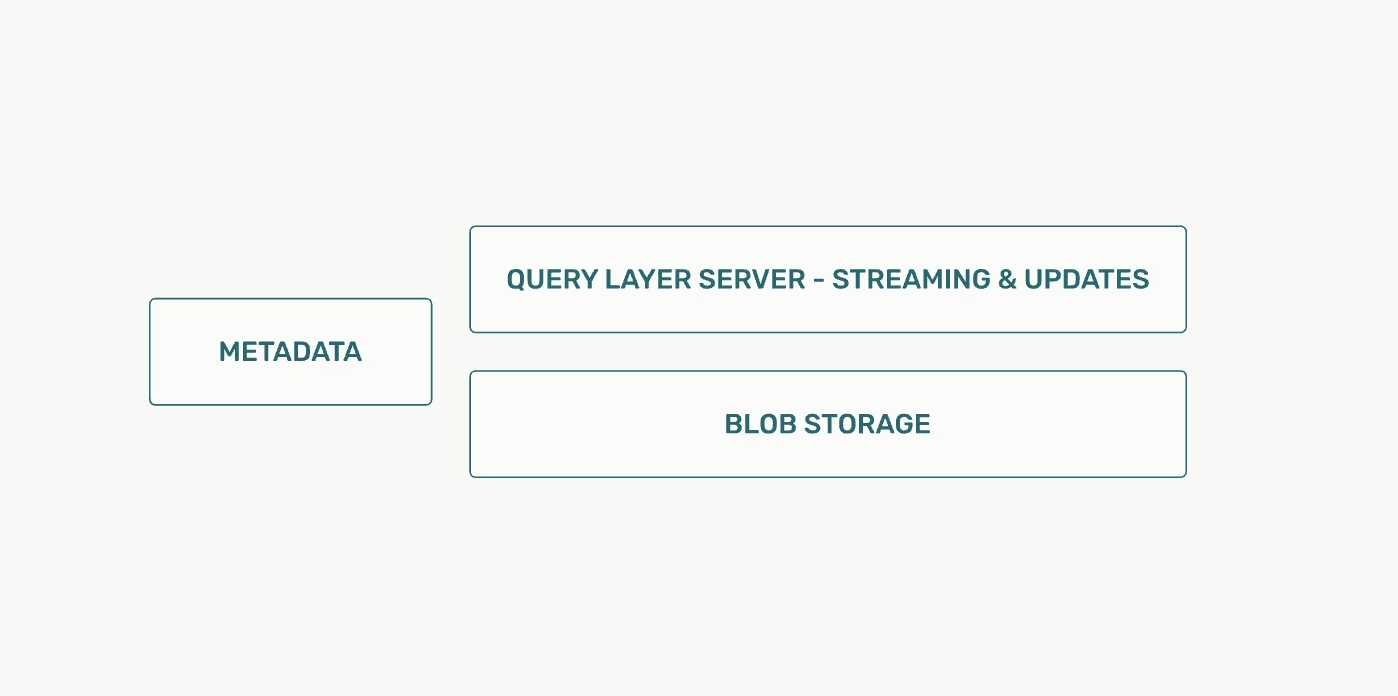

Architecture At a Glance

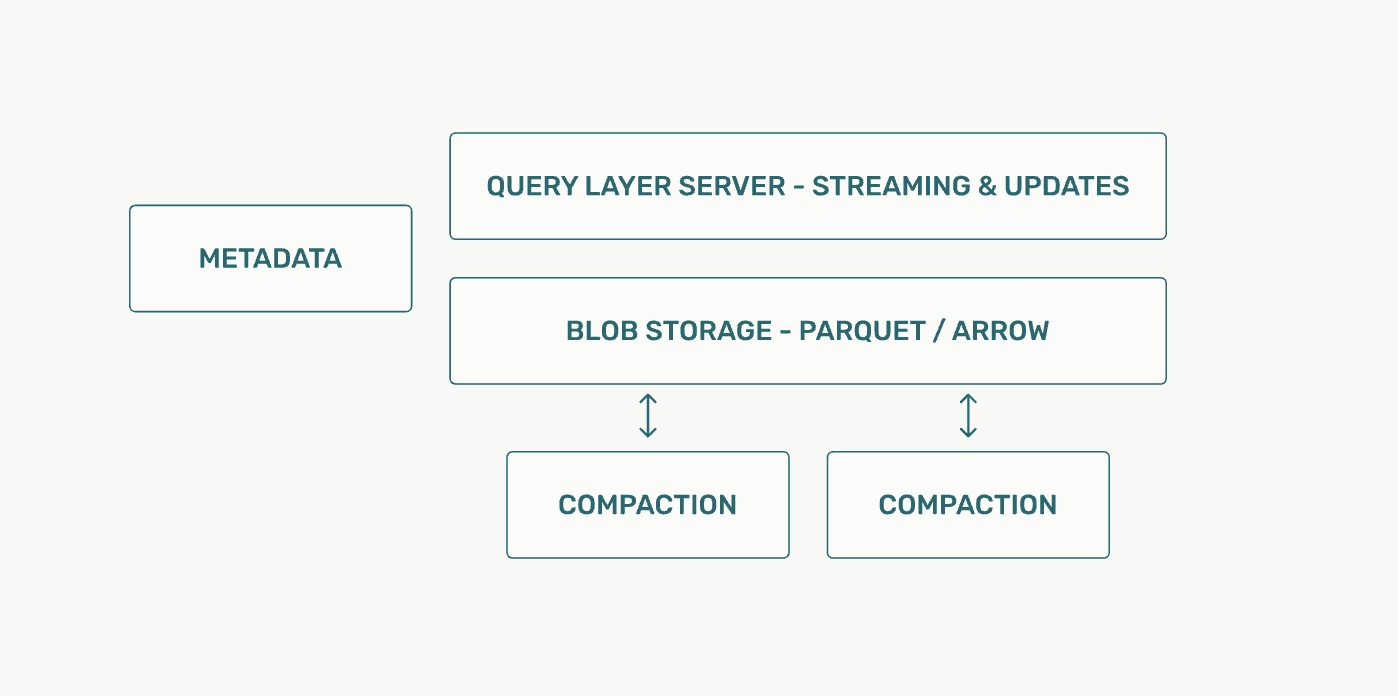

Below is a simplified look at how adb orchestrates streaming ingest, real‑time updates, and large batch uploads—all backed by durable blob storage.

The earliest version of adb forked Apache Druid — hence some lingering nomenclature — but nearly every component was subsequently rewritten or highly modified. Besides a few familiar names, little of the original architecture remains.

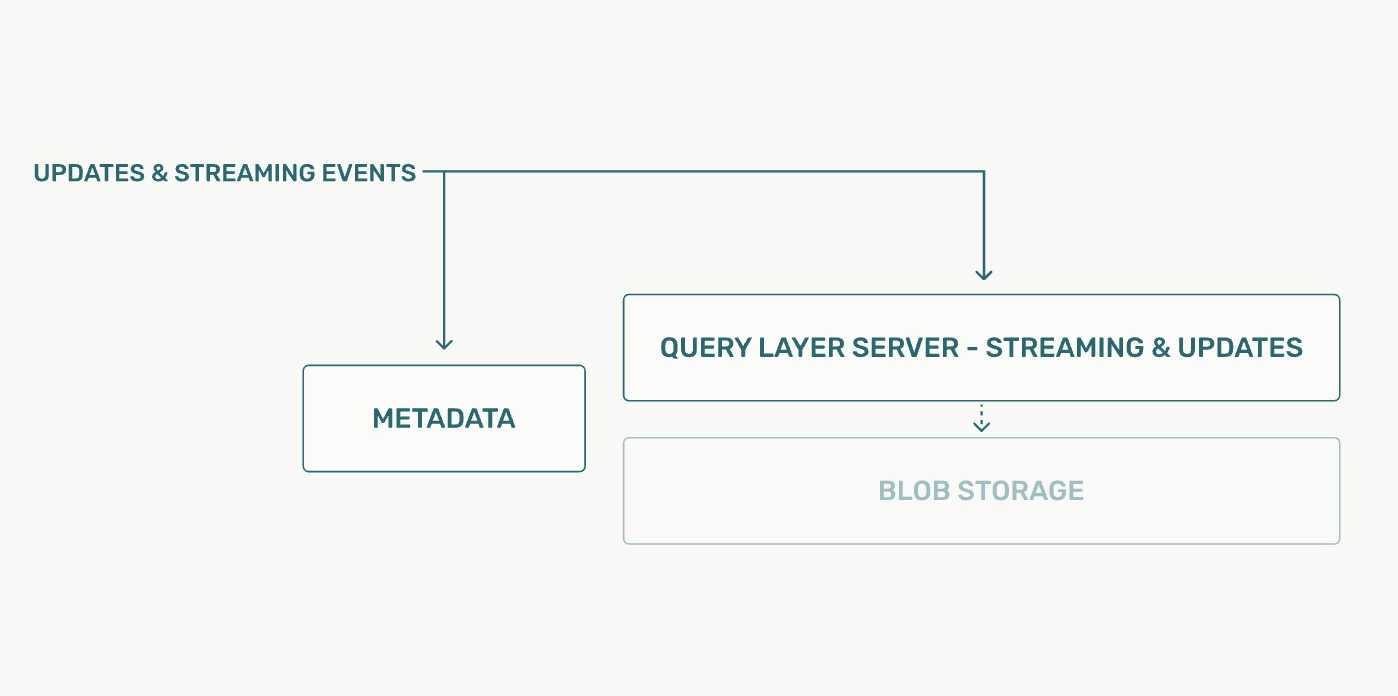

Streaming Path

The streaming path was written from scratch to manage the full streaming ingest data that comes in both nonstop at scale.

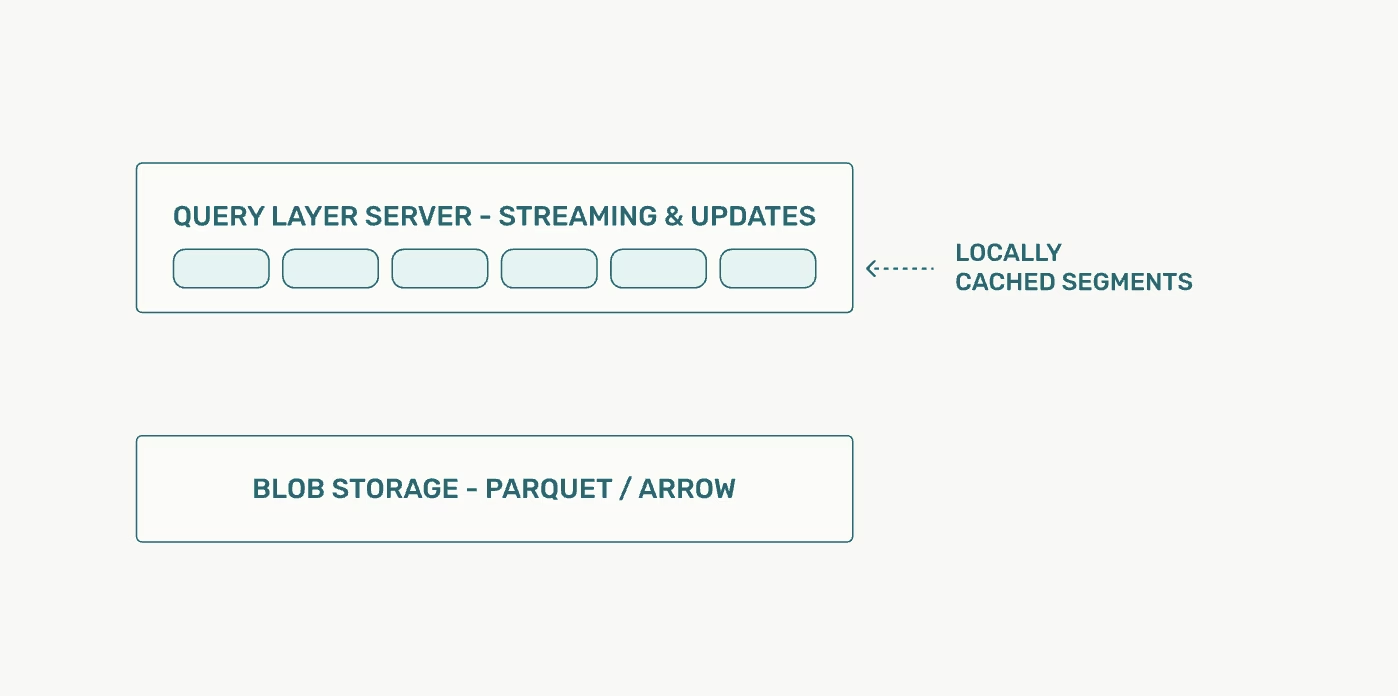

Streams of events land in the Query Layer (sometimes called the historical tier). Data is materialized as in‑memory Arrow, then flushed to object storage in columnar form.

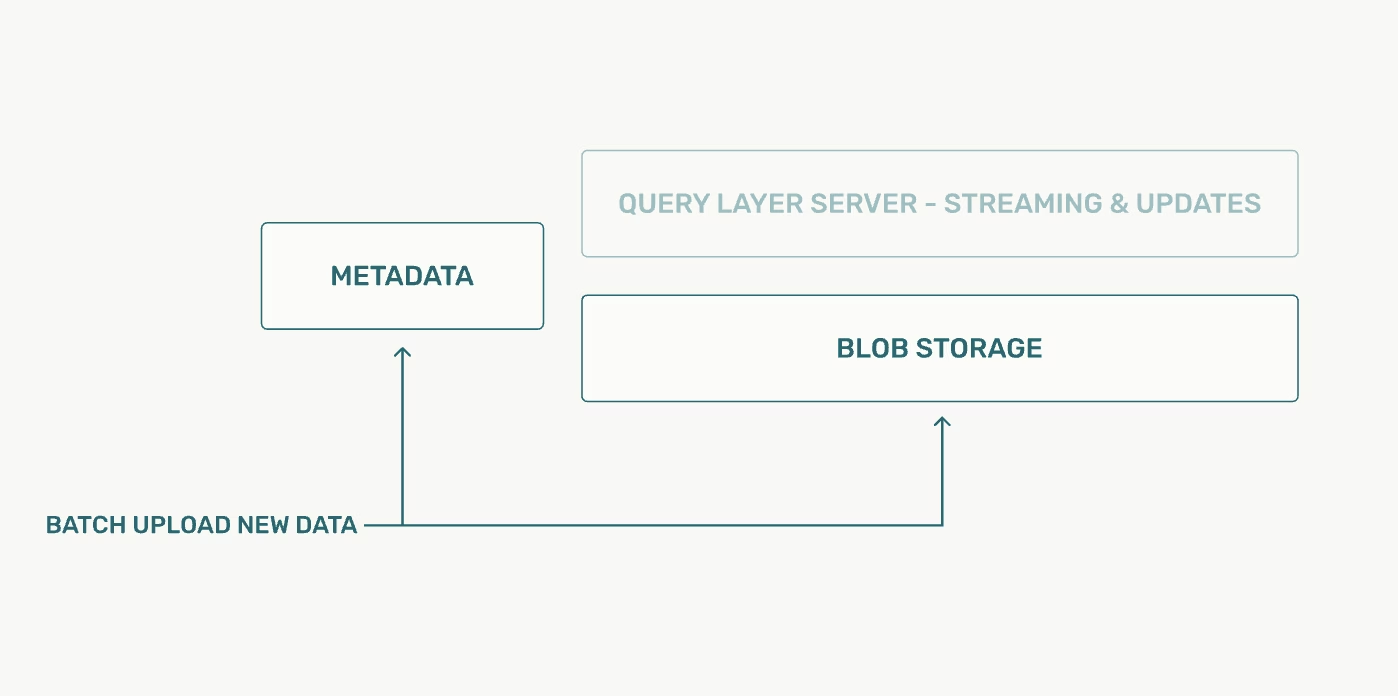

Batch Path

Huge backfills bypass the query layer entirely, writing Parquet/Arrow files straight to blob storage. Indexing services—rebuilt for scale—generate Arrow metadata so the same query layer can serve them without special handling.

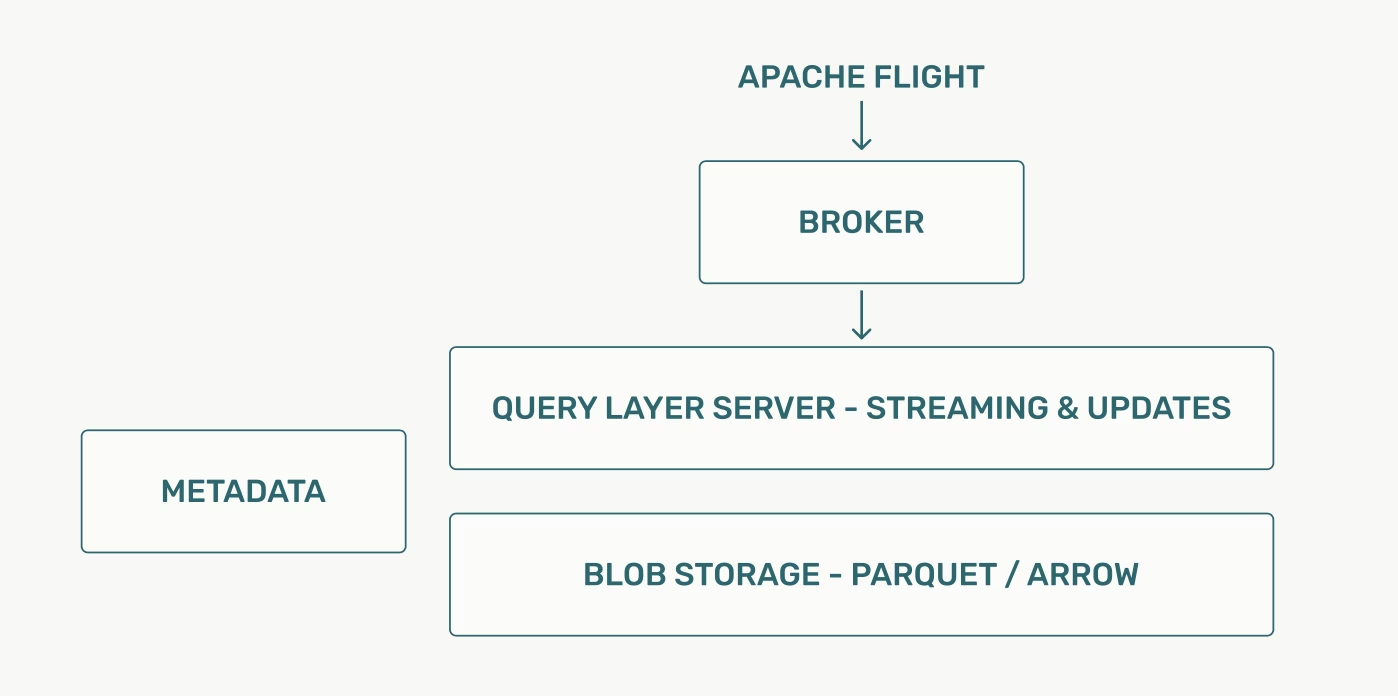

Accessing Files Instantly

In AI workflows, data is both updated in real-time and pulled continuously into workflows from the datastore. Our data, whether stored in memory as Arrow or on disk as Parquet/Arrow, needed a zero work option to download. Enter stage left, Apache flight. All data can be accessed through the same broker and historical caches based on Apache flight, allowing access to data with zero data translation effort.

Compaction

Segment sizes vary dramatically; ingest patterns that trickle in data prefer fast compaction, while huge files (we handle two‑million‑token attributes) need much smaller segments. Realtime ingestion, freshly updated data, small event streams and very large text data all require different compaction patterns.

Cache Management

By controlling the caching layer, we store petabytes in blob storage yet pull only what’s required onto the query servers. Designing the cache layer based on our business logic lets us offer 10× pricing advantages versus alternatives.

Conclusion

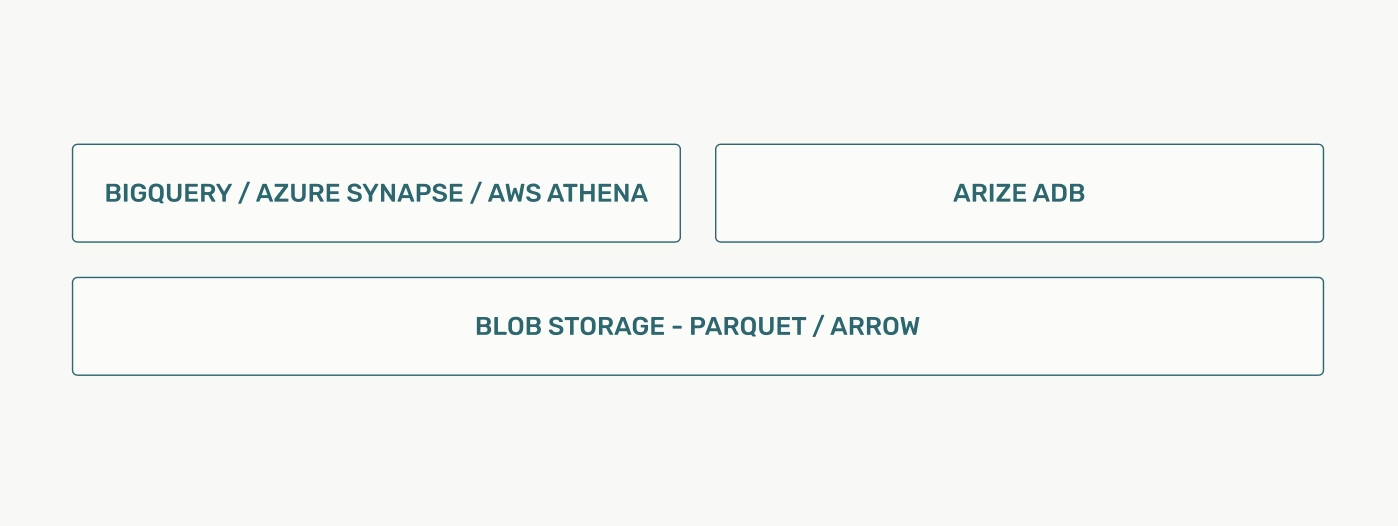

ClickHouse garners much of today’s community attention, yet a quieter movement is gathering momentum: the anti‑monolith approach. It centers on a universal data layer—open Parquet/Iceberg files in durable object storage—served by lightweight, stateless query engines of your choosing.

As an organization, a single data layer, powering the entire organization, using standard file formats with separation of query services, is powerful.

That philosophy has guided adb from day one. We’ve battle‑tested this architecture on petabytes of data and trillions of AI events, proving that openness and a clean separation of storage and compute can fit an organization’s data architecture better than any one‑size‑fits‑all monolith.

What’s Next

Want to see how adb actually works under the hood? Our technical deep dive breaks down the streaming and batch architecture. Learn how events flow through the query layer to how we handle compaction for everything from tiny event streams to two-million-token attributes. Or skip straight to the benchmarks to see how adb stacks up against dataset uploads, real-time ingest latency, and full-text search across 10M spans with full conversation histories.

Give adb a spin by signing up for Arize AX and let us know what you think!