In a recent live AI research paper reading, the authors of the new paper Self-Adapting Language Models (SEAL) shared a behind-the-scenes look at their work, motivations, results, and future directions.

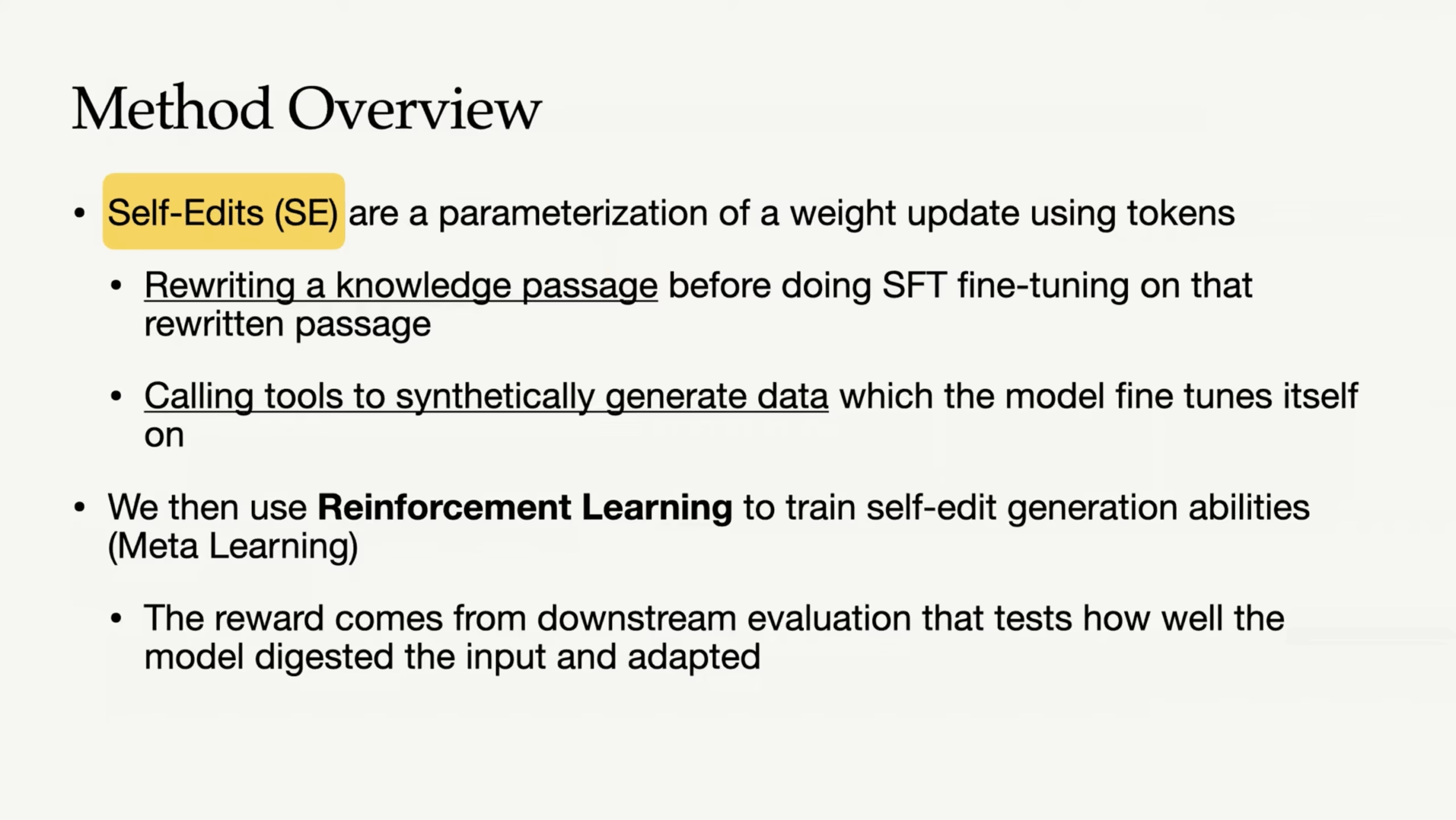

The paper introduces a novel method for enabling large language models (LLMs) to adapt their own weights using self-generated data and training directives — “self-edits.”

This session featured the authors themselves — Adam Zweiger and Jyothish (Jyo) Pari, researchers at MIT — offering a unique TL;DR and commentary on the implications of their own work. They were joined by Dylan Couzon, Developer Advocate at Arize, and Parth Shisode, Software Engineer at Arize, who moderated the discussion.

▶️ Watch

Dive In

- Read the Paper

- Explore more AI Research Papers and get notified about future readings

Listen to the Podcast

🔑 Key Takeaways

- SEAL allows LLMs to self-update weights using their own natural-language-generated training directives, without external data or supervision.

- “Self-edits” are natural language descriptions of model updates, which enable long-term adaptation by the model itself.

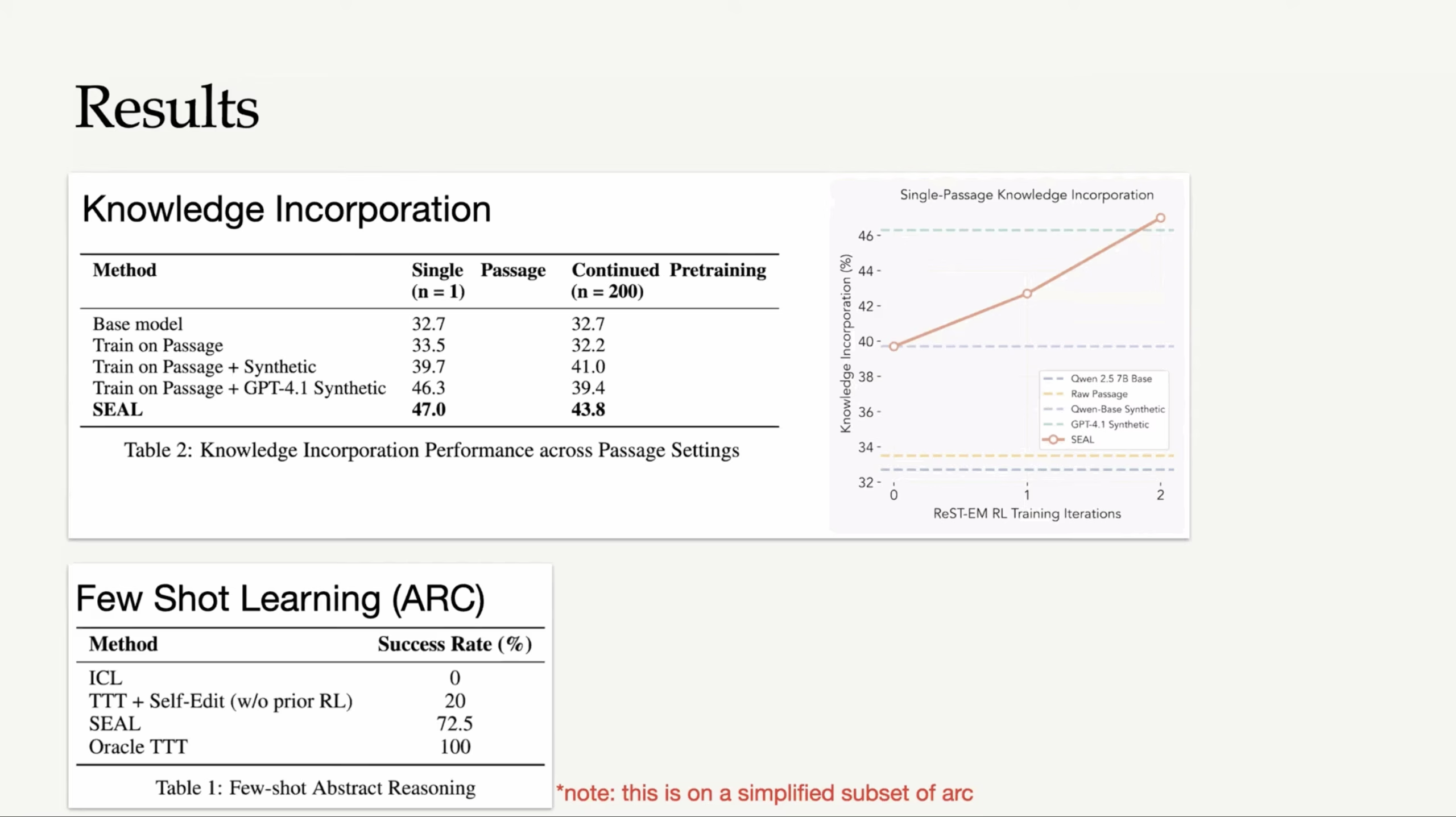

- Two domains tested: Knowledge incorporation (e.g. remembering facts from a passage) and abstract reasoning (using the ARC benchmark).

- SEAL’s self-generated data can outperform GPT-4.1 in downstream tasks—suggesting models can tutor themselves better than external LMs can.

- Reinforcement learning is used to improve self-edits, with successful ones reinforced via downstream performance.

- Challenges include catastrophic forgetting, as self-edits may overwrite previous learning—future work explores lifelong learning and self-edit triggering during inference.

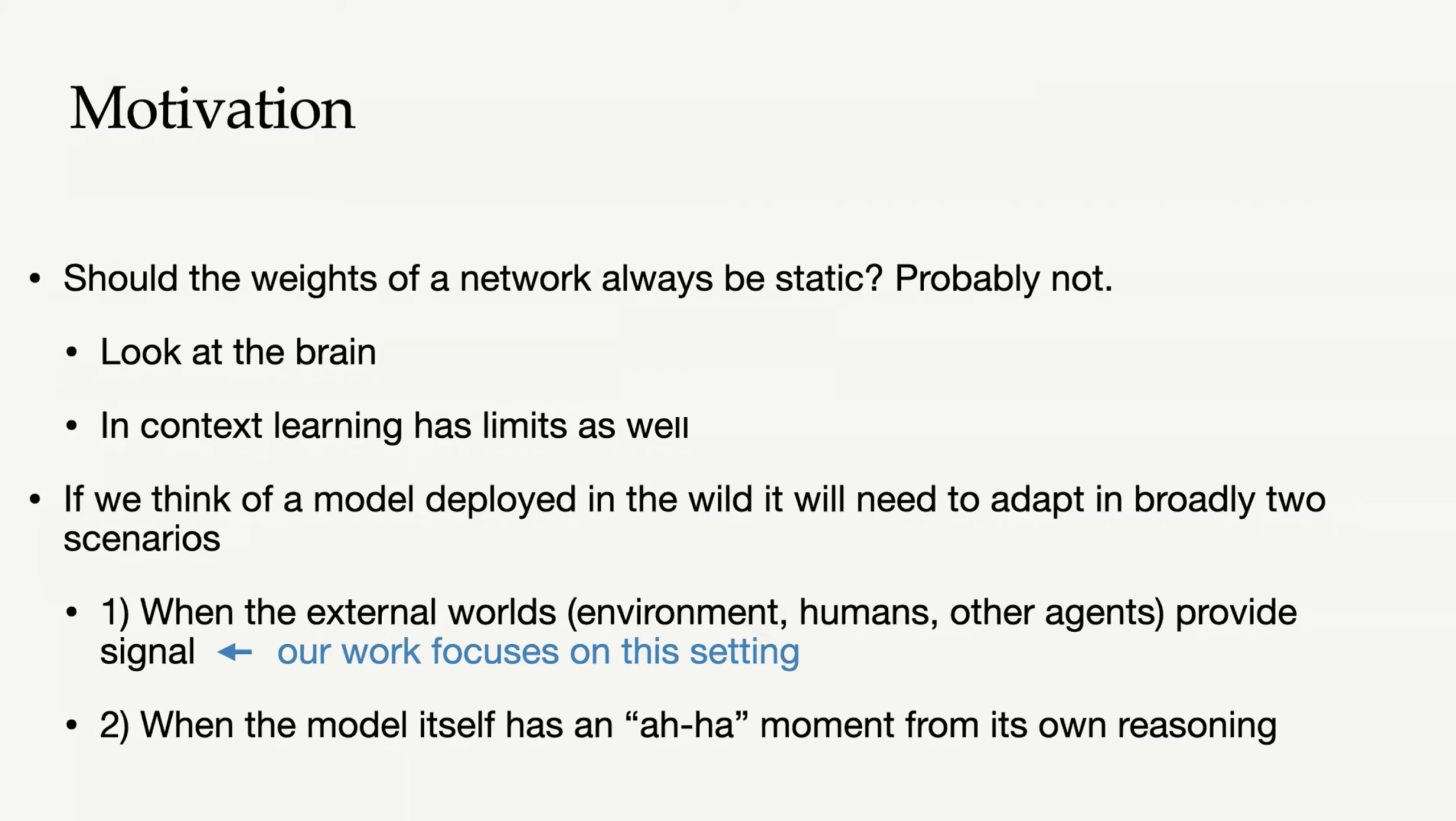

Motivation: Why Enable Self-Adapting LLMs?

Jyo Pari: There’s so much information flowing through these models — even just during a single forward pass—but it disappears. So SEAL is a way of asking: can we retain those insights? Can the model teach itself something midstream and hold onto it?

Prior Approaches

Adam Zweiger: The central object in SEAL is what we call a self-edit. It’s a piece of natural language the model writes describing how to improve itself. For example, it might say, ‘Train me on these implications of the document I just read.’ And then it actually goes and fine-tunes itself using that synthetic training data.

That’s only half of it. We also use reinforcement learning to evaluate how effective the self-edit was. The model gets a reward if it performs better on the target task after training on its own edit. That lets us evolve better and better ways for the model to teach itself.

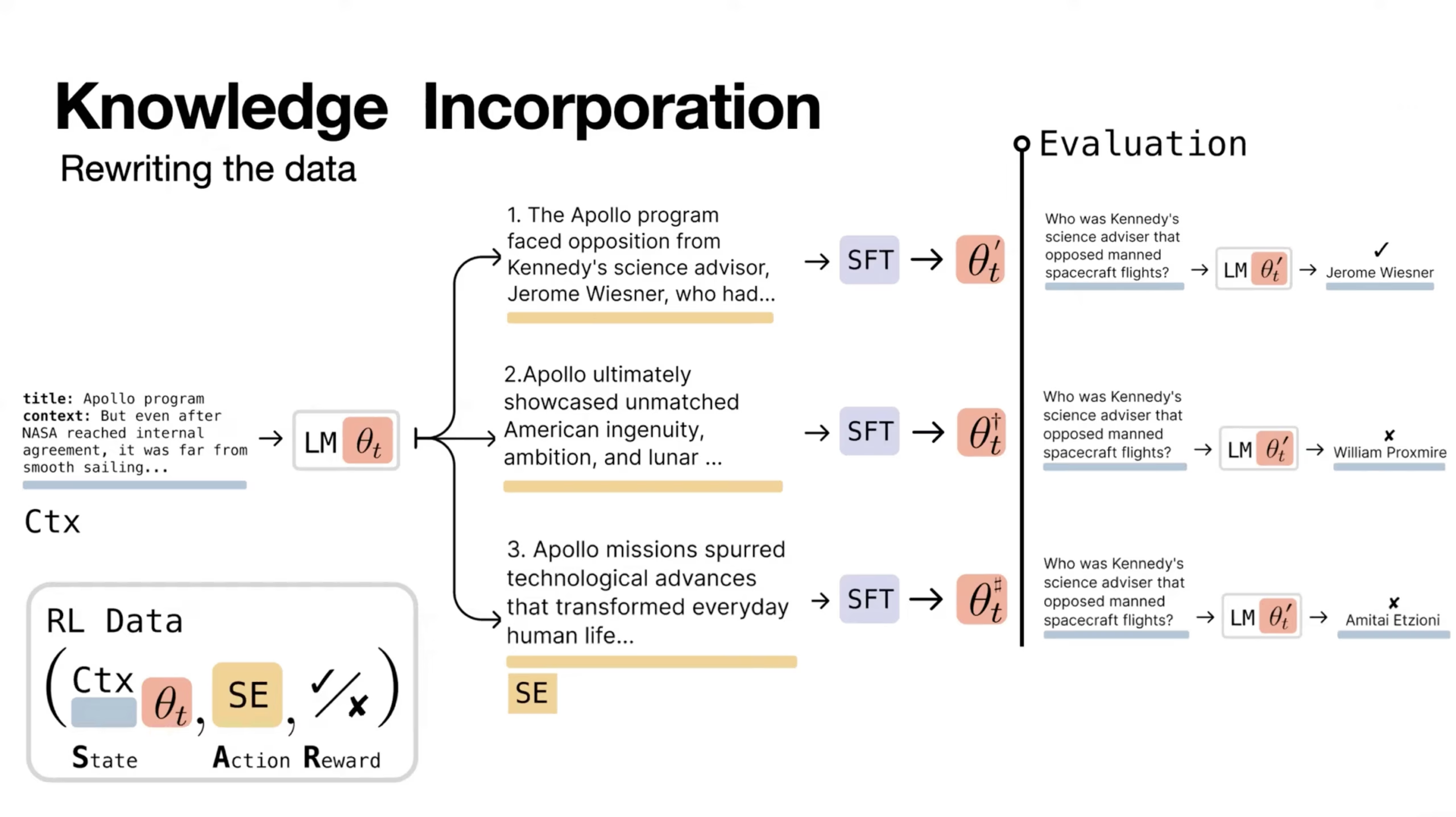

Knowledge Incorporation

Jyo Pari: The knowledge task is pretty simple: the model reads a passage—say, about the Apollo mission—and it has to answer questions about it later, without seeing the passage again.

Most approaches finetune directly on the passage. But with SEAL, the model rewrites the passage in different ways—implications, facts, summaries—and trains on that. It’s like how a student takes notes to retain what’s important.

Results: SEAL vs Baselines

Adam Zweiger: So here’s a surprising result—we compared SEAL to just finetuning on GPT-4.1-generated summaries of the same content. And SEAL actually performed better. That was a pretty big moment because it suggests the model is better at teaching itself than GPT-4.1 is at tutoring it.

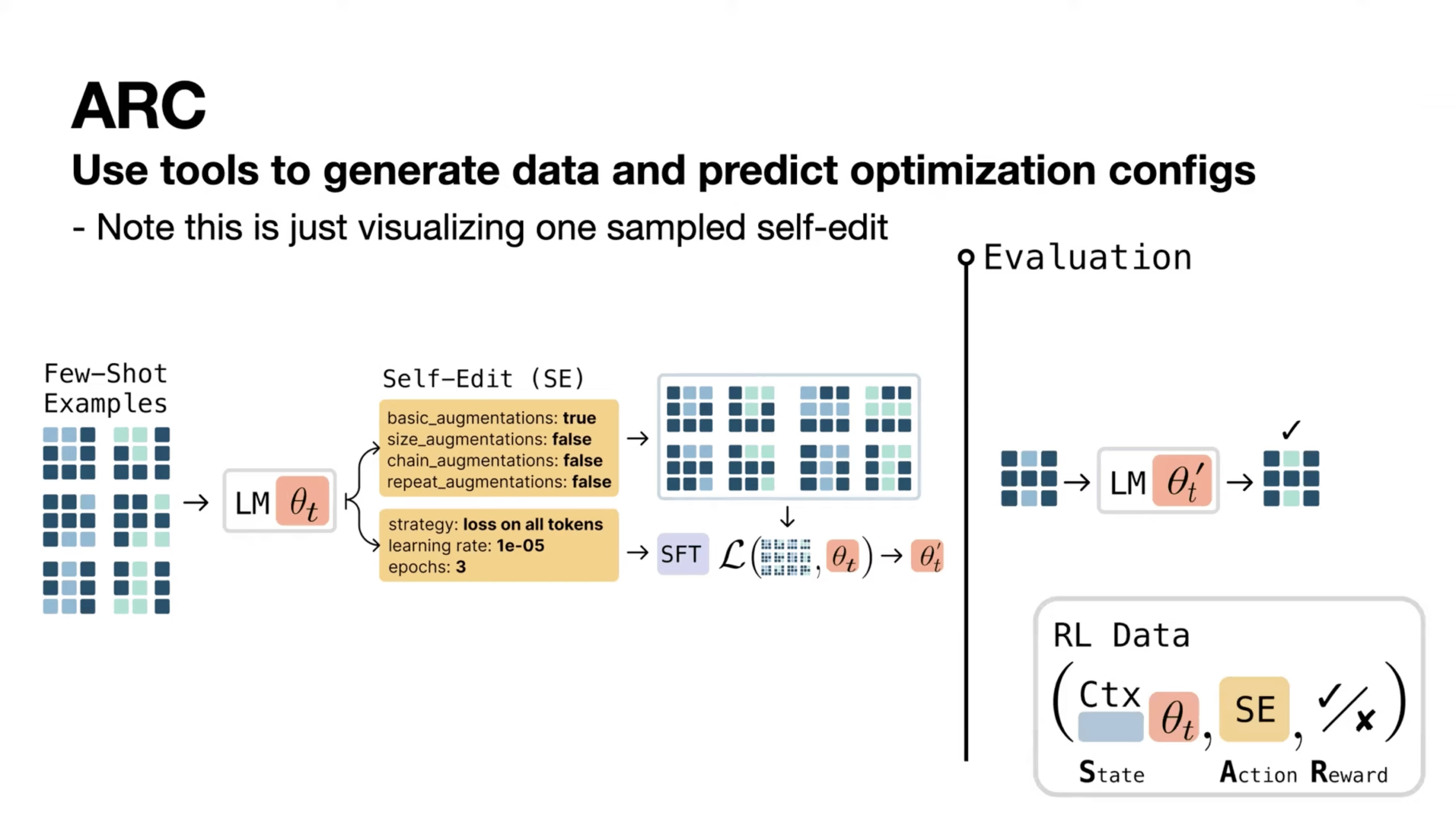

Few-Shot Learning with ARC

Jyo Pari: For ARC, which is this reasoning-heavy visual task, we set up SEAL to generate its own training configs. It chooses which augmentations or tools to use from a predefined pool. And then it trains on those configurations.

Adam Zweiger: Instead of the model solving tasks directly, it’s proposing ways to train itself on tasks like those—choosing data settings, formats, transformations. It’s a new form of self-supervised curriculum generation.

🔁 Reinforcement Learning Training Loop

Adam Zweiger: The RL loop looks like this: the model proposes multiple self-edits, we apply each edit via finetuning, then evaluate downstream performance. We score the edits based on how much they help, and use PPO to reinforce the ones that worked best. Over time, this leads the model to generate better and better ways of editing itself.

⚠️ Limitation: Catastrophic Forgetting

Jyo Pari: One of the challenges is, you get this classic catastrophic forgetting. Because each self-edit updates the weights, it can overwrite what you just learned a few steps ago. It’s like cramming for a test and forgetting what you studied yesterday. So we’re actively looking into strategies to preserve knowledge across updates—things like gradient orthogonalization or memory buffers.