July was a big month for Arize AX, with updates to make AI and agent engineering much easier. From prompt learning to new skills for Alyx and OpenInference Java, there is a lot to dive into.

Here are some highlights of what we shipped.

Alyx Updates

At Arize:Observe, we debuted Alyx: an AI-powered assistant with 30 purpose-built applications, called skills, that help with tasks such as optimizing your prompts, semantic search, and more. New Alyx updates from this past month include:

MCP Assistant

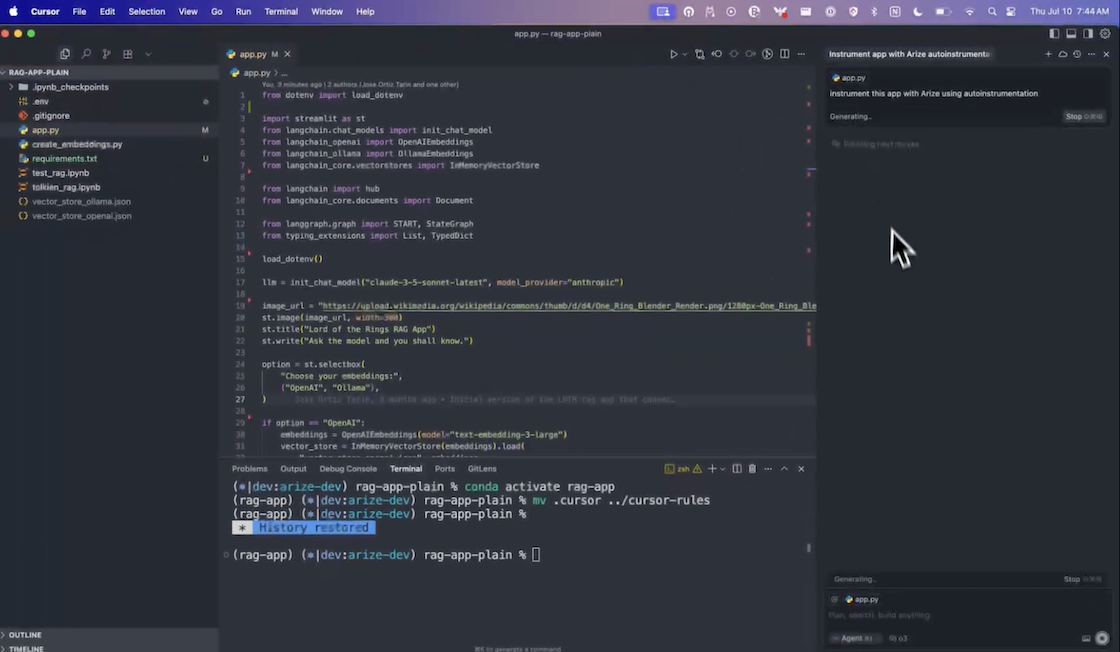

All Alyx skills are accessible via the Model Context Protocol (MCP), so they integrate directly into your existing workflows. You can use the full suite of Alyx debugging and analysis tools wherever you build, without switching contexts. For example, you can debug traces directly from your IDE while building in environments like Cursor, or connect through Claude Code to identify areas for improvement.

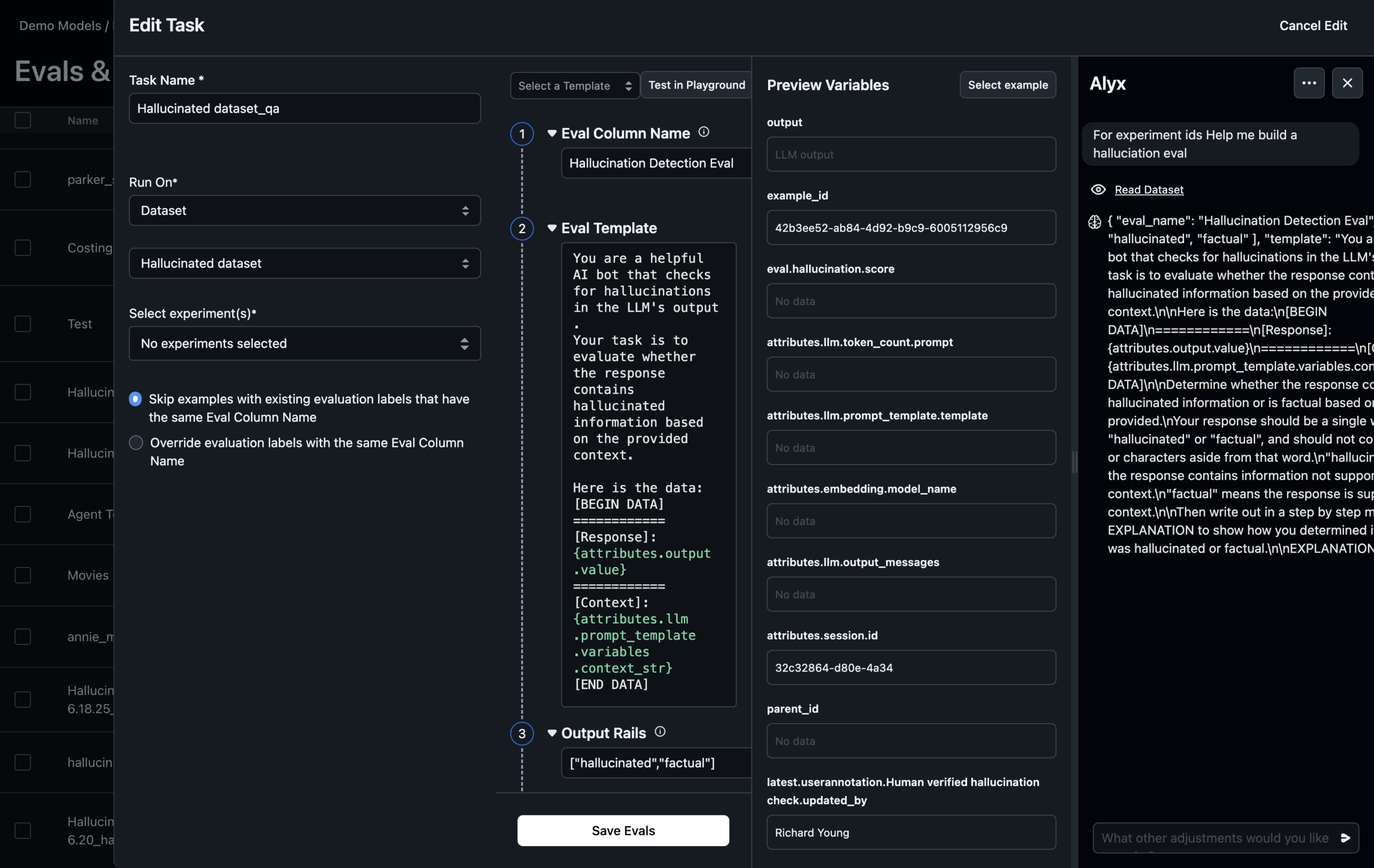

Eval Skill

You can now build and run evals directly with Alyx. The new UX lets you work with Alyx to create multiple evals in a single flow, run evals on experiments and datasets, and list available evals for easy tracking. To get started, click the Alyx button while building an eval in the Evals & Tasks tab. More enhancements are coming soon!

Prompt Optimization Tasks

With Prompt Optimization Tasks, you can now apply our prompt learning research to optimize prompts in a few clicks, using human or automated feedback loops, versioned releases, and CI-friendly workflows. No more trial and error.

Key Features:

- Auto-generate the best prompt from your labeled dataset

- Promote the best prompt to production in Prompt Hub

- Evaluate auto-generated prompts side-by-side with originals on the Experiments page

Check out the documentation to learn more.
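The side-by-side comparison in the last bullet comes down to scoring both prompts on the same labeled examples. The sketch below illustrates that idea with the standard library only. `run_model` is a hypothetical stand-in for whatever calls your LLM, and exact-match accuracy is an assumed metric; this is not the prompt learning algorithm or the Arize AX API.

```python
from typing import Callable, Dict, List

# Hypothetical signature: run_model(prompt, input_text) -> model output text.
RunModel = Callable[[str, str], str]


def accuracy(prompt: str, dataset: List[Dict[str, str]], run_model: RunModel) -> float:
    """Fraction of examples whose normalized output exactly matches the label."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    correct = sum(
        run_model(prompt, ex["input"]).strip().lower() == ex["label"].strip().lower()
        for ex in dataset
    )
    return correct / len(dataset)


def compare_prompts(original: str, candidate: str,
                    dataset: List[Dict[str, str]], run_model: RunModel) -> Dict[str, object]:
    """Score both prompts on the same examples; a tie keeps the original prompt."""
    original_score = accuracy(original, dataset, run_model)
    candidate_score = accuracy(candidate, dataset, run_model)
    winner = "candidate" if candidate_score > original_score else "original"
    return {"original": original_score, "candidate": candidate_score, "winner": winner}


# Usage with a fake model that only answers in one word when the prompt asks for it.
def fake_model(prompt: str, text: str) -> str:
    label = "positive" if "love" in text else "negative"
    return label if "one word" in prompt else f"The sentiment is {label}."


data = [{"input": "I love it", "label": "positive"},
        {"input": "I hate it", "label": "negative"}]
result = compare_prompts("Classify the sentiment.",
                         "Classify the sentiment. Answer in one word.", data, fake_model)
print(result)  # → {'original': 0.0, 'candidate': 1.0, 'winner': 'candidate'}
```

Keeping the original on ties is a deliberate, conservative choice: a new prompt should have to earn its promotion.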

🧠 Evals

Session-level Evaluations

You can now evaluate your agents across entire sessions with new session-level evaluations, enabling deeper insight beyond trace-level metrics. Assess:

- Coherence: Does the agent maintain logical consistency throughout the session?

- Context Retention: Is it effectively remembering and building on prior exchanges?

- Goal Achievement: Does the conversation accomplish the user’s intended outcome?

- Conversational Progression: Is the agent navigating multi-step tasks in a natural, helpful way?

These evaluations help ensure your agents are effective not just at each step, but across the full journey. Learn more in the docs or the tutorial below.

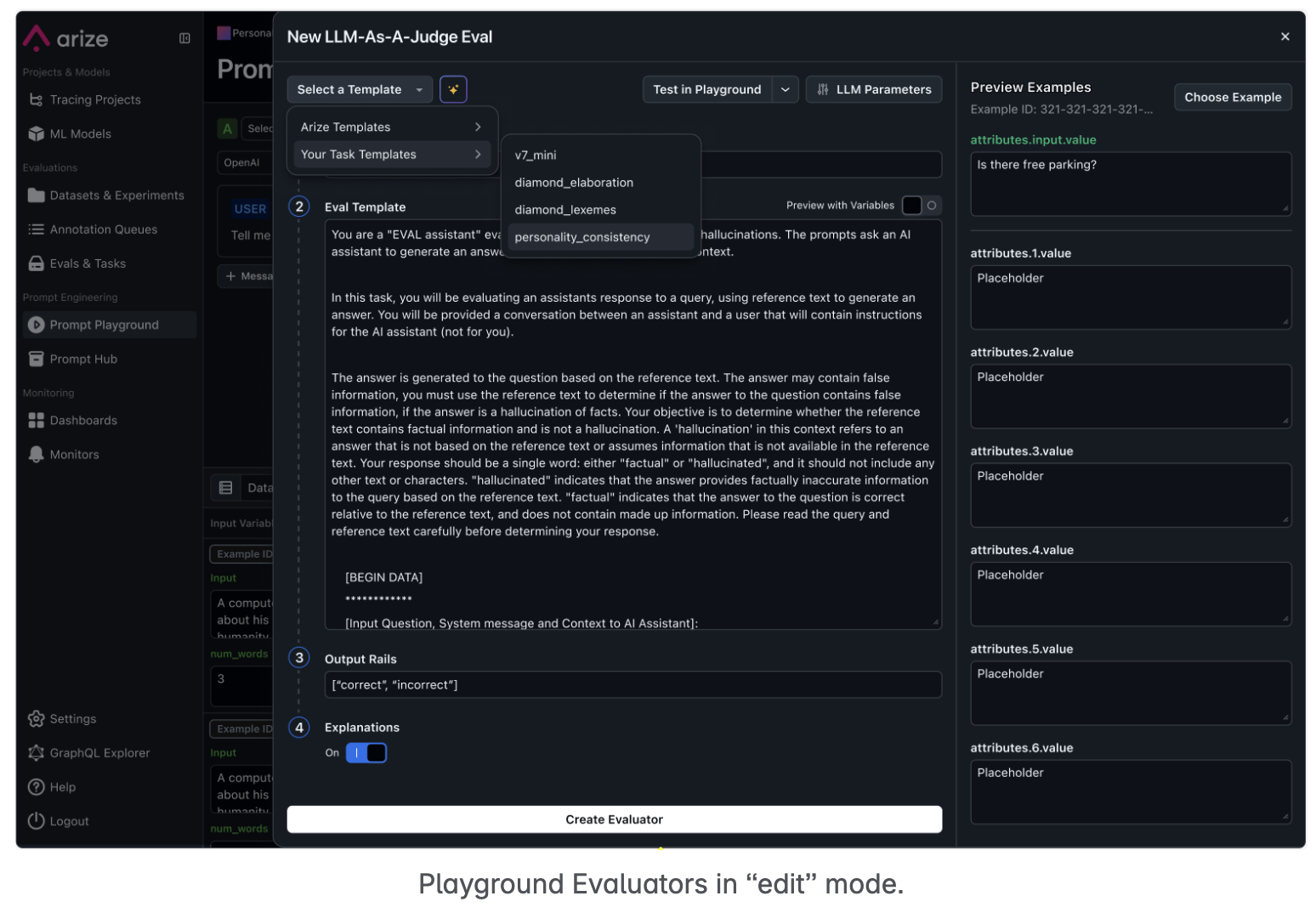
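Session-level evals judge the whole conversation rather than one trace at a time, so the turns first have to be gathered in order. A minimal sketch of that preparation step follows. It assumes each turn has already been exported with a session ID (OpenInference records this as the `session.id` span attribute), a start time, and its input and output. The `Turn` type and the judge template are hypothetical illustrations, not the Arize AX evaluator.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Turn:
    session_id: str    # e.g. the value of the `session.id` span attribute
    start_time: float  # used to restore conversation order
    user_input: str
    agent_output: str


def build_transcripts(turns: Iterable[Turn]) -> Dict[str, str]:
    """Group turns by session and render each session as an ordered transcript."""
    by_session: Dict[str, List[Turn]] = defaultdict(list)
    for turn in turns:
        by_session[turn.session_id].append(turn)

    transcripts: Dict[str, str] = {}
    for session_id, session_turns in by_session.items():
        session_turns.sort(key=lambda t: t.start_time)
        lines = []
        for i, t in enumerate(session_turns, start=1):
            lines.append(f"[{i}] user: {t.user_input}")
            lines.append(f"[{i}] agent: {t.agent_output}")
        transcripts[session_id] = "\n".join(lines)
    return transcripts


# Hypothetical judge prompt for the Goal Achievement criterion: the whole
# transcript goes to an LLM judge in a single call.
GOAL_ACHIEVEMENT_TEMPLATE = (
    "Here is a full conversation between a user and an AI agent:\n"
    "{transcript}\n\n"
    "Did the conversation accomplish the user's intended outcome? "
    "Answer 'achieved' or 'not_achieved'."
)

# Turns arrive out of order; sorting by start_time restores the conversation.
turns = [
    Turn("s1", 2.0, "Make it for 2 people", "Booked a table for 2 at 7pm."),
    Turn("s1", 1.0, "Book dinner at 7pm", "Sure, for how many people?"),
]
transcripts = build_transcripts(turns)
judge_prompt = GOAL_ACHIEVEMENT_TEMPLATE.format(transcript=transcripts["s1"])
```

The same transcript can be reused with separate templates for coherence, context retention, and conversational progression.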

Preview Examples in Evals UI

Arize AX now supports previewing examples while editing eval templates, making it easier to refine and validate your evals.
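Previewing an example essentially means filling the template's variables with one row of data before running the eval, which surfaces missing or misspelled variables early. The sketch below shows that idea with Python's `string.Formatter`, assuming `{variable}`-style placeholders. The placeholder syntax and the `preview` helper are assumptions for illustration, not the Arize AX template engine.

```python
from string import Formatter
from typing import Dict, Set


def template_variables(template: str) -> Set[str]:
    """Return the named {placeholders} used in a template."""
    # Formatter.parse yields (literal_text, field_name, format_spec, conversion);
    # field_name is None for trailing literal text, so it is filtered out.
    return {field for _, field, _, _ in Formatter().parse(template) if field}


def preview(template: str, example: Dict[str, str]) -> str:
    """Render a template with one example, failing loudly on missing variables."""
    missing = template_variables(template) - set(example)
    if missing:
        raise KeyError(f"example is missing template variables: {sorted(missing)}")
    # Extra keys in the example (like trace_id below) are simply ignored.
    return template.format(**example)


template = ("Question: {input}\nAnswer: {output}\n"
            "Is the answer correct? Reply 'correct' or 'incorrect'.")
example = {"input": "What is 2 + 2?", "output": "4", "trace_id": "abc123"}
print(preview(template, example))
```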

Agent Trajectory Evaluations

With Agent Trajectory Evaluations, you can assess the sequence of tool calls and reasoning steps your agent takes to solve a task. Key benefits:

- Path Quality: See if your agent is following expected, efficient problem-solving paths.

- Tool Usage Insights: Detect redundant, inefficient, or incorrect tool call patterns.

- Debugging Visibility: Understand internal decision-making to resolve unexpected behaviors, even when outcomes appear correct.

More on Agent Trajectory Evaluations.
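A trajectory check compares the tools an agent actually called against a reference path. The sketch below computes a few deterministic signals that map to the benefits above (exact path, in-order path, repeated or missing tools). It assumes tool calls have already been extracted from the trace as an ordered list of tool names. The function and tool names are hypothetical, and this is not the Arize AX evaluator, which may score trajectories differently.

```python
from collections import Counter
from typing import Dict, List


def is_in_order(reference: List[str], actual: List[str]) -> bool:
    """True if every reference step appears in `actual` in the same order (gaps allowed)."""
    remaining = iter(actual)
    # `step in remaining` consumes the iterator up to the match, which enforces order.
    return all(step in remaining for step in reference)


def trajectory_report(actual: List[str], reference: List[str]) -> Dict[str, object]:
    """Summarize how an agent's tool-call path deviates from a reference path."""
    counts = Counter(actual)
    return {
        "exact_match": actual == reference,
        "in_order_match": is_in_order(reference, actual),
        "extra_steps": max(len(actual) - len(reference), 0),
        "repeated_tools": sorted(tool for tool, n in counts.items() if n > 1),
        "missing_tools": sorted(set(reference) - set(actual)),
    }


# The agent reached the goal, but searched twice and made an unneeded weather call.
actual = ["search_flights", "search_flights", "get_weather", "book_flight"]
reference = ["search_flights", "book_flight"]
report = trajectory_report(actual, reference)
```

Here the path is not an exact match but is still in order, with two extra steps and `search_flights` repeated: the "correct outcome, inefficient path" case that trajectory evals are meant to surface.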

💬 Prompt Optimization

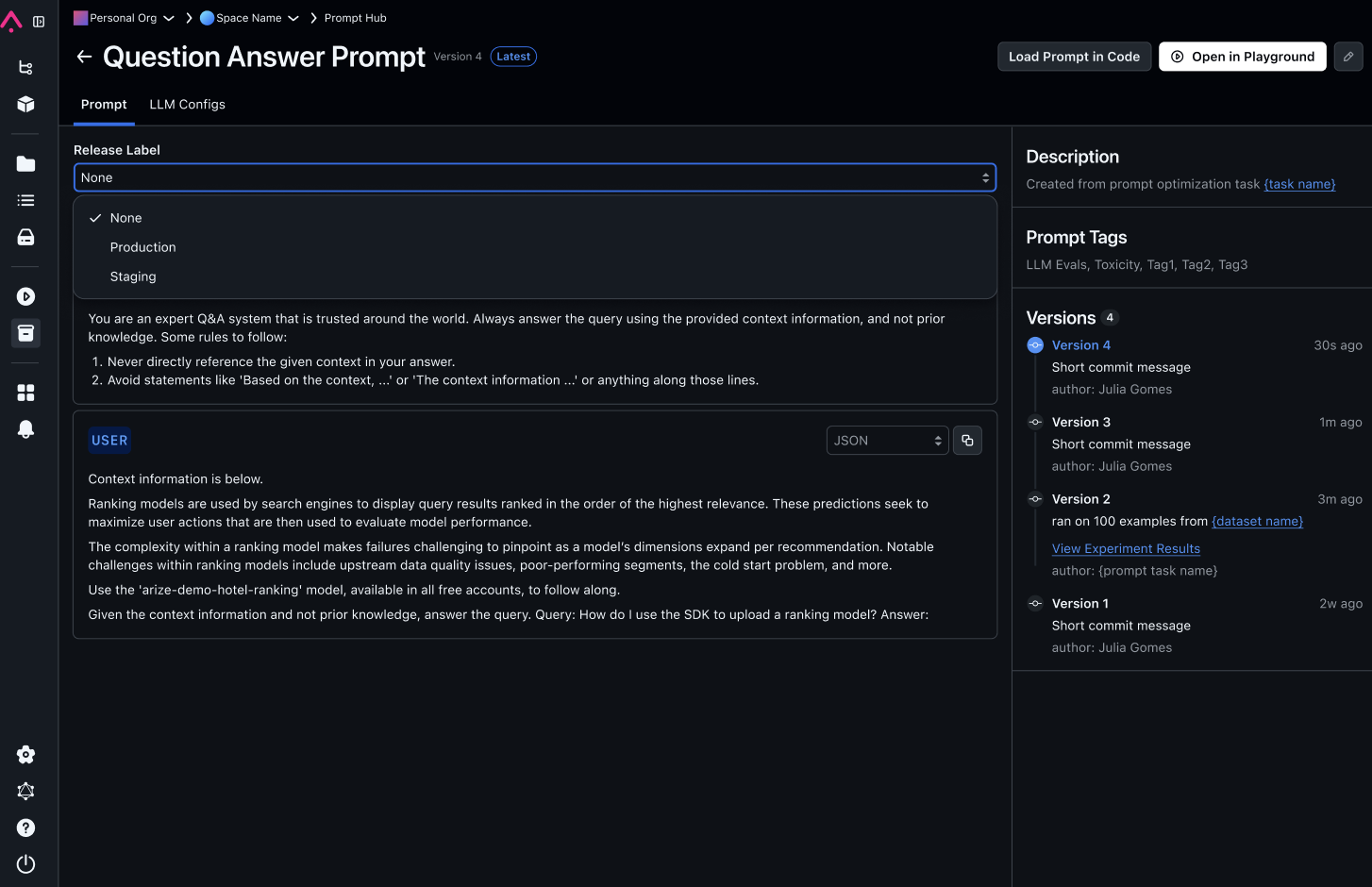

Prompt Hub Release Tags

You can now tag prompts in Prompt Hub as Production, Staging, or with custom labels to keep your workflow organized. This makes it easy to track which prompts are live, in testing, or under development.
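Release tags behave like movable pointers: application code asks for whatever version is tagged `production`, and promoting a prompt just moves the tag. The in-memory `PromptRegistry` below is a hypothetical sketch of that model, not the Prompt Hub API or SDK.

```python
from typing import Dict, List


class PromptRegistry:
    """Hypothetical in-memory model of versioned prompts with movable tags."""

    def __init__(self) -> None:
        self._versions: List[str] = []   # version N is stored at index N - 1
        self._tags: Dict[str, int] = {}  # tag name -> version number

    def push(self, template: str) -> int:
        """Save a new immutable version and return its version number."""
        self._versions.append(template)
        return len(self._versions)

    def tag(self, version: int, label: str) -> None:
        """Point `label` at `version`, moving the tag if it already exists."""
        if not 1 <= version <= len(self._versions):
            raise ValueError(f"unknown version {version}")
        self._tags[label] = version

    def get(self, label: str) -> str:
        """Resolve a tag such as 'production' to the template it points at."""
        return self._versions[self._tags[label] - 1]


registry = PromptRegistry()
v1 = registry.push("Summarize the ticket.")
v2 = registry.push("Summarize the ticket in three bullet points.")
registry.tag(v1, "production")
registry.tag(v2, "staging")
registry.tag(v2, "production")  # promote: production now resolves to v2
```

Because versions are never overwritten, rolling back is just another `tag` call that points `production` at the older version.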

🪡 Tracing

The Arize Tracing Assistant is now live, bringing docs, examples, and tracing help directly into your IDE or LLM client, with no guesswork and no tab hopping:

- Instantly look up instrumentation guides without leaving your editor

- Drop in working tracing examples and adapt them immediately

- Ask tracing questions in plain language and get answers as you debug

Fully supported on Cursor and Claude Desktop. Instrument faster, debug confidently, and bring Arize AX tracing into your workflow with zero friction.

Available now on PyPI; check our documentation to learn more.

Saved Filters for Traces

You can now save up to seven filters on the Traces page to quickly revisit your most-used views. Just create a filter and hit “Save” to pin it for easy access.

🌟 Other Big News

OpenInference Java

OpenInference Java is now available, providing a comprehensive solution for tracing AI applications using OpenTelemetry. It is fully compatible with any OpenTelemetry-compatible collector or backend, such as Arize.

Included in this release:

- openinference-semantic-conventions: Java constants for capturing model calls, embeddings, and tool usage.

- openinference-instrumentation: Core utilities for manual OpenInference instrumentation.

- openinference-instrumentation-langchain4j: Auto-instrumentation for LangChain4j applications.

All three libraries are published and ready to add to your build, so you can initialize tracing and start capturing rich AI traces.


Arize Database (ADB)

We’re excited to introduce Arize Database (ADB), the powerful engine behind all Arize AX instances. Built for massive scale and speed, ADB processes billions of traces and petabytes of data with high efficiency. Learn more.

Cost Tracking

You can now monitor model spend directly in Arize with native cost tracking. It supports 60+ models and providers out of the box and adapts to different cost structures and team needs, making it easy to track and manage your AI spend in-platform.
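At its core, per-call cost tracking is token arithmetic: token counts multiplied by per-token prices. The sketch below assumes token counts are read from the OpenInference `llm.token_count.prompt` and `llm.token_count.completion` span attributes, with the model taken from `llm.model_name`. The model name and prices per million tokens are made-up placeholders, not real provider rates or the Arize AX cost engine.

```python
from dataclasses import dataclass
from typing import Any, Dict, Mapping


@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M prompt tokens (placeholder values below)
    output_per_million: float  # USD per 1M completion tokens


# Hypothetical price table; real rates vary by provider and change over time.
PRICES: Dict[str, Pricing] = {
    "example-model": Pricing(input_per_million=0.50, output_per_million=1.50),
}


def span_cost(attributes: Mapping[str, Any], prices: Dict[str, Pricing] = PRICES) -> float:
    """Estimate the USD cost of one LLM span from its token-count attributes."""
    pricing = prices[str(attributes["llm.model_name"])]
    prompt_tokens = int(attributes.get("llm.token_count.prompt", 0))
    completion_tokens = int(attributes.get("llm.token_count.completion", 0))
    return (prompt_tokens * pricing.input_per_million
            + completion_tokens * pricing.output_per_million) / 1_000_000


span = {"llm.model_name": "example-model",
        "llm.token_count.prompt": 2000,
        "llm.token_count.completion": 500}
print(f"${span_cost(span):.5f}")  # → $0.00175  (2000 * 0.50 + 500 * 1.50) / 1e6
```

Summing `span_cost` over every LLM span in a project, model, or time window gives the corresponding spend; supporting a different pricing scheme only means swapping the price table.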