Arize AX released a flurry of updates in November of 2025. From OpenInference TypeScript 2.0 to a revamp of integrations, there is a lot to catch up on.

OpenInference TypeScript 2.0

The updates include:

- Easy manual instrumentation with the same decorators, wrappers, and attribute helpers found in the Python `openinference-instrumentation` package.

- Function tracing utilities that automatically create spans for sync/async function execution, including specialized wrappers for chains, agents, and tools.

- Decorator-based method tracing, enabling automatic span creation on class methods via the `@observe` decorator.

- Attribute helper utilities for standardized OpenTelemetry metadata creation, including helpers for inputs/outputs, LLM operations, embeddings, retrievers, and tool definitions.
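To make the function-tracing idea concrete, here is a minimal, self-contained sketch of what such a wrapper does around each call. It does not use the OpenInference TypeScript package: `traceFn`, `spanAttributes`, and the in-memory `recordedSpans` list are hypothetical stand-ins for the real helpers and an OpenTelemetry exporter. Only the attribute keys (`openinference.span.kind`, `input.value`, `output.value`) follow OpenInference semantic convention names.

```typescript
// Hypothetical sketch, NOT the OpenInference TypeScript API: it only shows
// what a function-tracing wrapper does around each call.

type SpanKind = "CHAIN" | "AGENT" | "TOOL";

interface RecordedSpan {
  name: string;
  attributes: Record<string, string>;
  status: "OK" | "ERROR";
  durationMs: number;
}

// In-memory stand-in for an OpenTelemetry span exporter.
const recordedSpans: RecordedSpan[] = [];

// Attribute helper: keys mirror OpenInference semantic convention names.
function spanAttributes(kind: SpanKind, input: unknown, output?: unknown): Record<string, string> {
  const attrs: Record<string, string> = {
    "openinference.span.kind": kind,
    "input.value": JSON.stringify(input),
  };
  if (output !== undefined) {
    attrs["output.value"] = JSON.stringify(output);
  }
  return attrs;
}

// Wraps a sync or async function so every call records exactly one span.
function traceFn<A extends unknown[], R>(
  name: string,
  kind: SpanKind,
  fn: (...args: A) => R,
): (...args: A) => R {
  return (...args: A): R => {
    const start = Date.now();
    const finish = (status: "OK" | "ERROR", output?: unknown): void => {
      recordedSpans.push({
        name,
        attributes: spanAttributes(kind, args, output),
        status,
        durationMs: Date.now() - start,
      });
    };
    try {
      const result = fn(...args);
      if (result instanceof Promise) {
        // Async function: close the span only when the promise settles.
        return result.then(
          (value) => {
            finish("OK", value);
            return value;
          },
          (err) => {
            finish("ERROR");
            throw err;
          },
        ) as unknown as R;
      }
      finish("OK", result);
      return result;
    } catch (err) {
      // Synchronous throw: record the failure, then rethrow unchanged.
      finish("ERROR");
      throw err;
    }
  };
}

// Usage: a traced synchronous "tool" and a traced async "agent" step.
const lookupWeather = traceFn("lookup_weather", "TOOL", (city: string) => `Sunny in ${city}`);
const planTrip = traceFn("plan_trip", "AGENT", async (city: string) => `Pack for: ${lookupWeather(city)}`);
```

A real setup would start spans through an OpenTelemetry tracer and export them to Arize AX rather than pushing plain objects into an array. The point of the sketch is only that one wrapper can cover both sync and async functions by checking whether the result is a promise.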

Overall, tracing workflows, agent behavior, and external tool calls is now significantly simpler and more consistent across Python and TypeScript.

Session Annotations

This release introduces Session Annotations, making it easier to capture human insights without disrupting your workflow. You can now add notes directly from the Session Page, with no context switching required.

Annotations are supported at two levels:

- Input/Output Level: Attach insights to specific output messages, automatically linked to the root span of the trace.

- Span Level: Dive deeper into a trace and annotate individual spans for precise, context-rich feedback.
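The two annotation levels differ mainly in which span a note ends up attached to. The sketch below models that linking rule with hypothetical types and names (`TraceSpan`, `Annotation`, `resolveTargetSpan`); it illustrates the behavior described above and is not Arize's annotation API or storage format.

```typescript
// Hypothetical data model, NOT Arize's API: it only illustrates which span
// each annotation level ends up attached to.

interface TraceSpan {
  spanId: string;
  traceId: string;
  parentId: string | null; // null marks the root span of a trace
}

type Annotation =
  | { level: "input_output"; traceId: string; note: string } // note on an output message's trace
  | { level: "span"; spanId: string; note: string }; // note on one chosen span

function resolveTargetSpan(annotation: Annotation, spans: TraceSpan[]): TraceSpan | undefined {
  if (annotation.level === "span") {
    // Span-level notes attach exactly where the reviewer placed them.
    const targetId = annotation.spanId;
    return spans.find((s) => s.spanId === targetId);
  }
  // Input/output-level notes are linked to the root span of that trace.
  const traceId = annotation.traceId;
  return spans.find((s) => s.traceId === traceId && s.parentId === null);
}

// Usage: one trace with a root span and a child LLM span.
const sessionSpans: TraceSpan[] = [
  { spanId: "root", traceId: "t1", parentId: null },
  { spanId: "llm-call", traceId: "t1", parentId: "root" },
];
const onOutput = resolveTargetSpan({ level: "input_output", traceId: "t1", note: "Great answer" }, sessionSpans);
const onSpan = resolveTargetSpan({ level: "span", spanId: "llm-call", note: "Prompt too long" }, sessionSpans);
```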

Together, these capabilities make it simple to highlight issues, call out successes, and integrate human feedback seamlessly into your debugging and evaluation process.

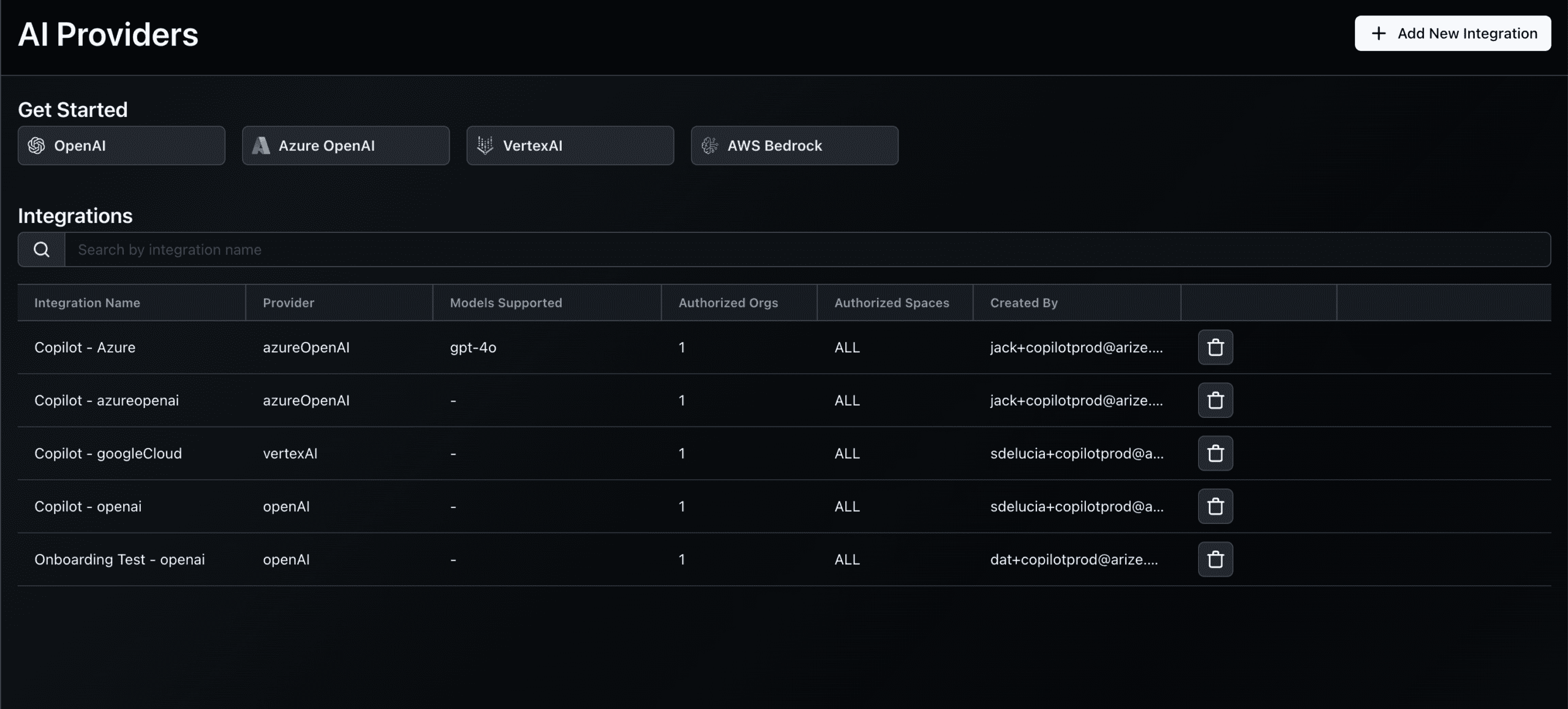

Integrations Revamp

This release delivers major improvements to how integrations are managed, scoped, and configured. Integrations can now be targeted to specific orgs and spaces, and the UI has been refreshed to clearly separate AI Providers from Monitoring Integrations.

A new creation flow supports both simple API-based setups and flexible custom endpoints, including multi-model configurations with defaults or custom names. Users can also add multiple keys for the same provider, enabling more granular control and easier management at scale.
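One way to picture the new scoping and multi-key model is as plain configuration records. Everything in the sketch below is a hypothetical assumption for illustration, not Arize's integration schema: the `ProviderIntegration` and `ModelEntry` shapes, the `keysFor` and `pickModel` helpers, the example names, and the rule that an empty `spaceIds` list means every space in the listed orgs.

```typescript
// Hypothetical configuration shapes, NOT Arize's integration schema.

interface ModelEntry {
  name: string; // model name, or a custom name for a custom endpoint
  isDefault?: boolean; // used when no model is requested explicitly
}

interface ProviderIntegration {
  provider: string; // several integrations (keys) may share one provider
  keyName: string; // label for this particular API key
  orgIds: string[]; // orgs the integration is scoped to
  spaceIds: string[]; // assumption: an empty list means every space in those orgs
  models: ModelEntry[];
}

// Names of the keys for a provider that are visible from a given org and space.
function keysFor(all: ProviderIntegration[], provider: string, orgId: string, spaceId: string): string[] {
  return all
    .filter((i) => i.provider === provider && i.orgIds.includes(orgId))
    .filter((i) => i.spaceIds.length === 0 || i.spaceIds.includes(spaceId))
    .map((i) => i.keyName);
}

// The requested model if it is configured, the default when none is requested, else undefined.
function pickModel(integration: ProviderIntegration, requested?: string): string | undefined {
  if (requested !== undefined) {
    return integration.models.find((m) => m.name === requested)?.name;
  }
  return integration.models.find((m) => m.isDefault)?.name;
}

// Usage: two keys for the same provider, one scoped to a single space.
const integrations: ProviderIntegration[] = [
  {
    provider: "openai",
    keyName: "team-a-key",
    orgIds: ["org-1"],
    spaceIds: ["space-a"],
    models: [{ name: "default-model", isDefault: true }, { name: "my-custom-name" }],
  },
  {
    provider: "openai",
    keyName: "shared-key",
    orgIds: ["org-1"],
    spaceIds: [],
    models: [{ name: "shared-model", isDefault: true }],
  },
];
```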

Structured Outputs Support for Playground

This release adds full structured output support to the playground, giving users precise control over the fields an LLM must return. Models that implement the OpenAI API schema (including OpenAI, Azure, and compatible custom endpoints) now support structured outputs end-to-end. When saving prompts, the structured output JSON is stored alongside other LLM parameters for seamless reuse. Tooltips have also been added to clearly indicate when a model or provider does not support structured outputs.
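For reference, the sketch below builds a structured-output request body in the OpenAI Chat Completions shape, where the schema goes in `response_format` with `type: "json_schema"`. With `strict: true`, OpenAI expects every property to appear in `required` and `additionalProperties: false`. The ticket-classification schema, the prompt, and the `hasRequiredFields` helper are illustrative assumptions, and the helper only checks field presence and primitive types, not the full JSON Schema.

```typescript
// Sketch of an OpenAI-style Chat Completions request using structured outputs.
// The schema, prompt, and helper below are illustrative assumptions.

const ticketSchema = {
  type: "object",
  properties: {
    category: { type: "string", enum: ["billing", "bug", "other"] },
    urgent: { type: "boolean" },
    summary: { type: "string" },
  },
  required: ["category", "urgent", "summary"], // strict mode: list every property
  additionalProperties: false, // strict mode: no extra fields allowed
} as const;

const requestBody = {
  model: "gpt-4o-2024-08-06",
  messages: [{ role: "user", content: "Classify this ticket: 'I was charged twice this month.'" }],
  response_format: {
    type: "json_schema",
    json_schema: { name: "ticket", strict: true, schema: ticketSchema },
  },
};

// Minimal client-side check: every required field is present with the
// declared primitive type. Enum values are NOT checked.
function hasRequiredFields(reply: Record<string, unknown>): boolean {
  return ticketSchema.required.every((key) => {
    const expected = ticketSchema.properties[key].type;
    return typeof reply[key] === expected;
  });
}
```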

Questions?

Feel free to drop us a line in Arize’s Slack community, or book a demo.