In this post, we’ll explore how Continuous Integration and Continuous Deployment (CI/CD) can be used to evaluate large language models (LLMs) effectively. By integrating LLM evaluations into your CI/CD pipelines, you can ensure consistent, reliable AI performance and automate experimental results from your AI applications.

Watch

How to Set Up a CI/CD Pipeline for LLM Evaluations

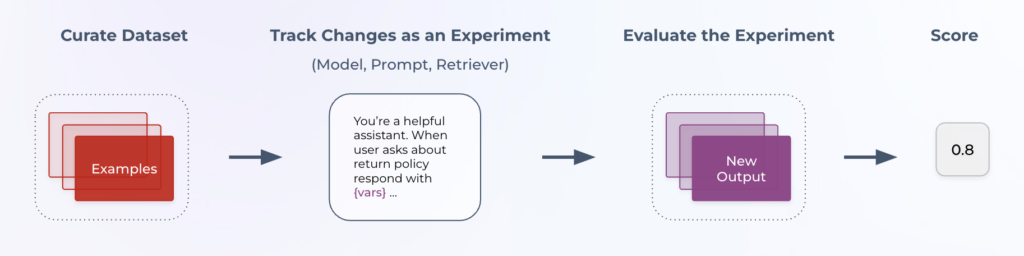

Setting up a CI/CD pipeline to evaluate LLMs requires thinking beyond traditional software workflows. LLMs can produce a wide range of outputs, and traditional assertion or unit tests cannot correctly identify successes and failures. Typical methods to evaluate LLM applications consist of either comparing outputs against a ground truth value, or using a separate LLM as a Judge to classify a response. We won’t cover those in detail in this article, but for more on LLM evaluation, see our full guide.

For this example, we’ll use Arize Phoenix’s experiments API. This will let us structure a test that will run via our CI pipeline.

- Create a dataset of test cases in Phoenix. This can be done via code or from a CSV.

- Create a task that represents the work your system is doing. This could be a call to an agent you’re testing, or something more basic like a single LLM call mimicking part of your application. This task should be a single method.

- Create one or more evaluators to measure the outputs of your task. A simple option here is to compare the expected output from your dataset to the actual output of your task. A more complex version would involve making an LLM as a Judge call at this stage.

- Create a method like the one below to run our experiment and test the result:

def run_and_test_experiment(): experiment = run_experiment( dataset, task=YOUR_TASK, evaluators=[YOUR_EVALUATOR] ) return experiment.get_evaluations()["score"].mean() > 0.8 - Finally, add a yml file matching the code below to prepare your script as for CI/CD:

name: Arize Phoenix Evaluator on: push: paths: - backend/** jobs: run-script: runs-on: ubuntu-latest env: OPENAI_KEY: ${OPENAI_API_KEY} steps: - name: Checkout repository uses: actions/checkout@v2 - name: Set up Python uses: actions/setup-python@v2 with: python-version: '3.10' - name: Install dependencies run: | pip install -q arize-phoenix==4.21.0 nest_asyncio packaging openai 'gql[all]' - name: Run script run: python ./path/to/your/script.py

Best Practices for Implementing CI/CD in LLM Evaluations

- Automate LLM Evaluation in CI/CD Pipelines

Automation is the key to reliability. Whether you’re retraining a model or adding a new skill, every step that can be automated—should be. Use tools like Jenkins, GitHub Actions, or GitLab CI to manage these processes. - Combine Quantitative and Qualitative Evaluations for LLMs

Evaluating an LLM isn’t just about numbers; qualitative evaluation is just as important. Automated testing might focus on response times, while human-in-the-loop processes collect feedback on usefulness or hallucination levels. - Use Version Control for Models, Data, and CI/CD Configurations

It’s not only code that needs version control—models and datasets do too. Incorporate tools that can snapshot both the model weights and evaluation datasets, ensuring every model version can be reproduced and evaluated consistently.

How Arize Can Improve Your CI/CD Pipeline for LLM Evaluations

Arize offers a layer of observability that integrates with your CI/CD pipeline—helping track model health, evaluate qualitative feedback, and automate LLM testing. With Arize Phoenix, you can add continuous evaluation for LLMs that includes detailed tracing, human-in-the-loop feedback, and in-depth performance analysis—bridging the gap between model experimentation and real-world deployment.

Ready to Build Reliable CI/CD for Your LLMs?

If you’re interested in learning how Arize can help you incorporate LLM evaluations into your CI/CD pipeline and take your AI application reliability to the next level, get started with Arize Phoenix today.