Retrieval-Augmented Generation (RAG) has become a cornerstone in AI applications, and as our needs grow, more complex, traditional RAG approaches are showing their limitations. Enter Agentic RAG, which introduces intelligent agents into the retrieval process.

Let’s talk about what it is, how it works, and why monitoring and observability are key parts of the process.

Tutorial: Trace an Agentic RAG App

This companion notebook will help you build and trace an agentic RAG system using LlamaIndex’s ReAct agent framework combined with vector and SQL query tools, and Arize Phoenix. Go to the notebook.

Watch: Agentic RAG Overview

How RAG Works: A Quick Recap

Let’s start with a quick refresh on traditional RAG, which is kind of like a librarian finding the perfect book for you.

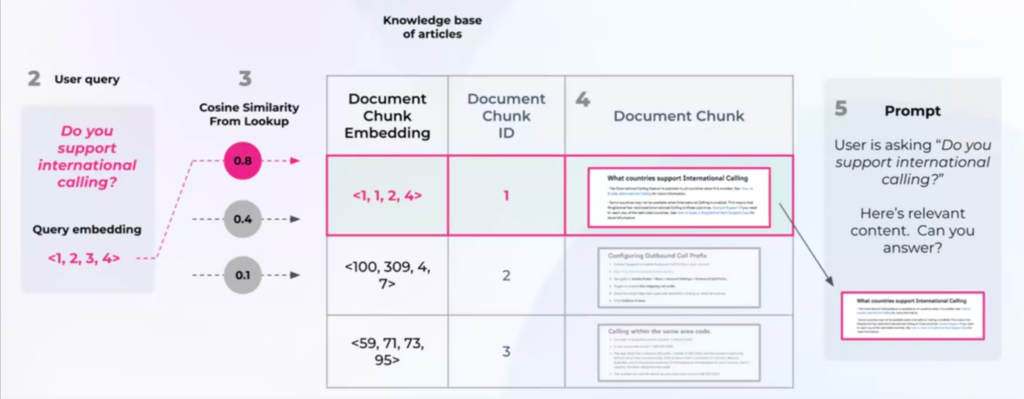

RAG implements a vector-based retrieval process that begins with the transformation of documents into dense vector embeddings. These are then indexed in a vector store. When processing a user query, the system computes an embedding for the input and performs semantic similarity computations (typically using cosine similarity metrics) against the stored document embeddings.

The highest-scoring documents are then retrieved and concatenated into the context window of the prompt, providing the foundation for the language model’s response generation. While this architecture has proven effective for straightforward retrieval tasks, it presents limitations when dealing with heterogeneous data sources or complex, multi-step queries that require more nuanced retrieval strategies. You can read about tracing and evaluating RAG in our docs here.

While this approach works well for simple use cases, it faces challenges when dealing with multiple data sources or complex queries. Traditional RAG often struggles with multi-hop questions that require retrieving information from different parts of the knowledge base or even different data sources sequentially. For instance, a user might ask, ‘What is our return policy for items bought with a discount code I received last month?’ This requires first identifying the general return policy and then applying the specifics related to discount codes and potentially the timeframe of the promotion – a multi-step process that traditional RAG often handles poorly.

Agentic RAG: Adding Intelligence to Retrieval

At its core, Agentic RAG can be defined as a retrieval-augmented generation framework that leverages autonomous agents to dynamically orchestrate the retrieval of relevant context based on the complexity and nuances of the user query. These agents employ reasoning and decision-making capabilities to select appropriate retrieval tools and strategies, going beyond simple vector similarity searches.

Agentic RAG introduces AI agents into the retrieval process, acting as intelligent intermediaries between user queries and data sources.

These agents can:

- Determine if external knowledge sources are needed at all

- Choose which specific data sources to query based on the question

- Evaluate if the retrieved context actually helps answer the user’s question

- Decide whether to try alternative retrieval strategies if initial results are inadequate

What are the Key Characteristics of Agentic RAG?

Here is an overview of some key characteristics of agentic RAG.

Dynamic Retrieval

In contrast to traditional RAG, where the retrieval process is often a fixed sequence of embedding and similarity search, Agentic RAG empowers intelligent agents to adapt their retrieval strategy on the fly based on the nuances of the user query. This adaptability manifests in several ways:

- Conditional Retrieval: Agents can determine if retrieval is even necessary. For simple questions that can be answered from the model’s internal knowledge, the agent might bypass external data sources entirely.

- Adaptive Granularity: Depending on the query’s complexity, agents can adjust the granularity of the retrieved information. For a broad question, they might initially retrieve high-level summaries, while a specific question might trigger the retrieval of very granular document sections or individual data points.

- Iterative Refinement: If the initial retrieval doesn’t yield satisfactory results, agents can iteratively refine their search parameters, try different retrieval methods, or even query related concepts to broaden or narrow the search space.

- Source Prioritization: Agents can learn or be configured to prioritize certain data sources based on the query’s context or the historical success rate of those sources for similar queries. For instance, for a question about recent events, an agent might prioritize a real-time news API over a static document repository.

Tool Usage

A defining feature of Agentic RAG is the agent’s ability to leverage a diverse set of “tools” to access and retrieve information. These tools extend beyond simple vector stores and enable a much richer and more versatile retrieval process:

- Vector Stores: Agents can utilize vector databases (like Chroma, Pinecone, FAISS) for semantic similarity search, just like in traditional RAG. However, they can strategically choose which vector store to query based on the topic of the question.

- SQL Databases: Agents equipped with natural language to SQL translation capabilities can query structured data stored in relational databases (like PostgreSQL, MySQL). This allows them to retrieve specific facts and figures based on semantic understanding.

- APIs (Application Programming Interfaces): Agents can interact with external APIs to fetch real-time data, such as weather information, stock prices, news feeds, or data from specialized services. This significantly expands the scope of information the system can access.

- Web Search: Agents can be equipped with the ability to perform web searches to gather information that might not be present in internal knowledge bases. This is particularly useful for open-ended or exploratory queries.

- Specialized Tools: The “tool” concept can be extended to include custom functions or modules designed for specific retrieval tasks, such as accessing file systems, querying knowledge graphs, or interacting with specific enterprise systems. The agent acts as an orchestrator, deciding which tool is most appropriate for each part of the information-gathering process.

Reasoning and Planning

Agentic RAG goes beyond simply retrieving relevant documents; the agents exhibit a degree of reasoning and planning to fulfill the user’s request effectively.

- Intent Recognition: Agents analyze the user’s query to understand the underlying intent and the specific information being sought. This is crucial for selecting the right tools and retrieval strategies.

- Decomposition of Complex Queries: For multi-faceted questions, agents can break down the query into smaller, more manageable sub-tasks. Each sub-task might require a different retrieval strategy or tool.

- Step-by-Step Planning: Agents can formulate a plan outlining the sequence of retrieval steps needed to gather all the necessary information. This might involve querying multiple sources in a specific order or iteratively refining the search based on intermediate results.

- Conditional Logic: Agents can employ conditional logic (if-then-else rules or more complex decision-making processes) to determine the next course of action based on the outcome of previous retrieval steps. For example, if the initial vector search yields low-confidence results, the agent might decide to try a keyword-based search or consult a different data source.

Context Evaluation

After retrieving information from various sources, Agentic RAG agents play a crucial role in evaluating the relevance and quality of the retrieved context:

- Relevance Scoring: Agents can employ various techniques (potentially leveraging language models themselves) to score the retrieved documents or data snippets based on their relevance to the original user query and the specific sub-task they were intended to address.

- Factuality and Reliability Assessment: In more sophisticated systems, agents might attempt to assess the factuality and reliability of the retrieved information, potentially by cross-referencing information from multiple sources or using external knowledge.

- Redundancy Detection: Agents can identify and filter out redundant information retrieved from different sources, ensuring that the context passed to the generation model is concise and focused.

- Contextual Coherence: When retrieving information from multiple steps or sources, agents can evaluate the coherence and consistency of the combined context to ensure it provides a unified and logical foundation for the language model’s response.

- Sufficiency Check: Agents can determine if the retrieved context is sufficient to answer the user’s query comprehensively. If not, they might initiate further retrieval steps or inform the user about the limitations.

By incorporating these key characteristics, Agentic RAG systems can handle a wider range of complex queries, integrate diverse data sources more effectively, and ultimately provide more accurate and helpful responses compared to traditional RAG approaches.

What’s the Difference Between Single and Multi-Agent RAG?

The core distinction between single and multi-agent Agentic RAG lies in how the responsibility for the intelligent retrieval process is distributed.

Single Agent: One agent handles all aspects of retrieval: query analysis, tool selection, execution, and context evaluation. It’s simpler to implement initially but can become a bottleneck for complex tasks and diverse data. Think of a versatile individual handling everything.

Multi-Agent: Multiple specialized agents collaborate, each focusing on specific tasks (e.g., querying a specific database type, handling API calls). A central agent might coordinate. This offers better specialization, scalability, and modularity for complex scenarios but introduces more implementation and coordination challenges. Think of a team of experts working together. So multi-agent RAG might look like this:

- One agent for internal knowledge base queries

- Another agent for external API calls

- Additional agents for specialized tools and operations

| Feature | Single Agent | Multi-Agent |

|---|---|---|

| Complexity | Lower initial complexity | Higher implementation complexity |

| Specialization | Limited | High, optimized per task |

| Scalability | Potential bottleneck | Better for high query loads |

| Modularity | Less flexible for new tools | More modular and maintainable |

| Coordination | Simple (internal) | Requires careful management |

| Debugging | Easier to trace initially | More challenging to trace |

Practical Implementation: A Real-World Example

Let’s look at a practical implementation of Agentic RAG using LlamaIndex. Consider an internal company system that needs to handle both employee information and company policies.

Architecture Components

The implementation’s foundation rests on a dual-database architecture that leverages both vector and relational paradigms. The system employs Chroma as the vector store for managing company policy documents, while PostgreSQL serves as the relational backbone for structured employee data.

This data architecture means we need specialized query engines: a natural language SQL query engine interfaces with PostgreSQL, translating semantic queries into structured SQL, while a vector query engine handles document retrieval operations through Chroma.

The agent layer sits on top of this infrastructure, configured with specific context parameters that define its operational boundaries and decision-making capabilities. The agent’s architecture incorporates detailed tool descriptions that serve as a decision framework for selecting appropriate data sources, complemented by integration with GPT-3.5 Turbo for sophisticated reasoning capabilities. This configuration enables the agent to dynamically select between the vector and relational query engines based on the semantic requirements of incoming queries.

Monitoring and Improvement with Observability

One crucial aspect of implementing Agentic RAG is the ability to monitor and improve its performance. Tools like Arize Phoenix can help by:

- Tracing query paths and tool selections

- Monitoring document retrieval accuracy

- Identifying potential improvements in retrieval strategies

- Debugging incorrect tool selections or document retrievals

Best Practices and Considerations

When implementing Agentic RAG, consider these key points:

- Clear Tool Descriptions: Provide detailed descriptions of each tool’s capabilities to help the agent make informed decisions

- Robust Testing: Verify that agents are selecting the correct tools and retrieving appropriate documents

- Document Quality: Ensure your knowledge base documents contain sufficient context for accurate retrieval

- Monitoring Strategy: Implement comprehensive observability to track and improve system performance

Agentic RAG represents a significant advancement in how we approach information retrieval and question-answering systems. By introducing intelligent agents into the retrieval process, we can handle more complex queries across multiple data sources while maintaining accuracy and relevance. The combination of traditional RAG capabilities with agent-based decision-making opens up new possibilities for building more sophisticated AI applications. As this technology continues to evolve, we can expect to see even more innovative implementations and use cases emerge.

Get started in your observability journey with our open source solution Arize Phoenix.