Why AI Engineers Need a Unified Tool for AI Evaluation and Observability

AI engineers today face a growing challenge: bridging the gap between development and production while ensuring high performance across diverse AI model types—whether it’s generative AI, traditional machine learning (ML), or computer vision (CV).

Traditionally, development and production have been treated as separate phases, but in reality, they are deeply interconnected:

- Development informs production by ensuring robust experimentation and evaluation before deployment.

- Production fuels iterative development by surfacing real-world feedback, errors, and performance regressions that guide model improvements.

AI teams need a single platform that unifies these two phases, allowing them to seamlessly develop, evaluate, monitor, and iterate—without silos or blind spots.

This is where Arize’s unified AI observability and evaluation platform comes in. Built specifically for AI engineers, Arize provides end-to-end observability, evaluation, and troubleshooting capabilities across all AI model types, enabling teams to:

- Develop with confidence by testing and benchmarking performance before launch.

- Monitor and debug production applications with streamlined workflows.

- Use online production data for continuous experimentation and iterative development.

By connecting development and production in a single feedback loop, Arize ensures that AI engineers can iterate faster and deploy AI applications with confidence.

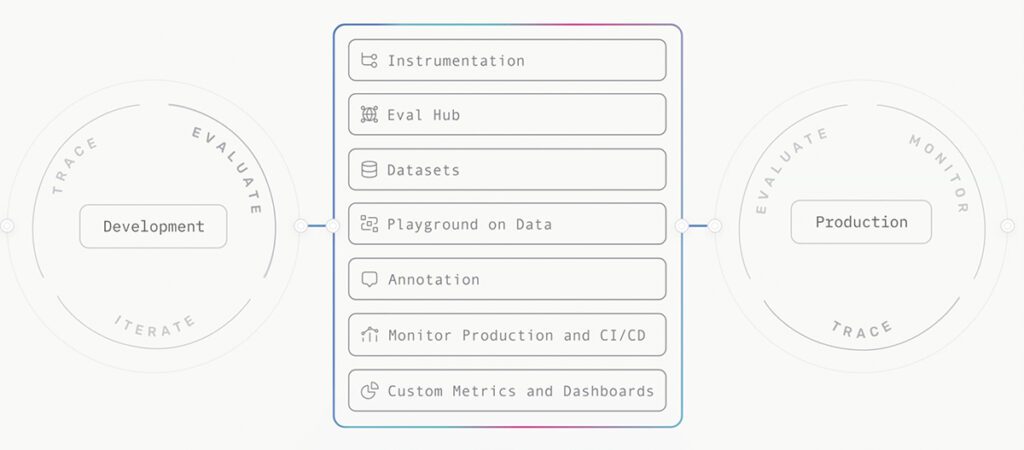

The AI Development-Production Feedback Loop

Arize is designed to break down the barriers between development and production, creating a continuous improvement cycle:

(1) Development Informs Production

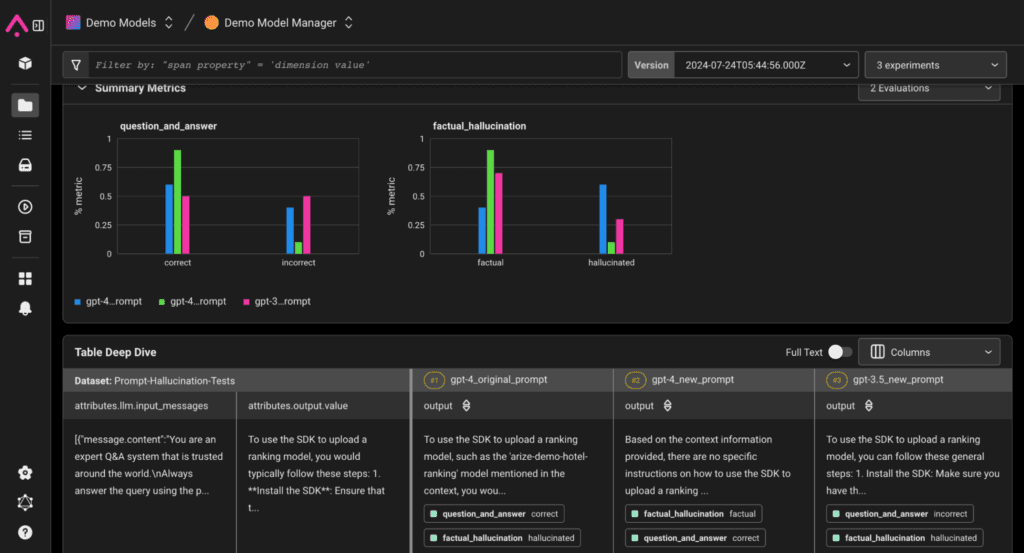

- Run experiments and compare their results to test your evaluation metrics, prompts, models, and more, so you can deploy with confidence.

- Define evaluation benchmarks and standardize frameworks for both offline and online evaluation, ensuring consistency across development and production.

- Curate datasets and organize traces and spans from development data, human annotations, or uploaded CSVs, so you can check that application changes don’t trigger unintended cascading effects.

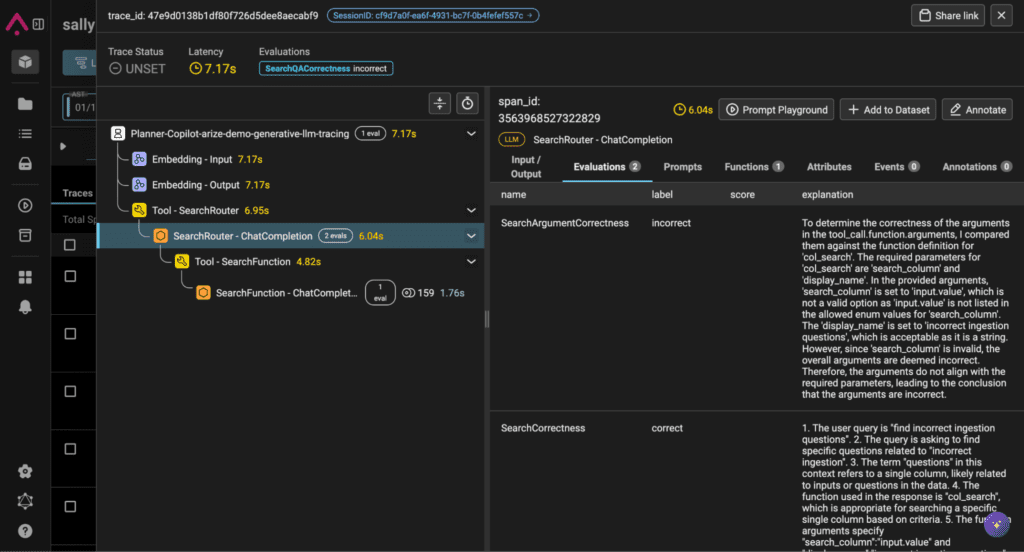

- Trace your application to get full visibility for debugging function calls, poor retrieval, hallucinations, latency, and more before real-world users interact with it.

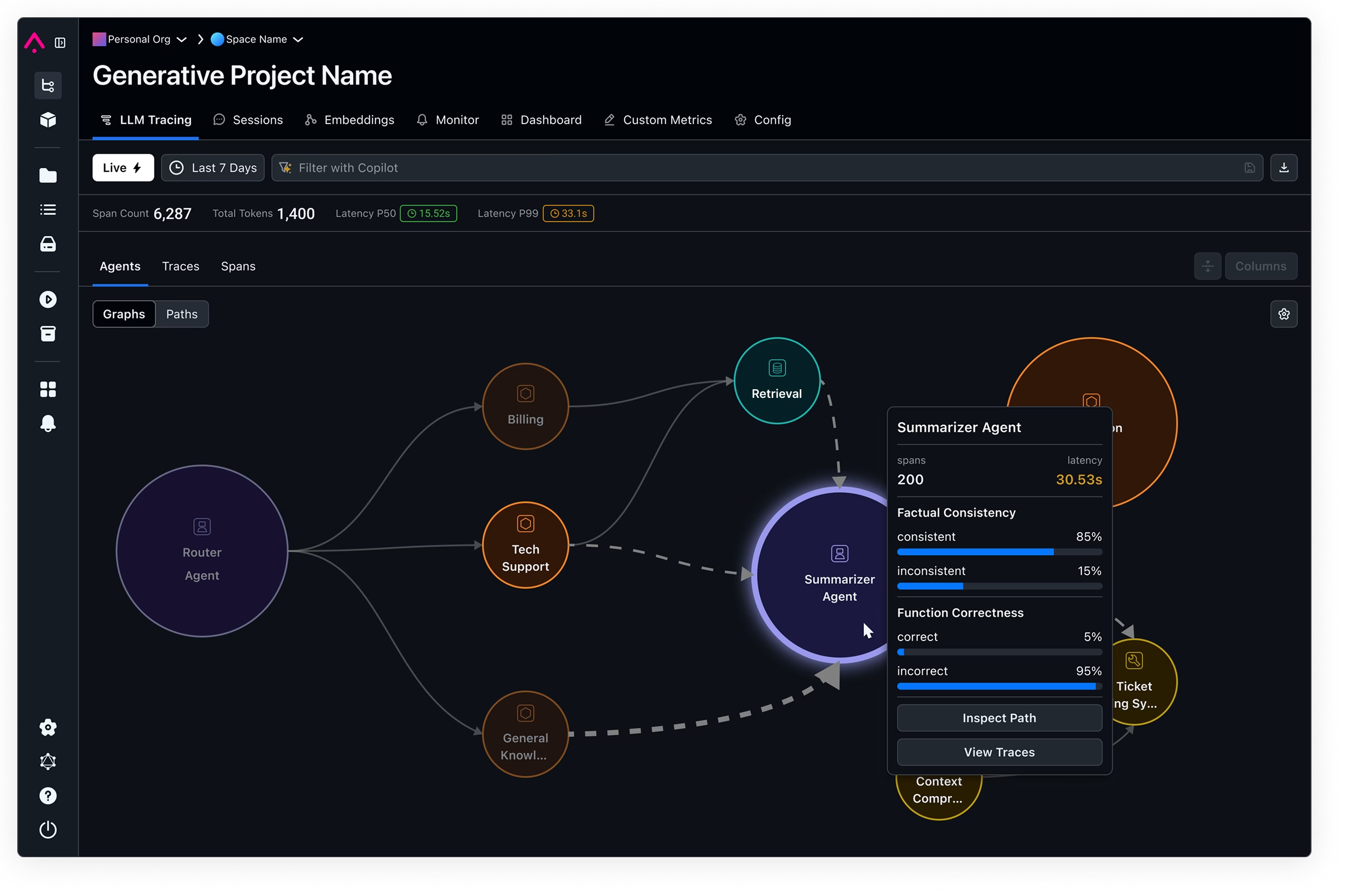
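To make the experiment workflow above concrete, here is a minimal sketch in plain Python. It does not use the Arize SDK: the dataset, the two application variants, and helper names such as `run_experiment` and `exact_match` are hypothetical stand-ins chosen for illustration.

```python
from statistics import mean

# Hypothetical evaluation dataset: inputs paired with expected outputs.
DATASET = [
    {"input": "capital of France", "expected": "Paris"},
    {"input": "capital of Japan", "expected": "Tokyo"},
    {"input": "capital of Kenya", "expected": "Nairobi"},
]


def baseline_app(text):
    """Stand-in for the current version of an application (prompt + model)."""
    answers = {"capital of France": "Paris", "capital of Japan": "Kyoto"}
    return answers.get(text, "unknown")


def candidate_app(text):
    """Stand-in for a proposed change to the prompt or model."""
    answers = {
        "capital of France": "Paris",
        "capital of Japan": "Tokyo",
        "capital of Kenya": "Nairobi",
    }
    return answers.get(text, "unknown")


def exact_match(output, expected):
    """A simple evaluator: 1.0 when the output matches, otherwise 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_experiment(app, dataset, evaluator):
    """Run one variant over the dataset and score every row."""
    rows = []
    for example in dataset:
        output = app(example["input"])
        rows.append({
            "input": example["input"],
            "output": output,
            "score": evaluator(output, example["expected"]),
        })
    return {"rows": rows, "mean_score": mean(row["score"] for row in rows)}


baseline = run_experiment(baseline_app, DATASET, exact_match)
candidate = run_experiment(candidate_app, DATASET, exact_match)

# Inputs where the candidate scores lower than the baseline are regressions.
regressions = [
    b["input"]
    for b, c in zip(baseline["rows"], candidate["rows"])
    if c["score"] < b["score"]
]

print(f"baseline={baseline['mean_score']:.2f} candidate={candidate['mean_score']:.2f}")
print("regressions:", regressions)
```

Running both variants against the same dataset with the same evaluator is what makes the comparison meaningful. In practice the exact-match check would likely be replaced by whatever evaluation metric the team has standardized on.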

(2) Production Fuels Iterative Development

- With end-to-end observability across every step of your AI application or model, gain a comprehensive view of user interactions, automatically detect failure cases, and pinpoint performance issues that need attention.

- Use LLM evaluations and guardrails to detect hallucinations, agentic failures, and unexpected regressions early, preventing issues before they impact users.

- Identify and curate production insights using AI Search and Annotations, seamlessly feeding them into Datasets and Experiments to drive continuous improvement and refinement.
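As a rough illustration of the curation step above, the sketch below filters production records that were flagged by an evaluation or that exceeded a latency budget, then writes them out as a CSV dataset. It is plain Python, not the Arize SDK; the field names (`span_id`, `eval_label`, `latency_ms`), the sample records, and the 3000 ms budget are all assumptions.

```python
import csv
import io

# Hypothetical production records; field names and values are illustrative only.
PRODUCTION_SPANS = [
    {"span_id": "a1", "input": "Reset my password",
     "output": "Go to Settings > Security.",
     "eval_label": "factual", "latency_ms": 420},
    {"span_id": "b2", "input": "What is your refund policy?",
     "output": "Refunds are available for 90 years.",
     "eval_label": "hallucinated", "latency_ms": 510},
    {"span_id": "c3", "input": "Summarize my invoice",
     "output": "Your invoice total is $42.",
     "eval_label": "factual", "latency_ms": 6200},
]

LATENCY_BUDGET_MS = 3000  # assumed threshold; tune per application


def review_reason(span):
    """Return why a span needs review, or None if it looks healthy."""
    if span["eval_label"] == "hallucinated":
        return "hallucination"
    if span["latency_ms"] > LATENCY_BUDGET_MS:
        return "latency"
    return None


def curate(spans):
    """Keep only the spans that need review, tagged with the reason."""
    curated = []
    for span in spans:
        reason = review_reason(span)
        if reason is not None:
            curated.append({
                "span_id": span["span_id"],
                "input": span["input"],
                "output": span["output"],
                "reason": reason,
            })
    return curated


def to_csv(rows):
    """Serialize curated rows to CSV text for a later experiment or retraining run."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["span_id", "input", "output", "reason"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


curated = curate(PRODUCTION_SPANS)
csv_text = to_csv(curated)
print(csv_text)
```

The resulting rows can serve as a regression dataset: each fix to the application is rerun against the cases that failed in production.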

(3) Continuous Improvement & Deployment

- Fine-tune models based on production feedback by curating data into Datasets, with the ability to export refined data for retraining and continuous improvement.

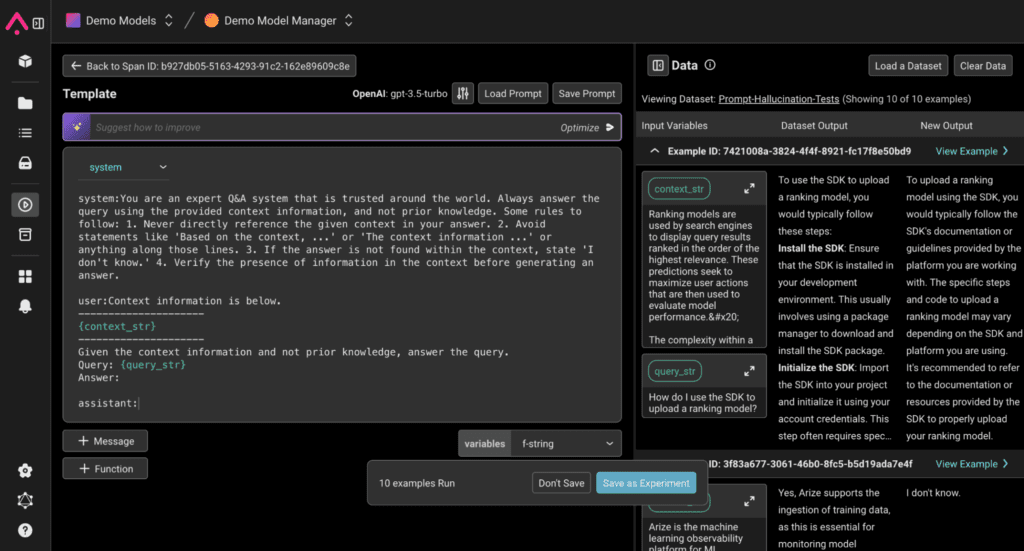

- Test and refine evaluations and prompts using Prompt Playground, leveraging real-world inputs to optimize performance. Store all versions in Prompt Hub and Eval Hub for seamless access, comparison, and iteration.

One Unified Platform Across Your AI Portfolio

Arize supports the full spectrum of AI-powered systems and applications, whether you’re working with:

GenAI

- Debug function calling, RAG, and multi-modal applications.

- Detect hallucinations and toxicity, and evaluate accuracy and coherence.

- Run self-improving evaluations and prompts.

Computer Vision (CV)

- Track drift in embeddings and in object detection and segmentation models.

- Surface edge-case failures across real-world datasets.

- Debug CV pipelines with frame-by-frame analysis.

Machine Learning (ML)

- Monitor tabular, time-series, and recommendation models at scale.

- Detect data drift, feature importance shifts, and model decay.

- Automatically curate datasets for retraining based on production issues.
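For readers new to drift detection, one widely used way to quantify data drift on a single numeric feature is the population stability index (PSI). The sketch below is a generic standard-library implementation for illustration and is not a description of how Arize computes drift; the bin count, the smoothing constant `eps`, and the sample data are assumptions.

```python
import math


def psi(reference, production, bins=10, eps=1e-6):
    """Population stability index between a reference and a production sample.

    Bins are equal-width over the reference range; production values outside
    that range are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width for a constant feature

    def proportions(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            index = min(max(index, 0), bins - 1)
            counts[index] += 1
        # Floor each proportion at eps so empty bins do not produce log(0).
        return [max(count / len(values), eps) for count in counts]

    ref_p = proportions(reference)
    prod_p = proportions(production)
    return sum((p - r) * math.log(p / r) for r, p in zip(ref_p, prod_p))


# Hypothetical feature values: training-time reference vs. two production windows.
reference = [i / 100 for i in range(100)]
stable_window = list(reference)
shifted_window = [0.5 + i / 200 for i in range(100)]

print("stable:", psi(reference, stable_window))
print("shifted:", psi(reference, shifted_window))
```

A PSI of zero means the two distributions fall into the bins identically, and larger values indicate a bigger shift. Any alerting threshold is a judgment call that is usually tuned per feature.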

Instead of siloed tools for each model type, Arize provides a single pane of glass to monitor, evaluate, and iterate across LLMs, CV, and ML models alike.

Why AI Engineers Choose Arize AI

- One platform from dev to prod – No more disconnected workflows.

- Enterprise-scale deployments – Trusted by leading AI teams.

- AI-powered workflows – Automated debugging & insights.

- Open-source & open standards – Built on OpenTelemetry & OSS evals.

In a world where AI applications are constantly evolving, Arize ensures that every production insight fuels better development—and every update leads to stronger performance in production.

Sign up to explore Arize today or book a demo to learn more about how Arize works for your specific AI use case.