Co-Authored by Sanjana Yeddula, AI Engineer & Aparna Dhinakaran, Co-founder & Chief Product Officer & Sri Chavali, AI Engineer.

—

Technical deep dive inspired by Anthropic’s “Building Effective Agents”

In this piece, we’ll take a close look at the orchestrator–worker agent workflow. We’ll unpack its challenges and nuances, then compare how leading frameworks – Agno, Autogen, CrewAI, OpenAI, LangGraph, and Mastra – approach and implement this pattern.

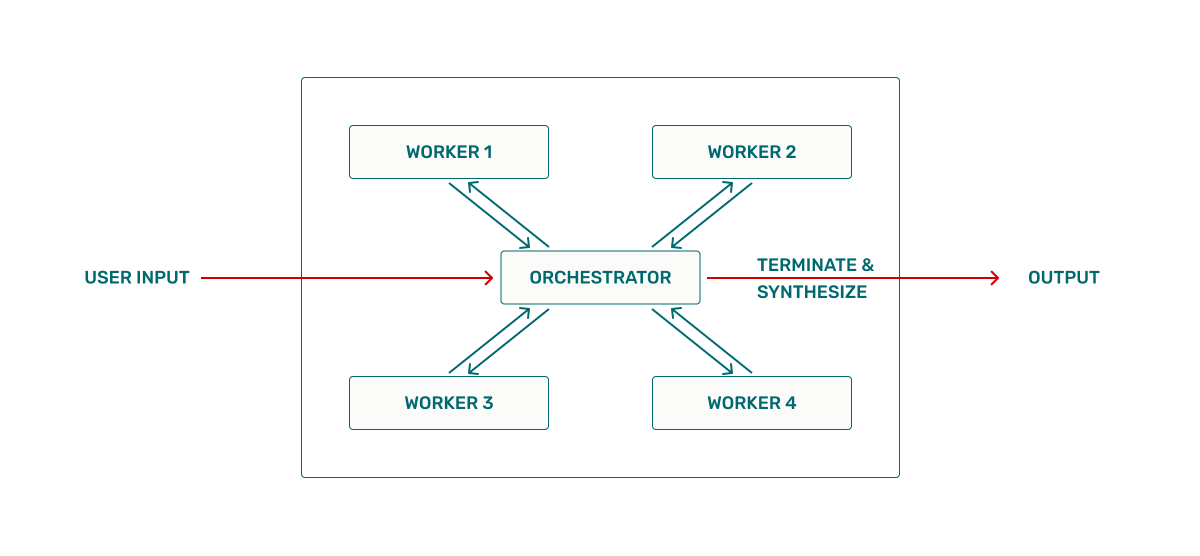

Orchestrator-Worker Architecture

The orchestrator-worker architecture is designed for workflows where problem structure emerges at runtime. A user input S is passed to an orchestrator agent O, which transforms it into a multiset of subtasks {s1,…,sn}. Each subtask si is routed to a corresponding worker agent Wi, which returns a local result ri. The orchestrator observes these results and dynamically determines the next best subtasks or actions, repeating this loop until the final output R is produced.

This approach is distinct from prompt chaining or fixed tool routing. It operates under different assumptions:

- The number and type of subtasks depend on the properties of the input, not on a predefined flow.

- Subtasks may vary in complexity and require different strategies or tools.

- Workers may operate in parallel or sequentially; in some cases, specific models or tools are assigned to subtasks based on their content.

The orchestrator must go beyond simple decomposition. It needs multi-stage reasoning, maintaining a representation of the evolving task graph, tracking which nodes are resolved, deciding when partial results require refinement, and determining when to terminate or recurse.

Execution often unfolds in cycles: generate subtasks, assign workers, collect results, update state, and repeat until the goal is reached.

Challenges

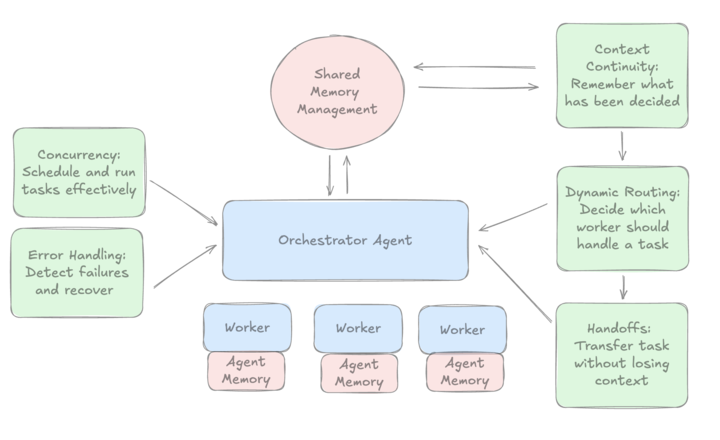

When building an orchestrator–worker agent framework, several key challenges emerge:

- Dynamic Routing: Deciding which worker agent should handle each subtask requires balancing workflow state, subtask complexity, and worker specialization.

- Context Continuity: Agents need to remember what has been decided so far, even across multiple cycles.

- Seamless Handoffs: Tasks must transfer between agents without losing context or duplicating work.

- Error Handling: The system must detect failures and recover gracefully without derailing the workflow.

- Concurrency: Orchestrators must schedule and run agents efficiently, whether tasks are independent or interdependent.

- Memory Management: Managing information across cycles and agents is especially complex. This includes:

- Short-term vs. Long-term State: Distinguishing transient scratchpad data from persistent decisions.

- Context Propagation: Passing only what’s necessary between agents to avoid bloat.

- Storage Patterns: Using in-prompt embeddings, caches, or external stores depending on scale and reliability needs.

We compared common agent frameworks by building minimal orchestrator–worker prototypes in each. The purpose of this comparison is to highlight how the same high-level architecture maps onto different runtimes. We enabled only native routing, handoff, memory, and retry features, avoiding custom heuristics except where required for basic functionality. We inspected execution traces and code to evaluate four pillars and then summarized tradeoffs into a concise verdict for each framework.

Architecture

Multi-agent frameworks may look different on the surface, but under the hood they all solve the same orchestration challenge: how to break down a top-level task, decide which agent should act next, and combine results into a coherent output. Each framework encodes this logic into two main primitives: Orchestrator and Worker.

Some frameworks, like AutoGen, lean into chat-style coordination where agents “talk” until a result emerges. Others, like LangGraph or Mastra, formalize orchestration as graphs or workflows. And few frameworks support both, such as CrewAI. The table below compares how various frameworks implement the orchestrator and worker roles, and highlights what stands out in each approach.

| Framework | Orchestrator primitive Core control unit that routes work |

Worker model How individual agents or tools are represented |

What stands out |

|---|---|---|---|

| Agno (Python) | Team (with coordinate mode set) decides who acts based on instructions and success criteria | Role agents registered as team members | Declarative team that routes without manual stitching; built-in task transfer tool surfaces handoffs in Phoenix. |

| AutoGen / AG2 (Python) | GroupChat Manager selects the next speaker | AssistantAgent, UserProxyAgent (human), other agents inside one chat | Conversation-centric orchestration that emerges from chat history and roles. |

| CrewAI (Python) | Manager agent in hierarchical process plans, delegates, validates | Role-based agents executing YAML or code-defined tasks | Structured ‘team with a manager’ model with a detailed event bus for coordination and visibility. |

| OpenAI Agents (Python/JS) | Runner loop in Agents SDK | Sub-agents registered for handoffs | Handoffs are first-class tool calls with built-in tracing identifiers. |

| LangGraph (Py/TS) | Execution graph with nodes and edges | Supervisor, dynamic workers via Send API, subgraphs |

Graph-native orchestration with hierarchical supervision and modular subgraphs. |

| Mastra (TypeScript) | Supervisor Agent plus Workflows for sequencing and branching | Workers as tools or agents | TypeScript-native with structured streaming and OpenTelemetry spans across runs. |

Execution Model and Handoffs

Beyond how agents are orchestrated conceptually, each framework has its own execution loop and rules for handing off control. These mechanics determine whether workflows feel conversational, procedural, or graph-driven, and they also shape how parallelism is supported.

Two dimensions matter most:

- Execution loop – the control cycle that advances the system until completion (stepwise coordination, chat turns, or graph traversal).

- Handoff form – how one agent (or node) transfers work, context, or control to another.

| Framework | Execution loop (How steps advance end to end) | Handoff form (How control and context move between agents) | Parallelism notes (Concurrency: branching and join strategy) |

|---|---|---|---|

| Agno | Team coordinates actions per step using pre-defined rules and instructions. | Explicit handoffs via methods like transfer_task_to_member(). Handoff includes relevant state and expected output. |

Supports concurrent agents working on different sub-tasks. |

| AutoGen | A manager-led conversation where the manager agent selects the next speaker from the group. | Conversation-driven handoffs; an agent implicitly hands off to another by generating a response that prompts the manager to select a new speaker. | Agents run sequentially by default; true parallelism needs manual orchestration, and max_rounds must be tuned to avoid dead-ends. |

| CrewAI | Central manager delegates tasks to agents and validates their outputs. | Explicit manager-to-agent task handoff with dependencies and shared context. | Supports both in-crew and multi-crew parallelism. |

| OpenAI Agents | The Runner drives tool calls and outputs until completion or stop. | Handoffs are effectively tool calls. handoff_input_filter can be used to limit the forwarded context. |

Execution is sequential by default; broader parallelism requires explicit orchestration. |

| LangGraph | Execution is a directed graph traversal, with nodes as steps and edges defining branching and loops. | Command API moves control across parent/child graphs and subgraphs. | The graph natively supports parallel fan-out and structured fan-in with reducers, enabling efficient concurrent execution. |

| Mastra | A supervisor drives workflow execution, routing steps and tool calls; streams progress through a defined Workflow. | Delegation appears as a tool call, with the supervisor routing agent actions and passing context. | Supports parallel steps within a single Workflow. Also supports suspend-resume for long runs with nested streaming. |

Memory

Memory in multi-agent systems can be analyzed along three core aspects:

- Shared context model – How information flows across agents. Some frameworks offer a global transcript or team-level state, while others externalize context and require explicit passing.

- Per-agent or scoped memory – What each agent retains individually, such as message history, role-specific preferences, allowing specialization without bloating the global context.

- Durability and persistence – Whether memory extends beyond a single run. Some frameworks checkpoint state for resumability, others persist embeddings across sessions, and some leave persistence to the developer.

Together, these dimensions shape how well an agent framework maintains continuity, recall, and grounding over time.

| Framework | Shared context model Global state accessible to all agents |

Per-agent or scoped memory Private or limited-scope state per agent |

Durability and persistence How state survives long runs or restarts |

|---|---|---|---|

| Agno | The Team-level shared global context is automatically synced across members. No need for manual context passing. | Each agent can keep scoped memory; plug in vector stores or DBs. | Persistent global context with minimal glue, though highly custom pipelines may bypass defaults. |

| AutoGen | GroupChat transcript is the primary shared context for all agents. | Each agent stores its own chat history and configurations. This allows for specialized, role-based behaviors. | Use BufferedChatCompletionContext to manage the context window. External RAG at agent or chat level for more durable memory. |

| CrewAI | Shared context can extend beyond the immediate chat history, allowing the Crew and the Manager to share information. | Short-term, long-term, and entity memory which are configurable with different embedding models. Allows for fine-grained control over agent memory. | Memory persists across runs, ensuring continuity for complex workflows |

| OpenAI Agents | The session is the core mechanism for shared context, preserving the conversation history across runs. | The session is the core mechanism for shared context. When agents run under the same session, they automatically share conversation history across runs. | History can be filtered on handoff between workers to prevent data leakage and manage costs. |

| LangGraph | The framework stores state as a graph of nodes and edges, rather than a single shared transcript. | Each thread_id has its own checkpointed state. This enables agents to maintain their individual context within a specific thread. |

Checkpointing enables resumability, time travel, and human‑in‑the‑loop interactions via via interrupt() and resume. |

| Mastra | Context passed via workflows or resource-scoped memory (per user/entity) | Built-in per-agent memory: working memory, message history, semantic recall | Configurable; persists with storage adapters (e.g. LibSQL, Postgres) or ephemeral if none set |

Error Handling

Error handling determines how frameworks recover from failed steps, prevent runaway loops, and surface useful debugging signals. The mechanisms vary widely: some provide guardrails and retries at the task or node level (CrewAI, LangGraph), while others rely on conversation rules or termination checks (AutoGen).

The table below outlines the built-in mechanisms each framework offers, along with known caveats developers should watch for.

| Framework | Built-in mechanisms Native guardrails, retries, and recovery |

Known caveats |

|---|---|---|

| Agno | Team architecture rules reduce custom glue; decision metadata can explain why an agent was chosen | Abstractions could make it difficult to debug race conditions or task collisions in complex, potentially requiring custom orchestration logic to resolve. |

| AutoGen | Termination rules (like a max_rounds limit) and a manager-led turn-taking mechanism prevent infinite loops. The UserProxyAgent can serve as a human-in-the-loop for error resolution. |

The UserProxyAgent can get re-selected repeatedly if the termination conditions aren’t met, potentially leading to a long, unproductive conversation. Long chats risk context loss without buffering. |

| CrewAI | Provides robust guardrails and retries at the task level. Enforces structured outputs to ensure data integrity and includes an event bus for real-time observability. | Enables teams to carefully design agent roles and structured output to ensure clarity, consistency, and easier debugging in complex workflows |

| OpenAI Agents | Features include guardrails and tripwires to prevent unwanted behavior. Tracing can show the flow of a conversation and who handled each part of a handoff. | Rationale behind the assistant’s routing or tool selection can be opaque. Parallelism is supported but requires explicit orchestration with asyncio or SDK helpers. |

| LangGraph | Offers node-level retries, checkpoint-based recovery, and selective resume (e.g. restart only the failed part after an error). | Developers must explicitly define reducers and termination rules to prevent infinite cycles or state conflicts. |

| Mastra | Delegations appear as tool calls within the same run; supports structured streaming, suspend/resume, and OpenTelemetry tracing | Developers can define orchestration and memory/error strategies explicitly. This adds flexibility, allowing teams to tailor error handling to their system needs instead of being locked into a fixed model |

Verdict

- CrewAI: Event-driven manager–worker framework with strong guardrails, retries, and observability. Its event bus and async execution enable meaningful parallelism, while flexible memory types support continuity across runs. Well-suited for production teams that value transparency, control, and scalable coordination.

- Mastra: Strong TypeScript-native framework with built-in streaming, suspend-resume, and OpenTelemetry tracing. Provides a structured memory system (working memory, message history, semantic recall) with defaults for persistence, while allowing developers to configure custom strategies. Offers clear operational visibility and flexible orchestration, giving developers both strong defaults and the freedom to shape state management to their needs.

- Agno: Agno’s strength lies in its declarative coordination: Teams reduce custom glue by enforcing orchestration rules and surfacing handoffs. Memory is simple but persistent, and built-in decision metadata aids transparency. Agno suits developers who want low-friction coordination, though some customization is required for more complex logic.

- OpenAI Agents: Easiest way to get native handoffs, sessions, and guardrails if you are already on the OpenAI stack. This framework is ideal for developers who want tight integration with the OpenAI ecosystem, prioritizing structured APIs over maximal flexibility.

- LangGraph: Best when you want precise control, parallel fan‑out, recursion, and durable state management. Memory is externalized into a checkpointed state, enabling resumability, “time travel,” and human-in-the-loop interventions.

- AutoGen / AG2: AutoGen stands out for its conversation-centric orchestration, where roles and chat history drive emergent behavior. This makes it highly flexible for research use cases. Handoffs between agents rely on the shared conversation context, which can be truncated in long chats, and memory can be managed at both the agent and orchestrator level for continuity.

All demo implementations and minimal orchestrator/worker prototypes live here: github.com/Dylancouzon/orchestrator-agents