Arize AI Launches Bias Tracing, a Tool for Uprooting Algorithmic Bias

Technology helps enterprises quickly get to the bottom of where and why disparate impacts are happening.

In today’s world, it has become all too common to read about AI acting in discriminatory ways. From resume-screening algorithms that disproportionately exclude women and people of color to search engine type-aheads and computer vision systems that amplify racism, examples are unfortunately easy to find. As machine learning models get more complex, the true scale of this problem and its impact on marginalized groups are not fully understood.

For most teams, biases going undetected in production systems is a nightmare scenario. It’s also a problem that eludes easy answers, since simply removing a protected attribute (e.g. race) as a feature is insufficient. In practice, data scientists often unwittingly encode biases through their exploration of the data or inherit historical biases present in the data itself. Real-world examples abound, from home valuation predictions that reflect the continued legacy of redlining to models predicting healthcare needs that reflect racial inequality in income and access to care.

To date, existing solutions built to monitor fairness metrics for ML models lack actionability. While several tools provide dashboards to monitor fairness metrics in the aggregate, they often leave a lot of unknowns when teams want to take the next step, click a level deeper, and figure out why the problem is occurring.

In short, teams need tracing. In system observability, tracing – or following the lifecycle of a request or action across systems – is pivotal for measuring overall system health and detecting and resolving issues quickly. ML performance tracing is a similar but distinct discipline: pinpointing the source of a model performance problem.

Arize Bias Tracing is uniquely purpose-built to help data science and machine learning teams monitor and take action on model fairness metrics. The solution enables teams to make multidimensional comparisons, quickly uncovering the features and cohorts likely contributing to algorithmic bias without time-consuming SQL querying or painful troubleshooting workflows. Leveraging Arize Bias Tracing, enterprises can easily surface and mitigate the impact of potential model bias issues on marginalized groups.

Early reception of the product has been positive.

Using Arize Bias Tracing & Fairness Metrics

The setup process within Arize is straightforward. To evaluate how your model is behaving on any protected attribute, you select a sensitive group (e.g. Asian) and a base group (e.g. all other values – African American, LatinX, Caucasian, etc.) along with a fairness metric. Then, you can begin to see whether a model is biased against a protected group using methods like the four-fifths (⅘) rule. The four-fifths rule is one threshold used by regulatory agencies like the United States Equal Employment Opportunity Commission to help identify adverse treatment of protected classes. Leveraging the four-fifths rule, teams can measure whether the ratio of a metric for the sensitive group to the same metric for the base group falls outside of the 0.8-1.25 range, which means algorithmic bias may be present in their model (note: there are other statistically robust methods to find adverse treatment that will be a focus of future launches).
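As a rough illustration of the four-fifths check described above, the following Python sketch computes a parity ratio and tests it against the 0.8-1.25 band. The function names and the example rates are hypothetical and chosen only for illustration; this is not Arize's implementation.

```python
def parity_ratio(sensitive_rate: float, base_rate: float) -> float:
    """Ratio of the sensitive group's metric to the base group's metric."""
    if base_rate == 0:
        raise ValueError("base group rate is zero; parity ratio is undefined")
    return sensitive_rate / base_rate


def within_four_fifths(ratio: float, lower: float = 0.8, upper: float = 1.25) -> bool:
    """True when the ratio sits inside the 0.8-1.25 band (1.25 is 1 / 0.8)."""
    return lower <= ratio <= upper


# Hypothetical rates: 30% of the sensitive group receives the positive
# outcome versus 50% of the base group.
ratio = parity_ratio(0.30, 0.50)
flagged = not within_four_fifths(ratio)
print(f"parity ratio = {ratio:.2f}, flagged = {flagged}")
```

The upper bound mirrors the lower one, so the check gives the same answer regardless of which group is placed in the numerator.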

Let’s take a look at some examples of how fairness metrics available in Arize are used to detect bias in machine learning algorithms.

- Recall parity measures how “sensitive” the model is for one group compared to another, or the model’s ability to predict true positives correctly. A regional healthcare provider might be interested in ensuring that their models predict healthcare needs equally between LatinX patients (the sensitive group) and Caucasian patients (the base group). If recall parity falls outside of the 0.8-1.25 range, it may indicate that LatinX patients are not receiving the same level of needed follow-up care as Caucasian patients, leading to different levels of future hospitalization and health outcomes. Distributing healthcare in a representative way is especially important when an algorithm determines an assistive treatment intervention that is only available to a small fraction of patients.

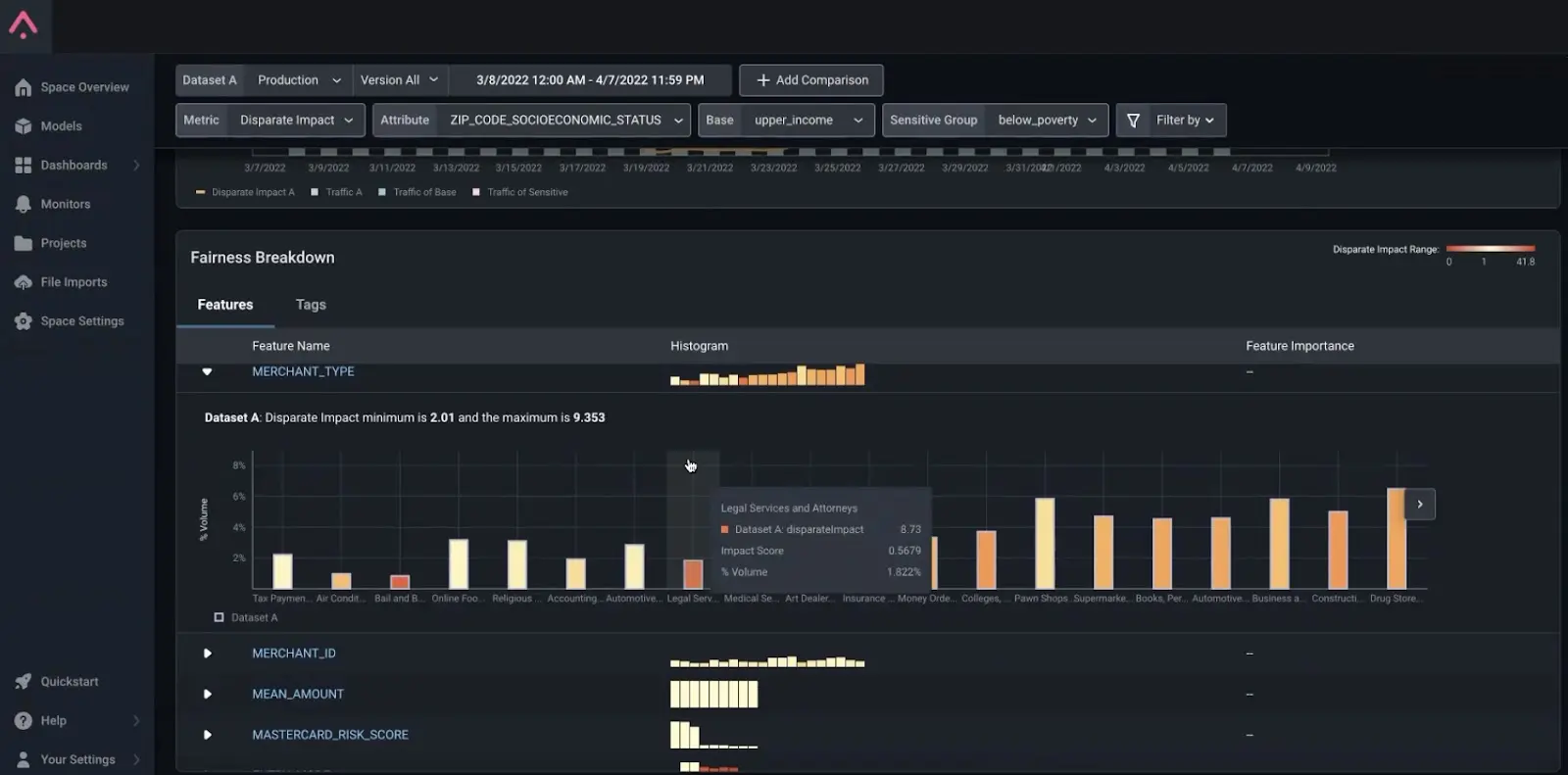

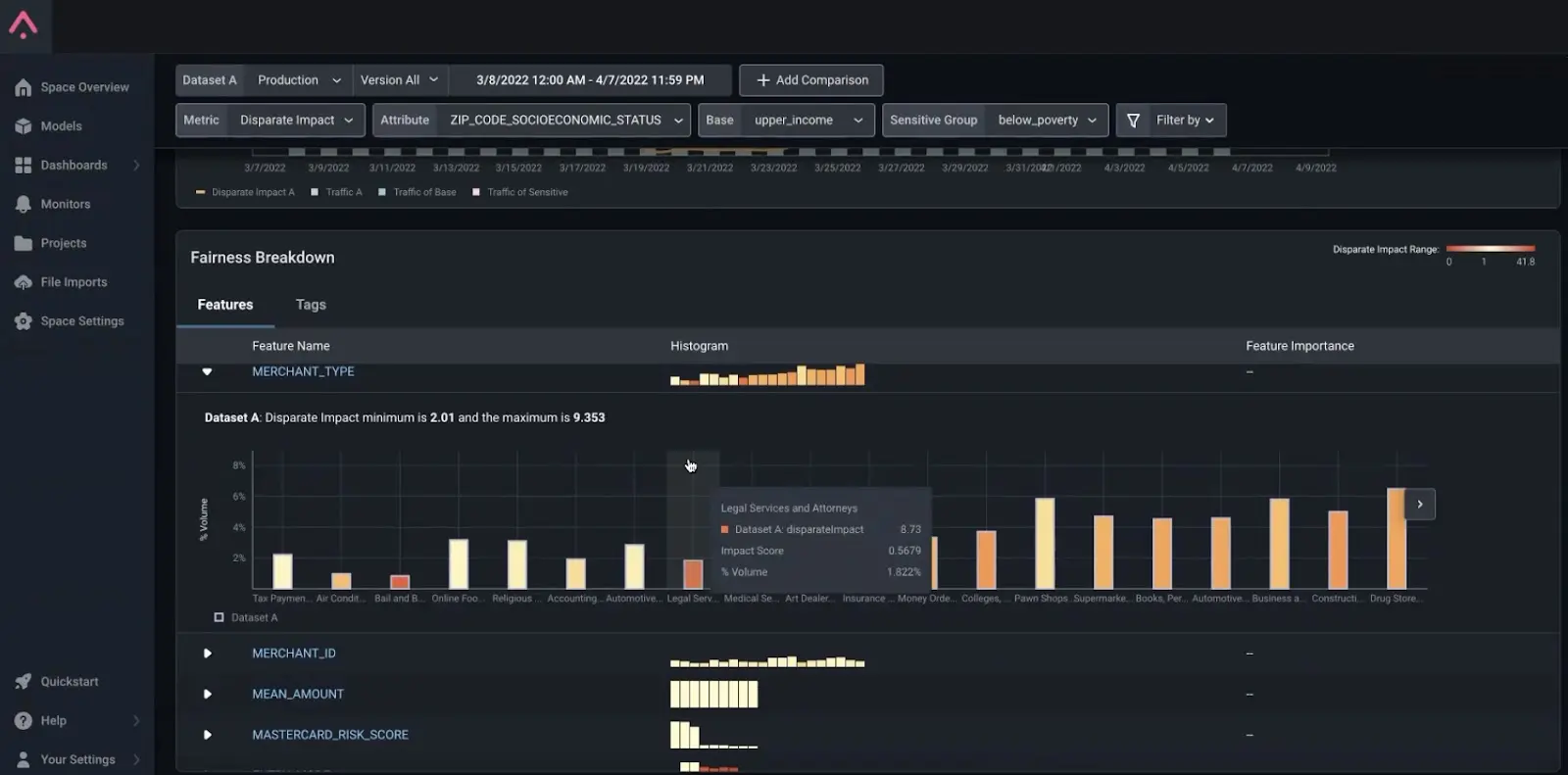

- False positive parity measures whether a model incorrectly predicts something as more likely for a sensitive group than for the base group. In the example above, a financial services company is measuring whether its fraud model is falsely predicting higher rates of fraud for customers in lower-income zip codes (the sensitive group) compared to customers in median-income zip codes (the base group). Since the false positive parity of 7.6 falls far outside the 0.8-1.25 range, it suggests the model is treating customers in lower-income neighborhoods unfairly by declining their credit card transactions at a far higher rate – especially for accounting services, bail bonds, and construction services.

- Disparate impact is a quantitative measure of the adverse treatment of protected classes that compares the pass rate – or positive outcome – of one group versus another. Continuing with the fraud example: if disparate impact falls outside of the 0.8-1.25 range, it may mean that the sensitive group – in this case, people residing in zip codes where a majority live below the poverty line – is experiencing adverse treatment. Clicking a level deeper into the feature “merchant_type” shows that this disparate impact is the most pronounced for certain categories of purchases, such as for bail bonds, legal services, colleges, and even drugstore purchases.
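To make the arithmetic behind the three metrics above concrete, here is a minimal Python sketch. It is not Arize's implementation: the function names, the toy labels, and the choice to treat an unflagged transaction (prediction 0) as the favorable outcome for disparate impact are all assumptions made for illustration.

```python
def recall(y_true, y_pred):
    """Share of actual positives that the model predicted as positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(preds_for_positives) / len(preds_for_positives)


def false_positive_rate(y_true, y_pred):
    """Share of actual negatives that the model predicted as positive."""
    preds_for_negatives = [p for t, p in zip(y_true, y_pred) if t == 0]
    return sum(preds_for_negatives) / len(preds_for_negatives)


def favorable_rate(y_pred, favorable=0):
    """Share of predictions equal to the favorable outcome (assumed: 0)."""
    return sum(1 for p in y_pred if p == favorable) / len(y_pred)


# Toy data (hypothetical): 1 = fraud, 0 = legitimate.
sens_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
sens_pred = [1, 1, 0, 0, 1, 1, 1, 0, 0, 0]
base_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
base_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

# Each parity score is the sensitive group's value over the base group's.
scores = {
    "recall_parity": recall(sens_true, sens_pred) / recall(base_true, base_pred),
    "false_positive_parity": false_positive_rate(sens_true, sens_pred)
    / false_positive_rate(base_true, base_pred),
    "disparate_impact": favorable_rate(sens_pred) / favorable_rate(base_pred),
}

# Flag any score that leaves the 0.8-1.25 band.
outside_band = {name: not (0.8 <= value <= 1.25) for name, value in scores.items()}

for name, value in scores.items():
    print(f"{name}: {value:.2f} (outside band: {outside_band[name]})")
```

Each function divides by a group count, so a group with no actual positives (for recall) or no actual negatives (for false positive rate) would need separate handling in real use.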

The Importance of Multidimensionality

Monitoring fairness over time helps in understanding when a model may be acting in discriminatory ways. ML teams can narrow in on windows of time where a model may have expressed bias and further determine the root causes, whether it’s inadequate representation of a sensitive group in the data or a model demonstrating algorithmic bias despite adequate volume of that sensitive group.
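One way to picture this kind of monitoring is to compute a parity score per time window and flag the windows that leave the 0.8-1.25 band. The Python sketch below does this for false positive parity. The record layout, the `make_rows` helper, the window labels, and the `min_negatives` volume cutoff are all hypothetical; the cutoff stands in for the "inadequate representation" case, where a score is too unreliable to report.

```python
from collections import defaultdict


def fpr_parity_by_window(records, sensitive, base, min_negatives=2):
    """Map each window to its false positive parity, or None if volume is too low."""
    # counts[window][group] = [false_positives, actual_negatives]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for window, group, y_true, y_pred in records:
        if y_true == 0:  # only actual negatives matter for false positive rate
            counts[window][group][1] += 1
            counts[window][group][0] += y_pred
    result = {}
    for window in sorted(counts):
        s_fp, s_neg = counts[window][sensitive]
        b_fp, b_neg = counts[window][base]
        if min(s_neg, b_neg) < min_negatives or b_fp == 0:
            result[window] = None  # too little data, or ratio undefined
        else:
            result[window] = (s_fp / s_neg) / (b_fp / b_neg)
    return result


def make_rows(window, group, false_positives, true_negatives):
    """Hypothetical helper that builds (window, group, y_true, y_pred) rows."""
    return [(window, group, 0, 1)] * false_positives + [(window, group, 0, 0)] * true_negatives


records = (
    make_rows("week-1", "sensitive", 1, 3) + make_rows("week-1", "base", 1, 3)
    + make_rows("week-2", "sensitive", 3, 1) + make_rows("week-2", "base", 1, 3)
    + make_rows("week-3", "sensitive", 1, 0) + make_rows("week-3", "base", 1, 3)
)

parity = fpr_parity_by_window(records, "sensitive", "base")
flagged = [w for w, r in parity.items() if r is not None and not (0.8 <= r <= 1.25)]
low_volume = [w for w, r in parity.items() if r is None]
print(parity, flagged, low_volume)
```

Separating flagged windows from low-volume windows keeps the two root causes named above distinct: bias despite adequate data versus too little data on the sensitive group.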

Multidimensionality enables teams to take this analysis a step further. Once it is determined that a model is demonstrating algorithmic bias against a sensitive group, teams can identify the segments (feature-value combinations) where model unfairness is highest to answer questions like what contributed to the bias and which segments contribute to it most. Put another way, teams can tackle the critical task of bias tracing to determine which model inputs are most heavily contributing to a model’s bias.

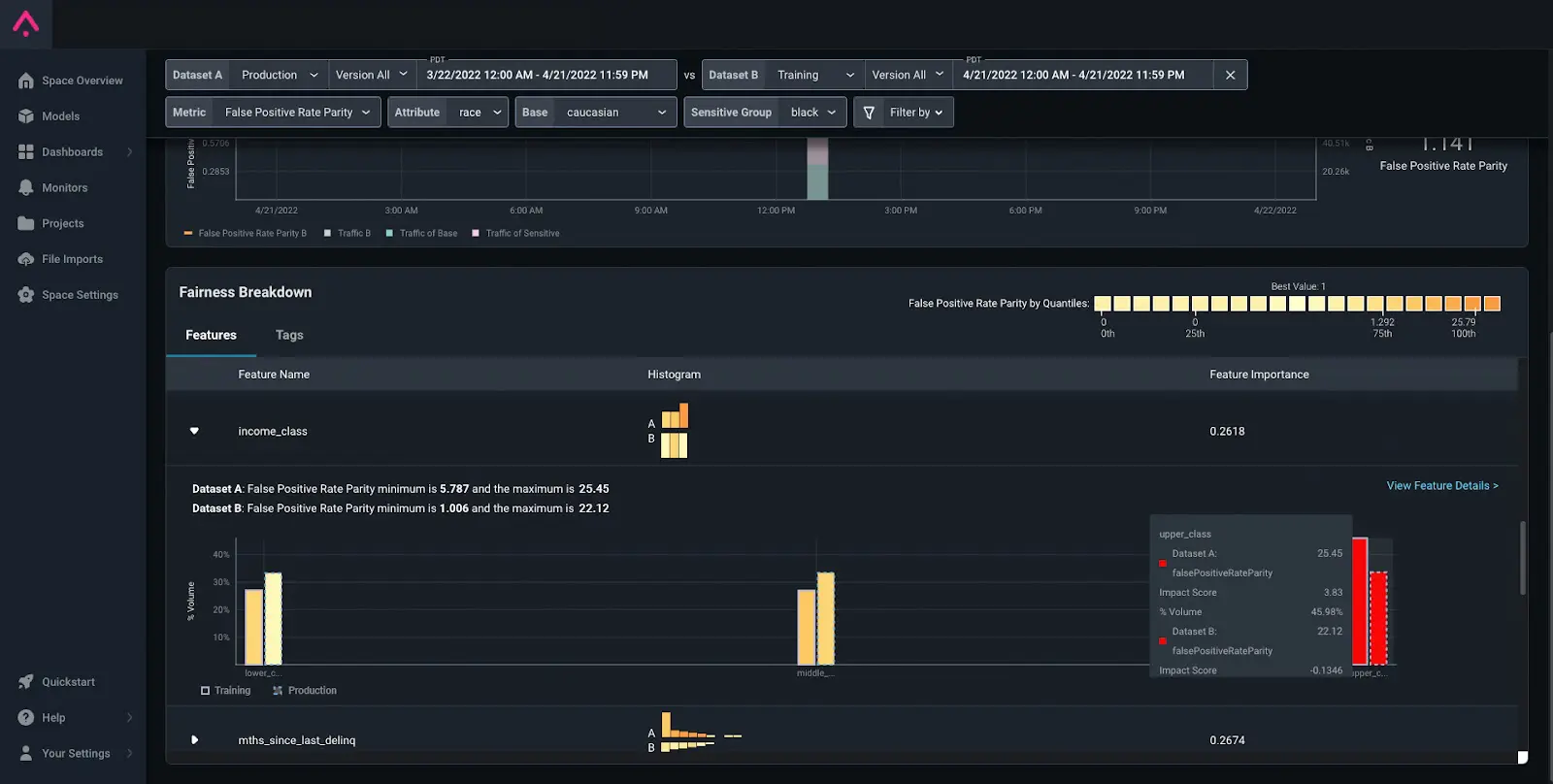

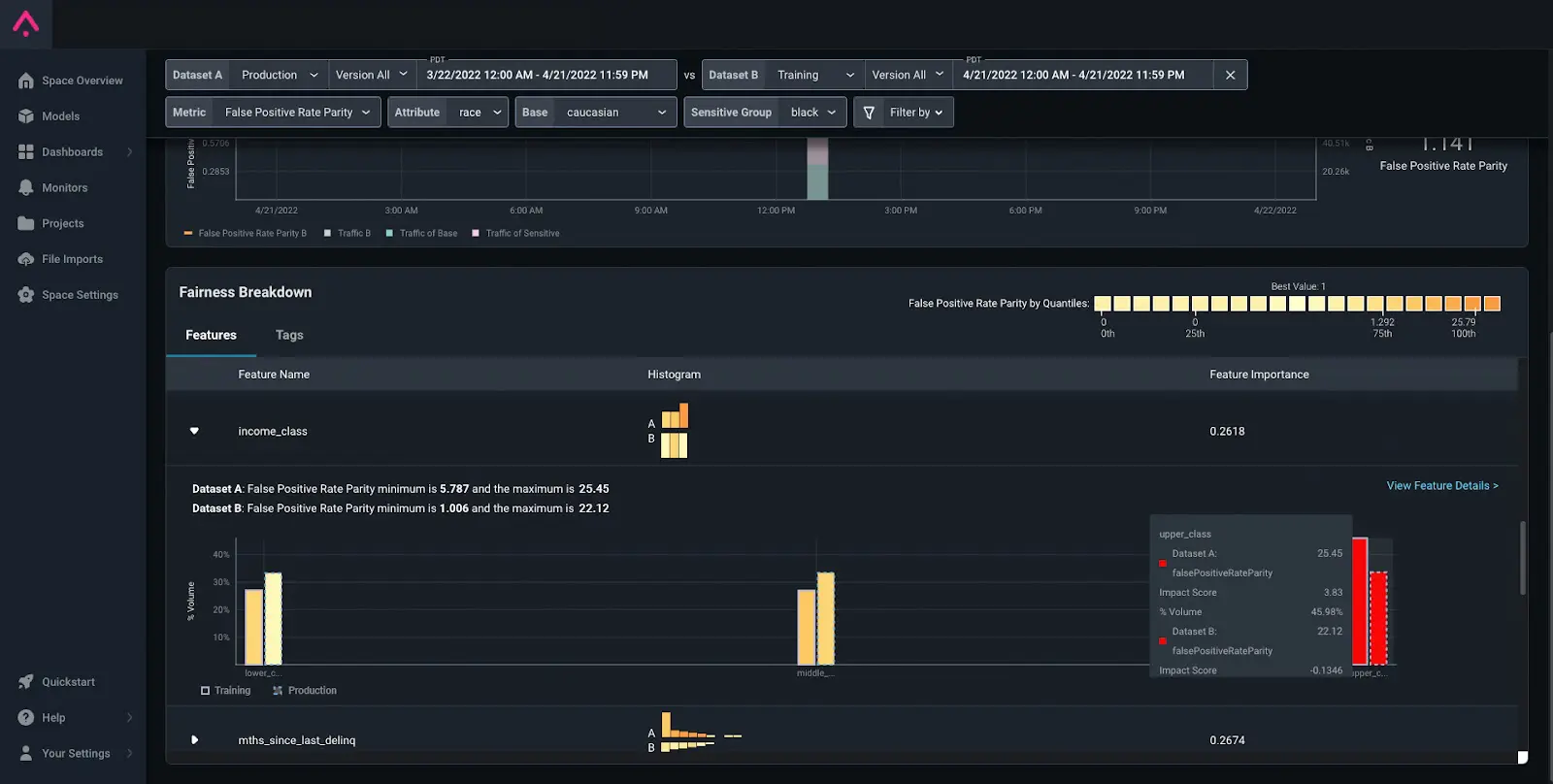

In the example above, a credit-card fraud model is falsely predicting higher rates of fraud for Black customers relative to Caucasian customers – especially among higher income earners – likely resulting in more unjustified declined transactions at the point of sale. Comparing production to training data further reveals that the data the model was trained on is also biased for this cohort. Armed with this insight, ML teams can address the issue and potentially retrain the model.

Arize Bias Tracing is unique in that it enables these types of multidimensional comparisons by default. ML teams can automatically surface the feature-value combinations, filtering by sensitive and base groups, where the disparity between these groups drags down a model’s overall fairness metrics the most. This allows you to determine, for example, how income or another seemingly non-sensitive feature may be negatively impacting your model’s predictions for a sensitive attribute such as race.
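The idea of surfacing the worst feature-value combinations can be sketched in a few lines of Python. This is an illustrative approximation, not a description of how Arize computes its rankings: the record layout, the `rows` helper, the toy counts, and the choice to rank segments by the absolute log of the false positive parity ratio are all assumptions.

```python
import math
from collections import defaultdict


def rank_segments(records, sensitive, base):
    """Rank (feature, value) segments by how far false positive parity is from 1."""
    # stats[(feature, value)][group] = [false_positives, actual_negatives]
    stats = defaultdict(lambda: {sensitive: [0, 0], base: [0, 0]})
    for rec in records:
        group = rec["group"]
        if group not in (sensitive, base) or rec["y_true"] != 0:
            continue  # false positive rate only uses actual negatives
        for feature, value in rec["features"].items():
            cell = stats[(feature, value)][group]
            cell[0] += rec["y_pred"]
            cell[1] += 1
    ranked = []
    for segment, by_group in stats.items():
        s_fp, s_neg = by_group[sensitive]
        b_fp, b_neg = by_group[base]
        if 0 in (s_fp, s_neg, b_fp, b_neg):
            continue  # ratio (or its log) is undefined for this segment
        ranked.append((segment, (s_fp / s_neg) / (b_fp / b_neg)))
    # A ratio of 8 and a ratio of 1/8 are treated as equally far from parity.
    ranked.sort(key=lambda item: abs(math.log(item[1])), reverse=True)
    return ranked


def rows(group, merchant, false_positives, true_negatives):
    """Hypothetical helper: legitimate transactions for one group and merchant type."""
    features = {"merchant_type": merchant}
    flagged = [{"group": group, "features": features, "y_true": 0, "y_pred": 1}]
    cleared = [{"group": group, "features": features, "y_true": 0, "y_pred": 0}]
    return flagged * false_positives + cleared * true_negatives


records = (
    rows("sensitive", "groceries", 1, 9) + rows("base", "groceries", 1, 9)
    + rows("sensitive", "drugstore", 2, 8) + rows("base", "drugstore", 1, 9)
    + rows("sensitive", "bail_bonds", 4, 1) + rows("base", "bail_bonds", 1, 9)
)

ranked = rank_segments(records, "sensitive", "base")
for (feature, value), ratio in ranked:
    print(f"{feature}={value}: false positive parity {ratio:.2f}")
```

Because every feature in a record contributes its own segment, adding a second feature such as an income bracket to the `features` dictionary would rank its values alongside the merchant types.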

A Broader Mission

Ensuring fairness is pivotal to the future of AI. It’s also incredibly difficult to realize in practice, as model visibility is often limited and existing tools are geared toward justifying model predictions after the fact. This status quo is a big part of why models continue to perpetuate discrimination, with organizations facing multimillion or billion-dollar fines or settlements (or worse).

Fortunately, most large enterprises want to go beyond mere compliance with the law and are signatories to a broader vision of stakeholder capitalism that values diversity, inclusion, dignity, and respect. Arize Bias Tracing helps ensure these organizations can begin to realize that vision in their AI initiatives.