We’re excited to introduce Alyx, the next evolution in Arize’s intelligent assistant.

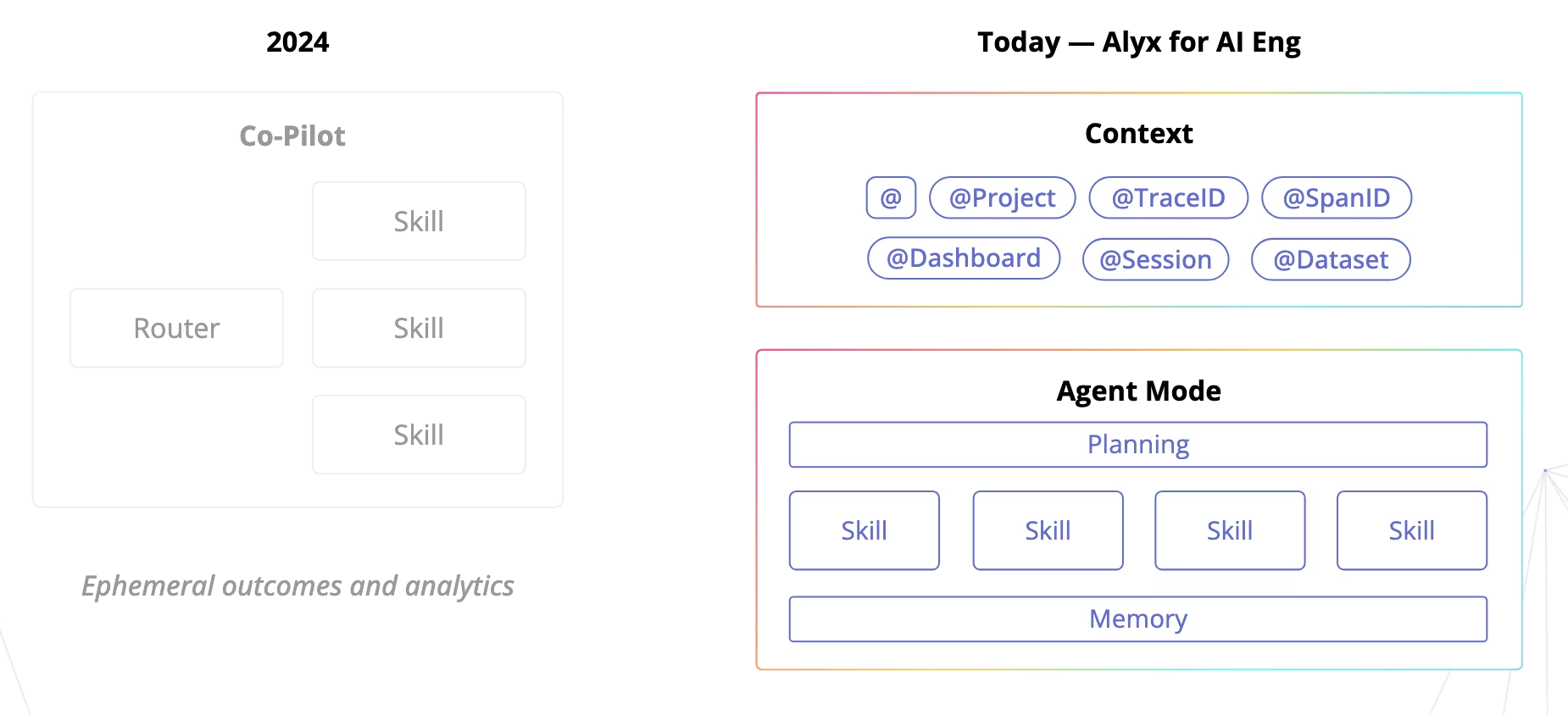

You might remember our first iteration, Copilot, launched last year as a set of tools to help users accomplish small tasks across the platform. Here’s the original blog post I wrote about it.

Copilot gave us a glimpse into the future, but we knew we were just scratching the surface.

From Copilot to Agent: Why Alyx?

Over the past year, Copilot steadily expanded. We added more “skills” to help with tasks like debugging evaluations, searching over data, and running lightweight analyses. Some of these worked well, but it became clear that Copilot could do much more.

The core issue? Too many interactions ended in ephemeral insights. A user might get a one-off chart or explanation, followed by a list of generic next steps. But there was no sense of continuity and no real follow-through. It didn’t feel like collaboration. It felt like delegation without commitment.

That’s when we made a decision: Copilot needed to go Agent Mode.

Why Context Matters

One of the most important lessons from early Copilot iterations was how much users wanted control over the assistant’s context. From the start, we knew memory would be important—so we built a basic memory mechanism to help Copilot track where it was in the conversation.

But memory alone isn’t enough. Relying solely on prior interactions, or trying to encode everything in a giant prompt, simply doesn’t scale. If we want interactions to be reliable, accurate, and aligned with user expectations, we need a way to provide explicit context—to tell the LLM exactly what data to use and how.

This insight led us to borrow from tools like Cursor, where users can inject snippets of code or reference files directly into the interaction. In our world, that translates to relevant spans, evals, or metadata, the building blocks of LLM application workflows.

This model works because it’s grounded in user intention: you decide what context matters, and the assistant becomes far more effective as a result. Instead of trying to infer context or retrieve it through elaborate prompt engineering, Alyx prioritizes what you explicitly provide—leading to more accurate, predictable, and trustworthy results.

Alyx embraces this approach. You can guide the assistant with exactly the information you want it to consider, whether that’s a trace, an eval explanation, or a specific dataset. The result: relevant context in, better outcomes out.
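To make the idea of explicit context concrete, here is a minimal Python sketch of a prompt assembled only from items the user chose to attach. This is not Alyx’s implementation or an Arize API: `ContextItem`, `build_prompt`, and the identifiers and example content are hypothetical names and data invented for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ContextItem:
    """One piece of user-selected context (hypothetical structure)."""
    kind: str      # e.g. "span", "eval", or "metadata"
    ref: str       # hypothetical identifier for the item
    content: str   # the text the model should actually see


def build_prompt(question: str, items: List[ContextItem]) -> str:
    """Assemble a prompt from only the context the user attached."""
    lines = ["Answer using only the context below.", ""]
    for i, item in enumerate(items, start=1):
        lines.append(f"[{i}] {item.kind} {item.ref}")
        lines.append(item.content)
        lines.append("")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


# Illustrative data only; these are not real spans or evals.
items = [
    ContextItem("span", "span-123", "tool call `search` returned 0 results"),
    ContextItem("eval", "hallucination", "label=hallucinated; answer cites a missing document"),
]
prompt = build_prompt("Why did this response fail the eval?", items)
print(prompt)
```

The design choice mirrored here is that nothing is retrieved or inferred: the prompt contains exactly what was attached, in the order it was attached, so the result is easier to predict and audit.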

Explicit, user-provided context is a direction we’re continuing to explore and invest in.

Why Agent Mode Is the Future

Our original Copilot started as a slightly enhanced autocomplete: it could respond to prompts, handle narrow tasks, and remember a bit of context across turns. It was great for quick wins, but it soon became clear that it wasn’t built for the complexity of real LLM workflows.

That’s why we’re evolving Copilot into something more capable: Alyx in Agent Mode.

This shift is about building an assistant that can:

- Plan across steps: Break down high-level goals into sequenced tasks that reflect how real work happens.

- Retain state: Keep track of context, artifacts, and user intent across sessions—so each interaction builds on the last.

- Drive toward outcomes: Move beyond one-off answers to support iterative improvement, deeper analysis, and real resolution.
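As a rough illustration of these three capabilities, the Python sketch below plans a goal into steps, keeps state so a later run can resume, and passes each step’s output forward toward a final result. It is a toy under stated assumptions, not how Alyx works: `AgentState`, `plan`, `run`, the step names, and the stubbed step functions are all hypothetical, and a real agent would generate the plan with an LLM rather than return a fixed list.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AgentState:
    """State retained across steps and sessions (hypothetical structure)."""
    goal: str
    artifacts: Dict[str, str] = field(default_factory=dict)
    completed: List[str] = field(default_factory=list)


def plan(goal: str) -> List[str]:
    """Plan across steps. Assumption: a fixed plan stands in for an LLM planner."""
    return ["find_failing_traces", "summarize_failures", "propose_prompt_change"]


def run(state: AgentState, steps: Dict[str, Callable[[AgentState], str]]) -> AgentState:
    """Execute planned steps in order, skipping any already completed."""
    for name in plan(state.goal):
        if name in state.completed:
            continue  # retained state lets a later session resume here
        state.artifacts[name] = steps[name](state)
        state.completed.append(name)
    return state


# Stub steps; each builds on artifacts produced by earlier steps.
steps = {
    "find_failing_traces": lambda s: "trace-1, trace-2",
    "summarize_failures": lambda s: f"failures in {s.artifacts['find_failing_traces']}",
    "propose_prompt_change": lambda s: f"revise prompt based on: {s.artifacts['summarize_failures']}",
}

state = run(AgentState(goal="reduce hallucinations"), steps)
print(state.artifacts["propose_prompt_change"])
```

Calling `run(state, steps)` a second time performs no additional work, because every step is already recorded in `state.completed`.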

We’re not fully there yet, but this is the future we believe in, because LLM workflows aren’t linear. They’re messy, multi-step, and deeply iterative. You’re debugging outputs, exploring failure modes, tweaking prompts, and comparing evals, often all at once.

We envision a future where Alyx isn’t just a helper, but a teammate — one that understands where you are in your workflow, adapts to the context you provide, and helps close the loop from insight to action to improvement.

That’s the future of LLM tooling. And that’s what Alyx is becoming.

A Glimpse Ahead

We believe Alyx marks the beginning of a new generation of AI tools for AI builders — not just reactive assistants, but proactive partners.

In the months ahead, Alyx will continue to evolve: deeper workflow integrations, smarter task orchestration, and more robust support for evaluation, debugging, and iterative development across the entire LLM application lifecycle.

And we’re thinking beyond the Arize UI. We want Alyx to meet you wherever you work—whether that’s inside your evaluation dashboards, your notebook, or your favorite coding assistant.

This is just the beginning. Welcome to the next chapter.

To try Alyx for free, sign up for Arize AX.