Continuous Monitoring, Continuous Improvements for ML Models Using Neptune AI and Arize AI

Delivering the best machine learning model to production should be as easy as training, testing, and deploying — right? Not quite! Models are far from perfect as they move from research to production, and maintaining model performance once in production is even more challenging. Once out of the offline research environment, the data a model consumes may shift in distribution or integrity, whether due to unexpected dynamics in the online production environment or upstream changes in the data pipeline.

Without the right tools to detect issues and diagnose where and why issues emerge, it can be difficult for ML teams to know when to adapt and retrain their models once deployed to production. It is imperative to build a continuous feedback loop as part of any model performance management workflow. Tooling to experiment, validate, monitor, and actively improve models helps ML practitioners consistently deploy — and maintain — higher-quality models in production. That’s why Arize AI and Neptune AI are pleased to announce a partnership, connecting Arize’s ML observability platform with Neptune’s metadata store for MLOps.

With this partnership, ML teams can more effectively monitor how their production models are performing, drill into problematic cohorts of features and predictions, and ultimately make more informed retraining decisions.

Arize & Neptune

There are plenty of ML infrastructure companies out there, so here is an overview of what Neptune and Arize each specialize in:

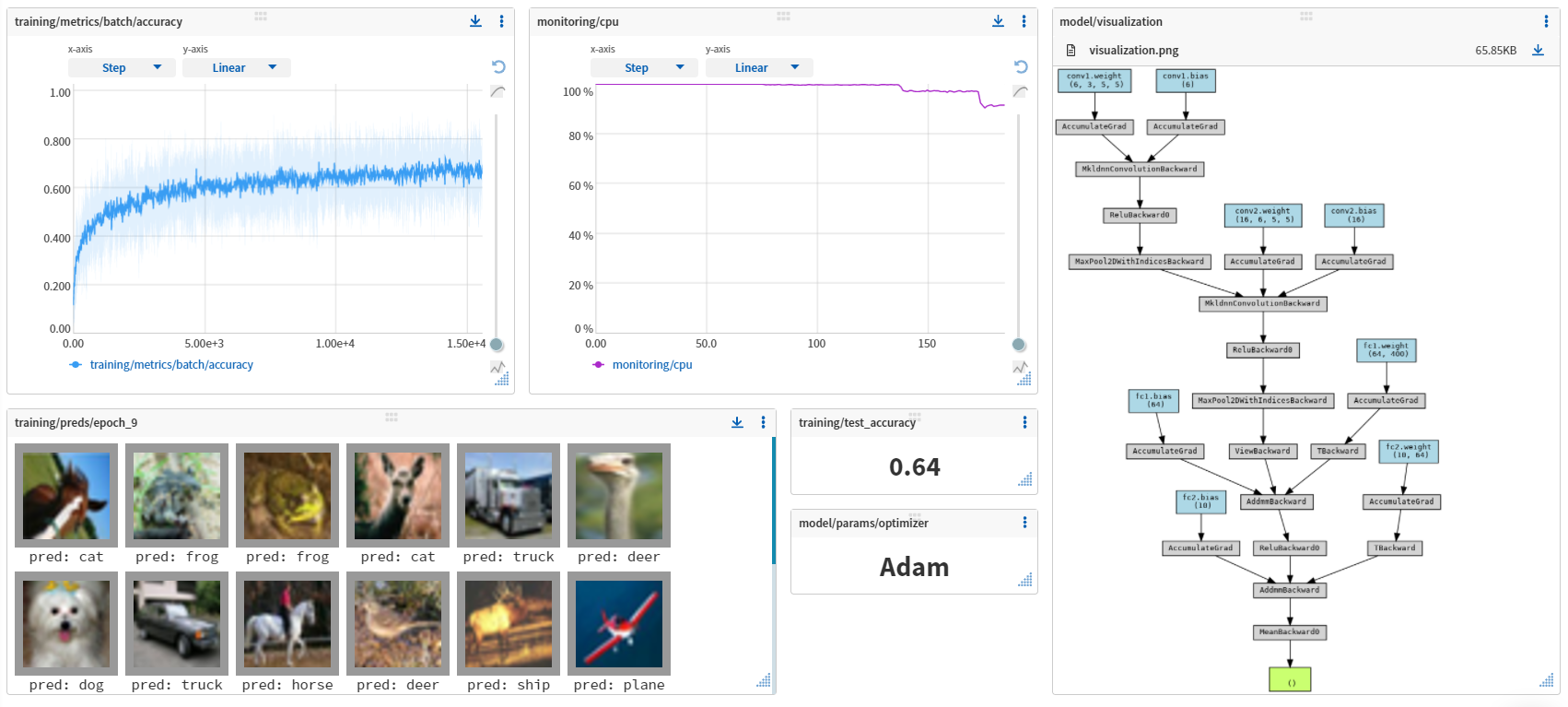

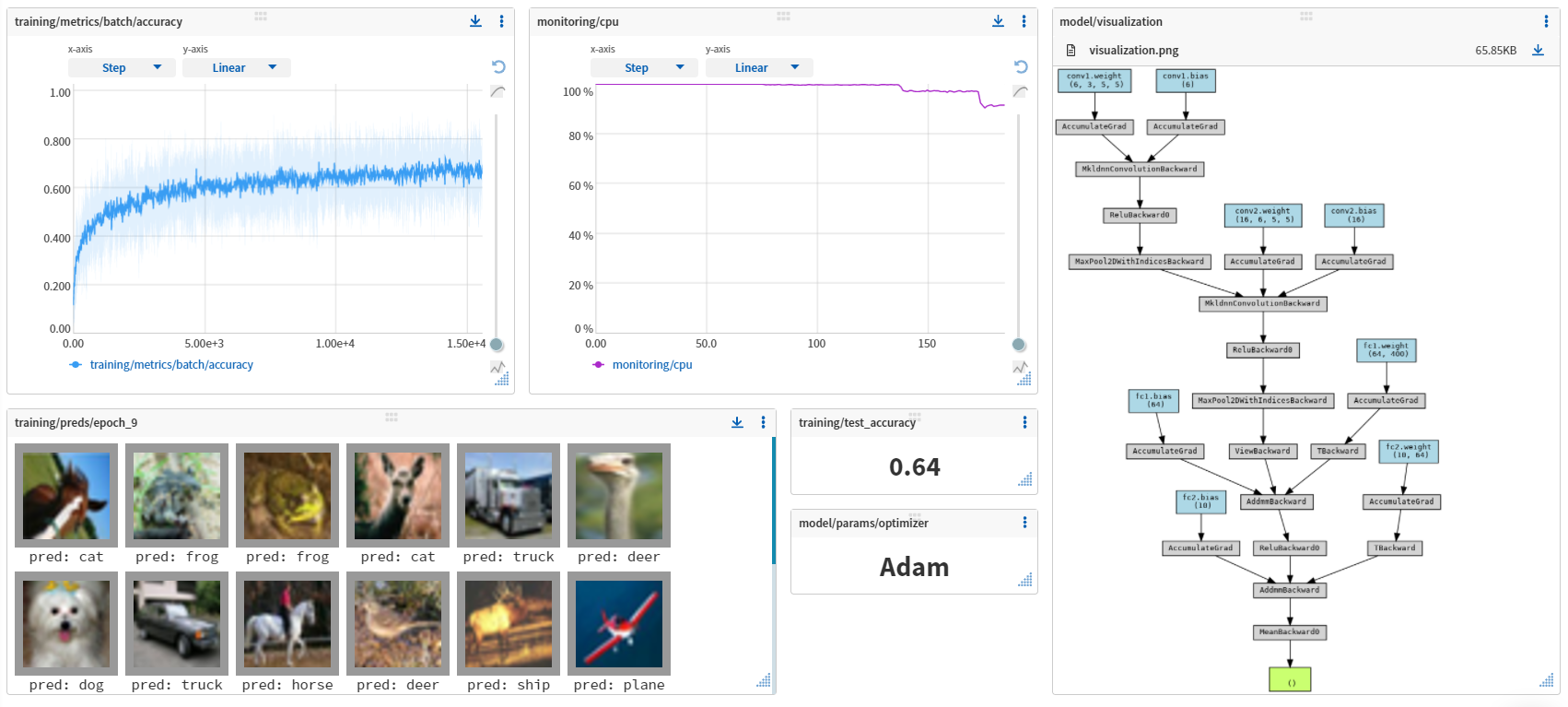

- Neptune logs, stores, displays, and compares your model-building metadata. It helps with experiment tracking, data and model versioning, and model registry, so that you always know which models you are putting out there.

Example dashboard in Neptune

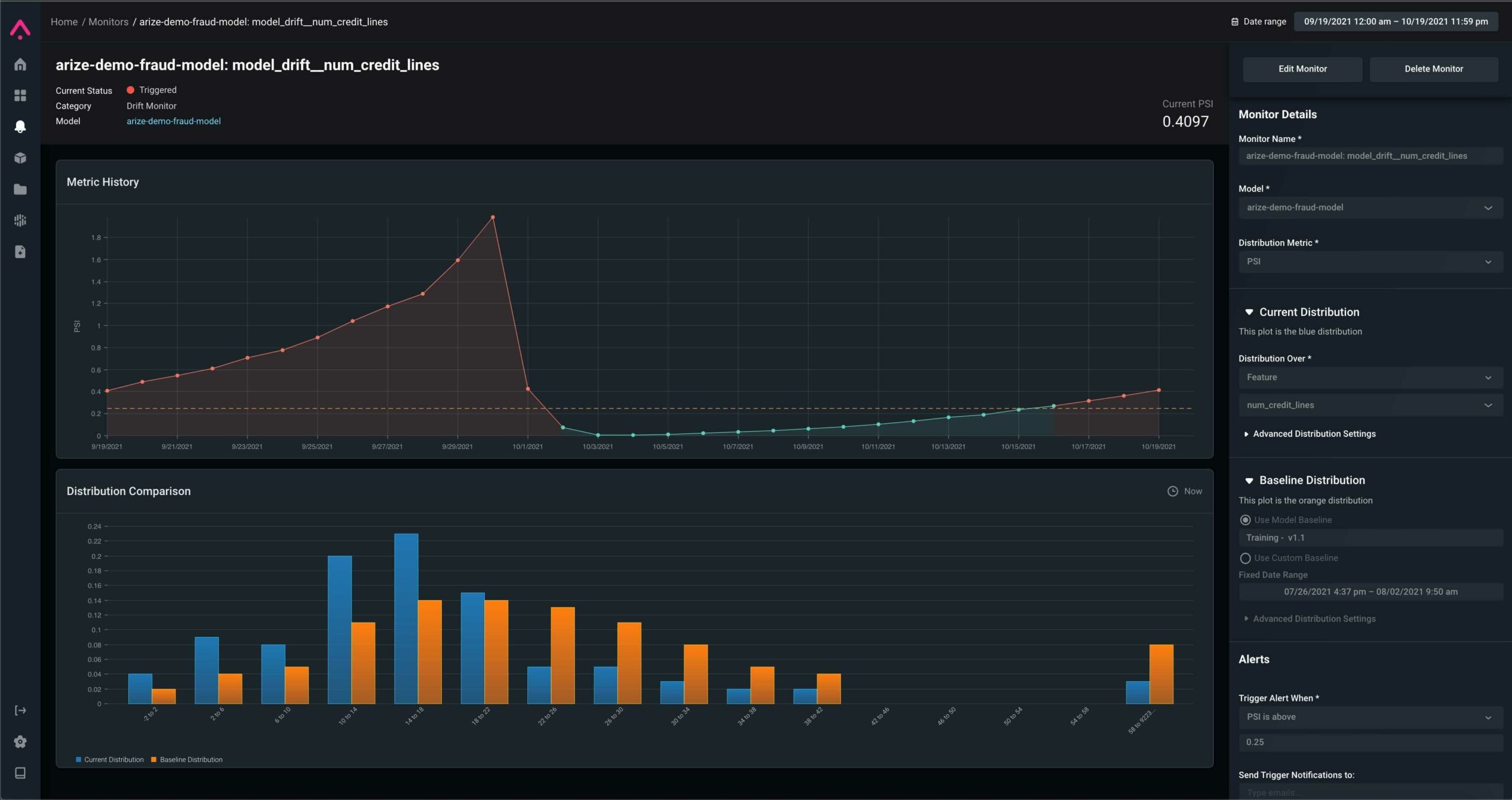

- Arize helps you visualize your production model performance and understand drift, data quality, and performance issues.

Example monitor in Arize

The ML lifecycle should be thought of as an iterative flow across stages of data preparation, model building, and production. While the Arize platform focuses on production model observability, it also monitors production, training, and validation datasets to build a holistic view of problems that may arise before and after deployment. This lets you set any dataset, including a prior period in production, as your baseline or benchmark to compare model performance.
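To make the idea of comparing production data against a baseline more concrete, here is a minimal sketch of one common drift statistic, the population stability index (PSI). It is an illustration only and does not describe how Arize computes drift; the function name, the equal-width binning, the bin count, and the `eps` floor are all assumptions made for this example.

```python
import math
import random
from bisect import bisect_right


def population_stability_index(baseline, production, n_bins=10, eps=1e-6):
    """PSI between two samples of one numeric feature, binned on the baseline's range."""
    lo, hi = min(baseline), max(baseline)
    if lo == hi:
        raise ValueError("baseline needs at least two distinct values")
    # Interior bin edges, equally spaced over the baseline range.
    width = (hi - lo) / n_bins
    edges = [lo + i * width for i in range(1, n_bins)]

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            # Values outside the baseline range fall into the first or last bin.
            counts[bisect_right(edges, v)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    expected = bin_fractions(baseline)
    actual = bin_fractions(production)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Synthetic data standing in for a training baseline and two production windows.
rng = random.Random(0)
baseline = [rng.gauss(0, 1) for _ in range(5000)]
same = [rng.gauss(0, 1) for _ in range(5000)]      # no drift
shifted = [rng.gauss(1, 1) for _ in range(5000)]   # mean shifted by one standard deviation

print("PSI, same distribution:   ", population_stability_index(baseline, same))
print("PSI, shifted distribution:", population_stability_index(baseline, shifted))
```

A PSI near zero suggests the production window looks like the baseline, while larger values indicate a shift; a common rule of thumb treats values above roughly 0.2 as worth investigating. The baseline here could just as well be a prior period in production rather than the training set.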

So, why does this matter? With a central ML metadata store like Neptune, it is significantly easier to track every model version and its lineage. When experimenting with and optimizing production models, this helps ensure that ML practitioners can:

- Set up a protocol for model versioning and packaging for teams to follow

- Query and access model versions via CLI and SDK in the languages you use in production

- Log hardware, data/concept drift, example predictions from CI/CD pipelines, re-training jobs, and production models in one place

- Set up a protocol for approvals by subject matter experts, the production team, or automated checks

TL;DR: ML practitioners can fine-tune model performance and actively improve models at a more granular level when using Arize and Neptune together.

Get Started

Now, let’s put this all together!

If you haven’t already, sign up for a free account with Neptune and Arize.

Once you have, check out the tutorial we prepared for you!

Here’s what you’ll find there:

- Setting up the Neptune and Arize clients

- Logging training callbacks to Neptune

- Logging training and validation records to Arize

- Storing and versioning model weights with Neptune

- Logging and versioning models in production with Arize

Conclusion

With Arize and Neptune, you can do your best machine learning work efficiently: identify and train your best model, validate it before launch, and create a feedback loop between model building, experimentation, and monitoring with a simple integration.

About Neptune

Neptune is a metadata store for MLOps, built for research and production teams that run a lot of experiments. It gives you a central place to log, store, display, organize, compare, and query all metadata generated during the machine learning lifecycle.

Thousands of ML engineers and researchers use Neptune for experiment tracking and model registry both as individuals and inside teams at large organizations.

With Neptune, you can replace folder structures, spreadsheets, and naming conventions with a single source of truth where all your model-building metadata is organized, easy to find, share, and query. If you want to know more about Neptune and its capabilities, watch the product tour or explore our documentation.

About Arize

Arize AI is a Machine Learning Observability platform that helps ML practitioners successfully take models from research to production with ease. Arize's automated model monitoring and analytics platform allows ML teams to quickly detect issues when they emerge, troubleshoot why they happened, and improve overall model performance. By connecting offline training and validation datasets to online production data in a central inference store, ML teams can streamline model validation, drift detection, data quality checks, and model performance management.

Arize AI acts as the guardrail on deployed AI, providing transparency and introspection into historically black box systems to ensure more effective and responsible AI. To learn more about Arize or machine learning observability and monitoring, visit our blog and resource hub!