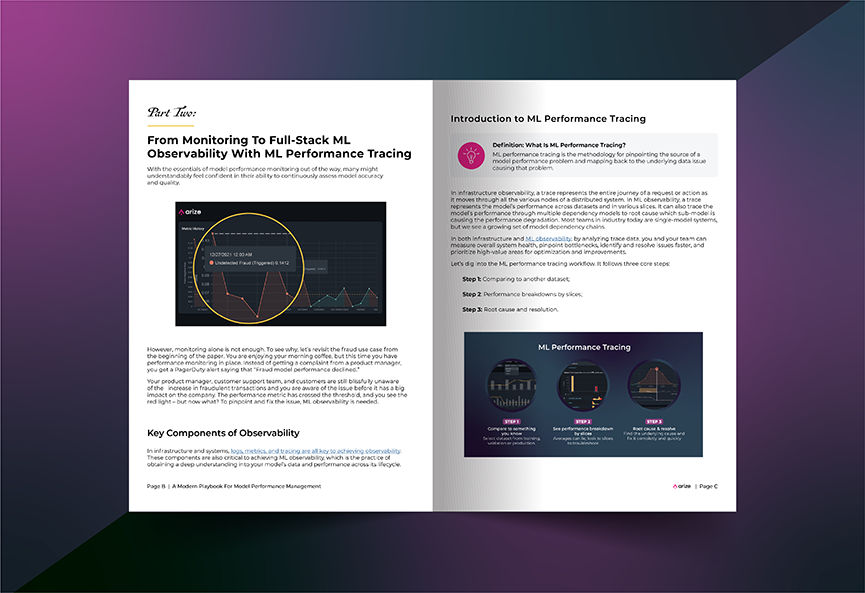

Machine learning troubleshooting is painful and time-consuming today, but it doesn’t have to be. This paper charts the evolution ML teams go through, from no monitoring to monitoring to full-stack ML observability. It also offers a modernization blueprint for implementing ML performance tracing so that teams can solve problems faster. In this paper, you’ll learn:

- Best practices for ML performance troubleshooting

- The key components of observability and differences between system and ML observability

- Useful definitions of ML performance tracing, data slice, and performance impact score

- How to break down performance by slices and perform effective root cause analysis

Download the full paper to learn more about full-stack ML observability with ML performance tracing.