Built by Practitioners, For Practitioners

Surface

Resolve

Improve

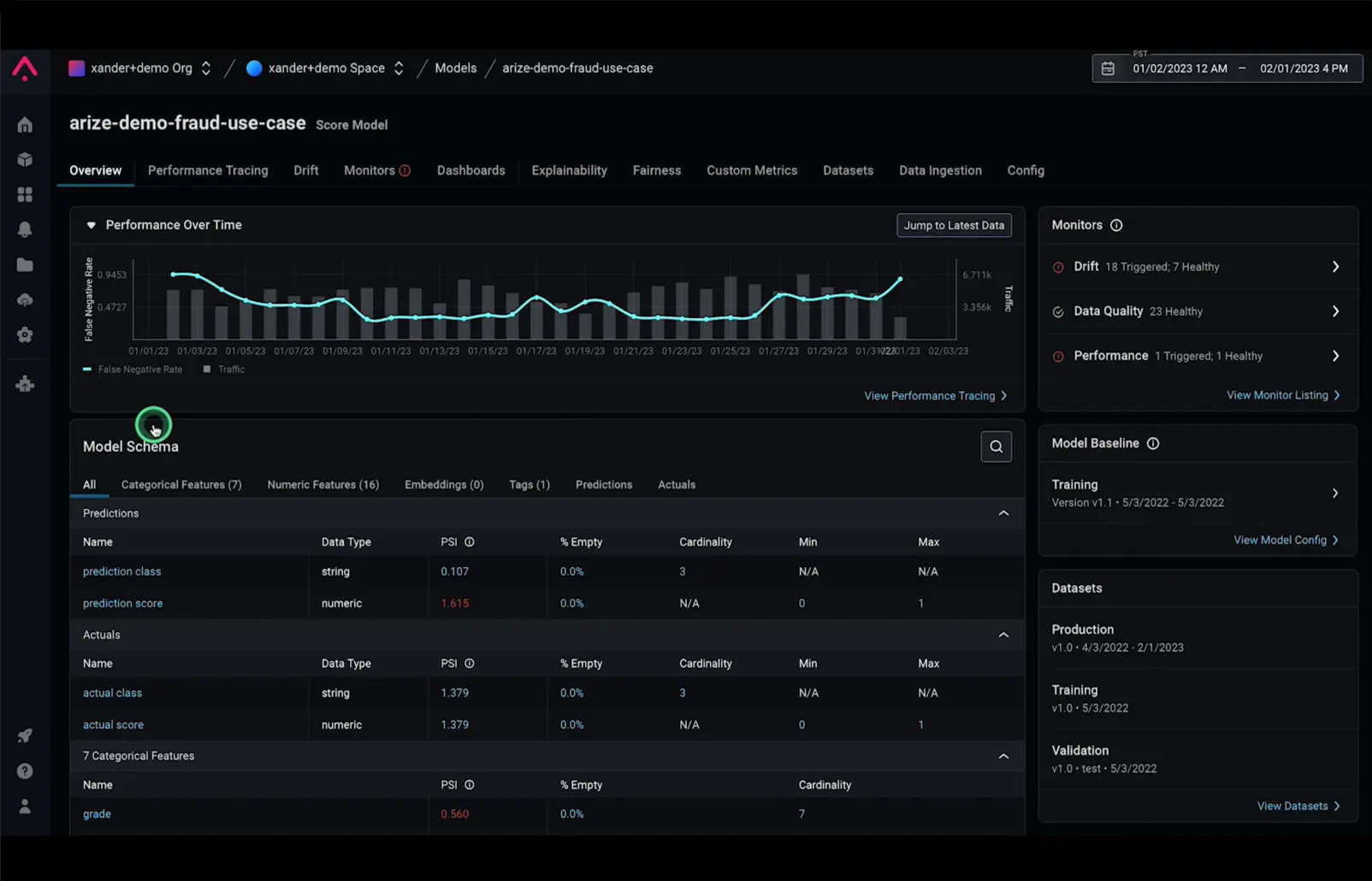

The AI observability and LLM evaluation solution for continuous model improvement

The ability to surface unknown issues and diagnose their root causes is what differentiates machine learning observability from traditional monitoring tools.

By indexing datasets across your training, validation, and production environments in a central inference store, Arize enables ML teams to quickly detect where issues emerge and deeply troubleshoot the reasons behind them.

Designed With Your Goals in Mind

ML Engineers

Deploy & Maintain ML with Confidence

- Automatically monitor model performance on any dimension

- Standardize ML observability efforts across ML projects and teams

- Catch production model issues before they spiral

- Deploy and integrate easily

- Reduce time-to-detection (TTD) and time-to-resolution (TTR) with tracing workflows

Data Scientists

Keep Up the Model Momentum

- Run exploratory data analysis (EDA) workflows

- Proactively uncover opportunities to retrain a model or expand its use case

- Surface blind spots in the model

- Track and share model performance with dynamic dashboards

ML Business Leaders

Connect AI ROI to Business

- Gain a single-pane-of-glass view of your production ML

- Understand how an ML model’s performance impacts your product and business lines

- Align MLOps practices and tooling across engineering and data teams

Recommended resources