Model Monitoring, Automatically Scaled

A centralized model health hub automatically surfaces potential issues with performance and data – with native alerting integrations and tracing workflows so you can take immediate action to remedy problems.

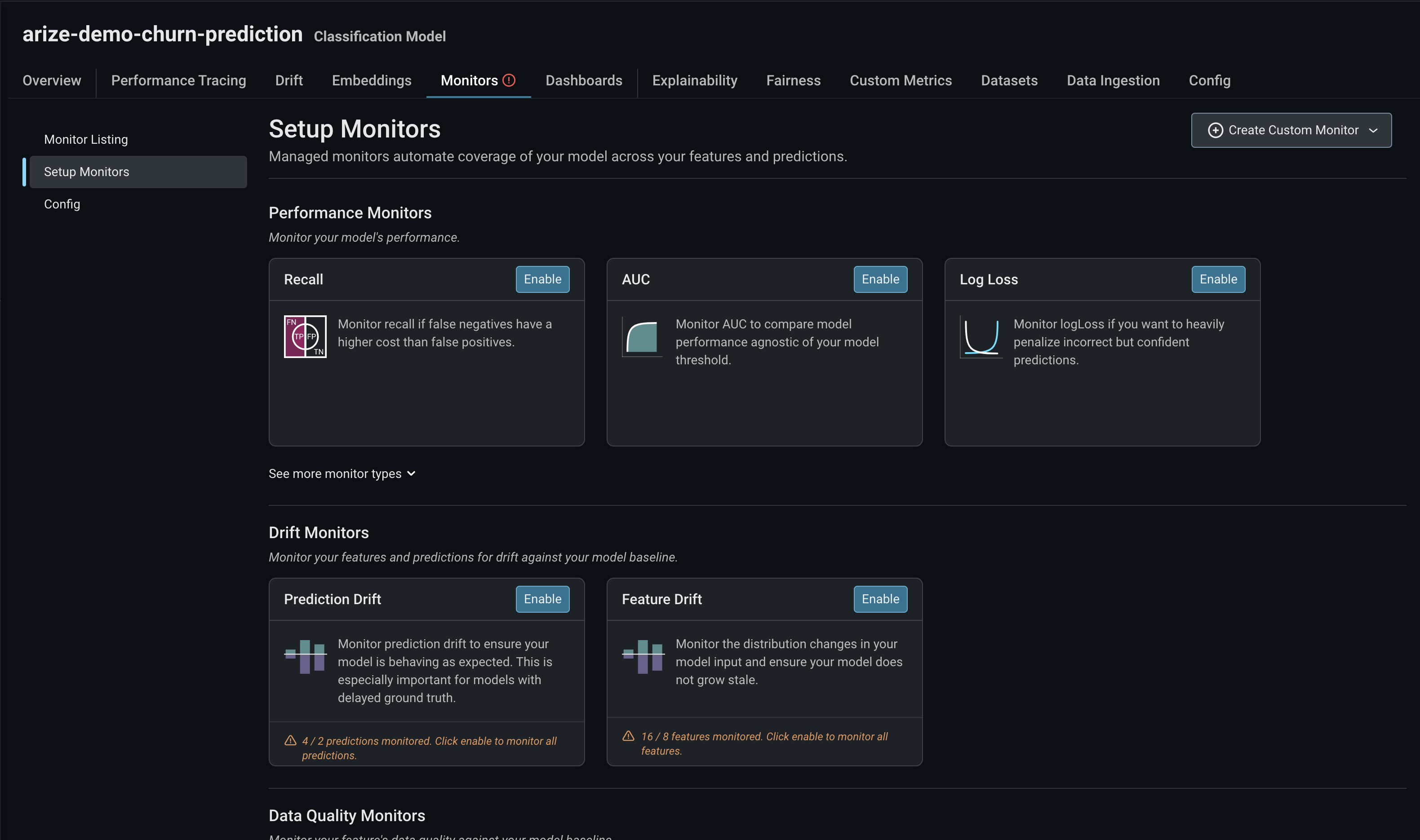

Cover All Your Bases

Monitor model drift, performance, and data quality automatically across any model type – from LLM, generative, and computer vision to recommender systems and traditional ML.

Your model schema and features are automatically discovered and transformed by Arize for monitoring.

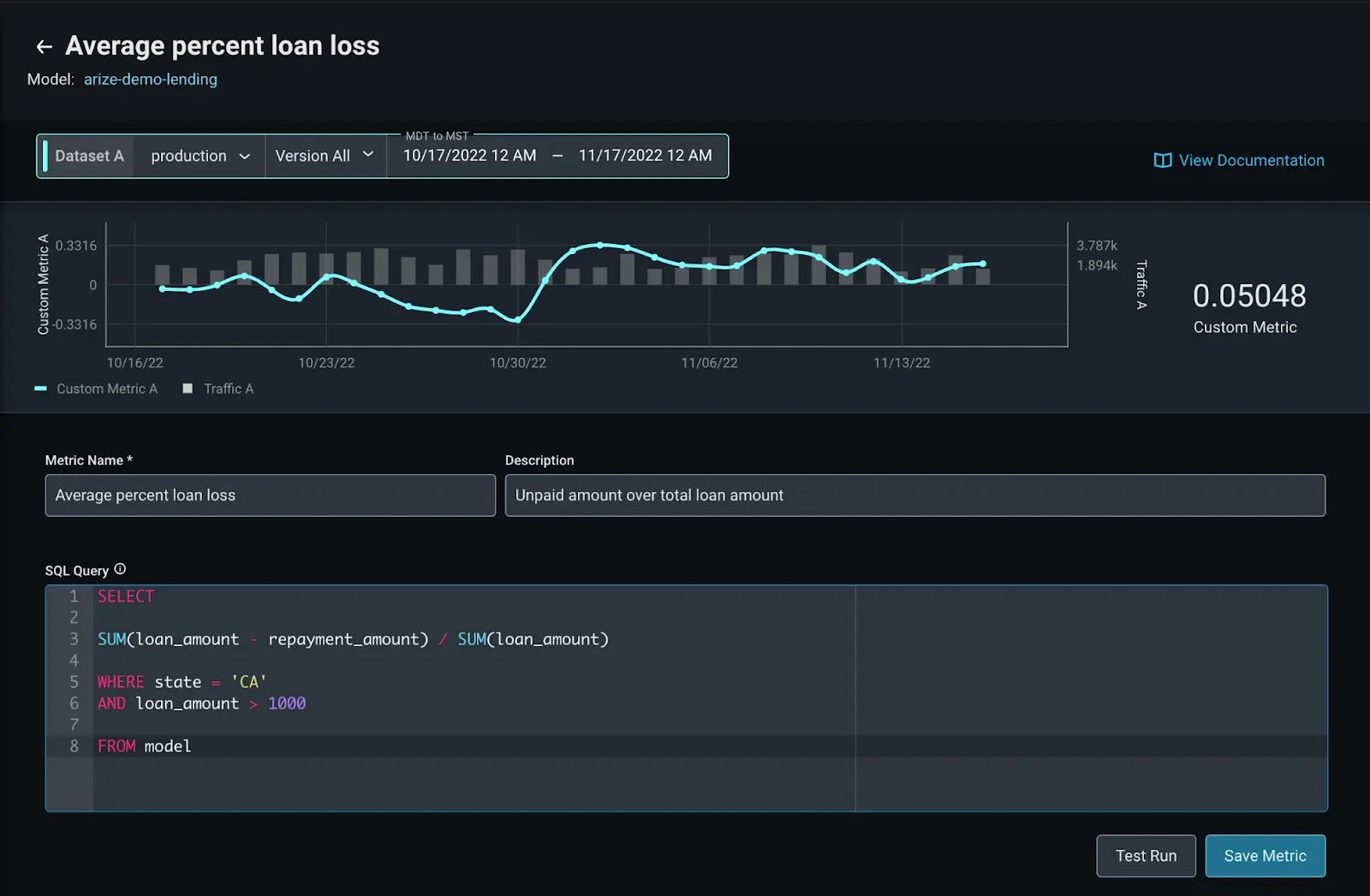

Define Your Own Metrics

Tailor the custom metrics you monitor in Arize to your business’s ML needs. Derive new metrics by combining existing model dimensions with a SQL-like query language.

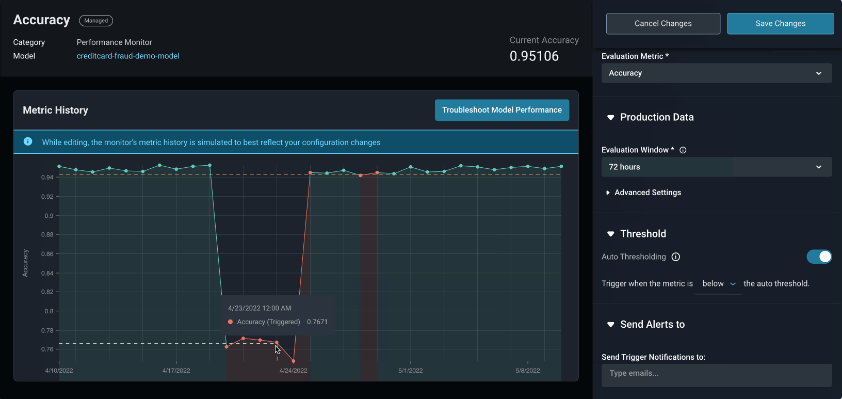

Adaptive Thresholding

Continuous auto-thresholding keeps Arize’s monitors practical and efficient to run as your data changes.

Monitor thresholds are determined based on patterns discovered from the data sent through – with, of course, the ability for you to customize as desired.

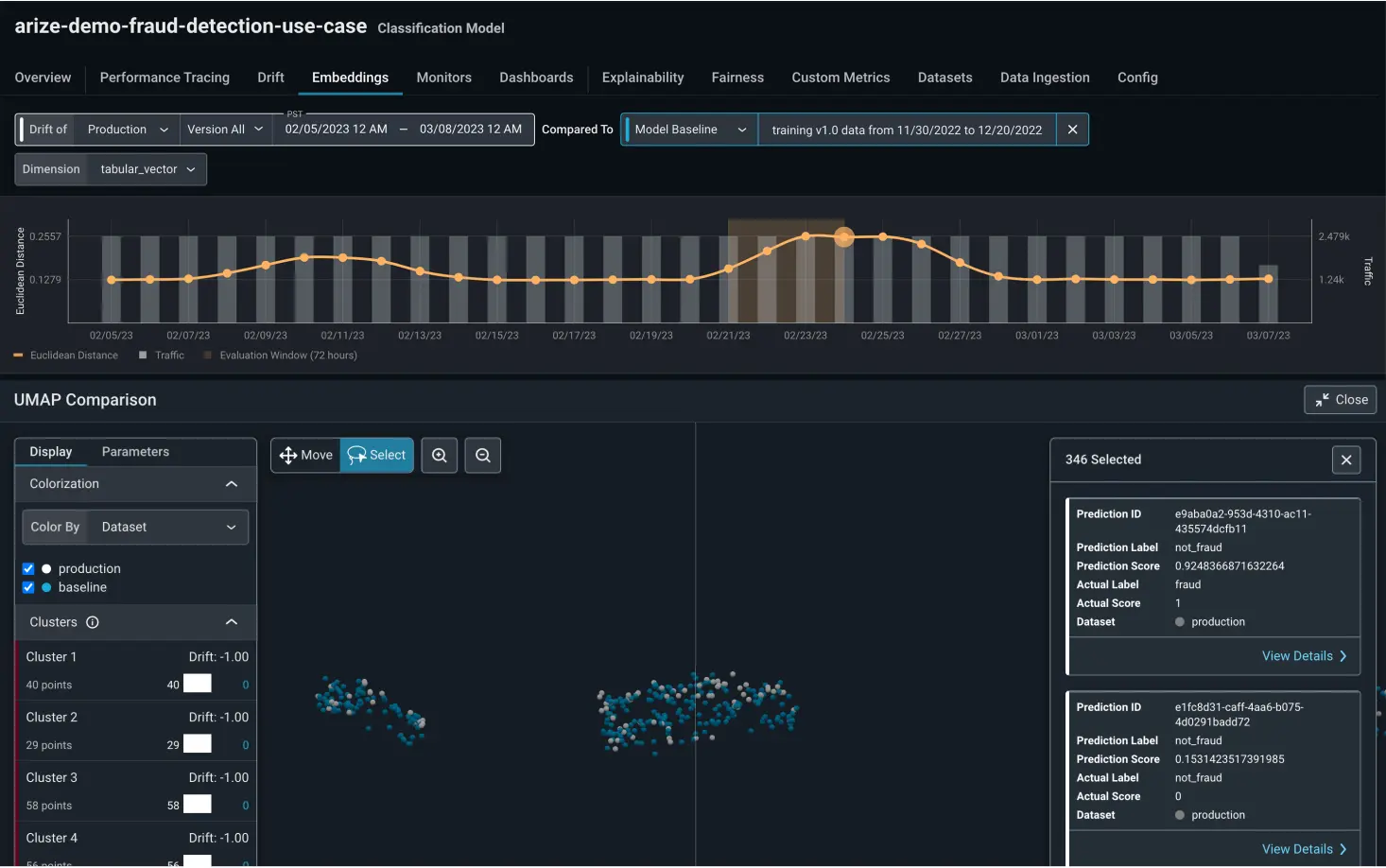
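To make the idea of data-driven thresholds concrete, here is a minimal sketch of one common approach: set the alert threshold a fixed number of standard deviations above the historical mean of a metric. This is an illustration only, not Arize's actual algorithm; the function names, the `num_std` parameter, and the sample values are all hypothetical.

```python
from statistics import mean, stdev


def auto_threshold(history, num_std=2.0):
    """Upper alert threshold: mean + num_std * sample standard deviation.

    Assumption: `history` is a list of past values of one monitored metric
    (for example, a daily drift score). Not Arize's actual method.
    """
    if len(history) < 2:
        raise ValueError("need at least two historical values")
    return mean(history) + num_std * stdev(history)


def breaches(value, history, num_std=2.0):
    """Return True when a new metric value exceeds the derived threshold."""
    return value > auto_threshold(history, num_std)


# Hypothetical daily drift scores for one feature.
history = [0.10, 0.12, 0.11, 0.09, 0.13, 0.10, 0.12]

print(breaches(0.11, history))  # a typical value
print(breaches(0.50, history))  # a much larger value
```

Recomputing the threshold as new values arrive lets it follow the metric's normal range, while `num_std` plays the role of the manual override mentioned above.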

Multi-variate Anomaly Drift Detection

Uncover patterns and insights that may not be intuitive when monitoring a single feature.

Catch issues across combinations of features in a single view using embeddings for tabular data.

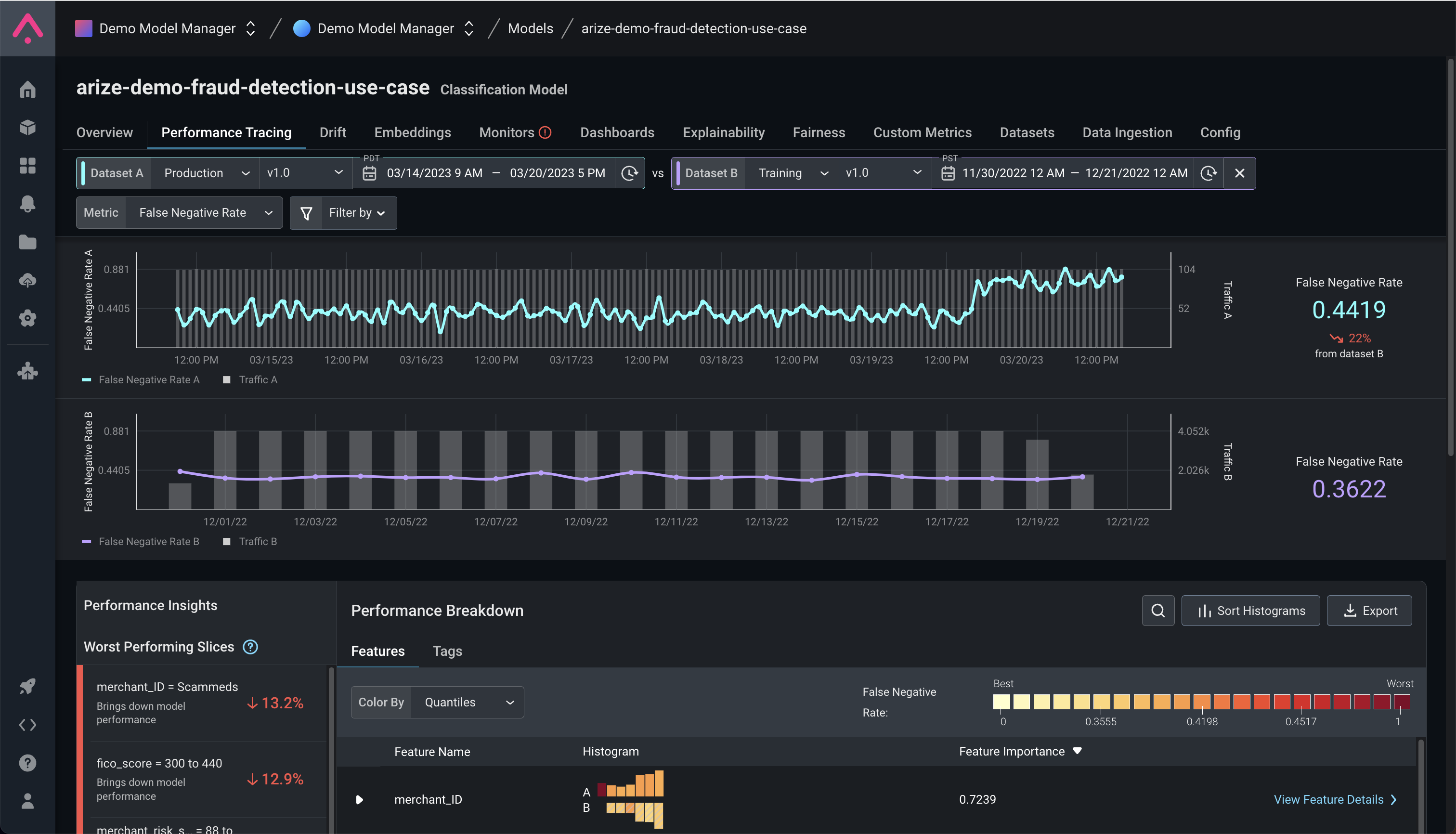
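As a rough illustration of summarizing drift across many dimensions at once, the sketch below measures the Euclidean distance between the average (centroid) embedding of a baseline dataset and that of a production dataset. It assumes each row has already been converted to a fixed-length embedding vector; the function names and sample vectors are hypothetical, and this is one simple technique rather than a description of how Arize computes drift.

```python
import math


def centroid(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    if not vectors:
        raise ValueError("need at least one vector")
    n = len(vectors)
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / n for i in range(dim)]


def centroid_distance(baseline, production):
    """Euclidean distance between the two datasets' embedding centroids."""
    return math.dist(centroid(baseline), centroid(production))


# Hypothetical 2-dimensional embeddings, one vector per row of data.
baseline = [[0.0, 0.0], [2.0, 0.0]]
production = [[3.0, 4.0], [5.0, 4.0]]

print(centroid_distance(baseline, production))
```

A single distance like this can be tracked over time and monitored like any other metric; a larger value means the production data has moved further from the baseline in embedding space.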

Look Across Environments and Versions

Compare changes and root-cause performance degradations against training, validation, and production datasets.

Native A/B comparison views and workflows make validating performance issues simpler.

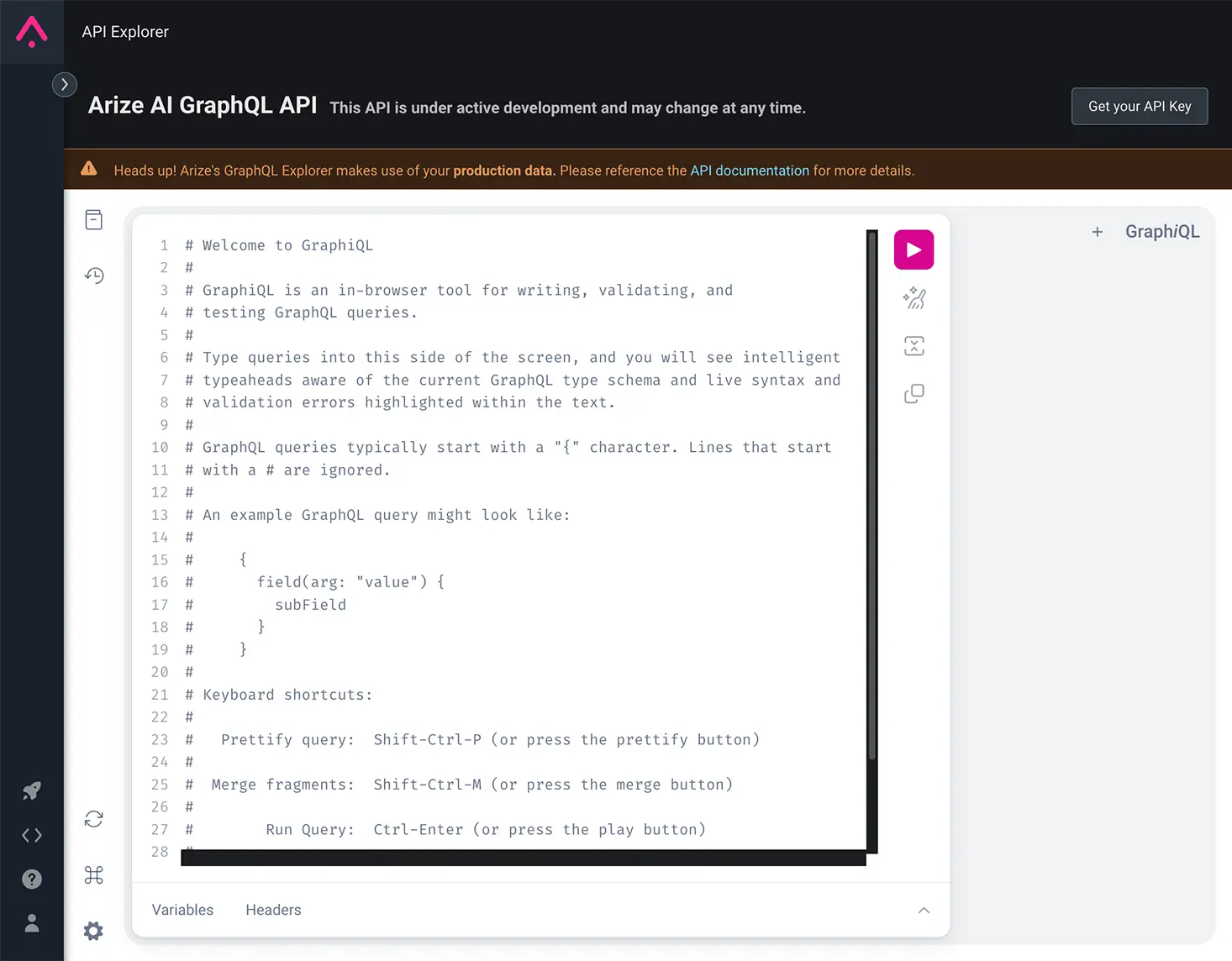

Programmatic API

Use Arize’s GraphQL API to configure model monitors programmatically within your own infrastructure.

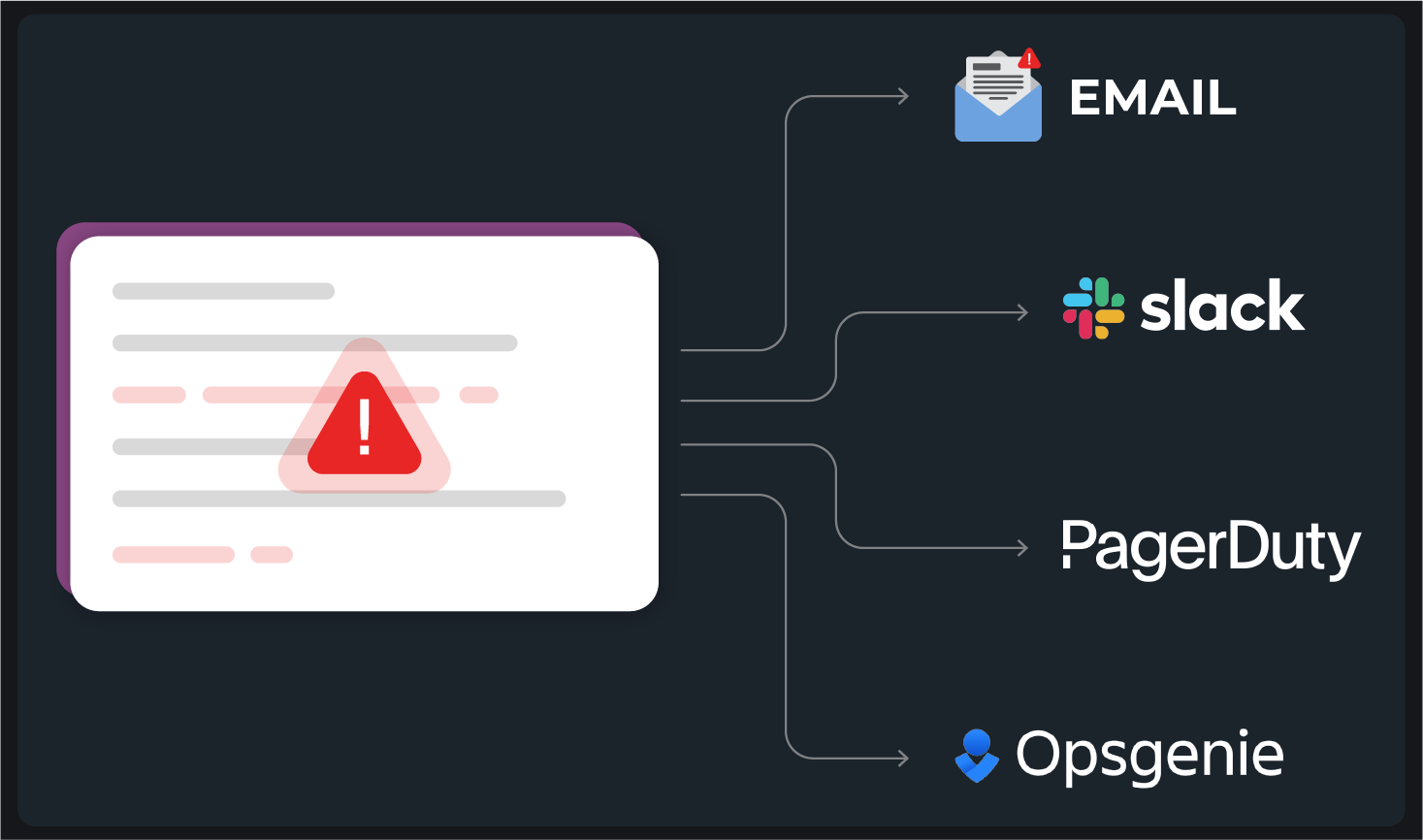

Native Alerting Integrations

Configure how and when you receive alerts if your model deviates from expectations, with customizable monitor thresholds.

From email and Slack digests to triggered workflows with PagerDuty or OpsGenie – sleep easier knowing the right model guardrails are in place.