Explain Model Outcomes, Root Out Model Bias

Gain insights into why your models arrive at their outcomes so you can optimize performance over time and mitigate the impact of potential model bias.

Explainability Without Sending Your Model

Gain insights into global feature importance without sending your model into Arize.

Whether you prefer sending user-calculated SHAP values or a surrogate model, limit exposure and retain control of your IP.

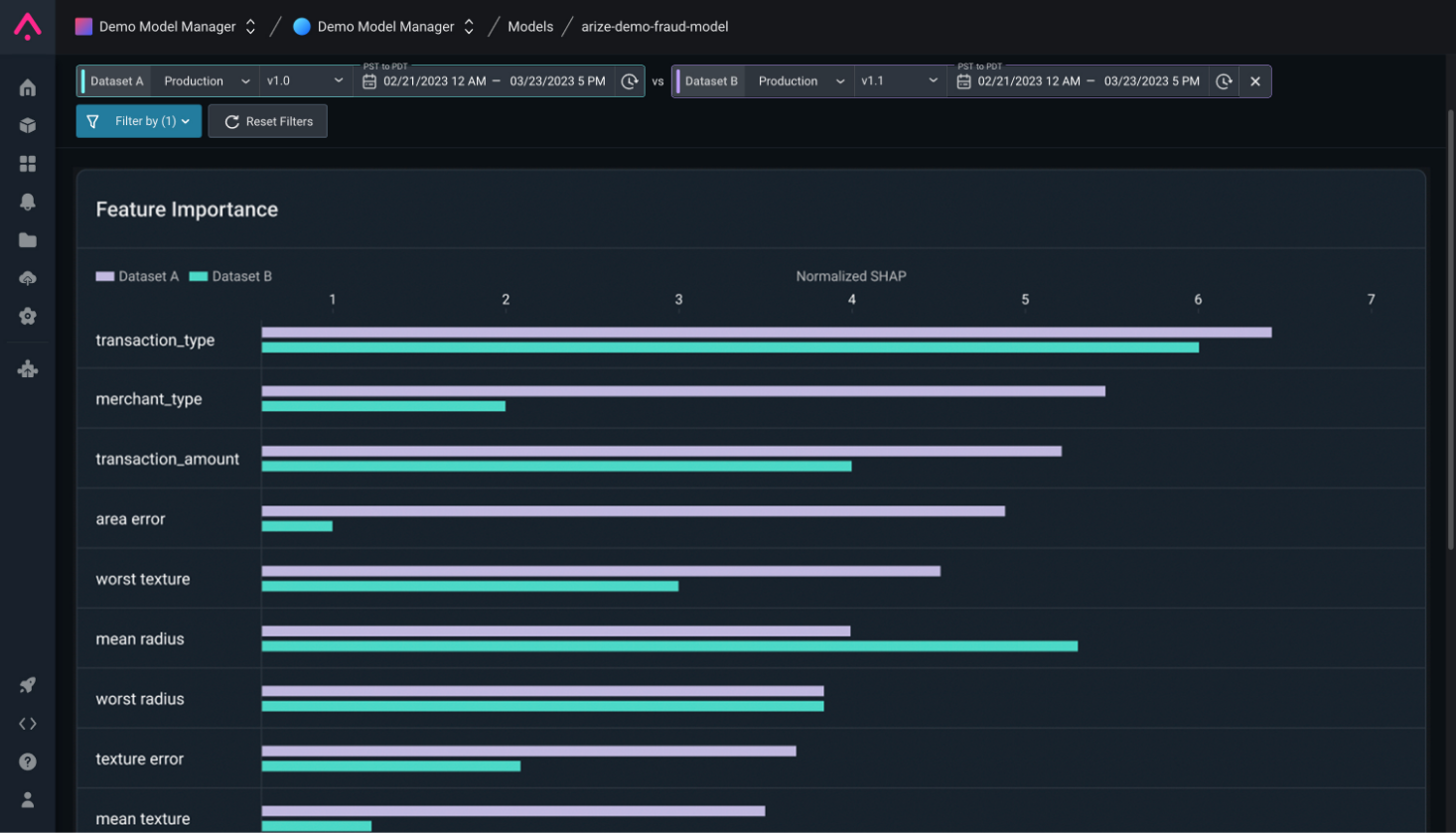
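To make the idea of user-calculated SHAP concrete, here is a minimal sketch of one common way to turn per-prediction SHAP values into a global feature importance ranking: the mean absolute SHAP value per feature. The feature names, the sample values, and the `global_importance` helper are hypothetical, and this is not a description of how Arize computes or ingests importance.

```python
from statistics import fmean


def global_importance(shap_rows):
    """Mean absolute SHAP value per feature across all predictions."""
    features = shap_rows[0].keys()
    return {f: fmean(abs(row[f]) for row in shap_rows) for f in features}


# Hypothetical per-prediction SHAP values, computed on your own infrastructure.
shap_rows = [
    {"age": 0.30, "income": -0.10, "tenure": 0.05},
    {"age": -0.20, "income": 0.50, "tenure": 0.00},
    {"age": 0.10, "income": -0.10, "tenure": -0.05},
]

importance = global_importance(shap_rows)
# Features ordered from most to least important.
ranked = sorted(importance, key=importance.get, reverse=True)
print(ranked)
```

Only the SHAP values leave your environment in this pattern; the model itself is never shared.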

Cohort Analysis

Analyze feature importance for cohorts of interest or a subset of predictions to understand how your model responds to data it encounters.

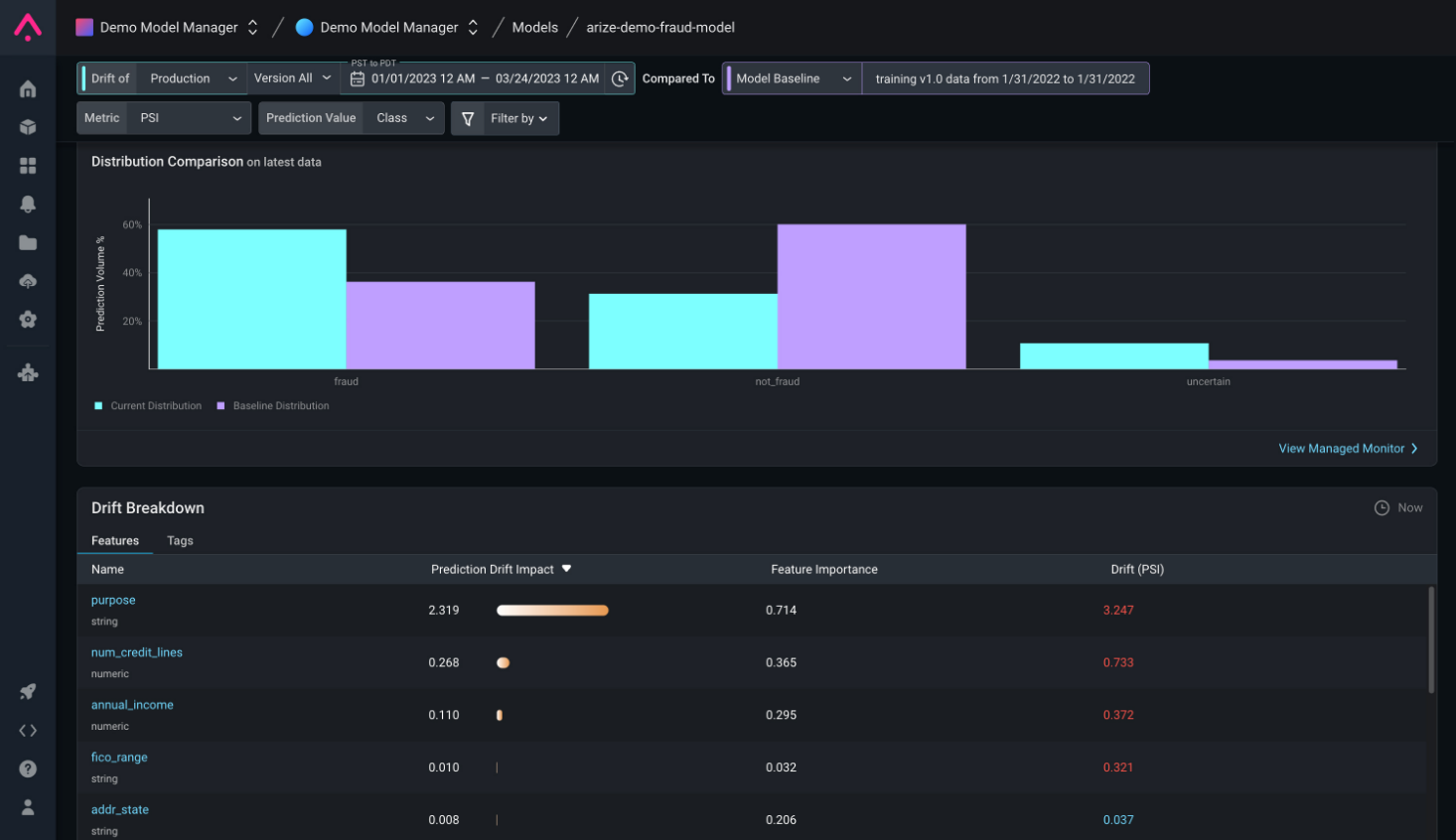
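Cohort-level importance can be sketched as the same aggregation restricted to a filtered subset of predictions. The example below assumes each record carries its own SHAP values and a `region` field; both the field names and the `cohort_importance` helper are illustrative assumptions.

```python
from statistics import fmean


def cohort_importance(records, in_cohort):
    """Mean absolute SHAP value per feature, restricted to one cohort."""
    rows = [r["shap"] for r in records if in_cohort(r)]
    if not rows:
        return {}  # empty cohort: nothing to aggregate
    return {f: fmean(abs(row[f]) for row in rows) for f in rows[0]}


# Hypothetical prediction records with per-prediction SHAP values.
records = [
    {"region": "EU", "shap": {"age": 0.4, "income": 0.1}},
    {"region": "EU", "shap": {"age": -0.2, "income": 0.1}},
    {"region": "US", "shap": {"age": 0.1, "income": -0.5}},
    {"region": "US", "shap": {"age": 0.1, "income": 0.3}},
]

eu = cohort_importance(records, lambda r: r["region"] == "EU")
us = cohort_importance(records, lambda r: r["region"] == "US")
print(max(eu, key=eu.get), max(us, key=us.get))
```

In this toy data the model leans on different features for each cohort, which is the kind of difference a cohort view is meant to surface.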

Understand Model Impact

Use feature importance to measure the impact of prediction drift and gauge whether deviations in specific features are hurting model performance.

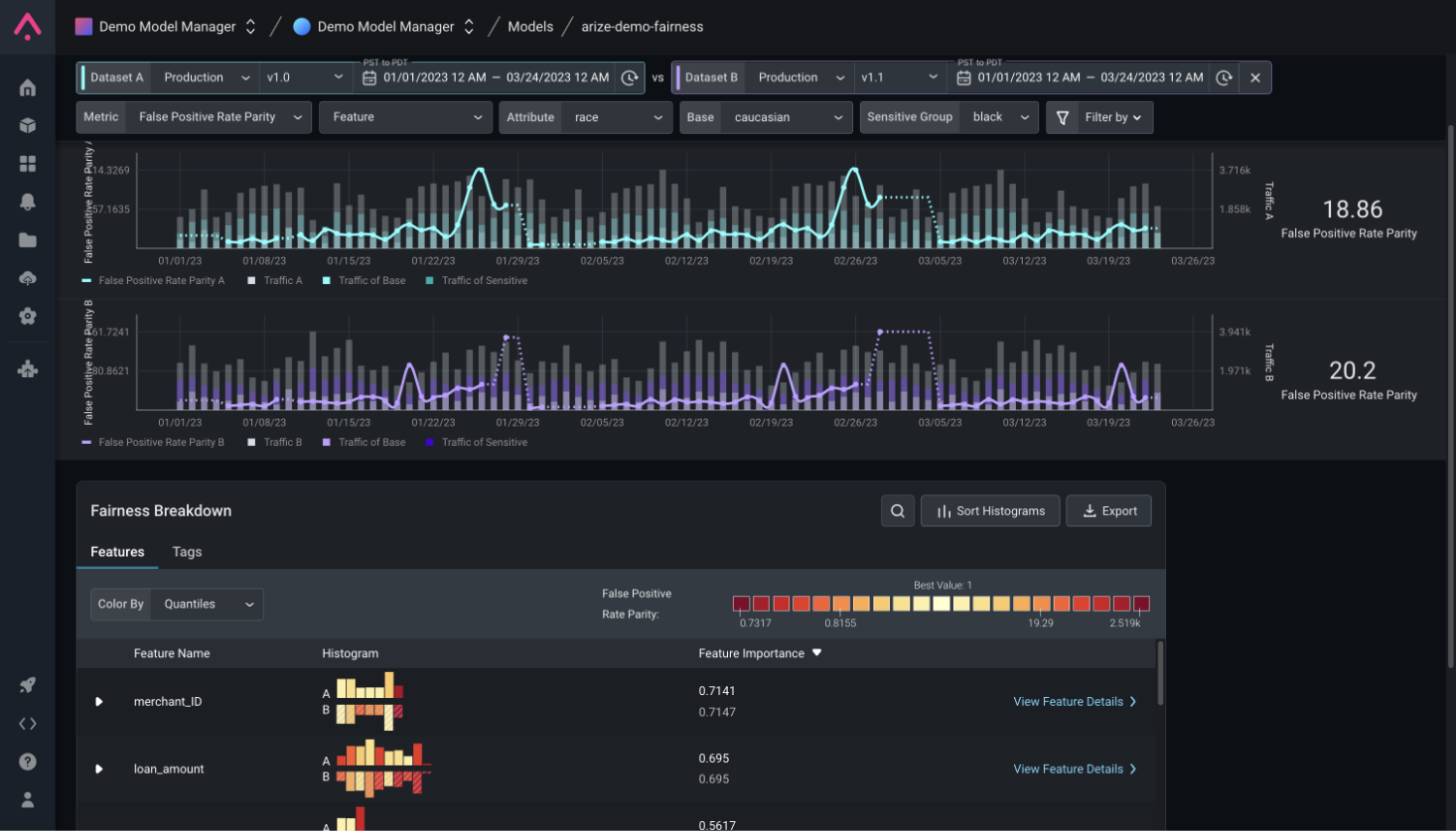
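One way to reason about drift impact is to weight each feature's drift by its importance, so that a large shift in an unimportant feature ranks below a moderate shift in an important one. The sketch below assumes drift is measured with the population stability index (PSI) over pre-binned distributions and that impact is the simple product of importance and PSI; both choices, along with the sample numbers, are assumptions for illustration rather than Arize's formula.

```python
import math


def psi(expected, actual, eps=1e-6):
    """Population stability index between two binned distributions (proportions)."""
    return sum(
        (a - e) * math.log((a + eps) / (e + eps))
        for e, a in zip(expected, actual)
    )


# Hypothetical bin proportions per feature for baseline and production data.
baseline = {"age": [0.25, 0.50, 0.25], "income": [0.2, 0.6, 0.2]}
production = {"age": [0.25, 0.50, 0.25], "income": [0.5, 0.4, 0.1]}

# Hypothetical global feature importance (for example, mean absolute SHAP).
importance = {"age": 0.6, "income": 0.4}

# Assumed scoring rule: impact = importance * drift.
impact = {f: importance[f] * psi(baseline[f], production[f]) for f in importance}
most_impactful = max(impact, key=impact.get)
print(most_impactful)
```

Here `age` is the more important feature but has not drifted, so `income` ends up with the higher impact score.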

Bias Tracing

Proactively evaluate how models behave across protected attributes and sensitive groups by monitoring fairness metrics such as recall parity and disparate impact.
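As a rough sketch of the two metrics named above: recall parity is commonly defined as the ratio of recall in the sensitive group to recall in the base group, and disparate impact as the ratio of their positive prediction rates. A value near 1.0 indicates parity, and a widely used rule of thumb flags values outside roughly 0.8 to 1.25. The record layout and helper names below are hypothetical.

```python
def recall(rows):
    """Share of actual positives that the model predicted as positive."""
    positives = [r for r in rows if r["actual"] == 1]
    if not positives:
        return float("nan")
    return sum(r["pred"] for r in positives) / len(positives)


def positive_rate(rows):
    """Share of all rows that received a positive prediction."""
    return sum(r["pred"] for r in rows) / len(rows)


def fairness(rows, attr, sensitive, base):
    """Recall parity and disparate impact of `sensitive` relative to `base`."""
    s = [r for r in rows if r[attr] == sensitive]
    b = [r for r in rows if r[attr] == base]
    return {
        "recall_parity": recall(s) / recall(b),
        "disparate_impact": positive_rate(s) / positive_rate(b),
    }


def make(group, actuals, preds):
    """Build hypothetical prediction records for one group."""
    return [{"group": group, "actual": a, "pred": p} for a, p in zip(actuals, preds)]


rows = make("A", [1, 1, 0, 0], [1, 1, 1, 0]) + make("B", [1, 1, 0, 0], [1, 0, 0, 0])
metrics = fairness(rows, "group", sensitive="B", base="A")
print(metrics)
```

Both ratios fall well below 0.8 in this toy data, which would mark group B as underserved relative to group A.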

Compare Bias Across Datasets

Glean deeper insight into where model bias occurs by comparing fairness metrics across model versions and across training, validation, and production datasets.