As of October 2025, 82% of enterprise leaders rely on generative AI weekly, according to a recent report from Wharton and GBK, with three in four seeing positive returns on AI investments. However, challenges remain in scaling agents and bringing them into mainstream production use. Among several reasons, the lack of lifecycle management infrastructure and of a robust evaluation pipeline are major issues. To address them, teams increasingly need tools that track, evaluate, and support the complete management of LLM pipelines: LLM tracing tools.

What Is LLM Tracing and Why Is It Required for AI Agents?

LLM Tracing refers to understanding what happens inside a black-box application, from inputs to outputs. It is like observing and keeping track of every detail at each decision-making step. This gives quick insight into what is working well and which parts need attention to improve performance. Overall, LLM Tracing can optimize cost and efficiency and reduce bottlenecks by making the process transparent.

LLM Tracing is particularly crucial for AI Agents because they operate in open-ended environments and deal with multiple tools and frameworks. In such a complex system, it is difficult to find the root cause of a problem when the final output is undesirable. LLM tracing creates an audit trail of all the chain-of-thought steps, so it is easier to identify bugs or anomalous behaviour in multi-agent systems.
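To make the idea of an audit trail concrete, here is a minimal sketch of a trace as a tree of nested steps. It uses only the Python standard library; the `span` helper, the `SPANS` list, and the `run_agent` function are hypothetical names invented for this illustration and do not come from any tracing library.

```python
import time
import uuid
from contextlib import contextmanager

# Hypothetical in-memory tracer, for illustration only (not a real library API).
SPANS = []    # finished spans, in completion order
_stack = []   # currently open spans, innermost last


@contextmanager
def span(name, **attributes):
    """Record one step of an agent run and link it to its parent step."""
    record = {
        "id": uuid.uuid4().hex[:8],
        "parent_id": _stack[-1]["id"] if _stack else None,
        "name": name,
        "attributes": attributes,
        "status": "ok",
    }
    _stack.append(record)
    start = time.perf_counter()
    try:
        yield record
    except Exception as exc:
        record["status"] = f"error: {exc}"
        raise
    finally:
        record["duration_ms"] = (time.perf_counter() - start) * 1000
        _stack.pop()
        SPANS.append(record)


def run_agent(question):
    with span("agent", input=question) as root:
        with span("retrieve", query=question) as step:
            docs = ["Paris is the capital of France."]  # stand-in for a retriever
            step["attributes"]["num_docs"] = len(docs)
        with span("llm_call", prompt=f"{docs[0]} {question}") as step:
            answer = "Paris"  # stand-in for a model response
            step["attributes"]["output"] = answer
        root["attributes"]["output"] = answer
    return answer


run_agent("What is the capital of France?")
for s in SPANS:
    print(s["name"], "parent:", s["parent_id"], "status:", s["status"])
```

Because each record keeps its `parent_id`, a failed or slow step can be located in the tree instead of being inferred from the final output alone.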

What Instrumentation Is Needed for LLM Tracing?

Instrumentation is the process of capturing emitted traces from an application for analysis. It logs every operation, decision, and interaction within an LLM pipeline. Traces can be captured manually, or capture can be automated with instrumentation tools or plugin providers such as Arize Phoenix. With Phoenix, traces of all operational units are captured automatically, without manual effort.
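As a rough sketch of what automated instrumentation does, the example below patches a client method once so that every later call is logged without changing the call sites. `FakeLLMClient`, `instrument`, and `TRACE_LOG` are hypothetical stand-ins written for this illustration; real instrumentors are more involved, and this is not how any specific tool is implemented.

```python
import functools
import time

TRACE_LOG = []  # hypothetical in-memory sink for captured calls


class FakeLLMClient:
    """Stand-in for a provider SDK client; not a real API."""

    def complete(self, prompt):
        return f"echo: {prompt}"


def instrument(cls, method_name):
    """Patch cls.method_name so every call is logged at the point of use."""
    original = getattr(cls, method_name)

    @functools.wraps(original)
    def wrapper(self, *args, **kwargs):
        start = time.perf_counter()
        result = original(self, *args, **kwargs)
        TRACE_LOG.append({
            "operation": f"{cls.__name__}.{method_name}",
            "args": args,
            "kwargs": kwargs,
            "output": result,
            "latency_ms": (time.perf_counter() - start) * 1000,
        })
        return result

    setattr(cls, method_name, wrapper)


instrument(FakeLLMClient, "complete")       # one-time setup
reply = FakeLLMClient().complete("hello")   # application code is unchanged
print(TRACE_LOG[0]["operation"], "->", reply)
```

Manual instrumentation would instead place the logging calls inside the application code itself, which gives finer control at the cost of more effort.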

To make LLM instrumentation consistent and interoperable, Arize developed OpenInference, an open standard built on top of the OpenTelemetry Protocol (OTLP). OpenInference defines the schema and semantic layer for capturing and labeling LLM-specific events such as model calls, prompts, tool invocations, and evaluation metadata. By extending OTLP, it allows this structured data to flow seamlessly through the OpenTelemetry ecosystem.
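The snippet below sketches what "labeling LLM-specific events" can look like in practice: one model call flattened into key/value span attributes. The keys are intended to mirror the OpenInference semantic conventions, but treat the exact names as assumptions and check them against the published spec; the `llm_span_attributes` helper is hypothetical.

```python
def llm_span_attributes(model, messages, output, prompt_tokens, completion_tokens):
    """Flatten one LLM call into key/value span attributes."""
    attrs = {
        # Attribute keys are assumed to match the OpenInference conventions
        "openinference.span.kind": "LLM",
        "llm.model_name": model,
        "output.value": output,
        "llm.token_count.prompt": prompt_tokens,
        "llm.token_count.completion": completion_tokens,
        "llm.token_count.total": prompt_tokens + completion_tokens,
    }
    # Span attributes are flat, so list items are addressed with indexed keys
    for i, message in enumerate(messages):
        attrs[f"llm.input_messages.{i}.message.role"] = message["role"]
        attrs[f"llm.input_messages.{i}.message.content"] = message["content"]
    return attrs


attrs = llm_span_attributes(
    model="gpt-4o-mini",
    messages=[{"role": "user", "content": "Where did Harrison work?"}],
    output="Harrison worked at Kensho.",
    prompt_tokens=12,
    completion_tokens=6,
)
for key, value in attrs.items():
    print(f"{key} = {value!r}")
```

A shared vocabulary like this is what lets different backends render the same span as an "LLM call" without tool-specific parsing.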

OpenInference has quickly gained adoption beyond Arize’s ecosystem. Tools like Comet Opik and LangSmith leverage OpenInference-based integrations to maintain compatibility and ensure consistent trace representation across platforms. This growing adoption is important because it enables a common standard for tracing LLM and agent behavior, reducing integration friction and making it easier for teams to swap models, frameworks, and other tools without having to re-instrument.

Once the traces are captured, they are exported to a collector, a separate component that processes them and generates insights through visualizations. Arize Phoenix handles this on its own by passing data to the Phoenix collector.

Together, OpenInference and OTLP form the backbone of modern LLM tracing. OpenInference standardizes what to capture for AI systems, while OTLP governs how that data is transmitted across observability tools.
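To illustrate the "how" side, the sketch below builds an OTLP/JSON trace payload by hand, using only the standard library. The nesting (`resourceSpans`, `scopeSpans`, `spans`) follows the OTLP trace format; the helper names, the `manual-sketch` scope name, and the sample attributes are assumptions made for this example. Nothing is sent over the network here; an OTLP/HTTP collector would typically receive such a body as a POST to its `/v1/traces` path.

```python
import json
import secrets
import time


def to_otlp_attributes(attrs):
    """Convert a flat dict into OTLP's typed key/value list."""
    out = []
    for key, value in attrs.items():
        if isinstance(value, bool):
            typed = {"boolValue": value}
        elif isinstance(value, int):
            typed = {"intValue": str(value)}  # 64-bit ints are strings in OTLP/JSON
        elif isinstance(value, float):
            typed = {"doubleValue": value}
        else:
            typed = {"stringValue": str(value)}
        out.append({"key": key, "value": typed})
    return out


def build_otlp_payload(service_name, span_name, attrs, start_ns, end_ns):
    span = {
        "traceId": secrets.token_hex(16),  # 16 random bytes, hex-encoded
        "spanId": secrets.token_hex(8),    # 8 random bytes, hex-encoded
        "name": span_name,
        "kind": 1,                         # SPAN_KIND_INTERNAL
        "startTimeUnixNano": str(start_ns),
        "endTimeUnixNano": str(end_ns),
        "attributes": to_otlp_attributes(attrs),
    }
    return {
        "resourceSpans": [{
            "resource": {"attributes": to_otlp_attributes({"service.name": service_name})},
            "scopeSpans": [{"scope": {"name": "manual-sketch"}, "spans": [span]}],
        }]
    }


now = time.time_ns()
payload = build_otlp_payload(
    "my-llm-app", "llm_call",
    {"llm.model_name": "gpt-4o-mini", "llm.token_count.total": 18},
    start_ns=now, end_ns=now + 250_000_000,
)
print(json.dumps(payload, indent=2))
```

In practice an SDK exporter produces and ships this payload, so application code rarely builds it directly; the sketch only shows the shape of the data on the wire.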

In this blog, we will go through the top tools for LLM Tracing in 2025 in alphabetical order. Let’s begin.

Top Tools for LLM Tracing

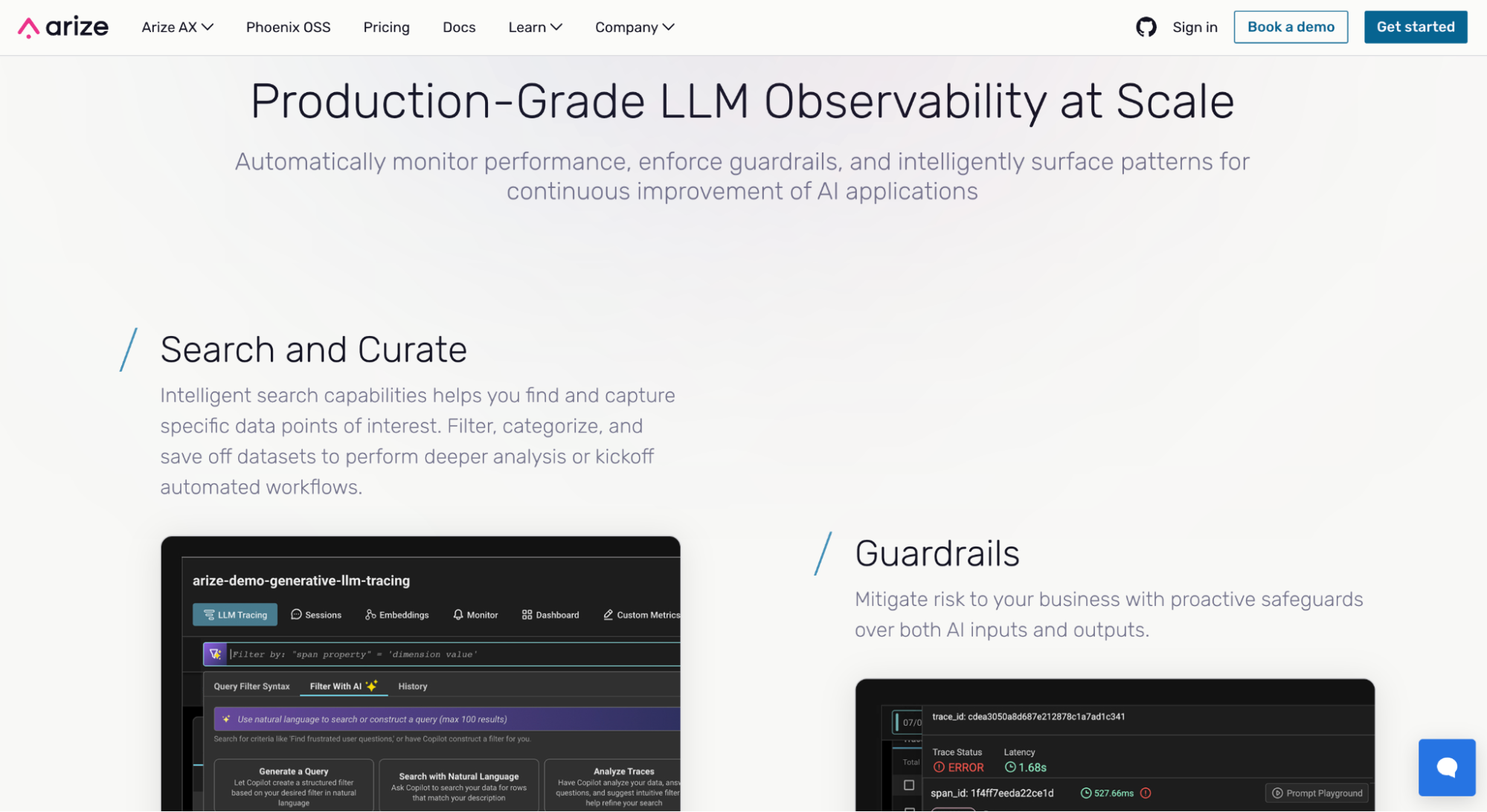

Arize AX

Arize AX is a great tool for end-to-end LLM app development for enterprises, making applications production-ready by offering integrated tools that can trace, evaluate, and iterate during development.

Key Features:

- Tracing for Agentic AI applications: Offers easy visualization and debugging of the agent’s end-to-end data flow for LLM calls in Agentic AI applications.

- Tracing can be further enhanced and made seamless with Arize’s tracing assistant – a Model Context Protocol (MCP) server which allows integration of Arize’s support, documentation, and curated examples directly into the IDE.

- Sessions enable groupings of traces and interaction history for each user session, helping to pinpoint where the user experience breaks down and understand overall agent performance over time.

- Arize offers multiple tracing integrations in terms of LLM providers (OpenAI, Amazon Bedrock, Anthropic, etc.), frameworks and platforms (AutoGen, Crew AI, Pydantic, LangChain, etc.).

- Arize provides a cost tracking feature to filter and monitor traces based on costs, and provides trace-level and span-level visualizations for a given request.
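The session grouping and cost filtering described in the list above can be pictured with a small sketch. The trace records and field names here are hypothetical and are not Arize's schema; the point is only to show how grouping by a session ID and filtering by cost work conceptually.

```python
from collections import defaultdict

# Hypothetical trace records; field names are illustrative, not Arize's schema.
traces = [
    {"trace_id": "t1", "session_id": "s1", "cost_usd": 0.012, "error": False},
    {"trace_id": "t2", "session_id": "s1", "cost_usd": 0.030, "error": True},
    {"trace_id": "t3", "session_id": "s2", "cost_usd": 0.004, "error": False},
]


def summarize_sessions(traces):
    """Group traces by session and total their cost and error counts."""
    sessions = defaultdict(lambda: {"traces": [], "cost_usd": 0.0, "errors": 0})
    for trace in traces:
        session = sessions[trace["session_id"]]
        session["traces"].append(trace["trace_id"])
        session["cost_usd"] += trace["cost_usd"]
        session["errors"] += int(trace["error"])
    return dict(sessions)


summary = summarize_sessions(traces)

# Filter traces whose cost exceeds an arbitrary threshold chosen for this example
expensive = [t["trace_id"] for t in traces if t["cost_usd"] > 0.01]

for session_id, info in summary.items():
    print(session_id, info)
print("expensive traces:", expensive)
```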

To get started with tracing on Arize AX, check out the complete tutorial here.

Install the tracing packages using pip:

    pip install arize-otel openai openinference-instrumentation-openai opentelemetry-exporter-otlp

    # Import open-telemetry dependencies
    from arize.otel import register

    # Setup OTel via our convenience function
    tracer_provider = register(
        space_id="your-space-id",  # in app space settings page
        api_key="your-api-key",  # in app space settings page
        project_name="your-project-name",  # name this to whatever you would like
    )

    # Import the automatic instrumentor from OpenInference
    from openinference.instrumentation.openai import OpenAIInstrumentor

    # Finish automatic instrumentation
    OpenAIInstrumentor().instrument(tracer_provider=tracer_provider)

Other key AX features:

- Prompt playground: Allows testing various prompts and receiving real-time performance feedback against different datasets.

- Online and offline evals: Arize LLM evaluation framework provides eval templates, or one can also create custom evals to assess LLM task performance.

- Risk mitigation: Provides guardrails for inputs and outputs.

- Alyx, Arize’s co-pilot agent: It inspects traces, clusters failure modes, and drafts follow-up evals, helping engineers get the system production-ready faster.

- Performance monitoring: Provides performance monitoring dashboards that automatically surface when issues like hallucination are detected.

- Annotation workflows: Offers easy-to-use annotation workflows that can identify and correct errors to refine the quality of LLM apps.

- Pricing: A robust community edition of the product with all of the above features is free; see the pricing page for full breakdowns.

Limitations:

- May not be suitable for smaller teams as it is primarily targeted towards enterprises and high-growth technology companies.

- While integration coverage has expanded through OpenInference, teams may need to add light custom instrumentation for frameworks that are not yet natively supported.

Arize Phoenix

Arize Phoenix is a popular open source LLM Tracing and Evaluation platform with over 2.5M monthly downloads and a community of over 10K, used by top AI teams such as Booking, Wayfair, and IBM.

Key LLM tracing and related features:

- Multiagent system and LLM application tracing: Provides end-to-end application tracing with options for either manual or automatic tracing.

- With Phoenix, various performance and operational issues can be handled by analyzing trace data metrics. Each project on Phoenix comes with a pre-defined metrics dashboard with metrics such as ‘Trace latency and errors’, ‘Latency Quantiles’, and ‘Cost over Time by token type’.

- Phoenix provides ‘projects’ to build an organisational structure to logically separate LLM observability data.

- It provides ‘sessions’ to track and analyse traces related to multi-turn conversations.

- Supports both Python and TypeScript SDKs for easy integration across different environments.

- To get started with tracing on Phoenix, check out the complete tutorial here.

- Dataset visualization: Offers clustering and visualizations of datasets. With the help of embeddings, it’s easy to identify segmentation chunks, prompts, and responses.

- Easy integration: Phoenix connects well with most of the LLM tools, such as LlamaIndex, OpenAI, LangChain, and more.

- Pricing: Open source (Elastic License 2.0 (ELv2)).
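The dashboard metrics named in the list above, latency quantiles and cost by token type, are simple to compute from trace data. The sketch below shows the arithmetic with the standard library; the latency samples and per-token prices are made-up values for illustration, and this is not how Phoenix itself computes its dashboards.

```python
import statistics

# Hypothetical span latencies in milliseconds
latencies_ms = [120, 135, 150, 160, 180, 210, 240, 300, 450, 1200]

# Assumed prices per 1,000 tokens, chosen only for this example
PRICE_PER_1K_TOKENS = {"prompt": 0.00015, "completion": 0.0006}


def latency_quantiles(values):
    """Return p50/p95/p99 using linear interpolation between samples."""
    cuts = statistics.quantiles(values, n=100, method="inclusive")  # 99 cut points
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


def cost_by_token_type(token_counts):
    """Split cost by token type so prompt and completion spend can be compared."""
    return {
        kind: count / 1000 * PRICE_PER_1K_TOKENS[kind]
        for kind, count in token_counts.items()
    }


quantiles = latency_quantiles(latencies_ms)
costs = cost_by_token_type({"prompt": 2000, "completion": 500})
print(quantiles)
print(costs)
```

Quantiles matter more than averages here because a single slow outlier, like the 1200 ms sample, can hide behind a healthy-looking mean.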

Installing Packages and Registering a Tracer with Phoenix Cloud:

    pip install arize-phoenix-otel
    pip install openinference-instrumentation-openai

    from phoenix.otel import register

    tracer_provider = register(
        project_name="my-llm-app",
        auto_instrument=True,
    )

    tracer = tracer_provider.get_tracer(__name__)

Limitations:

- Teams without extensive infrastructure knowledge may find it difficult to host and manage the applications.

- No agent to help with LLM tracing tasks.

- Being open source, Phoenix offers community-based support rather than dedicated enterprise assistance.

Braintrust

Braintrust is a development and evaluation platform for AI. The platform provides robust prompt management capabilities, allows cross-functional collaboration, and offers fast infrastructure for running evals quickly.

Key Features:

- Intuitive mental model: The evals are composed of a dataset, task, and scorers, which gives teams a quick understanding of the performance of AI systems and how they can be improved.

- Batch Testing: Allows running prompts against real-world data samples to understand their performance across scenarios.

- AI-assisted workflows: Offers a built-in AI agent called ‘Loop’ which can assist with automating writing, scores, and datasets.

- Evals: Provides an interactive dashboard with alerts and visualization to see results of experiments on key LLM metrics such as average cost, token count, etc.

- Pricing: 1) Free plan, 2) Pro plan – $249 / month, 3) Enterprise plan – custom pricing
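The dataset, task, and scorers model mentioned in the list above can be sketched in a few lines of plain Python. This does not use the Braintrust SDK; `run_eval`, the canned `task`, and both scorers are hypothetical names written only to show how the three pieces fit together.

```python
dataset = [
    {"input": "2 + 2", "expected": "4"},
    {"input": "capital of France", "expected": "Paris"},
]


def task(text):
    """Stand-in for the LLM call under test."""
    canned = {"2 + 2": "4", "capital of France": "paris"}
    return canned.get(text, "")


def exact_match(output, expected):
    return 1.0 if output == expected else 0.0


def case_insensitive_match(output, expected):
    return 1.0 if output.lower() == expected.lower() else 0.0


def run_eval(dataset, task, scorers):
    """Run the task on every example and score each output with every scorer."""
    rows = []
    for example in dataset:
        output = task(example["input"])
        scores = {fn.__name__: fn(output, example["expected"]) for fn in scorers}
        rows.append({"input": example["input"], "output": output, "scores": scores})
    averages = {
        fn.__name__: sum(row["scores"][fn.__name__] for row in rows) / len(rows)
        for fn in scorers
    }
    return rows, averages


rows, averages = run_eval(dataset, task, [exact_match, case_insensitive_match])
print(averages)
```

Keeping the three parts separate means a new prompt or model only changes `task`, while the dataset and scorers stay fixed and keep results comparable across runs.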

Limitations:

- Difficult to trace agents; multi-step runs with tool calls may need external instrumentation to capture the full context.

- Limited community results in fewer third-party vendor integrations.

- Governance controls such as tenancy isolation and audit logs are still not very mature, hence they can be considered more experimental.

- Tracing in Braintrust is positioned as an advanced use case. The tracing API enables teams to log nested spans and link model calls or evaluations to sub-components of a workflow, but tracing is primarily intended for debugging and introspection during development rather than for full production observability or online evaluation.

Comet Opik

Comet Opik is an open-source LLM evaluation tool that extends beyond conventional tracing workflows. It offers features such as built-in guardrails to ship LLM applications faster.

Features:

- Benchmark LLM applications: Allows optimizing LLM applications by logging traces, computing and scoring evaluation metrics, and comparing performance across different versions.

- Optimization of prompts: Provides four types of prompt packages for various LLM systems, including text-based and multi-agent systems.

- Guardrails: Enhances the safety of LLM applications with guardrails such as screening inputs and outputs for unwanted content and managing off-topic discussions.

- Unit Testing: Provides LLM unit tests (built on PyTest) to comprehensively evaluate the entire system within the CI/CD pipeline.

- Pricing: 1) Free plan, 2) Pro plan – $39/month, 3) Enterprise plan – custom pricing.
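To show how guardrails and LLM unit tests from the list above can work together, here is a minimal standard-library sketch. It does not use the Opik SDK: the `guard` patterns, `fake_llm`, and `guarded_call` are hypothetical, and the two `test_*` functions are written in the plain-assert style that PyTest collects.

```python
import re

# Illustrative patterns only; a real guardrail would be far more thorough.
BLOCKED_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),                        # SSN-like numbers
    re.compile(r"\b(?:password|api[_ ]key)\b", re.IGNORECASE),   # credential talk
]


def guard(text):
    """Return (allowed, reason) after screening text for unwanted content."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(text):
            return False, f"matched {pattern.pattern}"
    return True, ""


def fake_llm(prompt):
    """Stand-in for a model call."""
    return "The capital of France is Paris."


def guarded_call(prompt):
    allowed, _ = guard(prompt)       # screen the input first
    if not allowed:
        return "[blocked input]"
    answer = fake_llm(prompt)
    allowed, _ = guard(answer)       # then screen the output
    return answer if allowed else "[blocked output]"


# PyTest collects functions named test_*; they also run as plain functions.
def test_answer_mentions_paris():
    assert "Paris" in guarded_call("What is the capital of France?")


def test_sensitive_input_is_blocked():
    assert guarded_call("My password is hunter2") == "[blocked input]"


test_answer_mentions_paris()
test_sensitive_input_is_blocked()
print("guardrail tests passed")
```

Running such tests in a CI/CD pipeline catches regressions in both the model-facing logic and the guardrail rules before a release.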

Limitations:

- The automatic prompt optimization packages may lack transparency into how they work, so developers could need more time to debug or fully understand the outcomes.

- Provides very limited direct integrations with other libraries and frameworks.

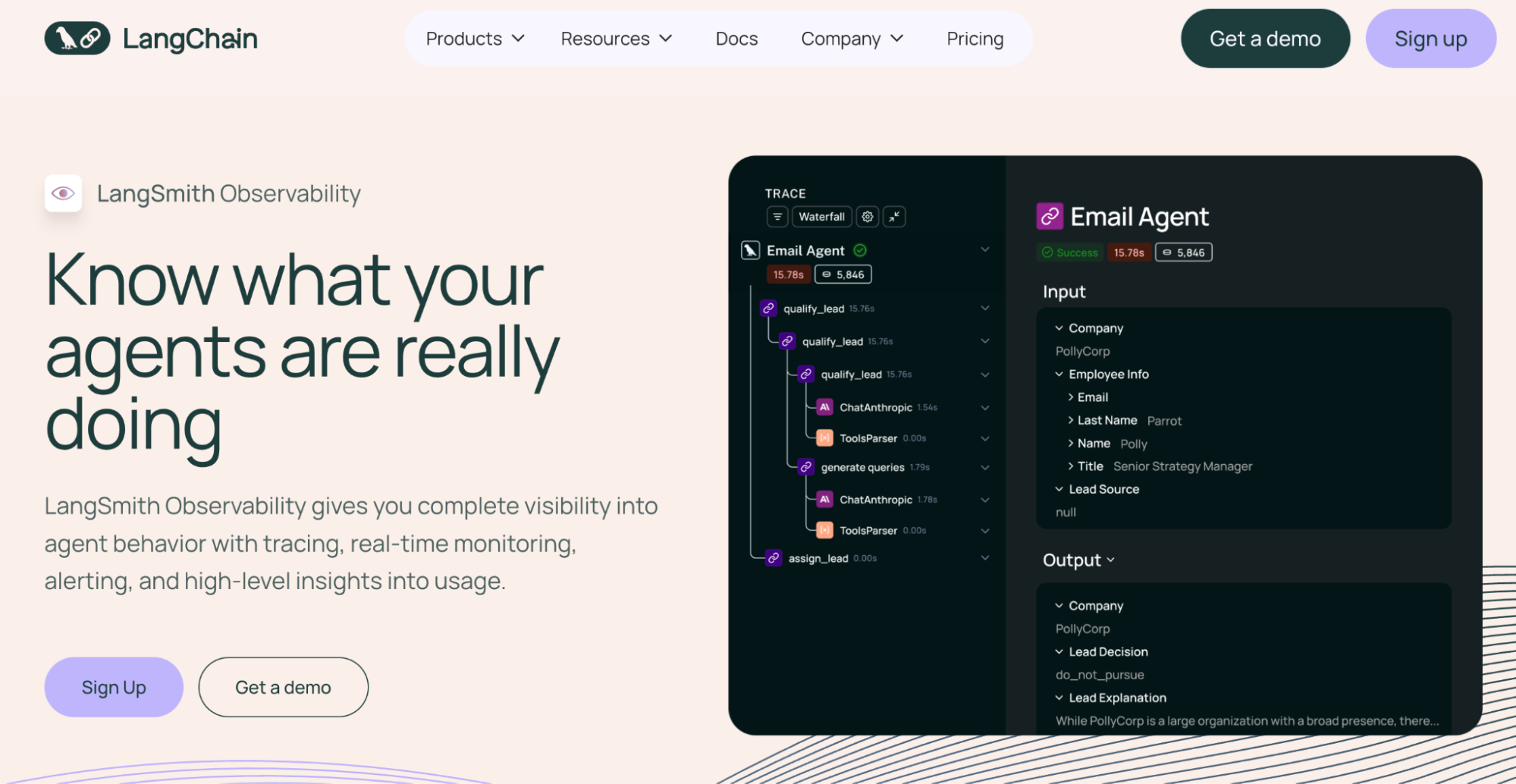

LangSmith

LangSmith Observability is an ideal tool for AI agent behavior tracing for those building in the LangChain ecosystem. It offers comprehensive insights into the overall workings of LLM applications.

    mkdir ls-observability-quickstart && cd ls-observability-quickstart
    python -m venv .venv && source .venv/bin/activate
    python -m pip install --upgrade pip
    pip install -U langsmith openai

Installing dependencies for LangSmith.

    from openai import OpenAI
    from langsmith.wrappers import wrap_openai  # traces openai calls

    def retriever(query: str):
        return ["Harrison worked at Kensho"]

    client = wrap_openai(OpenAI())  # log traces by wrapping the model calls

    def rag(question: str) -> str:
        docs = retriever(question)
        system_message = (
            "Answer the user's question using only the provided information below:\n"
            + "\n".join(docs)
        )
        resp = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[
                {"role": "system", "content": system_message},
                {"role": "user", "content": question},
            ],
        )
        return resp.choices[0].message.content

    if __name__ == "__main__":
        print(rag("Where did Harrison work?"))

Key Features:

- Relevant metrics monitoring: Tracks crucial metrics such as cost and latency and provides updates on a live dashboard with alerts for when issues happen.

- Meaningful insights: Provides actionable insights about usage patterns, such as automatically finding the most common failure modes without manual effort.

- Integrations: Supports OTEL and OpenInference to unify the observability stack across various services in agentic systems.

- Deployment: Manages long-running agents with their own deployment infrastructure. Offers access to LangSmith Studio, allows exposing an agent as an MCP server, and provides a 1-click deploy option.

- Pricing: 1) Developer plan – $0 / seat per month, pay as you go afterwards, 2) Plus plan for teams working with agents – $39 / seat per month, pay as you go afterwards, 3) Enterprise plan for teams with advanced hosting, security, and support needs – Undisclosed.
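The metric alerts mentioned in the list above usually come down to a threshold rule over a recent window of values. Here is a minimal sketch of that idea in plain Python; the `RollingAlert` class, the threshold, and the sample latencies are assumptions for illustration and do not reflect LangSmith's implementation or API.

```python
from collections import deque


class RollingAlert:
    """Fire when the mean of the last `window` observations exceeds a threshold."""

    def __init__(self, threshold, window=5):
        self.threshold = threshold
        self.values = deque(maxlen=window)  # old values drop off automatically

    def observe(self, value):
        self.values.append(value)
        mean = sum(self.values) / len(self.values)
        return mean > self.threshold


# Hypothetical latency samples in milliseconds, alerting above a 500 ms mean
latency_alert = RollingAlert(threshold=500, window=3)
fired = [latency_alert.observe(v) for v in [200, 300, 400, 900, 1000]]
print(fired)
```

Averaging over a window rather than alerting on single values reduces noise from one-off slow requests while still reacting within a few calls when latency or cost genuinely shifts.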

Limitations:

- It is very much integrated into the LangChain ecosystem; hence, scaling beyond it is difficult.

- It may not be suitable for large production volumes because users report they often hit workspace limits within a short duration of time.

- Advanced deployment management features are only accessible to teams buying the enterprise plan, which may be expensive for smaller teams.