Most commonly, we hear about evaluating LLM applications at the span level. This involves checking whether a tool call succeeded, whether an LLM hallucinated, or whether a response matched expectations. But sometimes, span-level metrics don’t tell the whole story. To understand if a workflow succeeded end-to-end, we need to zoom out to the trace level.

This tutorial will focus on running trace-level evaluations directly in your code. We will log our results back to the Arize platform for further analysis.

Our notebook provides an easy way to test out these evaluations for yourself with a free Arize AI account.

What’s a Trace?

Whenever your LLM application runs, it takes multiple steps behind the scenes: tool calls, LLM calls, retrieval operations, and more.

- A span represents a single step (e.g., one tool call).

- A trace represents the entire chain of steps taken for a single user request.

Evaluating at the trace level means asking:

- Did the overall request succeed?

- Was the workflow efficient?

- Were the answers relevant to the user’s query?

This perspective is especially valuable for multi-step workflows or multi-agent systems.

Why Trace-Level Evals?

Trace-level evaluations let you answer bigger-picture questions:

- Did the agent follow the right sequence of steps?

- Was the overall recommendation relevant?

- Did the workflow succeed end-to-end?

For example, in our movie recommendation agent example, span-level evals can tell you if each tool worked correctly, but only trace-level evals can confirm whether the final recommendations matched the user’s request (ex: comedy movies instead of action).

Example: Building a Movie Recommendation Agent

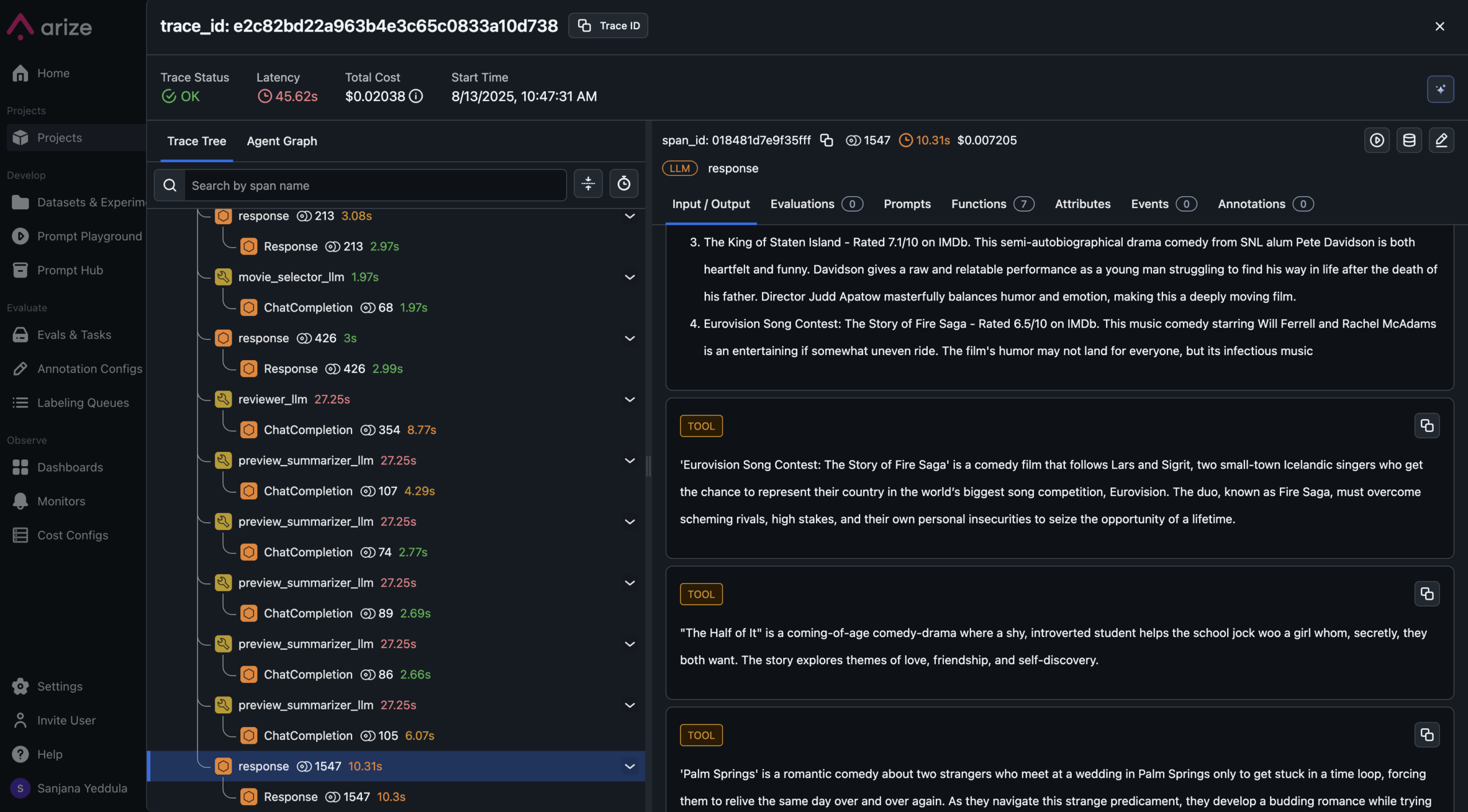

To demonstrate trace-level evaluations, we built a simple agent with three tools:

- Movie Selector – fetches a list of movies based on a genre.

- Reviewer – sorts movies by ratings and explains why.

- Preview Summarizer – generates short previews for each movie.

So when a user asks, “Which comedy movie should I watch?”, the agent:

- Calls the Movie Selector to grab titles

- Sends those titles to the Reviewer

- Runs the Preview Summarizer on each title

- Returns a neat final answer with movies, ratings, and previews

Each of these steps is traced in Arize and together they form the trace.

Running Trace-Level Evaluations in Code

Once we generate multiple traces by asking the agent different questions (ex: “Which Batman movie should I watch?”, “Recommend a scary horror movie”), we can start evaluating them.

Two evaluators we set up:

- Tool Calling Order – checks if the agent used tools in the correct sequence.

- Recommendation Relevance – checks if the final recommendations matched the user’s request throughout the entire process.

Here’s the flow:

- Export traces from Arize into a DataFrame.

- Aggregate spans into traces using trace IDs.

- Define evaluators with LLM as a Judge template (the evaluator will return a correct/incorrect label along with an explanation).

- Run evals with Phoenix evals.

- Log results back into Arize. Once evaluations are logged, you can view them directly inside the Arize platform at the trace level.This makes it easy to identify whether issues stem from individual steps or the overall workflow.

Why Trace-Level Evaluations Matter

In LLM evaluation, trace-level evals are a powerful way to ensure your multi-step AI systems don’t just succeed piece by piece, but actually deliver end-to-end reliability.

- Span-level evals are useful for debugging individual steps.

- Trace-level evals give you a broader view: was the entire workflow successful?

- Running trace evals with Phoenix Evals + Arize is straightforward and integrates directly into your workflow.