Agent Observability and Tracing

By Sanjana Yeddula, AI Engineer at Arize AI

Modern agents are increasingly complex. For starters, many are no longer just single agents – they are often multiple agents connected together through complex routing logic and handovers. They’re also increasingly multimodal. They connect with MCP servers as tools. They may even hand over control to entirely different systems through A2A communications. Despite this complexity, most teams are still debugging with vibes, tweaking prompts and shipping changes.

In order for multiagent systems to scale, something more is needed: agent observability.

Background

AI agents are the brains behind chatbots that can hold a conversation, assistants that can summarize documents in seconds, and agents that stitch together APIs and models to tackle complex tasks.

Conceptually, an AI agent is an LLM augmented with tools, data, and reasoning capabilities. At the heart of many agents is a router, usually an LLM itself, that decides where incoming requests should go. You can think of the router as the traffic controller with a toolbox. With this toolbox in hand, the agent can autonomously take a series of actions – often over multiple turns – to achieve a goal. It assesses its environment and adapts its plan on the fly as new information becomes available.

Instead of just reacting to a single prompt, agents plan and act dynamically in real time – making them more powerful and unpredictable than isolated LLM calls.

What is Agent Observability?

The principles behind agent observability remain the same as other forms of observability: make the agent’s internal workings visible, traceable, and understandable. The goal is for your agent to be a glass box.

Agent observability unveils:

- What steps the agent took to arrive at its final output

- What tools it uses, in what order, and why

- What data is retrieved and whether it’s relevant

- Where reasoning paths stayed on track and where they veered in the wrong direction

Why does this matter? Because subtle failures can snowball. An agent that makes a retrieval error or takes an inefficient path may confuse users, drive up latency, or rack up cost in production. Agent observability is essential for building agents that actually work.

Agent Evaluation

Once you are able to see what an AI agent is doing, the next step is measuring how well they are performing. This is rarely straightforward because agents take multiple steps and follow complex paths. Static test cases won’t do the trick here.

The good news is that there are multiple ways to test and evaluate agents. You can choose the methods that work best for your use case. Here we will share two main types of evaluation strategies: LLM as a Judge and Code Evals.

LLM as a Judge

We can prompt an LLM to assess an agent’s output and trajectories. This is a great alternative to human or user feedback which is expensive and usually difficult to obtain.

First identify what you want to measure, such as correctness or tool usage accuracy. Next, craft a clear evaluation prompt to tell the LLM how to assess the agent’s multi-step outputs. Then, run these evaluations across your agent runs to test and refine performance without using manual labels.

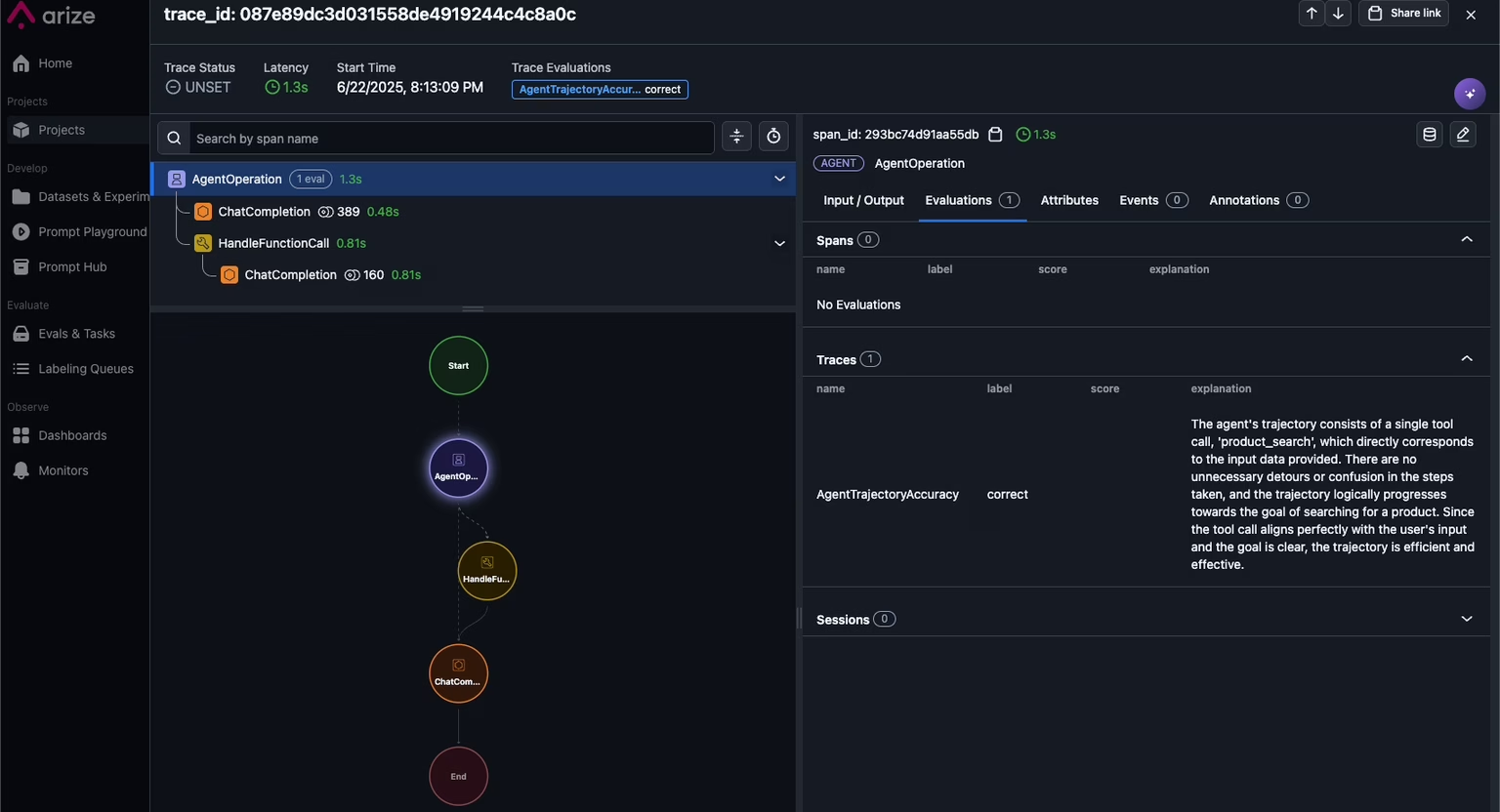

For example, agent trajectory evaluations use an LLM as a Judge to assess the entire sequence of tool calls an agent takes to solve a task. This helps you catch loops or unnecessary steps that inflate cost and latency to ensure the agent follows the expected “golden path.”

TRAJECTORY_ACCURACY_PROMPT = """

You are a helpful AI bot that checks whether an AI agent's internal trajectory is accurate and effective.

You will be given:

1. The agent's actual trajectory of tool calls

2. The user input that initiated the trajectory

3. The definition of each tool that can be called

An accurate trajectory:

- Progresses logically from step to step

- Uses the right tools for the task

- Is reasonably efficient (no unnecessary detours)

Actual Trajectory: {tool_calls}

User Input: {attributes.input.value}

Tool Definitions: {attributes.llm.tools}

Respond with **exactly** one word: `correct` or `incorrect`.

- `correct` → trajectory adheres to the rubric and achieves the task.

- `incorrect` → trajectory is confusing, inefficient, or fails the task.

"""

Arize offers pre-tested LLM as a Judge template for other agent evaluation use cases such as Agent Planning, Agent Tool Calling, Agent Tool Selection, Agent Parameter Extraction, and Agent Reflection.

Code Evaluations

When your evaluation relies on objective criteria that can be verified programmatically, code evals are the way to go. Think of them as the rule-following side of testing that runs quick, reliable checks to confirm the output hits all the right marks.

A great example of this is measuring your agent’s path convergence. When the agent takes multiple steps to reach an answer, you want to ensure it’s following consistent pathways and not wandering off into unnecessary loops.

To measure convergence: Run your agent on a batch of similar queries and record the number of steps taken for each run. Then, calculate a convergence score by averaging the ratio of the minimum steps for each query to the steps taken in each run.

This gives you a quick look at how well your agent sticks to efficient paths over many runs.

all_outputs = [ # Assume you have an output which has a list of messages, which is the path taken ]

optimal_path_length = 999

ratios_sum = 0

for output in all_outputs:

run_length = len(output)

optimal_path_length = min(run_length, optimal_path_length)

ratio = optimal_path_length / run_length

ratios_sum += ratio

# Calculate the average ratio

if len(all_outputs) > 0:

convergence = ratios_sum / len(all_outputs)

else:

convergence = 0

print(f"The optimal path length is {optimal_path_length}")

print(f"The convergence is {convergence}")

Evaluating AI agents requires looking beyond single-step outputs. By mixing evaluation strategies such as LLM-as-a-Judge for the big-picture story and code-based checks for the finer details, you get a 360° view of how your agent’s really doing. It’s important to remember that evaluation methods should be rigorously tested against ground truth datasets to ensure they generalize well to production.

Observability in Multi-Agent Systems

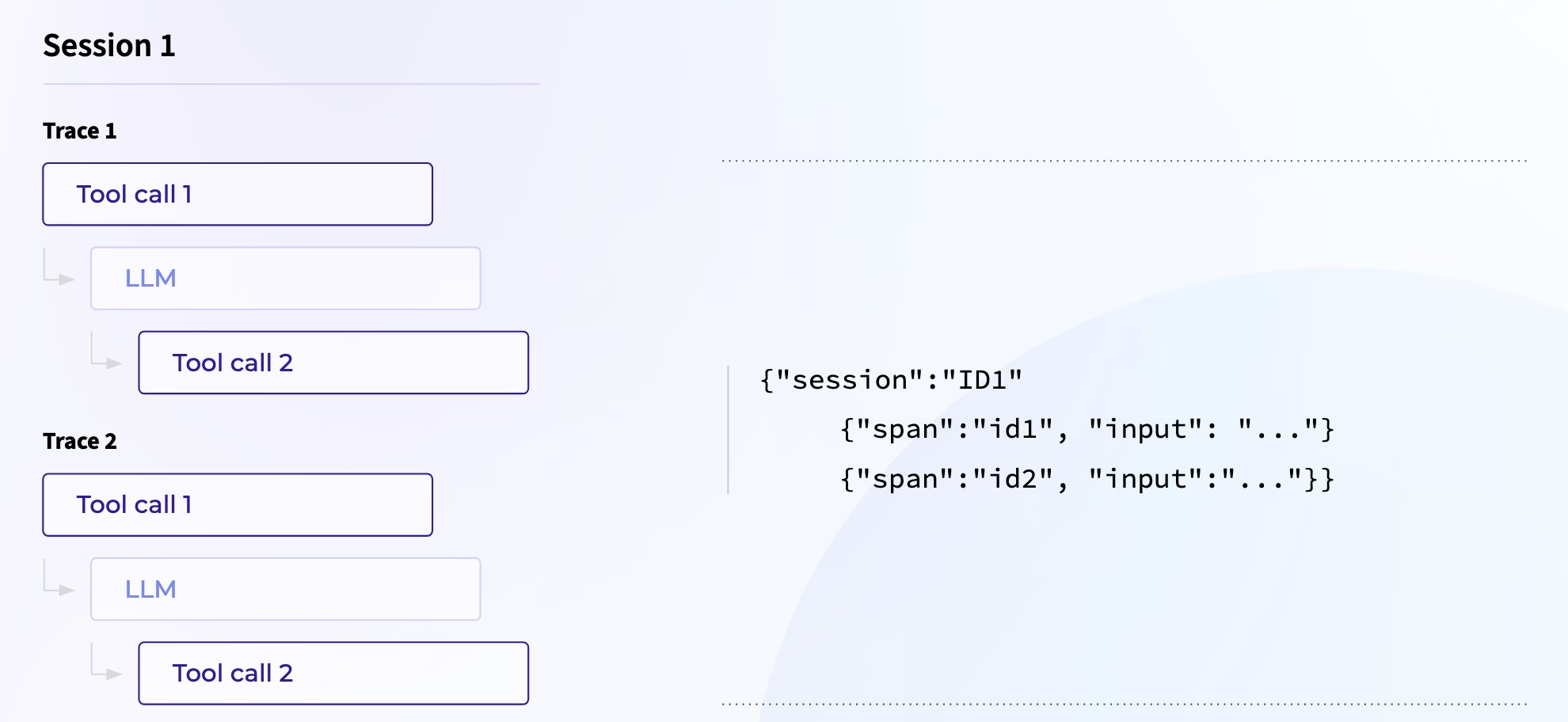

Multi-Agent Tracing

Multi-agent tracing systematically tracks and visualizes interactions among multiple agents within an AI system. Unlike traditional single-agent debugging, multi-agent tracing shows how agents interact, delegate tasks, and utilize tools in real-time. Visualizing these interactions through interactive flowcharts, such as those in “Agent Visibility,” helps engineers understand agent communications and decisions step-by-step. Clear tracing identifies logical breakdowns, bottlenecks, and inefficient tool usage, enabling more effective debugging and optimization.

Unified Observability Across Frameworks

Scaling multi-agent systems requires a unified observability framework across different agent technologies. Observability tools should integrate seamlessly with frameworks like Agno, Autogen, CrewAI, LangGraph, and SmolAgents without custom implementation. Standardized observability offers consistent insights, accelerates troubleshooting, and streamlines debugging across diverse agent setups and architectures.

Session-Level Observability: Context Matters

Why Session-Level Matters

Session-level observability evaluates an agent’s performance over an entire conversational or task-based session, beyond individual interactions. This evaluation addresses coherence (logical consistency), context retention (building effectively on previous interactions), goal achievement (fulfilling user intent), and conversational progression (naturally managing multi-step interactions). Session-level observability ensures reliability and context awareness in agents performing complex, multi-turn tasks.

Best Practices for Session-Level Evaluation

Effective session-level evaluations require careful planning. Best practices include defining criteria for coherence, context retention, goal achievement, and conversational progression before evaluations begin. Evaluation prompts should clearly instruct models or LLMs on assessing these aspects. Combining automated methods (e.g., LLM-as-a-judge) with targeted human reviews ensures comprehensive evaluation. Regularly updating evaluation criteria based on evolving interactions and agent capabilities maintains continual improvement.

Advanced Concepts

Tracing MCP Clients & Servers

MCP refers to Anthropic’s Model Context Protocol, an open standard for securely connecting LLMs (like Claude) to external data sources and tools.

Visibility Gap

Previously, developers could only trace what’s happening on the client — like LLM calls and tool selection — or on the MCP server in isolation. But once a client made a call to an MCP server, you lost visibility into what happened next.

Context Propagation

openinference-instrumentation-mcp bridges this gap. By auto-instrumenting both client and server with OpenTelemetry you can propagate OpenTelemetry context between the MCP client and server, unifying them into a single trace. This allows:

- Tool calls made by the client to show corresponding server-side execution.

- Visibility into LLM calls made by the MCP server itself.

- A full trace of the agent’s behavior across systems—all visible in Arize AX or Phoenix.

This is one of the first examples of MCP tracing and promises to make debugging, optimization, and visibility dramatically easier.

Voice / Multimodal Observability

Multimodality brings new challenges and opportunities for agent observability. Unlike pure text workflows, these agents process voice, images, and other data types.

Tracing multimodal agents helps you align transcriptions, image embeddings, and tool calls within a unified view, making it easier to debug tricky misinterpretations (like a faulty transcription). Tracing also surfaces latency per modality, which is crucial since voice and image processing can sneak in hidden bottlenecks that can quickly erode the user experience.

Evaluation for multimodal agents heavily on checking whether the agent understood and responded to its inputs correctly. Using LLM as a Judge, you can assess whether an image caption matches the uploaded image or if a transcribed voice command triggers the right tool call. Code-based checks add another layer by catching deterministic issues, like missing required objects in generated captions or verifying if a response aligns with structured data pulled from an image.

Multimodality certainly introduces new layers of complexity that demand careful observability and evaluation. Taking the time to truly understand how your agent processes all these different inputs gives you the power to build systems that actually keep up with the unpredictable signals of the real world.

The Ultimate Goal: Self-Improvement Flows

While we’ve talked a lot about how observability helps you catch problems, it’s also about systematically making your agents better overtime. With structured traces and evaluations in place, you can spot patterns in failures and identify which prompts or tool strategies consistently perform best.

Start by reviewing outlier traces and low-scoring evals to pinpoint where your agent is struggling the most. Dig into why behind these failures: Is it poor retrieval that return irrelevant chinks? Is a brittle prompt confusing your LLM? These are the insights you can leverage to refine prompts, adjust tool call logic, and improve your data pipelines where it matters most.

Once improvements are made, rerun evals across your historical data to measure the true impact of your changes across your real workload. This confirms progress and avoids regressions that slip past manual checks.

Improvement flows can also include automation. With automated prompt optimization, you can auto-generate and test new prompt versions using your labeled datasets and feedback loops. Instead of endless trial-and-error, you can let your prompts evolve as your use cases and data change.

Self-improving agents take this a step further. By feeding low-scoring traces and failed evals back into your pipelines, your agents can learn from their mistakes. Instead of staying static, your agents become living systems that adapt, ensuring they stay sharp and aligned with your users as they scale.