MCP Tracing

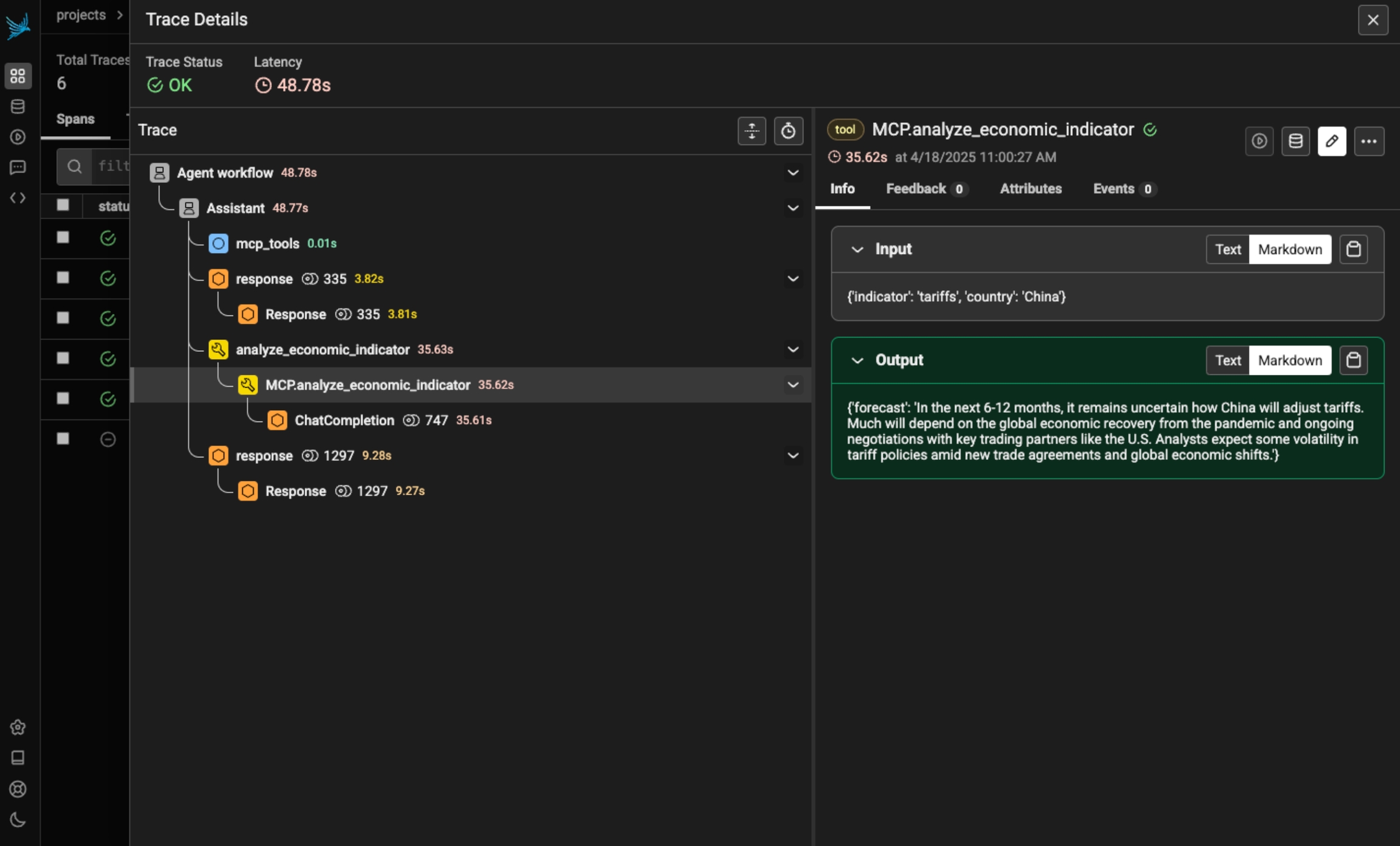

Phoenix provides tracing for MCP clients and servers through OpenInference. This includes the ability to trace client-to-server interactions under a single trace, with spans nested in the correct hierarchy.

The openinference-instrumentation-mcp instrumentor differs from other OpenInference instrumentors: it does not generate any telemetry of its own. Instead, it enables context propagation between MCP clients and servers so that their traces are unified. You still need to generate OpenTelemetry traces in both the client and the server to see a unified trace.
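To make the idea of context propagation concrete, here is a minimal sketch in plain Python. It is not the instrumentor's implementation: the `SpanContext` class, the `inject_context` and `extract_context` helpers, and the `request_meta` carrier are hypothetical names used only for illustration, and the header layout assumes the W3C Trace Context `traceparent` format.

```python
import secrets
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SpanContext:
    """Hypothetical stand-in for a span's identity."""
    trace_id: str  # 32 hex characters
    span_id: str   # 16 hex characters


def new_span(parent: Optional[SpanContext] = None) -> SpanContext:
    """Start a span: reuse the parent's trace ID, or begin a new trace."""
    trace_id = parent.trace_id if parent else secrets.token_hex(16)
    return SpanContext(trace_id=trace_id, span_id=secrets.token_hex(8))


def inject_context(ctx: SpanContext, carrier: dict) -> None:
    """Client side: write the active span into the outgoing request metadata."""
    carrier["traceparent"] = f"00-{ctx.trace_id}-{ctx.span_id}-01"


def extract_context(carrier: dict) -> Optional[SpanContext]:
    """Server side: read the caller's span from the incoming request metadata."""
    header = carrier.get("traceparent")
    if header is None:
        return None
    parts = header.split("-")
    if len(parts) != 4:
        return None
    _version, trace_id, span_id, _flags = parts
    return SpanContext(trace_id=trace_id, span_id=span_id)


# Client: a span produced by some other instrumentor (for example, an agent run).
client_span = new_span()
request_meta: dict = {}
inject_context(client_span, request_meta)

# Server: without the propagated context, this span would start a separate trace.
parent = extract_context(request_meta)
server_span = new_span(parent)

print(server_span.trace_id == client_span.trace_id)
```

The sketch only carries identifiers across the boundary. The spans themselves still have to come from other instrumentation on each side, which is why the MCP instrumentor alone produces no traces.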

Install

    pip install openinference-instrumentation-mcp

Because the MCP instrumentor does not generate its own telemetry, you must use it alongside other instrumentation code to see traces.
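As a quick sanity check, the sketch below lists the OpenInference instrumentor packages installed in the current environment, other than the MCP one. It uses only the standard library. The helper names are hypothetical, and the `openinference-instrumentation-` prefix is assumed from the package names used on this page.

```python
from importlib import metadata

PREFIX = "openinference-instrumentation-"
MCP_PACKAGE = "openinference-instrumentation-mcp"


def span_producing_instrumentors(installed_names):
    """Return OpenInference instrumentor packages other than the MCP one."""
    # Rough name normalization: lowercase, underscores to hyphens.
    normalized = {name.lower().replace("_", "-") for name in installed_names if name}
    return sorted(n for n in normalized if n.startswith(PREFIX) and n != MCP_PACKAGE)


def installed_distribution_names():
    """Collect the names of all distributions visible to this interpreter."""
    return [dist.metadata.get("Name") for dist in metadata.distributions()]


found = span_producing_instrumentors(installed_distribution_names())
if found:
    print("Instrumentors that can generate spans:", ", ".join(found))
else:
    print("No other OpenInference instrumentor found; MCP context propagation "
          "alone will not produce traces.")
```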

The example code below uses OpenAI agents, which you can instrument using:

    pip install openinference-instrumentation-openai-agents

Add Tracing to your MCP Client

    import asyncio

    from agents import Agent, Runner
    from agents.mcp import MCPServer, MCPServerStdio
    from dotenv import load_dotenv
    from phoenix.otel import register

    load_dotenv()

    # Connect to your Phoenix instance. auto_instrument=True enables the
    # OpenInference instrumentors installed in this environment.
    tracer_provider = register(auto_instrument=True)


    async def run(mcp_server: MCPServer):
        agent = Agent(
            name="Assistant",
            instructions="Use the tools to answer the user's question.",
            mcp_servers=[mcp_server],
        )

        while True:
            message = input("\n\nEnter your question (or 'exit' to quit): ")
            # Accept either "exit" or "q" to leave the loop.
            if message.lower() in ("exit", "q"):
                break
            print(f"\n\nRunning: {message}")
            result = await Runner.run(starting_agent=agent, input=message)
            print(result.final_output)


    async def main():
        # Launch the MCP server as a subprocess and talk to it over stdio.
        async with MCPServerStdio(
            name="Financial Analysis Server",
            params={
                "command": "fastmcp",
                "args": ["run", "./server.py"],
            },
            client_session_timeout_seconds=30,
        ) as server:
            await run(server)


    if __name__ == "__main__":
        asyncio.run(main())

Add Tracing to your MCP Server

Observe

Now that you have tracing set up, every invocation of your client and server is streamed to Phoenix for observability and evaluation, and the client and server spans are connected in the platform.

