Five Unexpected Ways To Use ML Observability

This blog is written in partnership with Dan Schonfeld, Data Science Manager at eBay

Taking a machine learning (ML) model from research to production and maintaining it once live is a difficult endeavor. Done well, it takes a village of people and purpose-built tools. ML observability is emerging as an indispensable part of that MLOps toolchain, helping teams automatically surface and resolve model performance problems before they negatively impact business results.

Of course, there is more to ML observability than setting up monitors and troubleshooting model performance. For those who want to skip the basics and move ahead to the master class, here are five underutilized or unexpected ways you can leverage ML observability to make your production workflow more efficient.

Retraining Workflows

Chip Huyen, Co-Founder of Claypot AI and author of O’Reilly’s Designing Machine Learning Systems, recently lamented how often model deployment devolves into a one-off process at companies:

I was at a roundtable with the heads of ML at some pretty big companies that I consider to be progressive when it comes to new technology. It was a very interesting discussion, and I’m grateful to be invited. But one question stood out to me…One executive asked: ‘How do we know if the model development is done and it’s time to deploy the model?’…I asked: ‘Wait a second. How often do you retrain your model?’ People laughed. One said 6 months. Another said a year. Another said never. This schedule turns model deployment into a one-off process. After a model is deployed, it can’t be changed or updated. Bugs can’t be fixed. But deployment isn’t a one-off process. It’s a continual process…The question shouldn’t be how to get to the perfect model for the next 6 months, but should be how to build a pipeline to update and deploy a model as fast as possible.

It is widely known that retraining ML models – especially large computer vision (CV) or natural language processing (NLP) models – is computationally expensive and time consuming. Since no one knows exactly how a retrained model will act once redeployed, there is also uncertainty at the end of the process. Will the model have new data quality issues? Will a new bias be introduced, with the model suddenly making worse decisions for a marginalized group or protected class? Or will there be unintended consequences from retraining that no one could have predicted?

Here’s the rub: you need to retrain models to keep them relevant, but you also don’t want to retrain more than necessary. So how do you find the sweet spot to maximize model performance? The answer is ML observability.

For standalone ML models, a ML observability platform can enable you to set drift monitors. By tracking prediction drift, concept drift and data/feature drift, you can know immediately if a model is drifting due to changes between the current and reference distributions. One example is when a data input or slice exists in your production environment but not in your training data. In these cases, you can promptly take down your current model when there is an issue, put a challenger model in place, and then retrain on the new inference data.

But what about large unstructured models that could impact multiple ML teams and millions of customers? Most teams opt to retrain on a recurring time period rather than on the basis of needs. The problem with retraining at a regular cadence is that you are either training more than necessary to increase performance, or you aren’t training frequently enough to catch errors. If you retrain your model every six months, the model has likely experienced both circumstances.

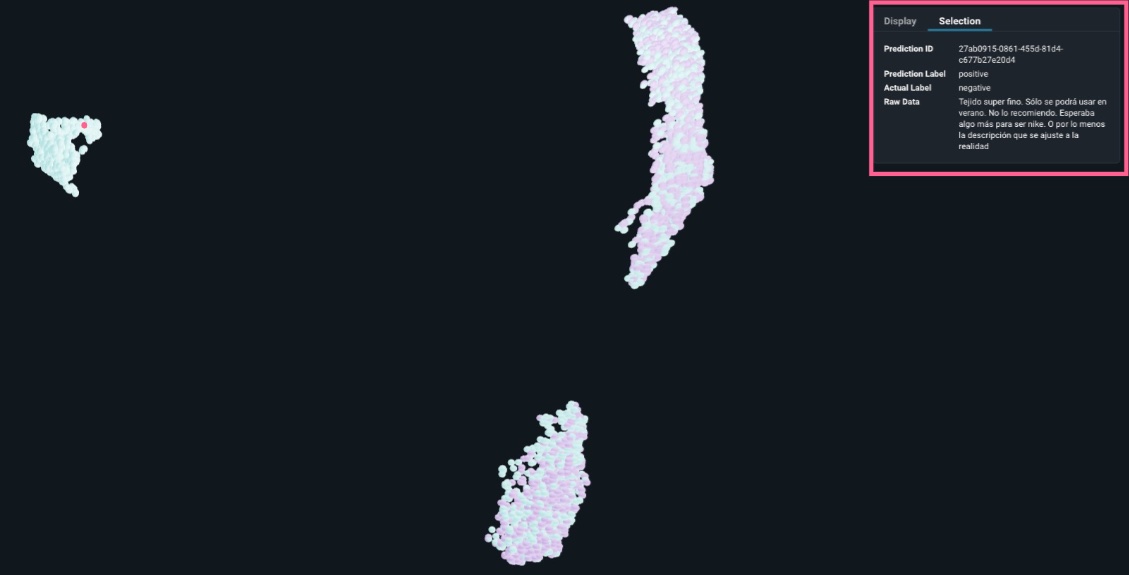

Thanks to emerging techniques around monitoring unstructured data, there is a better way. In unstructured use cases embeddings can represent text, images, and audio signals. Now, ML teams can track their embeddings through a latent space over time to see the evolution of their data and identify new patterns. With embedding drift monitors, teams can gather the evidence necessary for when and where they need to retrain their CV and NLP models and export problematic cohorts that need to be labeled. This is especially important for large organizations where retraining a model can impact multiple teams.

Model Version Control

One other way an ML observability platform can be valuable is in providing side-by-side analysis of how each version of a model is performing. Even if you employ an experimentation platform during the model building and testing phases, the ability to see how different model versions perform in the real world is the ultimate evaluation of the efficacy of your optimizations and retraining efforts. This is especially advantageous with shadow or canary deploys, in which you are gauging performance of a model version on a subset of production data before full deployment. Ultimately, fast evaluation of challenger models can enable teams to be more nimble in a changing world. And because new model versions often emerge to service a new need or improvement — such as expanding a delivery service to new neighborhoods — the ability to compare performance against specific cohorts of predictions and features will usually help yield more fruitful analysis. The comparison of multiple data environments (training, validation and production) should be multidimensional, meaning accompanied by filters on features, metadata, predictions and your ground truths for each version, to obtain a concrete evaluation.

Deprecating Models

In programming, deprecation is the process of marking older code as no longer useful in the codebase – usually making the way for new code to replace it. To prevent regression errors, deprecated code is not immediately removed.

For ML teams, a deprecated model means that the model is going to be replaced – or at least not officially recommended until it is completely removed. This process takes time since there are parts of the ML pipeline which may depend on an output from the model targeted for deprecation. Since ML observability monitors the inferences of production models, ML teams can leverage ML observability to track traffic flowing to each and every model in production to answer fundamental questions. Why is a deprecated model having an increased traffic flow? Should we deprecate this old model with hardly any traffic flow? Is the deprecated model having a decrease in traffic as we onboard its successor? Knowing the answers to these questions is crucial for maintaining a reliable ML environment in production.

Fairness and Bias

Fairness checks and bias tracing in production have become a priority for ML teams in recent years due to the continuous deployment of complex models that have a direct impact on the customers’ experience. In order to accelerate this process of model transparency, teams leverage model explainability techniques to inform them of the most important and least important features that lead to model decisions. However, it is worth noting that explainability, while a useful technique in the ML toolchain, is not a catch-all; model explainability can’t tell you if model decisions are correct. Model explainability techniques like SHAP (Shapley Additive Explanations) also can’t tell you if your model is making responsible decisions, or if those decisions are eliciting bias. For determining whether or not models in production are exhibiting algorithmic bias, teams need robust fairness checks in production.

ML systems develop biases even when a protected class or variable is absent. In order for teams to be accountable for ML decisions, fairness checks in production are critical. ML observability tools can be useful in monitoring fairness metrics (i.e. disparate impact) over time to determine if a model is acting in a discriminatory way. Narrowing in on windows of time where a model may have expressed bias can help in determining the root causes, whether it’s inadequate representation of a sensitive group in the data or a model demonstrating algorithmic bias despite adequate volume of that sensitive group.

Responsible AI requires leveraging a ML observability platform where ML teams can drill down to the feature-value combinations to determine which model inputs are most heavily contributing to a model’s bias. In practice, this technique of bias tracing can be the difference in unearthing a blindspot around a model perpetuating discrimination in the real world.

Data Labeling

ML Observability for data labeling? Yes, data labeling. First, a bit of context: according to multiple analyst estimates, “a majority of data (80% to 90%)…is unstructured information like text, video, audio, web server logs, social media, and more.” Due to the expense required to hire and manage human data labeling teams, most deep learning models are trained on a small sample of data (typically less than 1% of data collected). When these models are put into production, new patterns often emerge that were not present in the training data.

Detecting these changes is easier said than done. When training a model, ML teams are typically using a fixed dataset and are able to figure out what to label next manually. In production, however, a constant stream of data and potentially billions of inferences will test any team’s ability to manually label data to keep up with new patterns or data issues.

ML observability can help. By monitoring the embeddings generated by their deep learning models, ML teams can learn patterns of change and curate the data to figure out what to label next. By visualizing high dimensional data in a low dimensional space, teams can identify new patterns and then export problematic segments for high-value labeling. For example, a team might discover that their NLP sentiment classification model is encountering product reviews in Spanish for the first time – and export, label, and retrain accordingly.

Conclusion

Compared to DevOps or data engineering, MLOps is still relatively young as a practice despite tremendous growth. By implementing ML observability best practices and leveraging them to the fullest extent beyond mere model performance management, ML leaders can ensure they have a solid foundation for future success.