AI Research Papers

Dive into the latest AI and agent engineering research papers. Sign up to join our paper readings and author office hours, or learn from prior readings on demand.

Explore More AI Research

Stay up to date with the latest breakthroughs in AI.

Deploying IBM Generalist Agent in Enterprise Production

IBM researchers discuss Computer Using Generalist Agent (CUGA), which has been open-sourced for the community.

Read full paper

TUMIX: Multi-Agent Test-Time Scaling with Tool-Use Mixture

Google and MIT researcher covers TUMIX; achieves significant gains over SOTA tool-augmented and test-time scaling methods

Read full paper

ARE: scaling up agent environments and evaluations

Meta AI Researcher Explains ARE and Gaia2: Scaling Up Agent Environments and Evaluations

Read full paper

AgentArch: Benchmarking AI Agents for Enterprise Workflows

ServiceNow’s Tara Bogavelli on AgentArch: Benchmarking AI Agents for Enterprise Workflows

Read full paper

Small Language Models are the Future of Agentic AI

NVIDIA’s Peter Belcak distills why small language models are the future of agentic AI.

Read full paper

Verizon’s Stan Miasnikov Walks Through His Latest Paper On Inter-Agent Communication

Presents an extension of a recursive consciousness framework to analyze communication between agents and the inevitable loss of meaning in translation

Read full paper

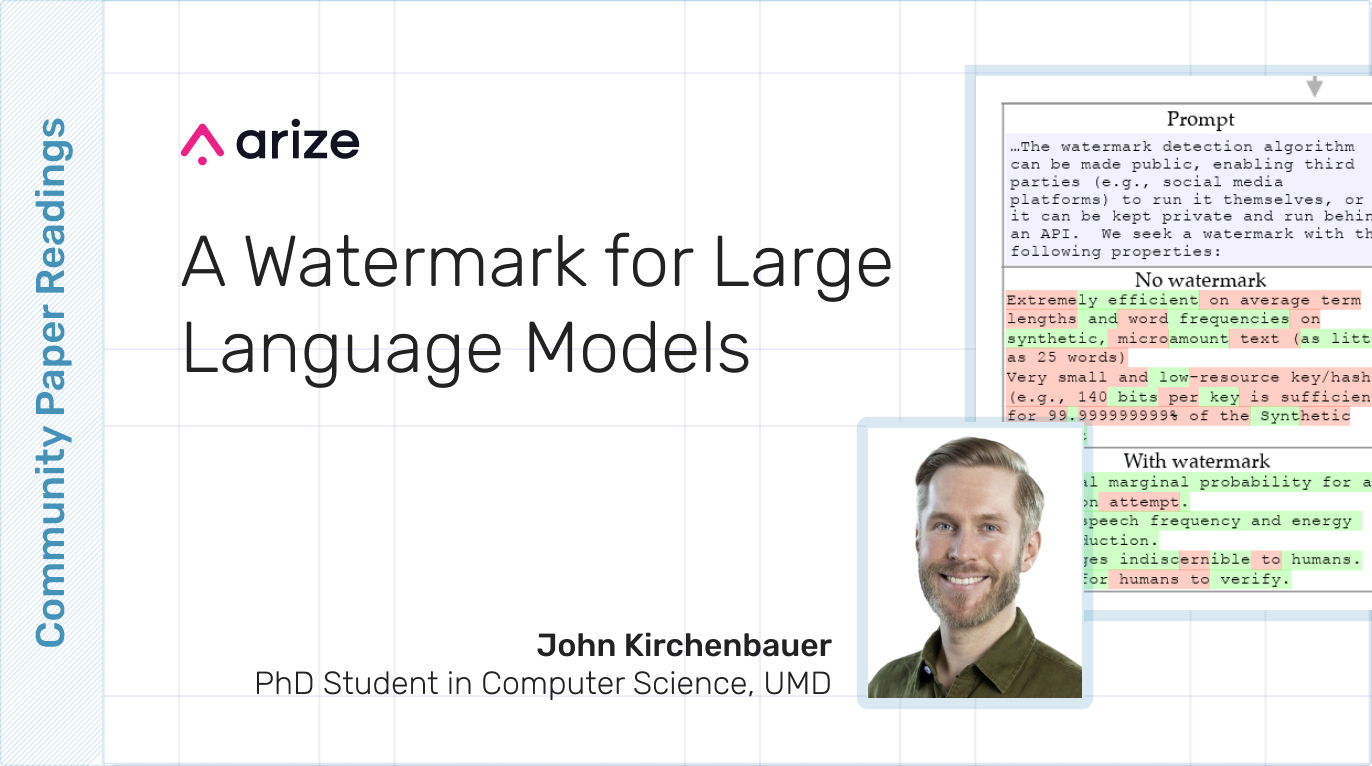

A Watermark for Large Language Models

Proposed LLM watermark that can be embedded with negligible impact on text quality.

Read full paper

Self-Adapting Language Models

A novel method for enabling LLMs to adapt their own weights using self-generated data and training directives

Read full paper

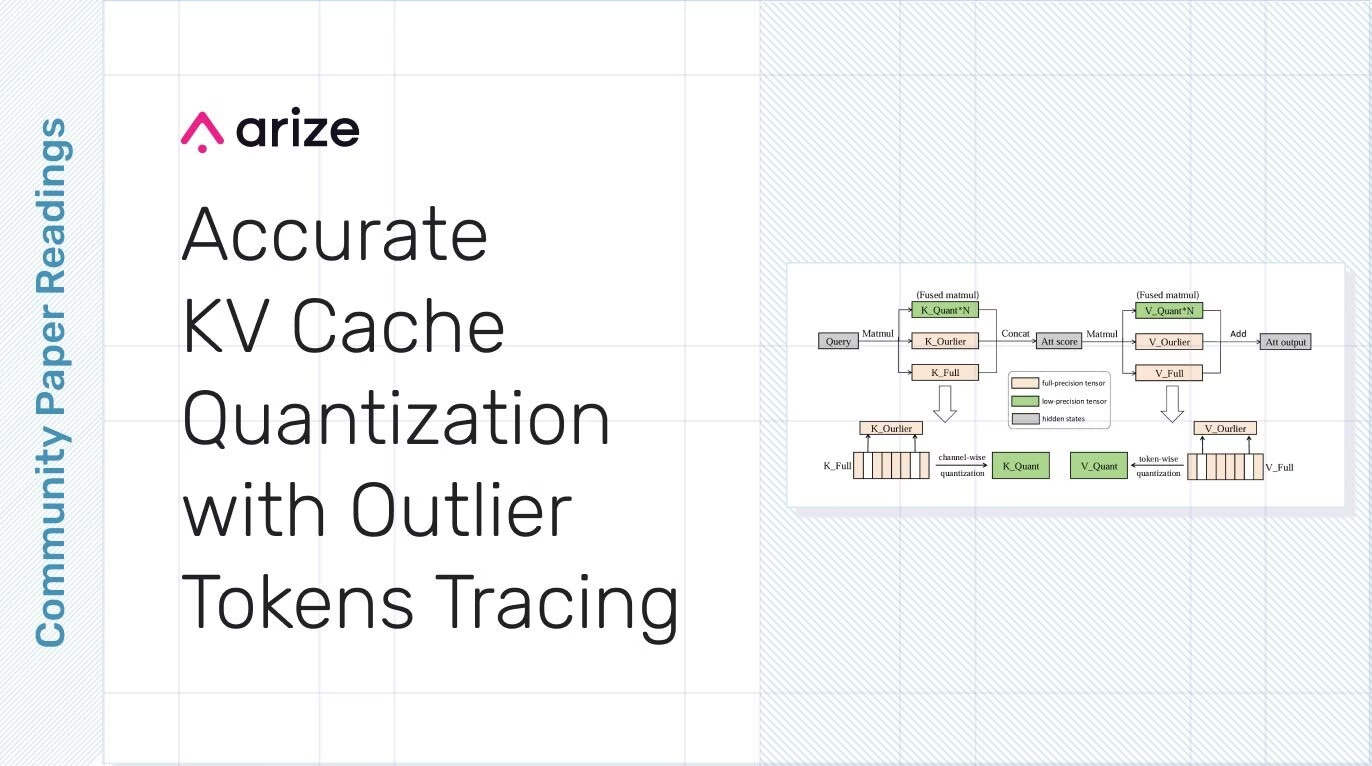

Accurate KV Cache Quantization with Outlier Tokens Tracing

Researchers propose a smarter way to compress the KV Cache while preserving model quality.

Read full paper

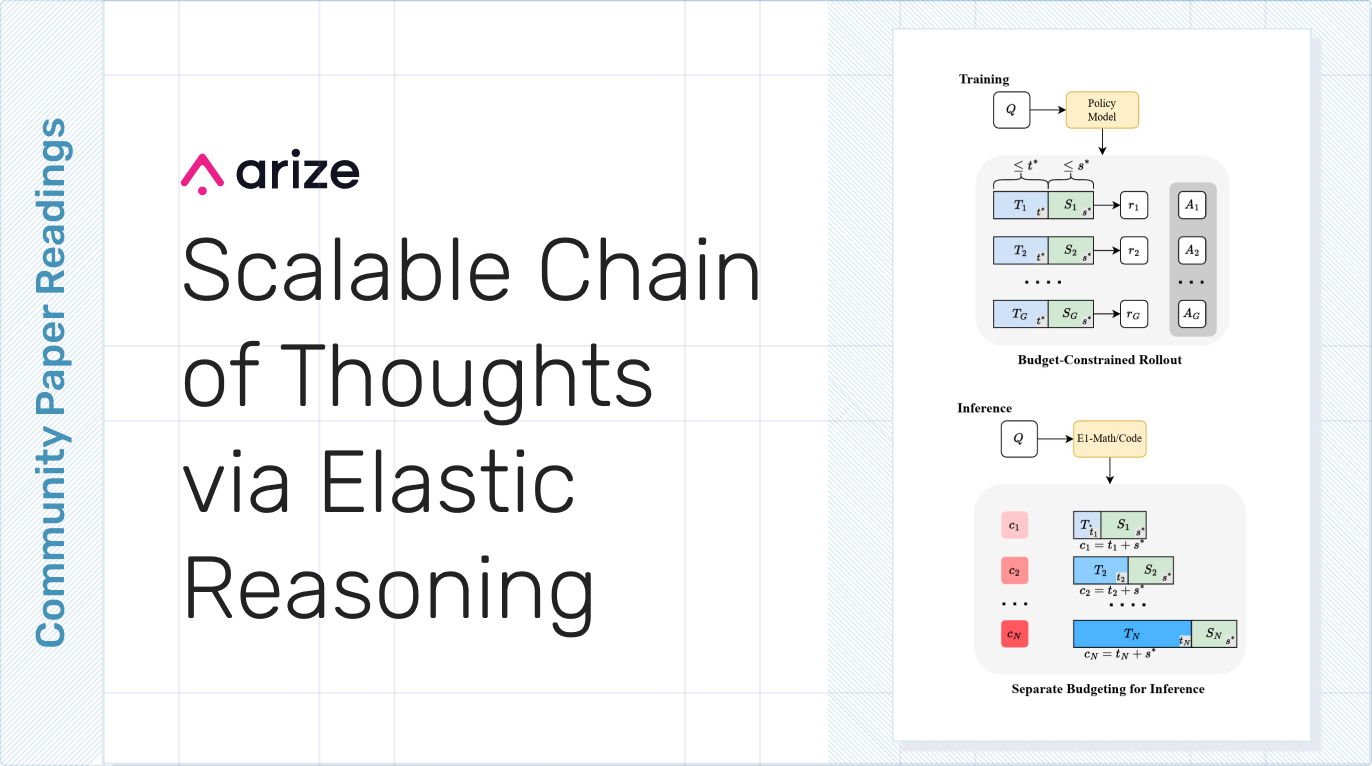

Scalable Chain of Thoughts via Elastic Reasoning

Elastic Reasoning, a novel framework designed to enhance the efficiency and scalability of large reasoning models (LRMs) by explicitly separating the reasoning process into two distinct phases: thinking and solution.

Read full paper

Sleep-time Compute: Beyond Inference Scaling at Test-time

We recently discussed “Sleep Time Compute: Beyond Inference Scaling at Test Time,” new research from the team at Letta.

Read full paper

Merge, Ensemble, and Cooperate! A Survey on Collaborative LLM Strategies

LLMs have revolutionized natural language processing, showcasing remarkable versatility and capabilities. But individual LLMs often exhibit distinct strengths and weaknesses, influenced by differences in their training corpora. This diversity poses a challenge: how can we maximize the efficiency and utility of large language models?

Read full paper

Agent-as-a-Judge: Evaluate Agents with Agents

This week we dive into a paper that presents the “Agent-as-a-Judge” framework, a new paradigm for evaluating agent systems.

Read full paper

Introduction to OpenAI’s Realtime API

We break down OpenAI’s realtime API. Sally-Ann DeLucia and Aparna Dhinakaran cover how to seamlessly integrate powerful language models into your applications for instant, context-aware responses that drive user engagement.

Read full paper

Model Context Protocol (MCP) from Anthropic

Want to learn more about Anthropic’s groundbreaking Model Context Protocol (MCP)? We break down how this open standard is revolutionizing AI by enabling seamless integration between LLMs and external data sources, fundamentally transforming them into capable, context-aware agents.

Read full paper

How DeepSeek is Pushing the Boundaries of AI Development

How do you train an AI model to think more like a human? That’s the challenge DeepSeek is tackling with its latest models, which push the boundaries of reasoning and reinforcement learning.

Read full paper

Multiagent Finetuning: A Conversation with Researcher Yilun Du

This week we were excited to talk to Google DeepMind Senior Research Scientist (and incoming Assistant Professor at Harvard), Yilun Du, about his latest paper “Multiagent Finetuning: Self Improvement with Diverse Reasoning Chains.”

Read full paper

Swarm: OpenAI’s Experimental Approach to Multi-Agent Systems

As multi-agent systems grow in importance for fields ranging from customer support to autonomous decision-making, OpenAI has introduced Swarm, an experimental framework that simplifies the process of building and managing these systems.

Read full paper

Breaking Down Reflection Tuning: Enhancing LLM Performance with Self-Learning

A recent announcement on X boasted a tuned model with pretty outstanding performance, and claimed these results were achieved through reflection tuning.

Read full paperTop AI research papers

| Source | Description | ||

|---|---|---|---|

| Source | Why Language Models Hallucinate | Description |

Shows the math and evaluation choices that underlie LLM hallucinations. |

| Source | ARE and Gaia2 | Description |

Gaia2 is a new AI agent benchmark that checks write actions — the ones that modify the world (like sending an email) — and don’t explicitly verify pure reads. |