ML Service-Level Performance Monitoring: the Essentials

In order for an ML system to be successful, it isn’t sufficient to just understand the data going in and out of the ML system or monitor its performance over time. When viewed as an overall service, the ML application also has to be measured by its overall service performance.

This post covers the sometimes overlooked field of service-level ML performance, dissecting how it can be measured and improved.

Service Latency vs Inference Latency In ML

ML Service latency is the time it takes to load the model into memory, gather the requisite data, and compute the features the model needs to make its prediction. Service performance also includes the time it takes for the user to be made aware of the decision that the model has made.

ML Inference Latency is the time it takes for your model to make its prediction once it is fed its input.

In a real-time system, both of these metrics contribute to the user-perceived latencies of your application. As a result, it’s important not just to monitor these service-level performance metrics, but also make real progress in reducing these latencies in your application.

Let’s start by taking a look at what you might want to monitor and improve upon to hone in on making your service more performant.

Optimizing ML Service Latency

Input Feature Lookup

Before the model can even make a prediction, all of the input features must be gathered or computed by the service layer of the ML system. Some of the features will be passed in by the caller, while other features might be collected from a datastore or calculated in real-time.

For example, a model predicting the likelihood of a customer responding to an ad might take in the historical purchase information of this customer. The customer wouldn’t provide this when they view the page themselves, but the model service would query a data warehouse to fetch this information. Gathering input features can generally be classified into two groups:

- Static Features: Features that are less likely to change quickly and can be stored or calculated ahead of time. For example, a customer’s historical purchase patterns or preferences can be calculated ahead of time.

- Real-Time Calculated Features: Features that require being calculated over a dynamic time window. For example, when predicting ETAs (estimated time of arrival) for food delivery, you might need to know how many other orders have been made in the last hour.

In practice, a model typically uses a mix of static and real-time calculated features. Monitoring the lookup and transformations needed for each feature is important to trace where the latency is coming from in the ML system. It’s important to remember that your service level performance in the input feature lookup stage is only as good as your slowest feature.

Pre-Computing Predictions

In some use cases, it is possible to reduce prediction latency by precomputing predictions, storing them, and serving them using a low-latency read datastore. For example, a streaming service might compute and store ahead of time the recommendations for a new user of their service.

This type of offline batch-scoring job can vastly reduce latencies in the serving environment because the brunt of the work has been done before the model has even been called.

For example, recommendation systems used by a streaming service like Netflix can pre-compute the movies or TV shows that you are likely to enjoy when you are not using the service so that the next time you login you are quickly greeted with some personalized content without the long loading screen.

Optimizing ML Inference Latency

Reduce Complexity

Now that we have looked at service performance, let’s turn our attention to how you might monitor and improve your inference latency.

One approach to optimize model prediction latency is to reduce the complexity of the model. Some examples of reducing complexity could be reducing the number of layers in a neural network, reducing levels in decision trees, or pruning any irrelevant or unused part of a model.

In some cases, this might be a direct tradeoff to the model efficacy. For example, if there are more levels in a decision tree, there are more complex relationships that can be captured from the data and therefore increase the overall effectiveness of the model. However, fewer levels in a decision tree can reduce prediction latency.

Balancing the efficacy of the model (accuracy, precision, AUC, etc.) with its required operational constraints is important to strive for any model to be deployed. This becomes especially relevant for models that are embedded on more constrained mobile devices.

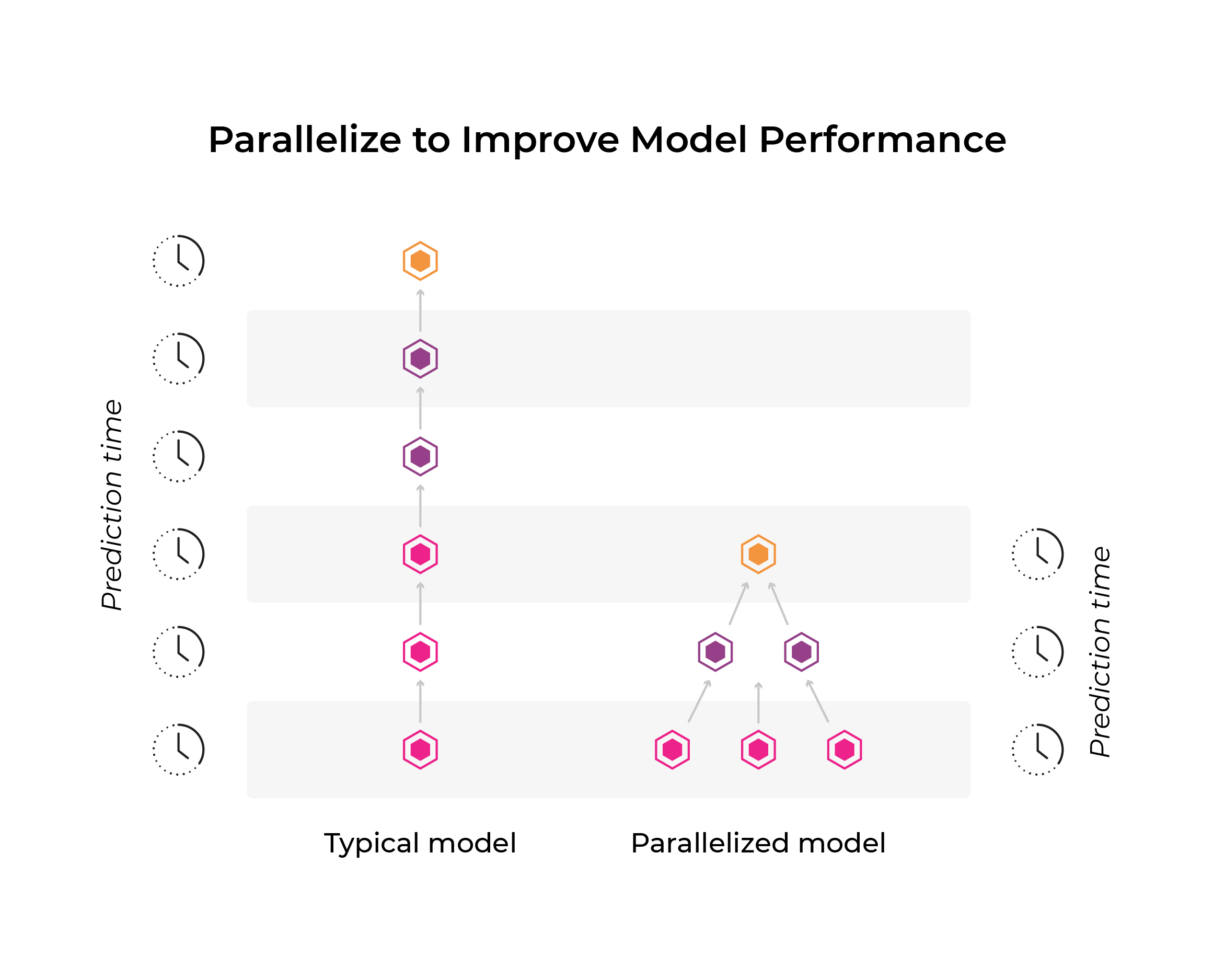

Parallelization

Aside from reducing the complexity of the model, something you can do to improve your model performance in production is to re-architect your model to be more parallelizable. If a part of your model doesn’t depend on the output of another part of your model, why not run both of these sections at the same time.

The cloud-ML industry is moving to highly scalable on-demand clouds that allow you to leverage football fields of specialized computers to run your model on. In a similar vein, mobile processors are dedicating significant portions of their chips to machine learning accelerators, which allow developers to exploit the parallel nature of their model inference pipelines.

If you have the ability to throw more cores at the problem at prediction time, you can leverage a parallel nature of your model to speed up your prediction time. You may be leaving performance on the table if you don’t look into how your model can be reimagined in a more parallel way.

Takeaway

While traditional ML performance monitoring is of the utmost importance for measuring and improving your application of machine learning, it doesn’t capture the full picture of how a user is experiencing your application. Service level performance metrics matter, and a change that increases your accuracy by 1 percent that causes a 500 millisecond regression might not be worth it for your use case!

If you don’t see the trade-offs you are making with every change you make to your system, you are going to slowly bury your model in a pile of small performance regressions that add up to a slow, unwieldy product. Have no fear: there are a number of techniques to diagnose performance issues and ultimately improve your model’s service-level performance, but first you have to start paying attention to the milliseconds.