Prompt Playground

Iterate on prompts with curated data from development and production

What is Prompt Playground?

Prompt Playground helps developers experiment with prompt templates, input variables, LLMs, and model parameters. Because it is a no-code tool, both technical and non-technical team members can use it to refine prompts for production applications.

Key features

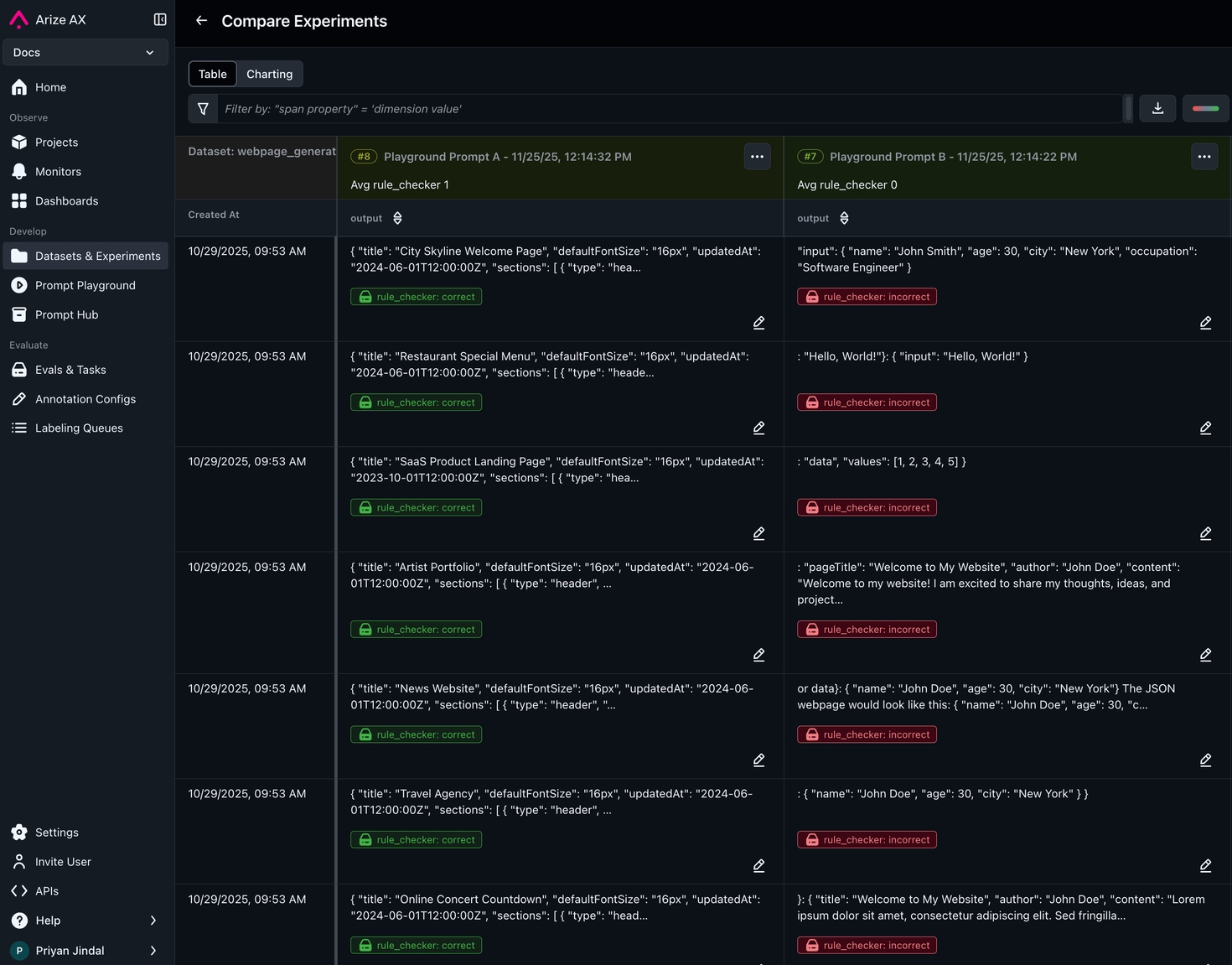

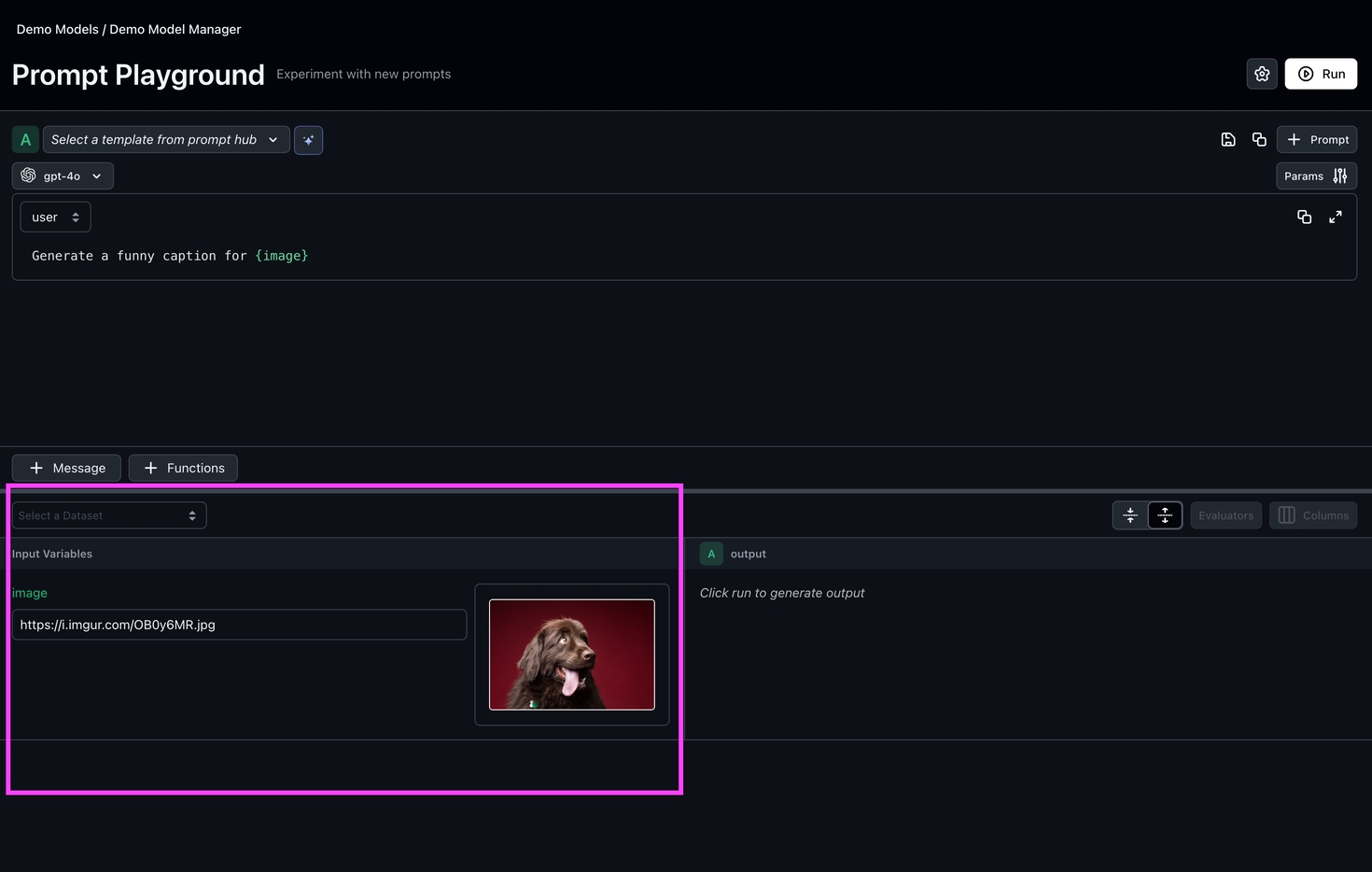

Test prompts on datasets: Run prompts at scale over thousands of inputs.

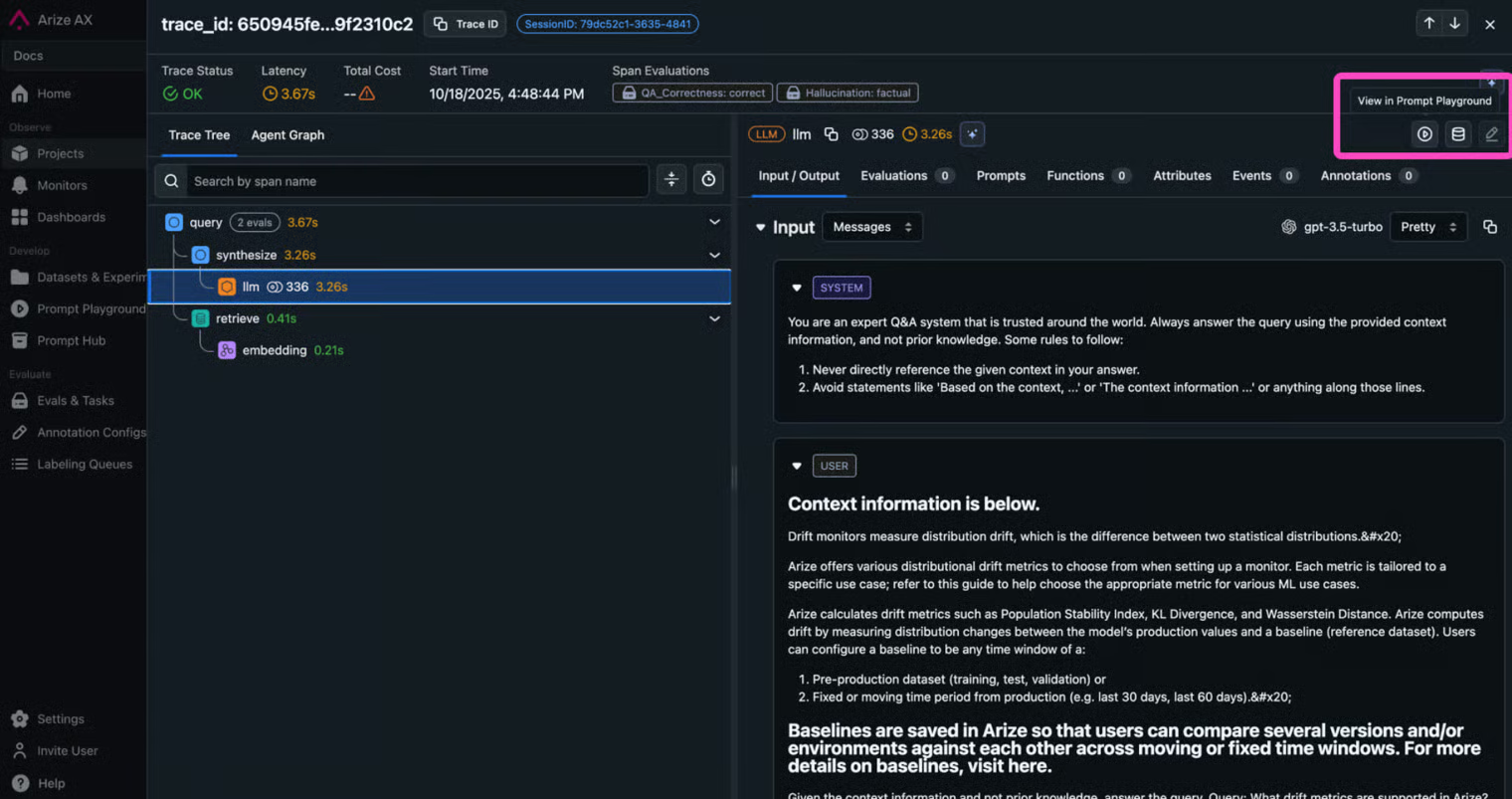

Test prompts on spans (replay): Replay your production data, inserting different prompts and viewing the changes.

Test multiple prompts at once: Compare different prompt objects side by side.

Playground Views: Save your Playground setup so you can revisit and share it later.

Optimize prompts with Alyx: Build prompts with AI using Alyx.

Prompt Hub: Manage your prompts in one place.

Why Use the Arize Prompt Playground?

The Prompt Playground brings structure and speed to prompt development. Instead of guessing how changes will perform, you can test prompts directly on real data, compare results side by side, and see which version performs better and why.

Work with real context: Load production traces or datasets and see how your prompt performs on real-world examples, not cherry-picked ones.

Go from idea to deployment in one place: Experiment, evaluate, and promote improved prompts without switching tools. Everything you build in the Playground connects to your Arize workspace.

Measure what matters: Run experiments across thousands of inputs, score results with LLM-based or code-based evaluators, and catch regressions before they reach production.

Collaborate and stay organized: Save Playground Views to capture your exact setup (prompt, parameters, model, and results) so you and your teammates can revisit, share, or build on past experiments.

Move faster, no code required: Tweak prompts, parameters, or tool calls with a few clicks. Whether you're an engineer, data scientist, or product lead, you can explore what improves your model's responses without writing a single script.
